The Challenge of AI False Positives

Greetings everyone! Hope you're all doing well. I've been bothered by something for a few days and I'm here to talk about it. I know this is going to stir up controversy just like my last post did on the Steem Watcher community about two months ago. Although I really wanted to post this in the Steem Watcher community again, due to some reasons, I'm sharing it in a different community this time. I hope it sparks a healthy discussion, maybe even leading to a solution, or at least raises some awareness among the moderators about this issue.

Ever since the advertising of ChatGPT and other large language models (LLMs) on social media started, I think hardly anyone connected to the writing profession is unaware of them. Ethically used, these LLMs can greatly assist you and help present your work more effectively. However, today, I'm not here to discuss the 'use vs. abuse' of LLMs—that's a topic for another day.

My focus today is on the reliability of the different tools we use to detect AI-generated content. How do we identify false positives? Should moderators rely solely on detector results to make their final judgment, or should posts be manually analyzed as well?

Reliability of AI-detectors

As AI tools advance, so do the AI detectors, becoming increasingly strict. Some are now so sensitive that they often yield false positives. As a community moderator, I've observed these myself, and I was recently a victim of one in another community. For those unfamiliar with the term, in the context of AI detectors a false positive occurs when the detector labels content you wrote yourself as AI-generated.

About 10 days ago, I wrote an article in the Steem Alliance community. A moderator flagged it as AI-generated and issued me a warning without attaching any screenshots or other proof to support the claim. This was frustrating because the article was my own work. I then scanned my article with the four detectors I use for moderating posts in my community; all of them declared it human-written. I commented asking for justification but received no response from the moderator.

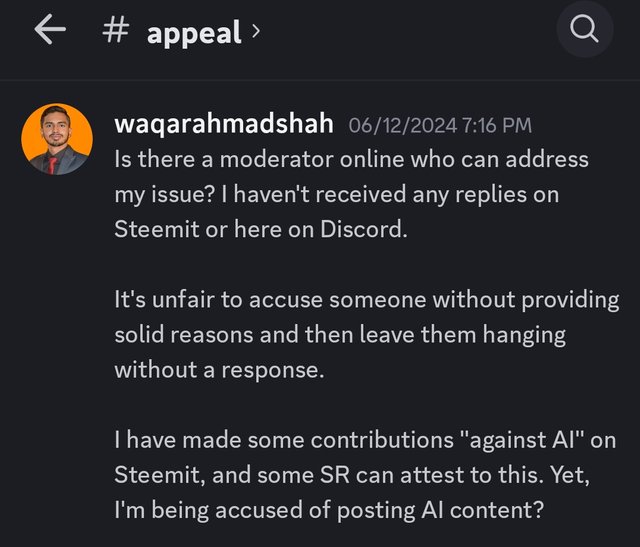

Later, I sought justification on the Steem Alliance community's Discord server in the appeal section and was told the moderator was unavailable due to his exams. This led to some heated arguments, and eventually, I had to seek help from a reputed friend who spoke to the Steem Alliance admin to help resolve my case.

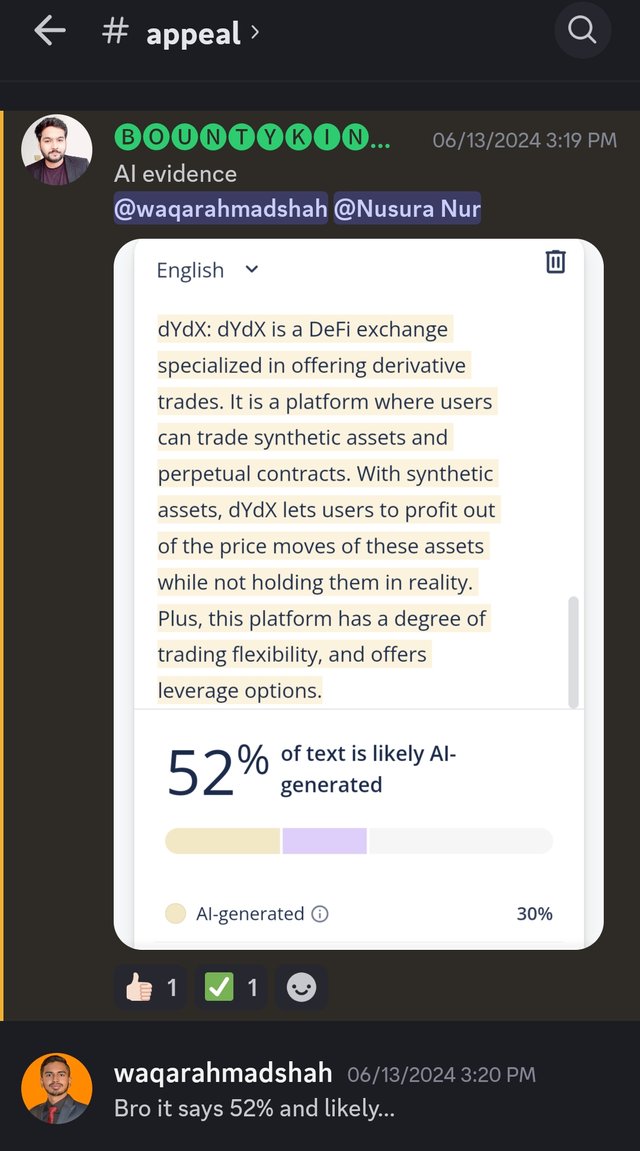

After about 24 hours, they finally sent me a screenshot as 'AI evidence,' which you can see below.

Unfortunately, they did not share their AI detection method with me. I urged them to manually analyze the post and not to declare content as AI-based solely on one detector's result. They still didn't acknowledge their mod's mistake, and eventually, they told me no more discussion was needed on this topic. This is why I decided to leave it there and resolved not to post in that community for now because I can't explain to everyone that my content is my own.

Another interesting point is that some communities have moderators who aren't very familiar with the English language; they mostly use translators. If these moderators judge your content as AI-generated just by looking at the tool's percentage, how can you convince them it's your own work? It would be like casting pearls before swine.

Now about false positives. I've observed several posts recently where more than 40% of the content was shown as AI-generated in ZeroGPT, but when you read the post or check the author's history, it's clear the content is originally human-written. Moderators who rely solely on tools find it hard to understand this.

Example of a False Positive

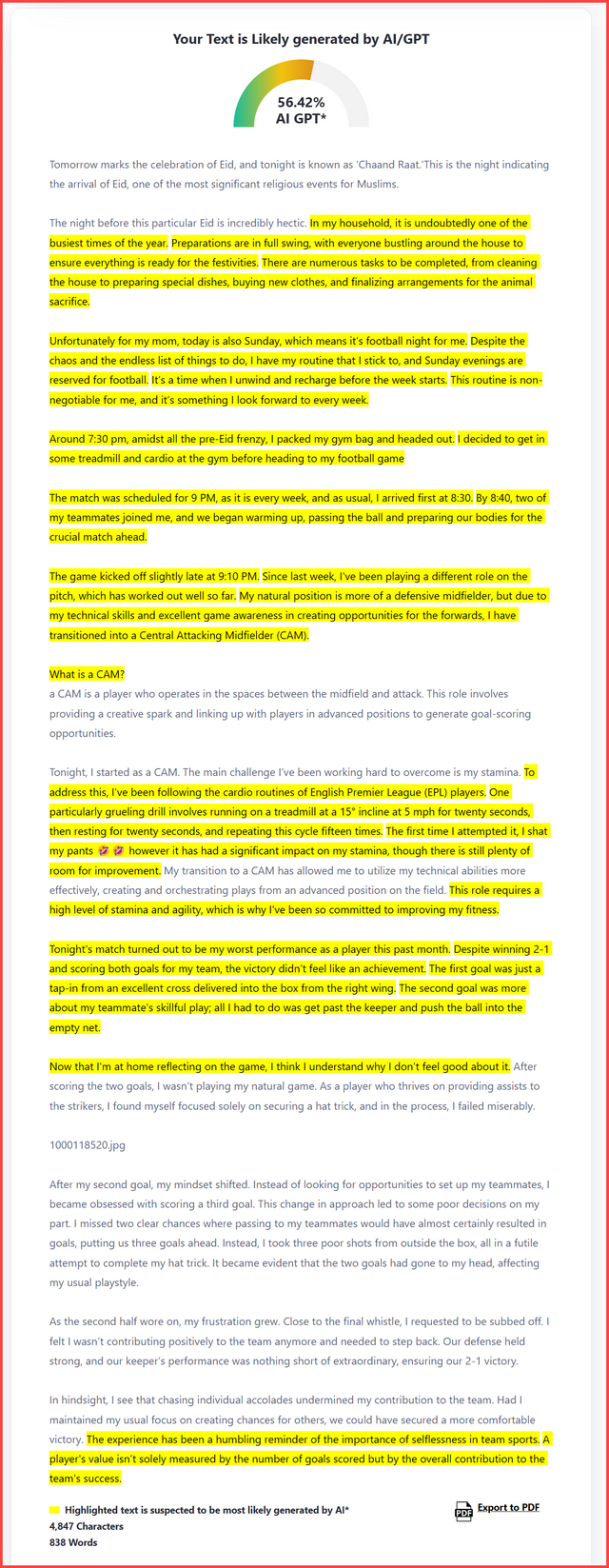

Let me give you an example of a false positive. Many of you might know @huzaifanaveed1. I enjoy reading his articles and always try to learn something from them. He's been an inspiration and has been writing since before ChatGPT was even released. He even won the 2021 Steemit best author award. There's no question of his content being AI-generated, but if you ask the detectors, they might declare the following post as AI-generated, which is ridiculous and clear proof that these detectors are not reliable. So I'll say it again: you must manually analyze a post before making any final judgment.

My AI-content Detection Method

Here's how I handle AI-content detection. First, I scan the post with ZeroGPT. If the AI percentage is over 70%, I then run it through Copyleaks, GPTZero, and Content at Scale, in that order. If at least two of these four detectors declare it human-written, I take no action. But if three or more declare it AI-generated, I manually analyze the post myself. If it feels robotic and lacks a human touch, I declare it AI-generated; otherwise, I do not.
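For anyone who wants to follow the same steps consistently, here is a minimal Python sketch of that triage. It is only an illustration under a few assumptions: the function name `triage`, the constant names, and the idea of typing in each detector's verdict by hand are all hypothetical, and a ZeroGPT score over 70% is counted as one "AI" vote. It does not call any detector; it only encodes the decision rule, and the final call is still a human one.

```python
from typing import Mapping

# Assumed threshold from the method above: ZeroGPT AI percentage must be OVER 70.
ZEROGPT_THRESHOLD = 70.0
# Assumed vote count that sends a post to manual review (three of four detectors).
AI_VOTES_FOR_REVIEW = 3


def triage(zerogpt_pct: float, followup_says_ai: Mapping[str, bool]) -> str:
    """Return "no_action" or "manual_review" for one post.

    zerogpt_pct      -- AI percentage reported by ZeroGPT (0-100), entered by hand.
    followup_says_ai -- hypothetical mapping of detector name to True if that
                        detector called the post AI-generated, e.g. results read
                        off Copyleaks, GPTZero, and Content at Scale.
    """
    # Step 1: a ZeroGPT score at or below the threshold ends the process.
    if zerogpt_pct <= ZEROGPT_THRESHOLD:
        return "no_action"

    # Step 2: ZeroGPT counts as one AI vote (assumption), plus the follow-up scans.
    ai_votes = 1 + sum(1 for says_ai in followup_says_ai.values() if says_ai)

    # Step 3: only three or more AI votes justify a manual read of the post.
    if ai_votes >= AI_VOTES_FOR_REVIEW:
        return "manual_review"
    return "no_action"


if __name__ == "__main__":
    scans = {"Copyleaks": True, "GPTZero": True, "Content at Scale": False}
    print(triage(85.0, scans))
```

Note that the function never returns an "AI-generated" verdict. The strongest outcome is `manual_review`, because the decision after that point depends on a person reading the post.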

Reasons Behind False Positives

As per my observation, false positive results often occur when:

- You write perfect English without any grammatical mistakes.

- Your writing style is more academic or essay-like rather than casual or informal.

- You tend to write in a bookish style.

- Your writing happens to be very uniform or follows a robotic pattern.

- You scan fewer than 300 words.

- You use translation or grammar-checking tools that have AI integrated into them.

Final Thoughts

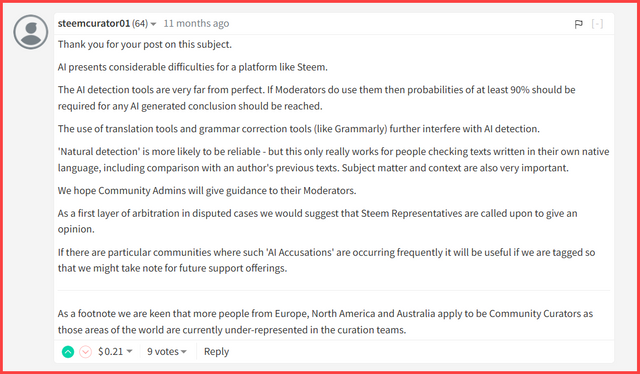

To sum up, AI-content detection remains a problem. If we follow the guidelines shared by SC01, we can avoid situations where innocent users are wrongly declared as using AI based solely on detector results.

Some AI detectors now even offer a premium plan that includes a "humanizer" feature that rewords AI content to evade detection.

Ultimately, it seems we'll always need to rely on manual detection, and this is only feasible if the person analyzing the post is an expert in the language, understands it well, and is familiar with concepts like language diction...

I think that this sentence encapsulates everything:

To me, AI generated articles (and comments) feel very different to human written. I can "feel" this in the writing style and this "feeling" could also be linked to some of the work I've done trying to "humanise" AI generated content. When you try to get AI to write like a human, you quickly discover its limitations - which can be overcome with a lot of effort - by which time it's quicker just to write it yourself anyway!

In many ways, it's no different to detecting plagiarism - where somebody has adapted content to avoid detection from tools. You know when the writing style's different and it amazes me how often somebody can't string a grammatically correct sentence together but can write a perfect Crypto article.

The best solution - only support articles that "feel" authentic and "must" be authentic. Opinion based, rather than a list of facts or trying to teach something. If somebody's trying to teach you something - whether it's "10 reasons that a dog shits outside" or "An Introduction to On-Chain Finance and its benefits" - you can be pretty certain that there's been no Proof of Brain involved.

TEAM 5

Hi, friend! We haven't had an AI debate for a long time ;-))

Yes, I'm happy to repeat my pleas: trust your reading instincts. Compare authors' texts with their other statements. Are they consistent?

There are a few telltale phrases in texts that are actually clear evidence that an AI was at work, such as openings like: "In the picturesque hills surrounding a dreamy little village a bright boy named Eric / Max / Tom /... grew up." "It's always important to note that there can also be negative influences when you..." "Everyone admired him for his wisdom and strength."

The AI makes some bold assumptions based on the processing of accessible social media data: People want to dream, people tend to think in black and white, and people like to admire and be admired. Well...

Unfortunately, that's the rubbish that AI gets to eat. So what do we expect it to spit out?

Worst of all, some of our writers are starting to model their writing style on that of the AI. Maybe it's better than their own. Who knows...

I sound nasty, I know. The fact is that the purely technical differentiation has never been possible beyond doubt and is becoming increasingly uncertain due to these effects. So: leave it alone!


A couple of days ago, one of my friends on Steemit sent me an article by a very well-reputed blogger on Steemit whom I have known since 2021. There's no way her article could have been AI-generated, but ZeroGPT seemed to disagree. This made me ponder the credibility of these sites.

Similarly, I have been reading your posts for a while now, but if I run your post through an AI-detecting site, this is what it shows

I think you missed a point: if someone uses Grammarly, there's a chance that an AI detector would label the text as AI-created content, because Grammarly makes your sentence structure perfect and eliminates grammatical mistakes.

My thoughts

I think it's very easy to catch AI content, especially an AI comment. For example, AI would read the entire post and construct a statement for you based on facts and figures, without any emotion being poured into the comment. I have had encounters with users who used AI comments on my posts; they're always in a monotone, fact-based, and a summarized form of the original post. It is important, in fact very important, to go through the entire post manually and not rely completely on these AI-detecting sites. There are countless posts in the crypto academy written through AI; ask me now, and I'll point them out for you. When you're into something for a very long period of time, you have this sort of 'gut feeling' that is correct most of the time, but verdicts cannot be passed based on feelings, can they?

Also, the other issue you mentioned, about moderators not being familiar with the English language, is a genuine one, and I'm a strong advocate of writing in our own languages. When others can, why can't we? But then again, we haven't been trained to write in Urdu, especially on a cell phone, have we?

Regards


No, I did not miss it. In fact, I mentioned it in the last bullet point in the "Reasons Behind False Positives" section of my post.

You are right, and that was indeed the very purpose of this post: to create awareness.

We can write in Urdu, but you know typing in Urdu is very difficult. Moreover, I think formatting your post in Urdu on Steemit is also a big problem. I believe this issue has been discussed before, but I can't remember which post it was.

Sorry for pulling Harry Potter into this, but I can't help quoting here a statement by Arthur Weasley...

AI cannot "think" of course but the effect is the same - it simulates a human brain. Sometimes, it gives great results but mostly it's faulty and very robotic (I mean what else can you expect from a bot).

I think your assumptions about false positive results are somewhat correct. I haven't experimented enough to give further opinion on this.

Personally, I use a similar approach to yours for languages that I don't know. But for English and Urdu, I generally don't use any AI detector. Sometimes it's the robotic tone that gives it away, and sometimes it's simply the author's writing style, which in some cases I'm already familiar with, so I know when it doesn't sound like him/her.

We all need to be more open and flexible in quality control. I don't deny the kinds of scams we are facing on Steem, but that doesn't mean we should shut our brains and catch AI authors solely with AI detectors.

Mostly it's this. AI will always use statements that are in monotone (speaking from my experience).


So sorry for this incident! I missed the notification, so sorry again for the delayed response. These things will keep happening, so the way you have adapted is the only way to raise awareness.

Oh no! Not you again!

Best of all, this time I am innocent. (•ิ‿•ิ)

The only thing I want to say is that I urge communities not to let moderators label a user's post as AI content. It should be referred to the admin, and if the admin is not sure, ask a Steemit representative. That is why we are here, to help.

