Solving Node RAM Consumption with a RAID 100-like Setup and 2 PERC Controllers

The exponential growth of data and memory consumption is a problem, or at least it will be in the near future. Most industry-grade servers, such as the Dell PowerEdge machines I'm using, support a maximum of 240GB of RAM. With about 500M of data growth daily, we can expect that industry-grade servers will soon not be enough to run a witness / seed node with enough plugins to support wallet operations and price feed publishing directly.

My witness node has the following set of plugins and public APIs enabled:

Public API: database_api login_api account_by_key_api network_broadcast_api

Plugins: witness account_by_key

Current memory consumption is: 54G
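To put those numbers together, here is a rough back-of-the-envelope sketch. It assumes growth stays constant at 0.5 GB per day (the "about 500M" above) and that the whole state has to fit in RAM. If growth accelerates, the real runway is shorter. The function name is just for illustration.

```python
def days_until_full(current_gb: float, limit_gb: float, growth_gb_per_day: float) -> float:
    """Days until memory use reaches the limit, assuming constant linear growth."""
    if growth_gb_per_day <= 0:
        raise ValueError("growth_gb_per_day must be positive")
    remaining_gb = max(limit_gb - current_gb, 0.0)
    return remaining_gb / growth_gb_per_day


# Figures from the post: 54G used today, 240GB server maximum.
# The 0.5 GB/day rate is an assumption (500M read as megabytes per day).
runway_days = days_until_full(current_gb=54, limit_gb=240, growth_gb_per_day=0.5)
print(f"Estimated runway: {runway_days:.0f} days")
```

Under these assumptions the server has roughly a year of headroom before RAM alone stops being enough.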

Another problem with non-persistent memory is the requirement to re-sync the database in case of failure. RAM is efficient and fast, but I still need to periodically stop my node in order to back up shared_memory.bin from /dev/shm, so I don't end up with a full resync in case of a power outage.
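For reference, the backup step itself can be sketched roughly like this. It is a minimal example, not my exact procedure: it assumes the node has already been stopped, and the backup directory in the usage note is a hypothetical path. The copy goes to a temporary name first and is verified by checksum, so a half-written backup never replaces a good one.

```python
import hashlib
import os
import shutil
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_file(source: Path, backup_dir: Path) -> Path:
    """Copy source into backup_dir, verify the copy, then rename it into place."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / source.name
    partial = backup_dir / (source.name + ".partial")

    shutil.copyfile(source, partial)
    if sha256_of(source) != sha256_of(partial):
        partial.unlink()
        raise IOError(f"checksum mismatch while backing up {source}")

    # os.replace is atomic when both paths are on the same filesystem.
    os.replace(partial, target)
    return target
```

With the node stopped, a call would look like `backup_file(Path("/dev/shm/shared_memory.bin"), Path("/backup/steem"))`, where `/backup/steem` is only a placeholder.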

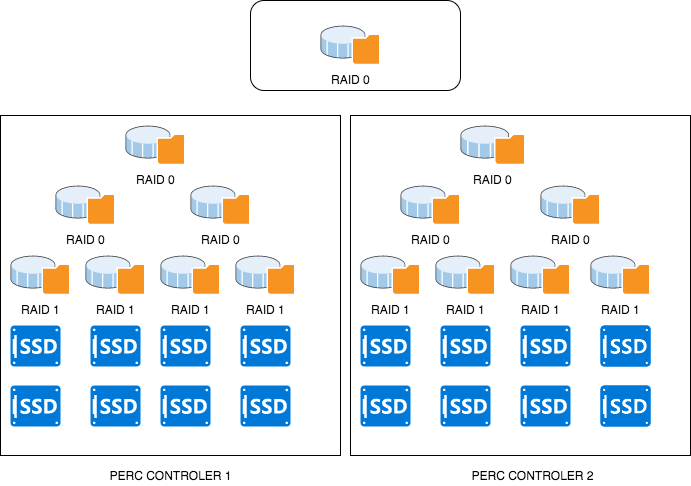

The Approach:

- 2 x PERC H740P controllers, each handling 8 x 1TB EVO SSDs.

- RAID 1 on the low layer for redundancy and persistence.

- RAID 0 on top, with a software RAID bridging the two controllers.

How it looks:
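As a rough sanity check on the layout above, the raw usable capacity can be estimated like this. The mirror width is an assumption (the list does not say how many drives are in each RAID 1 set), so it is left as a parameter, and the volume actually handed to the node can of course be smaller than the raw figure.

```python
def usable_capacity_tb(controllers: int, drives_per_controller: int,
                       drive_tb: float, mirror_width: int = 2) -> float:
    """Usable capacity of mirrored sets (RAID 1) striped together (RAID 0).

    mirror_width is the number of drives holding the same data in each
    RAID 1 set. The default of 2 is an assumption, not taken from the post.
    """
    if mirror_width < 1 or drives_per_controller % mirror_width != 0:
        raise ValueError("drives_per_controller must be a multiple of mirror_width")
    mirrors_per_controller = drives_per_controller // mirror_width
    # Striping adds the capacity of every mirror set; mirroring adds none.
    per_controller_tb = mirrors_per_controller * drive_tb
    return controllers * per_controller_tb


# 2 controllers x 8 x 1TB drives, assuming two-way mirrors.
print(usable_capacity_tb(controllers=2, drives_per_controller=8, drive_tb=1.0))
```

Wider mirrors trade capacity for redundancy: with `mirror_width=8` the same hardware yields 1TB per controller.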

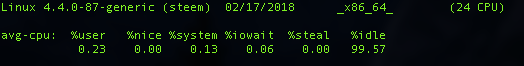

Here is the IOWait of my witness node (in production):

I think I can totally live with this level. 1TB of persistent storage should definitely last a long while. Moreover, no more re-syncing and easier backups. The node is up, and we will see how it performs and whether there are any missed blocks. So far, to my surprise, everything works as expected, even with consumer-grade SSDs that are not even SAS, just regular SATA, with which the PERC is backwards compatible.
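If you want to watch IOWait on your own node without extra tooling, here is a minimal sketch that derives it from two samples of the aggregate `cpu` line in `/proc/stat` on Linux. This is not the tool behind the numbers above, just one simple way to get a comparable figure.

```python
import time
from typing import List


def parse_cpu_line(line: str) -> List[int]:
    """Return the first eight counters (user .. steal) from the aggregate cpu line."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line from /proc/stat")
    return [int(value) for value in fields[1:9]]


def iowait_percent(before: str, after: str) -> float:
    """Share of CPU time spent in iowait between two /proc/stat samples."""
    deltas = [b - a for a, b in zip(parse_cpu_line(before), parse_cpu_line(after))]
    total = sum(deltas)
    if total <= 0:
        return 0.0
    # Field order is user, nice, system, idle, iowait, ... so iowait is index 4.
    return 100.0 * deltas[4] / total


def sample_iowait(interval_seconds: float = 1.0, path: str = "/proc/stat") -> float:
    """Take two samples interval_seconds apart and return the iowait percentage."""
    with open(path) as fh:
        before = fh.readline()
    time.sleep(interval_seconds)
    with open(path) as fh:
        after = fh.readline()
    return iowait_percent(before, after)
```

Calling `sample_iowait(5)` in a loop gives a quick view of whether the SSD-backed storage is keeping up.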

This is one approach to addressing the increased amount of data. The next one I will try to implement is an HAProxy-like split of traffic between multiple nodes, based on the block number. As this would require Layer 7 inspection and re-encryption, I don't expect miracles here, but it's worth trying, as we are going to need load balancing sooner or later.
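Nothing is implemented yet, but the routing decision behind a block-number split could look roughly like the sketch below. The shard boundaries and backend addresses are made up for illustration, and a real proxy would still have to extract the block number from each request first.

```python
import bisect
from typing import List, Tuple

# Hypothetical layout: each entry is (first block served, backend address).
SHARDS: List[Tuple[int, str]] = [
    (1, "node-a:8090"),
    (10_000_000, "node-b:8090"),
    (20_000_000, "node-c:8090"),
]


def pick_backend(block_num: int, shards: List[Tuple[int, str]]) -> str:
    """Return the backend whose block range contains block_num.

    shards must be sorted by first block; each range runs up to the
    next shard's first block, and the last range is open-ended.
    """
    if not shards or block_num < shards[0][0]:
        raise ValueError(f"no shard covers block {block_num}")
    starts = [first_block for first_block, _ in shards]
    index = bisect.bisect_right(starts, block_num) - 1
    return shards[index][1]


print(pick_backend(12_345_678, SHARDS))
```

Keeping the boundaries in a sorted list means adding a new node later is just one more entry at the end.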

There was a good proposal by @gandalf (or @gtg) to load balance based on different plugins loaded on different nodes. This could be an interesting approach as well, possibly taken a step further by NAT-ing packets based on transaction type.

What's important here is that I managed to achieve much better results with an ESXi virtualized host than with raw Ubuntu on the server. It seems that the drivers are messy, or there could be another kernel tweak that I am missing. However, given that it already runs this well on the ESXi hypervisor, I am sure we can achieve even better performance with kernel tweaks.
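A rough sketch of what plugin-based routing might look like at the request level is below. It assumes JSON-RPC requests of the form `{"method": "call", "params": [api_name, method_name, args]}`, which is an assumption about the request shape rather than something confirmed here, and the backend addresses are placeholders.

```python
import json
from typing import Dict

# Hypothetical mapping from API name to the node that has that plugin loaded.
API_BACKENDS: Dict[str, str] = {
    "database_api": "node-a:8090",
    "login_api": "node-a:8090",
    "account_by_key_api": "node-b:8090",
    "network_broadcast_api": "node-c:8090",
}


def backend_for_request(body: str, routes: Dict[str, str], default: str) -> str:
    """Pick a backend from the API name carried in a JSON-RPC request body.

    Falls back to default when the request does not match the assumed shape
    or names an API that has no dedicated node.
    """
    request = json.loads(body)
    params = request.get("params") if isinstance(request, dict) else None
    if request.get("method") == "call" and isinstance(params, list) and params:
        api_name = params[0]
        if isinstance(api_name, str):
            return routes.get(api_name, default)
    return default


example = json.dumps({
    "jsonrpc": "2.0",
    "method": "call",
    "params": ["account_by_key_api", "get_key_references", [[]]],
    "id": 1,
})
print(backend_for_request(example, API_BACKENDS, default="node-a:8090"))
```

This only covers the decision of where to send a request. The proxy still has to terminate TLS to read the body, which is the Layer 7 cost mentioned earlier.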

If you would like to support me, you can do that via the Steemit website:

If you are an advanced user, you can do it with an unlocked cli_wallet by executing:

vote_for_witness "yourusername" "crt" true true

Thanks to all supporters and all the members of the Steemit community.