Teaching AI to play Flappy Bird - the concept of perceptron | Neural Networks #1

Repository

https://github.com/jankulik/AI-Flappy-Bird

What Will I Learn?

- You will learn the basics of neural networks

- You will learn what the components of a perceptron are

- You will learn what the decision-making process of a perceptron looks like

- You will learn how a perceptron can learn by itself

Difficulty

- Basic


Overview

In one of my recent articles I presented a simple AI model that teaches itself to play Flappy Bird. Today, I would like to take a closer look at how this model works from the technical side and learn the basics of neural networks. In the first episode of this tutorial I will discuss the general concept of the perceptron, its core components and the learning algorithm. In the second episode I will show how to implement those concepts in Java code and build a fully fledged neural network.

Here is a video showing the learning process of this AI:

Perceptron - the simplest neural network

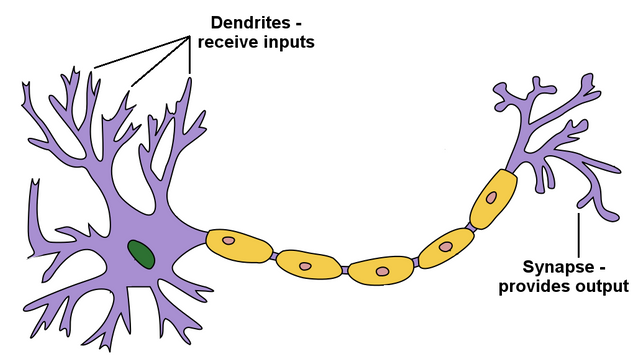

The human brain can be described as an incredibly sophisticated network of about 86 billion neurons communicating with each other using electrical signals. The brain as a whole is still an elaborate and complex mystery, but the structure of a single neuron has already been researched quite exhaustively. Simplistically, dendrites receive input signals and, depending on those inputs, the neuron fires output signals through synapses.


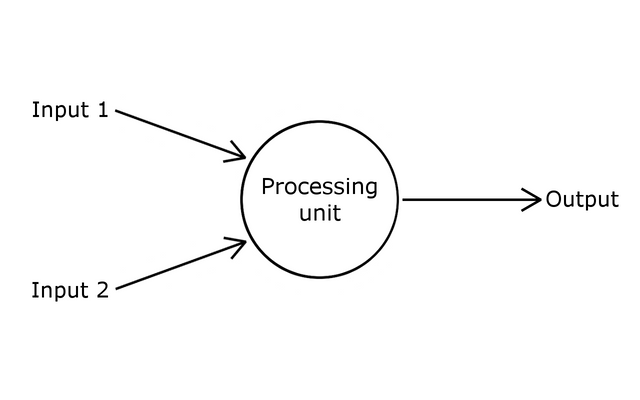

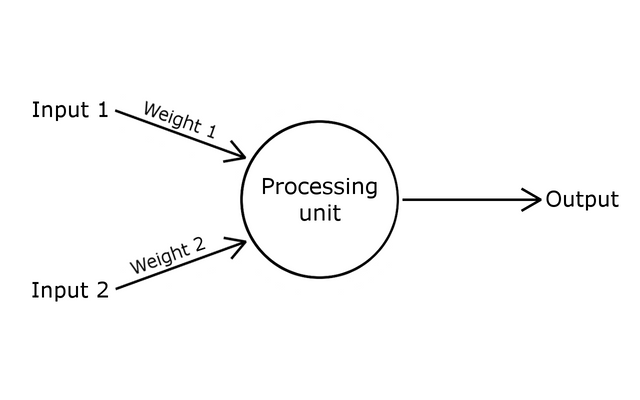

My Flappy Bird AI model is based on the simplest possible neural network, the perceptron: a computational representation of a single neuron. Like a real neuron, it receives input signals and contains a processing unit, which produces an output accordingly.

Components of perceptron

Inputs

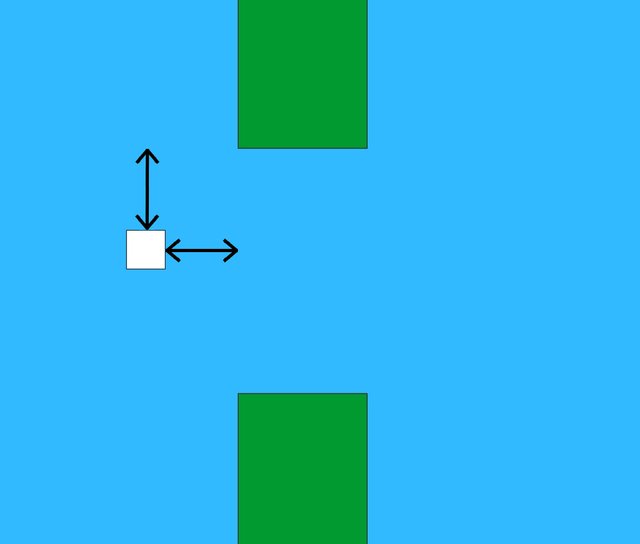

Inputs are numbers that allow the perceptron to perceive reality. In the case of my model, there are only 2 inputs:

- horizontal distance between the bird and the pipe

- vertical distance between the bird and the upper part of the pipe

In order to create an adaptive, self-learning model, each input needs to be weighted. A weight is a number (usually between -1 and 1) that defines how the processing unit interprets a particular input. When the perceptron is created, weights are assigned to each input randomly; they are then modified during the learning process in order to achieve the best performance.
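To make the random initialization concrete, here is a minimal Java sketch. It is not taken from the repository: the class name `RandomWeightsPerceptron` is hypothetical, and it assumes the weights are drawn uniformly from the range [-1, 1).

```java
import java.util.Random;

// Hypothetical sketch (not the repository's code): a perceptron whose
// weights start as random numbers, assumed here to lie in [-1, 1).
class RandomWeightsPerceptron {
    final double[] weights;

    RandomWeightsPerceptron(int inputCount, Random random) {
        weights = new double[inputCount];
        for (int i = 0; i < inputCount; i++) {
            // nextDouble() is uniform in [0, 1); scale and shift it into [-1, 1)
            weights[i] = random.nextDouble() * 2 - 1;
        }
    }

    public static void main(String[] args) {
        // Two inputs: horizontal and vertical distance to the pipe
        RandomWeightsPerceptron p = new RandomWeightsPerceptron(2, new Random(42));
        for (double w : p.weights) {
            System.out.println(w);
        }
    }
}
```

Passing the `Random` instance in (instead of creating it inside) makes it easy to use a fixed seed while experimenting, so a run can be repeated with the same starting weights.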

Processing unit

The processing unit interprets the inputs according to their weights. First, it multiplies every input by its weight, then it sums the results. Let's assume we have the following inputs and randomly generated weights:

Input 1: 27

Input 2: 15

Weight 1: -0.5

Weight 2: 0.75

Input 1 * Weight 1 ⇒ 27 * -0.5 = -13.5

Input 2 * Weight 2 ⇒ 15 * 0.75 = 11.25

Sum = -13.5 + 11.25 = -2.25

For these inputs and weights, our processing unit returns a value of -2.25.
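The multiply-and-sum step can be sketched in a few lines of Java. This is an illustrative version, not the author's implementation; the class and method names are hypothetical. It reproduces the example above.

```java
// Hypothetical sketch of the processing unit: multiply each input
// by its weight and add up the results.
class WeightedSum {
    static double weightedSum(double[] inputs, double[] weights) {
        if (inputs.length != weights.length) {
            throw new IllegalArgumentException("each input needs exactly one weight");
        }
        double sum = 0;
        for (int i = 0; i < inputs.length; i++) {
            sum += inputs[i] * weights[i];
        }
        return sum;
    }

    public static void main(String[] args) {
        double[] inputs = {27, 15};       // Input 1, Input 2
        double[] weights = {-0.5, 0.75};  // Weight 1, Weight 2
        System.out.println(weightedSum(inputs, weights)); // prints -2.25
    }
}
```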

Output

The final output of the perceptron in our case should be binary (true or false), as it needs to decide whether to make the bird jump or not. In order to convert our sum (in our example -2.25) into such an output, we need to pass it through an activation function. In this case it can be really simple: if the sum is positive, it returns true; if the sum is negative, it returns false.

Sum = -2.25

Sum < 0 ⇒ Output = false

Under these circumstances, our bird would not jump.

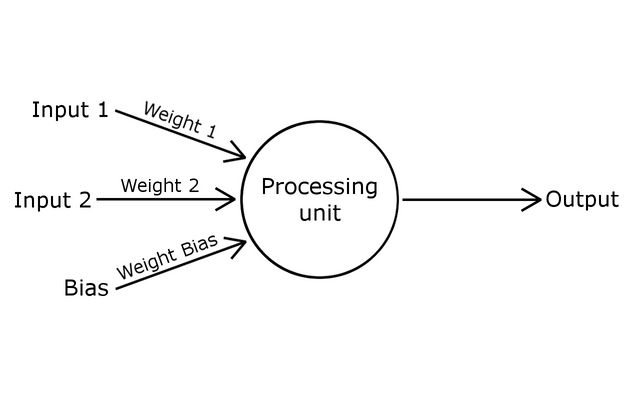
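The activation step described above is a simple step function. In this sketch (a hypothetical helper, not code from the repository) a sum of exactly 0 is treated as false; the text does not specify that case, so it is an assumption here.

```java
// Hypothetical sketch of the step activation function:
// a positive sum means "jump", anything else means "don't jump".
class StepActivation {
    static boolean activate(double sum) {
        // Assumption: a sum of exactly 0 is treated as false
        return sum > 0;
    }

    public static void main(String[] args) {
        double sum = 27 * -0.5 + 15 * 0.75; // -2.25, from the example above
        System.out.println(activate(sum));   // prints false: the bird does not jump
    }
}
```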

Bias

The situation becomes more complicated when both inputs are equal to 0:

Input 1: 0

Input 2: 0

Weight 1: -0.5

Weight 2: 0.75

Input 1 * Weight 1 ⇒ 0 * -0.5 = 0

Input 2 * Weight 2 ⇒ 0 * 0.75 = 0

Sum = 0 + 0 = 0

In that case, regardless of the values of the weights, the processing unit will always return a sum equal to 0. Because of that, the activation function will not be able to properly decide whether to perform a jump or not. A solution to this problem is a bias, which is an additional input with a fixed value of 1 and its own weight.

Here is the sum with the bias included (remember that the bias is always equal to 1, but its weight may be any number):

Input 1 * Weight 1 = 0

Input 2 * Weight 2 = 0

Bias * Bias Weight ⇒ 1 * Bias Weight = Bias Weight

Sum = 0 + 0 + Bias Weight = Bias Weight

In that case, the bias weight is the only factor that has an impact on the final output of the perceptron. If it is a positive number, the bird will jump; otherwise, it will continue falling. The bias defines the perceptron's behavior, and its understanding of reality, for inputs equal to 0.
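Adding the bias is one extra term in the sum. The sketch below is a hypothetical illustration, not the repository's code: the bias weights 0.3 and -0.3 are made-up values chosen only to show that, with both inputs at 0, the sign of the bias weight alone decides the output.

```java
// Hypothetical sketch: a perceptron with an extra bias input fixed at 1.
class PerceptronWithBias {
    static final double BIAS_INPUT = 1.0; // the bias input never changes

    final double[] weights;  // one weight per regular input
    final double biasWeight; // the bias has its own weight

    PerceptronWithBias(double[] weights, double biasWeight) {
        this.weights = weights;
        this.biasWeight = biasWeight;
    }

    // Weighted sum of the inputs plus Bias * Bias Weight
    double sum(double[] inputs) {
        double total = BIAS_INPUT * biasWeight;
        for (int i = 0; i < inputs.length; i++) {
            total += inputs[i] * weights[i];
        }
        return total;
    }

    // Same step activation as before: jump only for a positive sum
    boolean shouldJump(double[] inputs) {
        return sum(inputs) > 0;
    }

    public static void main(String[] args) {
        double[] zeroInputs = {0, 0};
        PerceptronWithBias jumper = new PerceptronWithBias(new double[]{-0.5, 0.75}, 0.3);
        PerceptronWithBias faller = new PerceptronWithBias(new double[]{-0.5, 0.75}, -0.3);
        System.out.println(jumper.shouldJump(zeroInputs)); // prints true
        System.out.println(faller.shouldJump(zeroInputs)); // prints false
    }
}
```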

Learning process

If the bird collides with a pipe, its perceptron must have made a wrong decision. In order to avoid such situations in the future, we need to adapt the perceptron by adjusting its weights. The output of our perceptron is binary, so there are only 2 possibilities:

- the bird collided with the upper pipe: it probably jumps too often, meaning the output is too often true, so the weights need to be decreased.

- the bird collided with the lower pipe: it probably jumps too seldom, meaning the output is too often false, so the weights need to be increased.

Weights can be easily adjusted using the following equation:

New Weight = Current Weight + Error * Input * Learning Constant

- Error can be described as the difference between the expected and the actual behavior. In our case the error is equal to 1 or -1, which determines whether the weights will be increased or decreased. If we need to increase the weights (the bird jumps too seldom), the error is equal to 1; if we want to decrease the weights (the bird jumps too often), the error is equal to -1.

- Input is the input assigned to the weight that we are modifying. We need to consider the value of the input at the moment of the bird's collision.

- Learning Constant is a variable that defines how rapidly the values of the weights change, thus controlling the pace of learning. The bigger the learning constant is, the faster the perceptron learns, but with lower precision. In the Flappy Bird model I used 0.001 as the learning constant.
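Putting the update rule together, here is a minimal Java sketch of one training step. It is an illustration under stated assumptions, not the author's code: the `Collision` enum and the method names are hypothetical, how the game detects which pipe was hit is not shown, and the bias is assumed to be stored as a third input fixed at 1 (with a made-up weight of 0.3) so that its weight is updated by the same rule.

```java
import java.util.Arrays;

// Hypothetical sketch of the learning rule:
// New Weight = Current Weight + Error * Input * Learning Constant
class PerceptronTraining {
    // Hypothetical labels for the two kinds of collision
    enum Collision { UPPER_PIPE, LOWER_PIPE }

    static final double LEARNING_CONSTANT = 0.001; // value used in the article

    // -1 when the bird jumps too often (upper pipe), +1 when too seldom (lower pipe)
    static int error(Collision collision) {
        return collision == Collision.UPPER_PIPE ? -1 : 1;
    }

    // Update every weight using the inputs seen at the moment of the collision
    static void train(double[] weights, double[] inputsAtCollision, Collision collision) {
        int err = error(collision);
        for (int i = 0; i < weights.length; i++) {
            weights[i] += err * inputsAtCollision[i] * LEARNING_CONSTANT;
        }
    }

    public static void main(String[] args) {
        double[] weights = {-0.5, 0.75, 0.3};    // last weight belongs to the bias (made-up value)
        double[] inputsAtCollision = {27, 15, 1}; // the bias input is always 1
        train(weights, inputsAtCollision, Collision.LOWER_PIPE);
        System.out.println(Arrays.toString(weights));
    }
}
```

Because the change is Error * Input * Learning Constant, an input that happens to be negative at the moment of collision flips the direction of the change for its weight; only the weights of positive inputs (and the bias) move in the direction the error suggests.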

I thank you for your contribution. Here are my thoughts. Note that my thoughts are my personal ideas on your post and, unlike the answers I gave in the questionnaire, they are not directly related to the review and scoring:

Content

Your contribution has been evaluated according to Utopian policies and guidelines, as well as a predefined set of questions pertaining to the category.


[utopian-moderator]

Thank you for your review, @yokunjon! Keep up the good work!

Thank you for your review.

Hi, again, @neavvy!

I have always wanted to learn about neural network programming, although I have never developed such a program. I'm still not used to the AI philosophy, especially artificial neurons or perceptrons.

Many years ago, I wrote a riddle program in C that was "learning". This program asked you about the characteristics of an animal and, if it could not guess it, added it to its database for the next guesses. It was actually a tree made with pointers. At that time, it was my first attempt to create artificial intelligence.

By the way, what language did you use to program?

BTW, look at this:

https://hackernoon.com/how-to-make-a-simple-machine-learning-website-from-scratch-1ae4756c8b04

Keep in touch

Thank you for reply dear @jadams2k18 :)

Wow, that sounds really interesting. Was this guessing and learning algorithm efficient?

I used Java in the Processing IDE.

This article is really interesting, thank you for sharing :)

Nah! When you closed the app (at that time we called them executables), it forgot everything, because the program didn't save the knowledge it had learned.

These programs ran on computers that used 5¼-inch floppy disks. They had those fat monochrome monitors that only showed an amber color that burned your retinas; besides that, you surely got a free X-ray every time you turned one on.

Dear @neavvy

I must quote this content:

But despite this very fine description, I still think that its complexity remains infinite. AI could emulate it in everything but never surpass it; the human brain will always look for a way to solve any problem, no matter how complex it may be. If AI ever succeeds, it will be only for a short time.

After your very didactic explanation it may all seem very simple, which it is not. Friend, I congratulate you for this.

Regards

A virtual hug for you.

You're right in what you propose friend @lanzjoseg.

As artificial intelligence programs improve, they become smarter. The human brain is also evolving in response to changes in the world. It is certain that its size has increased over time.

I am sure that, just as species evolve to survive, as Darwin indicated, the human brain is no exception.

Cheers!

You are right @jadams2k18. The human brain evolves extremely fast.

Thank you for your comment my dear friend @lanzjoseg!

Yes, I get your point. The human brain is definitely a complex mystery, and I don't think humanity is going to figure it out in the next few years.

Thank you :)

I enjoyed reading this article. I remembered my first forays with logic and basic programming.

The addition of variables might make the model more effective. But this procedure is so simple and so efficient.

I remember once when we learned the "Bubble Sort" method. Only one student was able to complete the procedure and he achieved it with many lines of code.

A couple of years later we met at my house and managed to perform random data sorting using the bubble method. Only this time the program consisted of no more than ten lines of code.

This is the difference between effective and efficient programming.

Dear @neavvy, I congratulate you for having an extremely efficient programming.

Thank you for your comment dear @juanmolina!

Sorting algorithms are indeed a bit tricky. Have you seen this visualization of Bubble sort in dance? I really love it.

Wow...LOL!

Thank you @neavvy, my friend.

Hi @neavvy, I'm very curious about your code.

I downloaded Processing, installed it on my MacBook, and ran the code. The window was too big for my MacBook screen.

To get some background in Processing, I studied some lessons on funprogramming.org. After that, I modified the code by changing the height of the window (690 instead of 1920):

void setup() { size(1080, 690); ... }

The window now fits pretty well on my screen. However, the birds and pipes are big compared with the new window size. I guess I have to resize them too.

Any clue to resize them? :)

BR, Daniel

Awesome post!

Machine learning is such an interesting topic!

Thank you @chasmic-cosm :) I saw you are interested in fractals - this is also a fascinating area!


Knowing whether it hit the upper or the lower pipe, and the generalization that if it hits the top it needs to flap less and if it hits the bottom it needs to flap more, were the parts I was missing.

The simple environment and neural network help a whole lot here. By knowing just how tweaking the weights will affect the output behavior, and that it's only a matter of signal frequency, you can make progress toward error reduction.

As you start using more complex networks, perhaps with many inputs/outputs and multiple wide layers, or in more complicated environments, perhaps even ones where some or all outputs have magnitude and are not just binary, knowing how to tweak what and when quickly becomes astronomically difficult.

Have you done anything with larger networks in richer environments?