Please refer to the latest announcement

For information about the (former) Utopian project please read the latest official announcement https://steemit.com/utopian-io/@utopian-io/utopian-is-joining-a-new-family-imminent-changes


Hello, and thank you for the opportunity to address the issues.

I'm asking so that we can gain insight into what they, as the customers, want (something free/fast, something solid that is expected to be edited again, or something perfect). Based on that, we could tweak the scoring system or define the priorities.

This is very important as I've seen something strange from my perspective.

As a Serbian, I also speak Croatian and Bosnian. Besides that, I speak English, French and Russian.

In several projects, I've found a lot of inconsistencies in the translations, some with completely conflicting meanings.

I wrote to the project leaders and got some responses, but... nobody actually cared.

That is not a good sign, and I suggest we pick projects more carefully, in order to support only those that are serious.

I'm thinking out loud, wondering whether the projects we support are willing to give something in return: at the very least, a backlink and a thank-you. I'm asking because the list of supported projects, with logos and fancy JS "gauge clusters", could attract projects that are willing to actually pay for services.

Sorry if the message was too long.

Best wishes

Alex

This is something we are working on. We have just on-boarded @lovenfreedom as our VIPO manager. One of the critical aspects of this position is to build the communication bridge between us and the projects we support, in order to gather their feedback and to require them to be proactive.

Well, I'd say you did a great job of bringing your concerns to the projects. If they don't care, then I agree we should probably raise the requirements. Do you have any specific suggestions on this topic?

This brings me back to the first point. Through the communication bridge, we will require projects that are satisfied with our support to give back. We still need to define exactly what; current ideas include SP purchase and delegation, credits and more. Let me know if you have any specific suggestions on this topic as well.

In order to have solid ground for negotiations, quality needs to be fostered. This leads to something important we are currently missing (judging by the Discord discussions). All of us, LMs and TRs alike, need to understand that we are replaceable. My (somewhat long-shot) suggestion is to encourage LMs to replace their translators if they are not good enough. To me, it's a mystery why there are translators who never produce a good translation, yet keep translating. Also, LMs must be held responsible for errors. We already have quantity; now we need quality.

From my POV, you should primarily focus on the real economy (real money). There, Utopian/DaVinci could have the edge, as your contributors are more or less free (paid in coins), which puts you in a more convenient position when negotiating with real investors. What I see now is that most crypto projects rely heavily on crypto, which is nice for the first stage and the hype, but later... predictability and sustainability sound better.

Thanks @elear for starting a discussion. I can see people have been expressing themselves openly and this is a good thing. There are lots of arguments I agree with.

First of all, let's talk about the questionnaire:

We have been brainstorming and discussing the questionnaire issue for months on Discord, but I can't say I am happy with the proposed draft. Why should we pay so much attention to the presentation post? It gets 2 questions (#1 concerning presentation/formatting and #2 concerning writing style/language/engagement; of course, the basic project details should all be there for the post to be considered complete). We could also skip this question, or just narrow the options down to a) basic and b) well-written, so that a translator who wants the extra points knows what to do.

Linear rewards, as @alexs1320 has also mentioned: this is an argument I agree with. When we have a 1,100-word and a 1,600-word contribution, we can't reward them the same (assuming they are of the same difficulty level and semantic accuracy).
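To make the linear-reward idea concrete, here is a minimal Python sketch. It assumes a hypothetical rate of points per 100 words, an optional difficulty multiplier, and a cap at 100; none of these names or numbers come from the actual Utopian questionnaire.

```python
def linear_reward(words: int, points_per_100_words: float,
                  difficulty_multiplier: float = 1.0, cap: float = 100.0) -> float:
    """Hypothetical score that grows linearly with word count, capped at `cap`."""
    raw = words / 100 * points_per_100_words * difficulty_multiplier
    return min(raw, cap)

# Two contributions of the same difficulty and semantic accuracy.
print(linear_reward(1100, 5.0))  # 55.0
print(linear_reward(1600, 5.0))  # 80.0
```

With equal difficulty and accuracy, the 1,600-word contribution scores proportionally more than the 1,100-word one, which is the point being argued; the cap keeps very long contributions from exceeding the maximum score.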

Defining more clearly the number of mistakes as well as the type of mistakes (semantics, spelling, typo) is also important as these define the quality of the text the LM receives.

Pre-defining the difficulty level of every project is also important and leads to a common agreement, leaving no room for unfair scoring across language groups (some LMs have rated projects as "high difficulty" while others rated the same projects as "average" or "low").

From what I (and others here) see, if this questionnaire is implemented, we will be "cutting down" points from very good translations. This will most likely demotivate people. We want to keep a balance and make everyone happy (as far as this is feasible). I don't think "punishing" good contributions for being good will get us anywhere. Instead, we should try to help "mediocrity" get better. The LM's feedback is supposed to help a translator get better and better, so why should we score excellent contributions lower? Just because the category's VP % is limited? Why not just drop the score/upvote % ratio a little?

Now regarding the category as a whole:

IMHO, Translations has been expanding too rapidly. We have brought so many people on board in just a few months that I doubt this will be sustainable in the long run, and I am not the only one to point this out (@scienceangel has said the same).

@rosatravels has tried to make the "translations community" more engaging and active, and she has made very beautiful posts featuring LMs and translators, but let's be honest: translation posts are not "engagement material", and their target group (here on Steemit) is very limited. We try to make our posts more enjoyable and give them a more personal tone, or explain a few things about the project we are working on or some of the tools and features it offers, but nobody other than the LM who reviews it will read what we write there.

If you listened to the last Utopian weekly, several users pointed out that our average score is too high (it's in the 70s). I know it's not pleasant to hear, but I think it would be fair to try to lower it a bit. Other categories score in the 50s and 60s. This doesn't mean "punishing" good users: we will try to maintain high rewards for great contributions (they will still be able to reach 100, with more emphasis on the absence of errors), but I hope you understand that we must deal with the final score issue. Right now it's just too easy to reach high scores. As for having too many users, it's kind of easy to say that now, but remember that we came from a higher Steem price (which could have allowed us to support more users), and most of the recruiting was done in the summer when, as you may remember, activity was much lower than today. I don't think having many translators is an issue, but we will have to standardize the number of contributions per team, so setting contribution limits per team may be worth considering too. That way, teams with more translators won't consume more VP.

I have missed a lot of updates these days with the new house and stuff, so I don't have the whole picture.

What I want to say is that high scores were easily attainable from the start, and people have gotten used to the good numbers (we had that conversation in the moderator chat some months ago; I don't remember the details now). I can speak for my team: I know we will keep working even if the scores are lowered. I have supported lowering the score/voting strength ratio for a long time. What I meant by punishing is that if this questionnaire (the one I saw 2 days ago) is implemented, some users may be put off by the lower scores they will get for the same effort (and I don't mean those close to the good-excellent threshold, but those who consistently provide excellent work).

Now, regarding the limit on contributions per team: we have already set a limit on contributions per translator, and I think that achieves more or less the same thing and is fairer. If we set team limits, translators in larger teams will be even more "confined". Setting a maximum number of translators per team might be fairer (4-7 sounds reasonable, depending on the language and how widely it is spoken).

I see lots of people have posted their views, I need to catch up with some more posts today...

I don't envy you; moving is tough. I did it myself in June (during our first recruiting window), so I appreciate that you found the time to answer my comment. In the new questionnaire we tried to reward contributions without errors more (also to add some objectivity to the scoring) and to increase the score difference between average and excellent translations. As for the number of users, I think each team should not have a set number. We should look instead at the teams' output. For example, 5 German translators will make fewer contributions than 5 Spanish translators. So we need to allocate a fixed number of contributions per team and then recruit enough users to keep that number balanced.
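The "fixed quota per team, then recruit to match" idea reduces to a ceiling division. This is a minimal Python sketch under assumed numbers: the quota of 20 and the per-translator averages below are hypothetical, not actual team figures.

```python
import math

def translators_needed(team_quota: int, avg_contributions_per_translator: float) -> int:
    """Smallest team size whose expected output reaches the team's quota."""
    return math.ceil(team_quota / avg_contributions_per_translator)

# Hypothetical figures: same quota, different average output per translator.
quota = 20
print(translators_needed(quota, 2.5))  # 8
print(translators_needed(quota, 4.0))  # 5
```

The quota stays fixed per team, so a team whose members contribute less on average would simply recruit more people to reach the same share, rather than consuming more VP.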

Thank you, @elear, for this opportunity and for setting us up with a meeting on Monday. I’ll do my best to participate.

In the meantime, my thoughts became too big for a single comment so I went ahead and wrote my first #iamutopian post.

My general thoughts run along the same lines as some comments I’ve read here already. I agree with most of the observations @scienceangel made regarding the new questionnaire, I agree with @dimitrisp’s comments on communication, and I definitely support @egotheist’s plea for allowing LMs to translate (although I understand the rationale behind not letting them). My post covers:

With regard to the new questionnaire, I understand the reasoning given for granularity, but I also agree with @scienceangel that our aim should be perfect or nearly perfect translations with good presentation posts. Each translator should be encouraged to submit only contributions that reach those thresholds, and therefore granularity in the review questionnaire would be useless in differentiating final scores.

As we move toward Utopian v2, the presentation of the post becomes less significant. What remains significant is giving reviewers as much detail as possible for a proper review, and that will be considered in the score. Let's talk Monday!

This is a brave move. A bit scary, because I have been so involved in this category for 6 months, maybe too involved. But I know many moderators and translators welcome this opportunity to speak out, and I appreciate that very much, because only in this way can we make some headway toward solving some problems.

The translation category was quite fun when it started in June, but as the months went on, I felt the heavy burden of fighting a losing battle against the many challenges in this category.

I've tried very hard to get the translation category going: reporting on the ongoing work, researching quality metrics, putting 100% of my effort into helping all users, answering the moderators' questions, interviewing the LMs to improve communication and build a closer working relationship as a team... only to find out today that "this is not really helping the category as it is not contributing in building a trail to vote the translators and LM". Perhaps someone else is better suited to take over this CM role, so I will fade out. I was also told that unfairness and resentment have built up, so it would be good if users could come forward and share openly what is bothering them.

Honestly, I think you did a good job in trying to improve things. It was obvious how much effort you put into your work because you wanted the category to succeed.

The current problems are definitely not a sign of failure on your end, but merely proof that as soon as money is involved, people will always try to game the system.

Can you please shed some more light on this point?

@elear, the quote there is exactly what @aboutcoolscience said to me the other day.

@rosatravels is the MVP of Translations (if not of Utopian as a whole)! I don't know if I could handle all the responsibility she has and, at the same time, make sure everyone is as happy as possible with how things are :)

I'm not a member of the Utopian Translation Team or the DaVinci Team, but here are my thoughts.

My first question would be: "How do you decide which language should be added next?" There are some languages I had never even heard of before. That may be my ignorance, but there are more widely spoken languages, and people are waiting for them to be included in the translation category.

Unfortunately, I'm not familiar with how the whole process works in the translations category. However, could working in such small teams cause things to be overlooked? AFAIK there are usually 3 translators with 1 moderator, so who is supposed to check that everything is going well in each category?

I saw many contributions with missing information; some even forgot to include their own Crowdin profile, and I never saw them being warned about it. Plus, those projects are usually open only to team members, so it's hard to check the work from outside.

It's weird that more than half of the contributions are translations, which makes me wonder whether it's too easy to get rewards through translating and whether that needs to be adjusted. I know that 400+ words is enough to get a reward; IIRC, it used to be 2k.

If the project is closed, a less intuitive way to do random checks is to go to the project's activity page:

It sucks, but it's better than nothing.

Additionally:

I found many (like MANY) weird transfers to the same exchanges and between translators and moderators, as well as odd account behavior, such as zero comments on the blockchain and zero interactions, yet hundreds of translation contributions, or close to it. Some of them don't even have a profile picture; they started directly with Utopian and have only ever translated through Utopian, nothing else.

With some languages, it will be hard to know for sure whether the people are genuine. I, for one, don't know a thing about Vietnamese, so unless we have known the LM for a while and trust him/her, we can never be sure the translators are all genuine (I am not saying that this particular team is making any crazy transfers; it's just an example). So I think @elear's idea of bringing in external translation experts to do occasional checks might be the best solution.

I believe the decision is based on the availability of LMs for those languages and their actual expertise. Additionally, the voting distribution may not allow us to expand too fast, and some languages may have to wait longer than others. Are there any specific languages you think should be considered first?

I recently proposed this idea to the core team: periodic audits, undertaken by teams of external professionals who aren't in any way linked to the existing teams, to reduce bias and mitigate potential abuse.

This is a good point. It should definitely be brought up with the DaVinci management.

Translators will disagree. There have been improvements to the questionnaire to make it more granular and this component has been made more significant for the calculation of the final score.

Would you mind creating a list and sharing it with me, if you have time?

https://hackmd.io/ek2E1Er1Sp-9VZecZweccQ Here is the list; sorry it took so long. It might be quite messy, but I tried my best to keep it as objective and clean as possible. I didn't include any personal opinions. If you find anything suspicious, you just need to take a closer look: for example, their first transactions on steemd, and whom they batch-voted and batch-followed right after the account was created. Check voting behaviour on steemreports and transfers in detail on steemworld.

cc: @elear @scienceangel @egotheist @imcesca @dimitrisp @jmromero @rosatravels @aboutcoolscience

Thanks @oups for putting in this extra effort to help us. We appreciate this information and will do research on them.

Thank you very much, @oups, for putting together such an exhaustive list. If I saw correctly, there were almost no teams without at least some transfers between members. This should definitely be investigated more deeply to determine the purpose of all those transfers between team members.

If the sources I saw are correct, one such list has already been shared. Was any action taken in response to it?

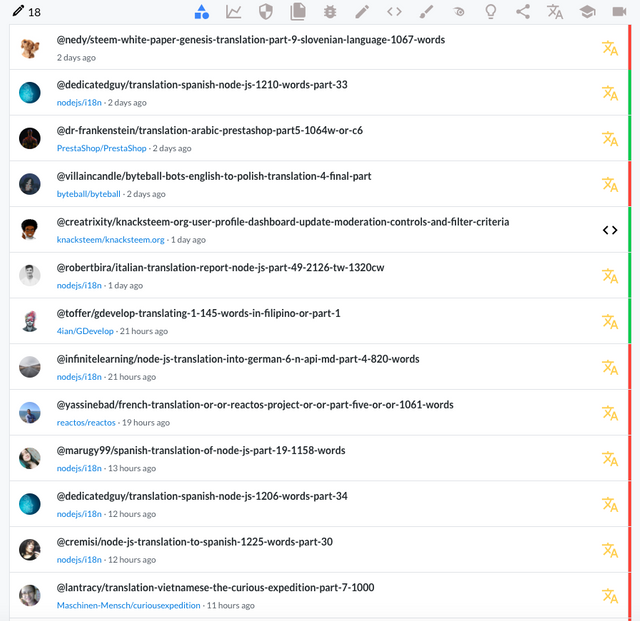

Great. I'm not trying to undervalue their work in any way. It's just that there are too many translation contributions, possibly because there are too many translators. This is what utopian.rocks looks like:

Since I'm Turkish :) Not that I'm going to translate, but I'd like to see it among the allowed languages. There are already many languages there, so why not Turkish? Simple man's logic.

Sure, it could take some time, though. I have spot-checked a few languages; now it's time to practice more Python and produce the whole list. 👍

Hi @oups, thank you very much for your interest to help us improve Translations category and prevent abuse. These are very serious accusations:

As one of the LMs, I fully support providing a list with the names of the LMs/translators responsible for such behavior, followed by a detailed check of their accounts for evidence.

In case such accusations turn out to be true and proven, those people should be immediately suspended from their positions.

Thanks as well for not taking it the wrong way; I'm just trying to do the right thing. I don't actually want to accuse anyone, only to make things more transparent so we can increase the level of trust.

Can you DM me the names of the translators/moderators via Discord?

Sure thing, once I've compiled the whole list. I'll share it with anyone interested.

Count me in too, please.

I'm interested too, so hit me up when you're done compiling the list 😉

If you suspect that some users may be abusing the system, please try to contact us directly so the message doesn't get lost or distorted by the time it reaches us. As for which teams to activate, that depends on several factors, from the quality of the applications to the team's abuse history. For example, we were told that the Turkish team committed several abuses in the past, so we have been extra careful before activating it. I think the newest Turkish applications we received are pretty decent, but please read the comments above: users are already complaining that there are too many of us, and you said yourself that there are too many translation contributions, so we must alternate periods of growth and consolidation. We are now in a consolidation phase and need to fix a few things before bringing more people in.

As I have already pointed out several times on the DaVinci Discord, the current way translations are evaluated is unfair to translators whose LM is stricter and more careful. In the past I didn't care about it that much, because I still received a decent upvote. Now, however, having a stricter LM tremendously decreases your chance of receiving an upvote. As I have often said, the evaluation scheme for translations should be tangible, so that it becomes more transparent and comprehensible for everyone. I fully support the accuracy and precision of my LM @Egotheist, because that is what this category needs.

I understand, @infinitelearning. Do you think some LMs may be more biased toward their teams than others? What would be the most objective approach for LMs to follow? What about a more granular and extensive Utopian Quality Questionnaire?

I don't think some LMs are more biased towards their teams than others. I think some LMs are simply more generous in evaluating translations. Currently, I'm not in a position to name LMs here, mostly because I don't speak most of the languages in the translation category. But what I can say for sure is that I strongly doubt the very high scores often seen in the translation category are due to the outstanding quality of the translations.

One system that could work: every LM may submit their own translations, which are then checked by another LM, so there are at least two LMs in each language category. I don't think an extensive questionnaire will lead to better results. Rather, the questionnaires should be tangible, meaning that you have to declare how many "sentences" you corrected, divided into three classes of mistakes (heavy, medium, easy). Because it takes into account how many words were translated, this system is flexible. I think the terms currently in use are not objective at all.
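A tangible, count-based scheme like the one proposed could look roughly like the Python sketch below. The severity weights (3/2/1), the normalization per 1,000 words, and the 5-points-per-unit deduction are all hypothetical choices made for illustration, not part of any existing Utopian questionnaire.

```python
from enum import Enum
from typing import Mapping

class Severity(Enum):
    # Hypothetical weights for the three proposed mistake classes.
    HEAVY = 3
    MEDIUM = 2
    EASY = 1

def weighted_errors_per_1000(mistakes: Mapping[Severity, int], words: int) -> float:
    """Weighted count of corrected sentences, normalized per 1,000 translated words."""
    weighted = sum(sev.value * count for sev, count in mistakes.items())
    return weighted * 1000 / words

def accuracy_score(mistakes: Mapping[Severity, int], words: int,
                   points_per_unit: float = 5.0) -> float:
    """Start from 100 and subtract a fixed number of points per normalized error unit."""
    penalty = points_per_unit * weighted_errors_per_1000(mistakes, words)
    return max(0.0, 100.0 - penalty)

corrections = {Severity.HEAVY: 1, Severity.MEDIUM: 2, Severity.EASY: 4}
print(accuracy_score(corrections, 2000))  # 72.5
print(accuracy_score(corrections, 1000))  # 45.0
```

The same set of corrections costs half as much in a contribution twice as long, which is the flexibility with respect to word count that the proposal describes; the LM only has to declare counts, not pick from subjective terms.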

I know that Utopian tries to bring value to the Steem blockchain, but I don't see the point of evaluating the style of the post; the score should be based solely on the translation in Crowdin. Let me know what you think, @elear.

There is a lot of unfairness in the current questionnaire, which sometimes causes awesome contributions to get lower scores.

I wonder if this could be fixed in the new questionnaire.

Example 2: a translator accurately translates 1,034 words, and the LM grades the translation as accurate. Compare this with a 1,300-word translation: let's say each string contains 20 words and some strings contain an error. There are still 1,150 accurately translated words, yet this contribution scores lower than the 1,034-word one. When scoring, we should also consider the number of words accurately translated (by string) versus the total number of words translated.
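The comparison above can be expressed as "words in error-free strings versus total words". Below is a minimal Python sketch, assuming each string is either fully accurate or flagged as containing an error; the `TranslatedString` type and the 65-strings-with-7-flagged contribution are hypothetical illustration data, not an actual review.

```python
from dataclasses import dataclass

@dataclass
class TranslatedString:
    words: int       # number of words in the string
    has_error: bool  # whether the LM flagged an error in this string

def accuracy_summary(strings):
    """Return (accurate words, total words, accurate/total ratio)."""
    total = sum(s.words for s in strings)
    accurate = sum(s.words for s in strings if not s.has_error)
    ratio = accurate / total if total else 0.0
    return accurate, total, ratio

# Hypothetical contribution: 65 strings of 20 words (1,300 words), 7 of them flagged.
contribution = [TranslatedString(20, i < 7) for i in range(65)]
accurate, total, ratio = accuracy_summary(contribution)
print(accurate, total, round(ratio, 3))  # 1160 1300 0.892
```

Reporting both the absolute number of accurate words and the ratio lets a reviewer see that such a contribution still delivers more correct words than a flawless 1,034-word one, which is the information the comment argues the current score hides.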

Lack of communication inside the team.

LMs and translators will get to know each other.

They could share ideas and tips for making fewer mistakes.

Brainstorming, etc.

Some of the teams have a lot of translators.

Good point

We are working on this

Point taken.

LM @ruah, it's good to hear from you about the imbalance in number of translators per language. Actually, 5 is fine for Team Filipino but those in other teams exceed that number.

I'm curious about how rewards are distributed per team. Let's say Utopian allots 20 votes to each team of 5 translators; members getting 4 votes each seems fair. But does Utopian value languages equally and/or reward translators equally?

Hi, LM @ruah, I read your statement and I agree with you.

Let's say the minimum is 1,000 words, and I translated 1,300 words with 3 mistakes. That leaves me 297 accurate words beyond the minimum, right? That should be enough to cover my 3 mistakes, but could those 3 mistakes still give us a lower score?

Yes, those minor imprecisions will overshadow your good work, but things will change with the new questionnaire, so don't worry. The DaVinci team is doing a great job of improving everything about translations.

A nice idea. However, I think a few things are missing, such as:

Talking will happen during the weeklies as usual. This is to avoid feedback getting lost.

Comments can come anytime. I will reply to each comment as soon as I can. No comment will be left without a reply (unless there's no reason for a reply of course)