Updates On World Generation and Passing Data From CPU to GPU

This update focused mostly on world generation and a few related items. For Into the Great Abyss, the plan is to have procedurally generated planets that each feel unique. At the same time, the goal is to have a recognizable set of features that lets the user derive some information about the planet from an aerial perspective alone. We want the player to be able to decide where to explore based on what they can see on the map. Suppose you are rapidly expanding your base and you need more stone and ore. You would then decide to expand toward the mountains rather than toward the nearby desert. We want this sort of information to be communicated intuitively to the player from the map alone.

One major challenge, however, is that those sorts of high-level details are all generated on the CPU. When we generate the world, we determine the distribution of resources in the main game program and store it in a set of objects, one for each location on the planet. This poses a major problem because all of the planet's terrain generation happens on the GPU. The first of the linked pull requests deals with this problem.

This contribution covers the following additions:

- A robust system for passing world data to the GPU

- Expansion of world generation

- Functioning seeds for world generation

- Several types of coherent noise algorithms for biome specific heightmap generation

- Connection of heightmap generation with base scene generation

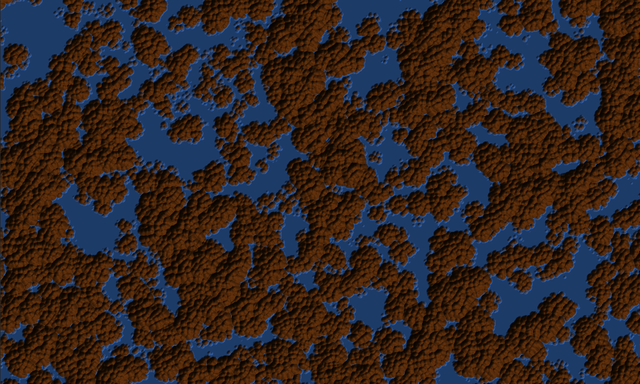

Here is what the world map looked like before these PRs. It was exactly the same every time the game was run.

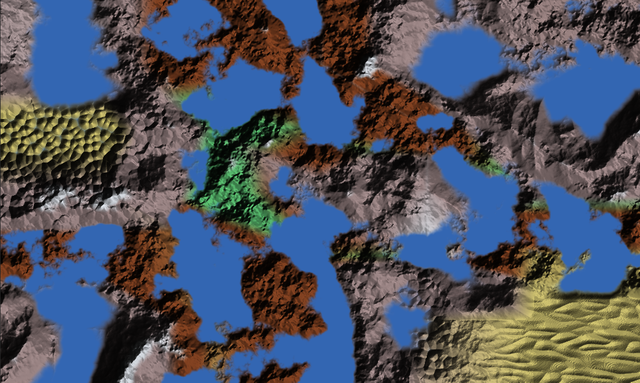

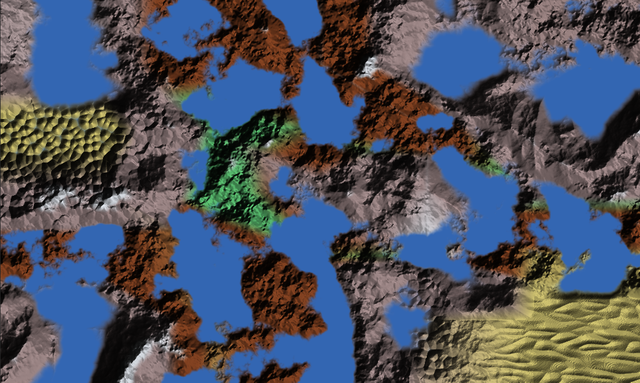

And here is what the world map looks like now.

I admit, the colors make it look a little bit messy, and I am not at all happy about the overall style of the map. But the functionality is now in place, and it is possible to start tweaking the parameters to get something that looks a little nicer. Most importantly, you can now see where the various biomes are; the mountains and deserts stand out especially.

New Features

Several types of coherent noise algorithms for biome specific heightmap generation

Specifically, two noise generation algorithms were added: one for generating mountains and one for generating hills. Both are based on fBm (fractional Brownian motion) noise, but each has unique properties that give it specific features.
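For readers who have not met fBm before, here is a minimal sketch of the idea in Python rather than shader code. It is not the noise used in the game: `hash2` and `value_noise` are hypothetical stand-ins for whatever hash and base noise the shader uses, and the `octaves`, `lacunarity`, and `gain` defaults are common textbook choices, not values from the project. The only point is to show how several layers of noise at increasing frequency and decreasing amplitude are summed.

```python
import math

def hash2(ix: int, iy: int, seed: int = 0) -> float:
    """Hypothetical integer hash mapped to [0, 1); stands in for a shader hash."""
    h = (ix * 374761393 + iy * 668265263 + seed * 2147483647) & 0xFFFFFFFF
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    h ^= h >> 16
    return h / 4294967296.0

def value_noise(x: float, y: float, seed: int = 0) -> float:
    """Lattice noise: hash the four surrounding corners and blend smoothly."""
    ix, iy = math.floor(x), math.floor(y)
    fx, fy = x - ix, y - iy
    # Smoothstep fade so there are no visible creases at cell borders.
    ux = fx * fx * (3.0 - 2.0 * fx)
    uy = fy * fy * (3.0 - 2.0 * fy)
    a = hash2(ix, iy, seed)
    b = hash2(ix + 1, iy, seed)
    c = hash2(ix, iy + 1, seed)
    d = hash2(ix + 1, iy + 1, seed)
    top = a + (b - a) * ux
    bottom = c + (d - c) * ux
    return top + (bottom - top) * uy

def fbm(x, y, octaves=6, lacunarity=2.0, gain=0.5, seed=0):
    """Sum octaves of noise: each one has higher frequency, lower amplitude."""
    total, amplitude, frequency, norm = 0.0, 1.0, 1.0, 0.0
    for _ in range(octaves):
        total += amplitude * value_noise(x * frequency, y * frequency, seed)
        norm += amplitude
        amplitude *= gain
        frequency *= lacunarity
    return total / norm  # normalized back to roughly [0, 1]

if __name__ == "__main__":
    print(fbm(1.3, 2.7))
```

The mountain and hill variants described here start from this kind of sum and then change what happens inside the loop.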

The mountain noise specifically took a lot of work to get right. It is based on ridged-multifractal noise, but it combines a few other properties. First, it uses the derivatives of each noise layer to offset the next layer, which gives a more organic look. Second, it uses two layers of noise simultaneously to create peaks rather than ridges. Third, it uses the cumulative sum of the noise derivatives to smooth out flat features and to highlight rough features.

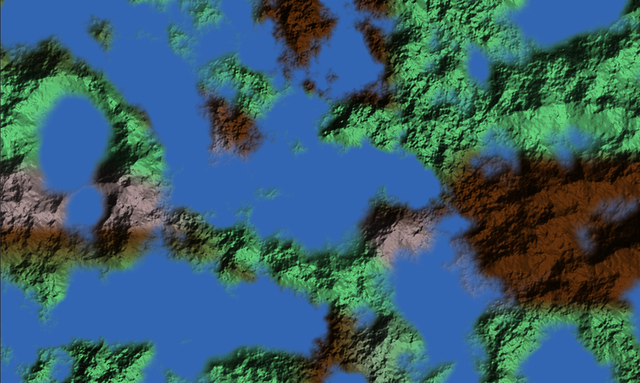

With the addition of mountain noise the heightmap gets a lot more interesting. Here is what it looks like when using mountain noise instead of the original noise.

Notice the organic looking ridges forming around the map?

Noise algorithms are sometimes more art than science, and often take a lot of work to get right. This one in particular took a long time to achieve the look I was going for. The code to generate the mountains is below:

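    float mountain(vec2 p) {
        // Rotates and scales the sample position by 2.0 for each octave.
        mat2 m = mat2(vec2(1.6, 1.2), vec2(-1.2, 1.6));
        float total = 0.0, a = 0.45;
        vec2 d = vec2(0.0);
        // Second sample position, offset so the two noise layers differ.
        vec2 p2 = p + vec2(1000.0, 512.12);
        vec2 d2 = vec2(0.0);
        for (int i = 0; i < 6; i++) {
            // noised() returns the noise value in .x and its derivatives in .yz.
            // Each lookup is offset by the derivatives accumulated so far.
            vec3 n = noised(p + 2.5 * d);
            vec3 n2 = noised(p2 + 2.5 * d2);
            d += n.yz * a * -n.x;
            d2 += n2.yz * a * -n2.x;
            // Multiply two ridged layers to get peaks rather than ridges,
            // then attenuate by the accumulated derivatives d and d2.
            total += ((1.0 - smoothabs(n.x)) * (1.0 - smoothabs(n2.x)) * a) / (1.0 + dot(d, d)) / (1.0 + dot(d2, d2));
            p = m * p;
            p2 = m * p2;
            // The next octave's amplitude depends on the height built so far.
            a *= 0.6 * pow(total, 0.5);
        }
        return total;
    }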

If you are unfamiliar with shader code this may look a little nightmarish. That is okay; it takes everyone a little while to get comfortable with shaders. There are also a lot of magic numbers in here. That is inherent to procedural generation: you end up tweaking a lot of values to get things just right.

The code for these changes is spread across PR 97 and PR 102.

A robust system for passing world data to the GPU

It is very important for us to be able to pass in a lot of location specific data relating to things like humidity, temperature, rockiness, and height into the shader that draws the map. These four properties can be used to determine the biome, and consequently how each section of the map should look.
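As a rough illustration of how four such values could map to a biome, here is a small Python sketch. Everything specific in it is an assumption made for the example: the `TileData` class, the `classify_biome` function, the thresholds, and the "ocean" and "plains" labels do not come from the project, and all values are assumed to be normalized to the range 0 to 1.

```python
from dataclasses import dataclass

@dataclass
class TileData:
    """Hypothetical per-tile record; all fields assumed to lie in [0, 1]."""
    humidity: float
    temperature: float
    rockiness: float
    height: float

def classify_biome(tile: TileData, waterline: float = 0.3) -> str:
    """Pick a biome label from the four properties (illustrative thresholds)."""
    if tile.height < waterline:
        return "ocean"
    if tile.rockiness > 0.6 and tile.height > 0.7:
        return "mountains"
    if tile.humidity < 0.25 and tile.temperature > 0.6:
        return "desert"
    if tile.rockiness > 0.4:
        return "hills"
    return "plains"

if __name__ == "__main__":
    print(classify_biome(TileData(0.1, 0.9, 0.2, 0.5)))
```

In the shader the decision would more likely be a smooth blend than a hard `if` chain, but the inputs are the same four numbers.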

If you have worked with shaders much, you understand the frustration of trying to pass a lot of information from the CPU side of your program to the GPU. Godot's shading language is nearly identical to GLSL and, in particular, shares all the same limitations around uniforms. If you aren't familiar with shaders, a uniform is a variable declared in the shader whose value is written from the CPU and can be read anywhere inside the shader program. In Godot, uniforms are limited to basic types (float, int, vec2, vec3, vec4, etc.). You cannot pass an array directly to your shader, nor can you pass in a custom object.

For Into the Great Abyss, we want to pass a set of values for each tile on the map. At a minimum this means 4 floats per tile for 25 tiles, a total of 100 values that would need to be passed in. While possible, this is not desirable. Even packed as one vec4 per tile, it would mean at least 25 lines of variable definitions at the top of every shader program that requires access to planet information.

Our solution for this problem was to draw the map as a series of quads instead of one large quad, and then pass the tile-specific information in as a vertex color instead of as a uniform. There are two major benefits to this approach: 1) the values are automatically blended based on distance to the vertex, so blending of properties between tiles is automatic, and 2) each shader file now only needs to access one variable to get all of its tile-specific information. Currently each vertex is assigned a random color value, but in the future the vertex information will be determined by a procedure.
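To show what "automatically blended" means, here is a small Python sketch of the interpolation a GPU performs across one triangle of a quad (ignoring perspective correction). The function names are made up for this example, and treating the four color channels as humidity, temperature, rockiness, and height is an assumption about how the data might be packed.

```python
def barycentric(p, a, b, c):
    """Barycentric weights of 2D point p inside triangle (a, b, c)."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    wa = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    wb = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return wa, wb, 1.0 - wa - wb

def interpolate_color(p, verts, colors):
    """Blend the three vertex colors (RGBA tuples) at point p."""
    wa, wb, wc = barycentric(p, *verts)
    return tuple(wa * ca + wb * cb + wc * cc for ca, cb, cc in zip(*colors))

if __name__ == "__main__":
    verts = ((0.0, 0.0), (1.0, 0.0), (0.0, 1.0))
    # Assumed packing: (humidity, temperature, rockiness, height) per vertex.
    colors = ((1.0, 0.0, 0.0, 1.0), (0.0, 1.0, 0.0, 1.0), (0.0, 0.0, 1.0, 1.0))
    print(interpolate_color((0.5, 0.0), verts, colors))
```

At a vertex the fragment sees exactly that vertex's values, and halfway along an edge it sees an even mix of the two endpoints, which is why no extra blending code is needed in the shader.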

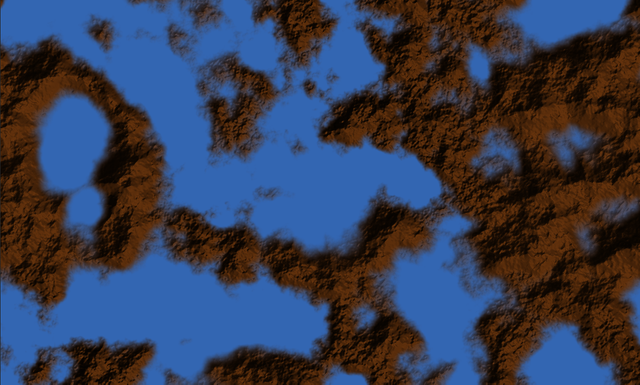

If you were to draw the quads with their assigned colors it would look like this:

The shader can then read those color values and output a map that takes them into account.

Just to highlight how this could be used in the generation step, I colored the map randomly based on the input values.

With this change we can now pass in an arbitrary number of variables easily. Additionally, if we decide to alter the number of tiles on the map, it is as simple as changing the range of a for loop, rather than having to reset 25+ values in the shader by hand.

The code for this change is found in PR 97.
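A sketch of that loop, written in Python rather than the game's own scripting code, might look like the following. The `build_tile_grid` function and the assumption that the 25 tiles form a 5 by 5 square grid are mine; the random vertex colors mirror what the post describes as the current behavior.

```python
import random

def build_tile_grid(tiles_per_side: int, rng: random.Random):
    """Return (vertices, colors, quads) for a square grid of quads.

    vertices: 2D positions in [0, 1]
    colors:   one random RGBA tuple per vertex (placeholder tile data)
    quads:    four vertex indices per tile
    """
    n = tiles_per_side + 1  # vertices along one side
    vertices, colors = [], []
    for j in range(n):
        for i in range(n):
            vertices.append((i / tiles_per_side, j / tiles_per_side))
            colors.append(tuple(rng.random() for _ in range(4)))
    quads = []
    for j in range(tiles_per_side):
        for i in range(tiles_per_side):
            v0 = j * n + i  # index of this tile's first corner
            quads.append((v0, v0 + 1, v0 + n + 1, v0 + n))
    return vertices, colors, quads

if __name__ == "__main__":
    vertices, colors, quads = build_tile_grid(5, random.Random(0))
    print(len(vertices), len(quads))
```

Changing the map to 8 by 8 tiles is then a one-argument change, with no shader edits required.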

Functioning seeds for world generation

This change was a very big step towards getting unique planets working. Before, a unique seed was set each time the game was run, but it had no impact on the actual generation of the planet. Additionally, it was limited to integers. Now the seed can be any string, and it actually affects the world generation. Currently it does so in two ways: 1) it changes the tile parameters that were mentioned in the changes above, and 2) it alters the hashing functions inside the shader so that the noise functions output different results even with the same input values.

The code for this change is found in PR 102.
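One way to turn an arbitrary string into something both the CPU and the shader can use is sketched below in Python. This is not the project's implementation: the use of SHA-256, the `seed_from_string` and `world_parameters` names, and the way `hash_offset` is derived are all assumptions chosen to keep the example deterministic and short.

```python
import hashlib
import random

def seed_from_string(seed: str) -> int:
    """Map any string to a stable 32-bit integer.

    Python's built-in hash() is randomized per process for strings,
    so a cryptographic digest is used here to stay reproducible.
    """
    digest = hashlib.sha256(seed.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")

def world_parameters(seed: str, tile_count: int = 25):
    """Derive both seed-dependent inputs from one string."""
    value = seed_from_string(seed)
    rng = random.Random(value)
    # 1) Per-tile parameters, four floats each.
    tiles = [tuple(rng.random() for _ in range(4)) for _ in range(tile_count)]
    # 2) A small float that could be passed as a uniform and added
    #    inside the shader's hash so the noise changes with the seed.
    hash_offset = (value % 10000) / 10.0
    return tiles, hash_offset

if __name__ == "__main__":
    tiles, offset = world_parameters("into the great abyss")
    print(len(tiles), offset)
```

The same string always yields the same tiles and offset, so a planet can be shared just by sharing its seed.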

Expansion of world generation

In addition to the noise functions as discussed above, the world generation now takes into account the tile-based planet data (also discussed above). The code for computing normals was overhauled and is functioning better. And finally basic raymarched shadows were added to give an additional sense of depth to the map.
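The post does not show the normal code, so the following is only a sketch of one common approach, central differences on the height function, written in Python. The `heightmap_normal` name and the `eps` and `strength` parameters are illustrative, and Y is assumed to be the up axis.

```python
import math

def heightmap_normal(height, x, z, eps=0.01, strength=1.0):
    """Approximate the surface normal of y = height(x, z).

    height:   callable returning the terrain height at (x, z)
    eps:      sampling offset for the finite differences
    strength: exaggerates or flattens the slope (illustrative knob)
    """
    # Central differences estimate the slope along each axis.
    dhdx = (height(x + eps, z) - height(x - eps, z)) / (2.0 * eps)
    dhdz = (height(x, z + eps) - height(x, z - eps)) / (2.0 * eps)
    nx, ny, nz = -dhdx * strength, 1.0, -dhdz * strength
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return nx / length, ny / length, nz / length

if __name__ == "__main__":
    # A plane rising along x tilts the normal back toward -x.
    print(heightmap_normal(lambda x, z: x, 0.5, 0.5))
```

Flat ground gives a normal pointing straight up, and steeper slopes tilt it away from the uphill direction, which is what lets lighting pick out ridges.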

Shadows are a tricky thing to add to generated heightmaps. You have to sample the heightmap along a ray stepping towards the sun and check if the ray ever goes below the terrain. In code this looks like:

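    float shadow(vec3 position, vec3 sun_direction) {
        vec3 p = position;
        // Step size, scaled to the map resolution.
        float r = 3.0 / resolution.x;
        float shaded = 1.0;
        // March from the surface toward the sun for a fixed number of steps.
        for (int i = 0; i < 30; i++) {
            p += sun_direction * r;
            float h = texture(heightmap, p.xz).x;
            // The ray dipped below the terrain, so something blocks the sun.
            if (p.y < h) {
                return 0.5;
            }
        }
        // Nothing was hit: fully lit.
        return shaded;
    }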

This is a very common way of checking for shadows on a generated heightmap. With this addition it is easier to get a sense of scale on the map. The mountains appear larger than the hills, even when viewed directly from above.

Now the map is drawn with some sense of biomes. This allows us to convey a lot more information about the planet on the map itself. Here is a picture of the current state of the map so you can see the different biomes. Right now the whole thing looks messy and awkward, but that is okay for now. Later, once we have a clearer picture of all the things we want from the map, we will take a stab at making it look nice. Right now it is enough to be able to see the data we have passed in.

These changes can be found mixed into PR 97 and PR 102.

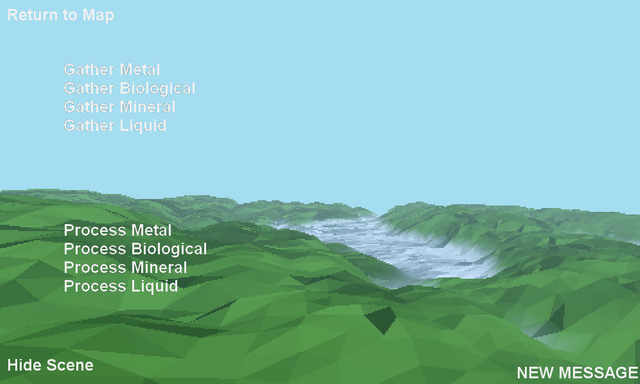

Connection of heightmap generation with base scene generation

Previously, when entering the base and looking at the local 3D view, you were greeted with a random 3D scene. Now the scene is drawn using the section of the heightmap that the base is located in. This change required a custom shader to be written for the 3D scene that takes the heightmap used in the map drawing, cuts out the section that is important, and then draws the 3D scene based on that section of heightmap. Additionally, the terrain changes color when it is below the waterline. There is a lot of work to be done on 3D scenes, but this initial step is in the right direction.

The changes here are all found in PR 100.
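The real work happens in a shader, but the two steps (cut out a section, then color by waterline) can be sketched on the CPU in Python. The function names, the list-of-lists heightmap, the waterline value, and the two colors are all placeholders invented for this example.

```python
def crop_heightmap(heightmap, row, col, size):
    """Cut a size x size section out of a 2D heightmap (list of rows)."""
    return [r[col:col + size] for r in heightmap[row:row + size]]

def terrain_color(h, waterline=0.3):
    """Pick a color by height; both colors are arbitrary placeholders."""
    underwater = (0.1, 0.3, 0.6)
    land = (0.4, 0.6, 0.3)
    return underwater if h < waterline else land

if __name__ == "__main__":
    world = [
        [0.1, 0.2, 0.3, 0.4],
        [0.2, 0.3, 0.5, 0.6],
        [0.3, 0.5, 0.7, 0.8],
        [0.4, 0.6, 0.8, 0.9],
    ]
    # Section of the map that the base sits in (hypothetical location).
    local = crop_heightmap(world, row=1, col=1, size=2)
    print(local)
    print([[terrain_color(h) for h in r] for r in local])
```

In the shader the crop amounts to remapping the texture coordinates to a sub-rectangle of the heightmap instead of copying data.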

Those are all the recent changes. Let me know if you would like more detail about any of the changes (especially the noise generation, I could talk for days on that).

For more details on the project check out the github repo! Also if you would like to contribute you can open an issue or submit a PR!

Posted on Utopian.io - Rewarding Open Source Contributors

Well, with new graphics and technology the CPU became weak, so here the GPU enters. The GPU has a lot of cores and is designed for graphics and algorithm solving, so yes, the world needs to evolve and also use the GPU.

Thank you for the contribution. It has been reviewed.

Awesome screenshots, I look forward to game play.

Hey @clayjohn! Thank you for the great work you've done!

We're already looking forward to your next contribution!

awesome work with some nice algorithms! one thing i don't understand is how the player actually explores these lands. does it work like a traditional rts game?

Currently the player explores by expending a certain amount of resources and energy. Typically the map is not uncovered the way it is in these screenshots. When gameplay is further along I will make sure to make a post about it!

wow. sounds like a lot of strategy is needed. can't wait to see more!