Scientists Build Computer Code That Can Decipher Moving Pictures From Brain Data

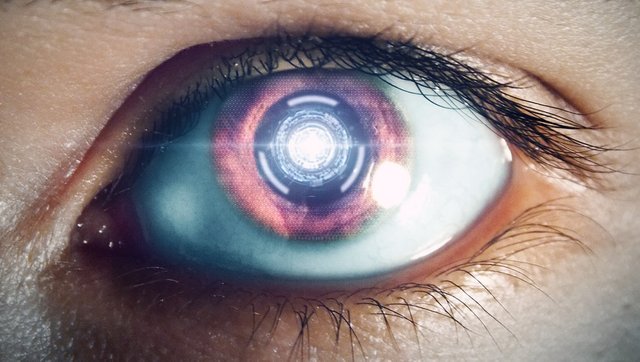

Researchers have been working to bring visual prosthetics (also known as bionic eyes) to life for quite some time, developing strategies to reactivate the parts of the brain that process visual information in people who are blind.

Traditionally, scientists have thought that the basic code of perception is the number of spikes of electrical activity that neurons produce when they fire. Yet neurons constantly speed up and slow down their firing. A new study by scientists at the Salk Institute shows that seeing the world relies not only on how many spikes occur during a given period of time but also on the exact timing of those spikes.

Salk Professor John Reynolds, the study's senior investigator, says that in vision there turns out to be a huge amount of information in the patterns of neuronal activity over time, and that increased computing power and new theoretical advances have now made it possible to begin exploring those patterns. The complete study was published in the journal Neuron on August 4, 2016.

The brain contains an extensive network of neurons that allows us to see everything from simple shapes to complex stimuli. This works because particular groups of neurons are excited by particular features, such as a horizontal or a vertical edge. Reynolds and his team focused on V4, an area in the middle of the brain's visual system that picks up on contours. Neurons throughout V4 are sensitive to the contours that define the boundaries of objects, which helps us recognize a shape no matter where it sits relative to other objects around us. However, in 2013 Reynolds and postdoctoral researcher Anirvan Nandy discovered that V4 is far more complicated than initially thought: some neurons in the area respond to contours only within a specific spot in the visual field.

This refined understanding of V4 led the team to wonder whether its activity code might be even more nuanced, carrying visual information not just in space but also in time. Nandy, lead author of the new paper, notes that we do not see the world as if we were looking at a series of photographs: we live and see in real time, and our neurons capture that.

The scientists collaborated with Salk theoretician and postdoctoral researcher Monika Jadi to build a computer program they called an "ideal observer." Given only the brain data, the program could decipher which moving pictures had been seen. One version of the ideal observer had access only to the number of times the neurons fired, while another had access to the full timing of the spikes. The timing-aware observer identified the images with complete accuracy more than twice as often as the count-only observer.
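To make the difference between the two observers concrete, here is a toy sketch in Python. It is not the Salk team's ideal observer, whose implementation is not described here. It assumes hypothetical Poisson-spiking neurons and two made-up stimuli, "A" and "B", that evoke the same expected number of spikes (20) but at different times across four time bins. Both observers are built as maximum-likelihood decoders (a standard way to construct an ideal observer when the response statistics are known): the count-only version sees just the total spike count, while the timing-aware version sees the count in each time bin.

```python
import math
import random

# Hypothetical stimuli and rates (NOT from the study): expected spike counts
# in four successive time bins. Both stimuli evoke the same expected total
# (20 spikes) but differ in WHEN the spikes tend to occur.
RATES = {
    "A": [8.0, 2.0, 2.0, 8.0],
    "B": [2.0, 8.0, 8.0, 2.0],
}


def poisson(lam, rng):
    """Draw a Poisson-distributed integer (Knuth's method; fine for small lam)."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def log_likelihood(counts, rates):
    """Poisson log-likelihood of binned counts, dropping the log(k!) term,
    which is identical for every candidate stimulus and so cannot change the decision."""
    return sum(k * math.log(lam) - lam for k, lam in zip(counts, rates))


def decode(counts, use_timing):
    """Guess the stimulus with the highest likelihood given the observed spikes."""
    best, best_ll = None, -math.inf
    for name, rates in RATES.items():
        if use_timing:
            # Timing-aware observer: compares the spike count in every bin.
            ll = log_likelihood(counts, rates)
        else:
            # Count-only observer: sees just the total number of spikes.
            ll = log_likelihood([sum(counts)], [sum(rates)])
        if ll > best_ll:  # strict '>' means ties go to the first stimulus ("A")
            best, best_ll = name, ll
    return best


def accuracy(use_timing, trials=2000, seed=0):
    """Fraction of simulated trials decoded correctly (stimuli alternate A, B, ...)."""
    rng = random.Random(seed)
    correct = 0
    for i in range(trials):
        shown = "A" if i % 2 == 0 else "B"
        counts = [poisson(lam, rng) for lam in RATES[shown]]
        correct += int(decode(counts, use_timing) == shown)
    return correct / trials


if __name__ == "__main__":
    print("count-only observer:", accuracy(use_timing=False))
    print("timing-aware observer:", accuracy(use_timing=True))
```

On this synthetic data the count-only observer sits at chance: both stimuli predict the same total, so every comparison is a tie that it resolves in favor of "A", scoring exactly 50%. The timing-aware observer is right on nearly every trial. These numbers reflect only the invented rates chosen here, not the study's results.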

These findings were made possible by improved methods for recording from and stimulating the brain, together with better theoretical modeling. The group plans to go beyond observing V4 and actually activate it with light, using a cutting-edge technique known as optogenetics. Reynolds likens this to taking the visual system for a spin: it should help researchers better understand the relationship between patterns of neuronal activity and how the brain perceives the world around us, and it could lay the groundwork for far more advanced visual prosthetics.
