Advancement Of "Artificial Intelligence" Into "Artificial Consciousness"

Introduction

No doubt, we have witnessed the rise of intelligent machines, for which the term "Artificial Intelligence" is the most fitting description. It has long been speculated that a point is approaching when machines (AI, as the case may be) will outsmart humans. Looking at this holistically, however, most of the emphasis has been placed on the cognitive abilities (the cognitive domain) of machines compared with the brain power of humans. But what about the other domains, such as the affective domain and the psychomotor domain? Would advances in these areas create machines with human-level consciousness? Let's find out.

[Image Source: Pixabay: CC0 licensed]

Concept of Artificial Consciousness (AC)

Okay! Here's the thing: AI is largely based on pre-programmed algorithms that enable a machine to review information from a database and relate it to a real-life scenario. But this does not necessarily imply self-awareness. Truth is, we have seen major advances in the field of AI, such as the self-driving car and the chatbot, not to mention medical AIs (da Vinci, robo-surgeons, etc.). Yes, this is brilliant, but does it equate to consciousness? Permit me to reiterate the words of a renowned futurist, Ian Pearson, once again:

AI could be a billion times smarter than humans. - Ian Pearson. Source. [Paraphrased by me]

The key word here is "smarter", but what areas would it cover? Will it include human-level consciousness, and the ability to process huge amounts of information in real time without any prior data concerning it? These are some of the qualities that are the absolute prerogative of the human brain. In my previous post, I mentioned the possible replacement of humans by machines in the workplace. Okay, that would also save us the stress of being workaholics, but would these machines also be responsible for their actions?

There has been a major debate about machines achieving self-awareness, and ethics is among the issues raised. Here's a very valid question: would a self-aware or conscious machine be regarded as a "person" under the law? And if it contravenes a predefined rule or even harms an individual, would it be liable and responsible for its actions? People have been calling for the creation of super-aware machines, but these questions have largely been left unanswered.

Beyond Self-awareness

It has long been believed that almost all the biological abilities of the human body can be simulated by technology (no wonder our bodies are often referred to as biological machines, implying that they can be simulated or replicated). That is also why it is believed that consciousness, or self-awareness, is a feature that technology can replicate.

[Image Source: Pixabay: CC0 licensed]

But there is more to consciousness than meets the eye. This includes, but is not limited to, the ability to perceive one's surroundings and to draw inferences from observing them. It also includes the ability to receive an entirely new set of information, store it, and retrieve it together with previously stored information in response to real-life scenarios that require cognitive processing in real time (mark the words "real time").

It does not stop there. Now let's consider the creative power of the mind, human behavioural patterns, and the sense of belonging and freedom that is inherent in humans. These relate to our affective domain, of which consciousness is a part. And remember, these characteristics are not simply the product of logical calculation. So with this in mind, we know what to expect from a "conscious machine".

We have now seen that cognitive ability is not the only thing reserved exclusively for the human brain; there are also affective and psychomotor abilities. But what advances have been made toward this goal? Let's take a look.

The Artificial Brain

This is also called the "artificial mind": any hardware or software endowed with cognitive abilities comparable to those of the human brain. You wouldn't be wrong to call it a "brain simulator" or "brain emulator".

First of all, various cognitive neuroscientists have carried out research to understand in detail how the human brain works. With this understanding, a simulation can be built. You may ask: how far have they gone? So far, one of the most ambitious efforts is AGI (Artificial General Intelligence), an extension of the AI we already know, which would enable machines to perform, with high precision, intellectual tasks that were previously the absolute prerogative of humans. The futurist Ray Kurzweil refers to this as "Strong AI", but you can also call it "Full AI".

But the question is: does this equate to consciousness? Judging by the explanation I gave earlier, you would agree with me that some areas are still found wanting. But no doubt, this is a move in the right direction.

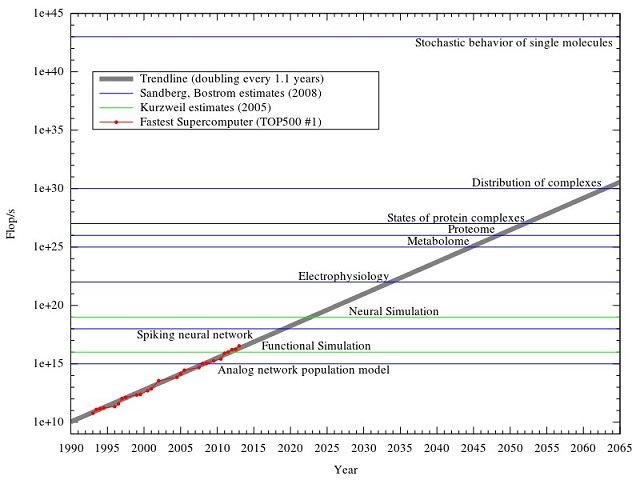

I should also point out that these machines would need considerable processing power to simulate the human brain effectively (remember, it has been estimated that the human brain can store up to 2.5 petabytes of data, not to mention the speed at which it processes information). In relation to this, here is an extrapolated chart of the processing power machines would require to simulate the human brain, as estimated by some futurists and proponents of emerging technology (Ray Kurzweil, Nick Bostrom, and Anders Sandberg).

[Image Source: Wikimedia Commons. Authors: Ray Kurzweil, Nick Bostrom, and Anders Sandberg; Tga.D. CC BY-SA 3.0]

From this chart, you can infer that by around 2060 an effective distribution of complexes would be achieved, which would no doubt be the next step toward realizing a truly conscious machine.

Conclusion

As technology advances, the boundaries of biology are being crossed. We have seen yet another boundary that is about to be crossed: "consciousness". Technology has created ways to hack and simulate the human biological body artificially. And given the current trend, we could have a fully conscious machine sooner than we imagine. Does this mean the future will favour technology over biology? Well, let's keep an open mind and watch what the future holds.

Thanks for reading

References for further reading: Ref1, Ref2, Ref3, Ref4, Ref5

All images are CC licensed and are linked to their sources

gif by @foundation

I definitely think we are just a step in evolution. We'll either be surpassed and destroyed by AI or bring doom upon ourselves in some other way (there are too many to count); human intelligence is limitless.

If an alien studied our planet, I'm sure it would conclude that it's a planet of tardigrades. They have been here far longer than we have, they are far more resilient, and they are everywhere. I don't see any connection between greater intelligence and greater resilience. So far, the dinosaurs have outlasted us by a long way.

There is a possibility of passing our crown to AI. What will happen next, we should learn from history and evolution.

I quite agree with you. Humanity could be lost to AI. But remember, there's also the possibility of merging with these AIs.

Thanks for dropping by

Good article. "Consciousness" may be the same as "self-awareness", but even that is not easy to define.

It would be very hard to prove one way or another whether or not a software program is "self-aware".

Throughout the history of mankind we have made easy, but often wrong, assumptions about the level of consciousness of animals and other life forms.

For example, trees are also very complex. Are they self-aware? The easy answer is no, but really if one comes to think of it, how could this be proven?

Very difficult indeed.

Well said. The precise definition of "self-awareness" still lies in a grey area, and this has made it difficult to simulate artificially. But one thing I believe is this: it is not impossible to simulate.

Self-awareness and consciousness are just human constructs that make us feel more important.

Trees are important for our own existence. The end goal for all is the same: multiplication. I see no difference between trees and humans from that point of view.

Very good post!

We can't stop the development! Exciting and frightening at the same time. I can easily imagine a "supercomputer" connecting various hardware across the planet to supply the computing power. Some projects like Golem, SONM and others are already live and paving the way in the cryptocurrency space on blockchain technology.

Welcome to Skynet!!!! ("Terminator" science fiction)

A quite interesting thought would be to "put/copy" human consciousness into a machine! Imagine we could live forever, exploring the whole universe without getting old.

By the way, there is sooo much going on in the tech sector, but my fuc... my laptop battery is still lithium-ion (early 1990s tech) and getting low! Instead of working on such big topics, why don't scientists develop a better power source first? Hahaha.

Inspiring post! Well done. Followed and upvoted.

That could be the dawn of the singularity, you know.

That's where we are heading. To me it sounds logical. Why shouldn't a superintelligent machine use the Internet? It will surely want to grow, expand, and learn, so it will most likely use all the data and information resources it can access, especially once it has consciousness!

The era of AI is around the corner.

Nice write-up, boss.

Lol. Not really AI now, but AC :D.

Thanks for dropping by

Until we can fully understand what consciousness is and prove otherwise, I think we have to assume that AC is inevitable. It certainly raises a number of ethical issues.

Great post @gprince I enjoyed it.

Gprince? Hope you haven't mistaken me for someone else?

Well, thanks for dropping by

In class the other day, we had a fascinating conversation with our professor about AI. The crux of his argument was this: if we build AIs at the very limits of our knowledge, meaning they are as "smart" as we can possibly make them, how do they evolve? His answer is that they evolve on their own, which would make them "smart" in a way we do not understand. This leads to AIs that evolve beyond human comprehension and are capable of doing things humans cannot. At that point, what is the role of humans? Are we even necessary?

Part of me wonders if this is the evolution of humanity? Neanderthals to Homo Sapiens to Cyborg Transhumanism?

Being A SteemStem Member