Why Is It So Hard to Make a Realistic Twitter Bot?

Twitter: the Internet’s town square, Procrastination Central, and the go-to place for level-headed political commentary. OK, maybe not that last part.

If you’ve spent more than ten seconds on Twitter, you’re probably more familiar with tweets like:

“Forget #Congress, this guy should be kicked out of the country! Save #America!”

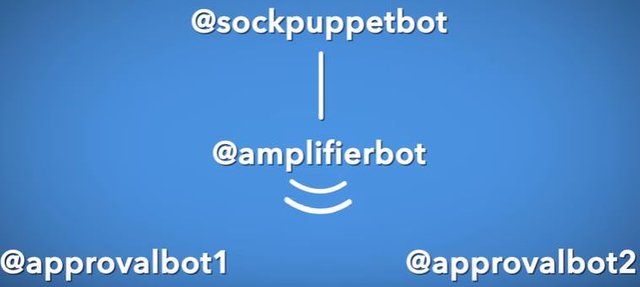

Fiery tweets can save or sink political campaigns and individuals’ reputations. But it’s not just humans tweeting. Thousands of messages like that example are blasted around the Twitterverse by bots, or automated accounts. Their prevalence, estimated at somewhere between 5 and 15% of active accounts, has stoked fears that conversations on the platform could be driven by mindless puppet machines. But how influential bots actually are is still a subject for research and debate.

What’s more striking about them from a technology standpoint is just how simplistic they are. See, chatting with you convincingly is still beyond the abilities of even the most advanced bots. So if one wants to pass as human, it has to rely on simple tricks, and on you not looking too closely.

The term “bot” covers a wide variety of automated activity, but they’re all just programs trying to accomplish some pre-set goal. There are plenty of benign ones providing simple services like earthquake information or unrolling threads. These accounts are pretty open about their botliness.

The more worrisome accounts are those that pretend to be human and coordinate to influence public discussions. On the front line are the sock puppets, accounts that parrot content from a hidden author. They’re controlled through software, but with humans pulling the strings. Amplifier bots then retweet and remix trolls’ and sock puppets’ posts for a wider audience. Approval bots bolster these tweets by liking, retweeting, or replying. And sometimes, attack bots engage directly with individual accounts to harass or discredit them.

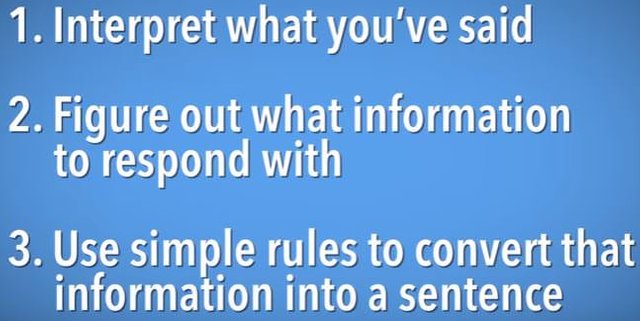

Now, despite spamming up your timeline, most bots are…well, slightly smarter than rocks, but about as dumb as a fax machine. They’re basically just short if/then flowcharts that either spit out lines humans have written, post links and retweets, or mash together fragments Mad Libs-style. Like, one might be programmed to scan Twitter for any tweet containing the word “corgi”. And if it finds one, it’ll reply with a cute dog GIF. That might sound difficult, but it actually just requires some basic programming skills.

If you prod a little, the fakers often give themselves away with behaviors like posting with inhuman frequency, using absurd numbers of hashtags, and promoting other bots. Despite their shallowness, though, influence bots can get surprising traction by liking and retweeting each other. They also piggyback on popular hashtags. It all works because they assume, usually correctly, that humans won’t look twice.

But for all those clever tricks, none of these bots are great conversationalists. In fact, most are totally incapable of any dialogue. That critical component of human-like interaction has proven very hard to automate, whether on Twitter or anywhere else. And it’s unlikely we’ll reach that milestone soon.

Right now, dialogue is easiest when the designer can predict what the bot will need to talk about, for example, customer complaints. In that case, the chatbot can follow a three-step cycle: First, interpret what you’ve said. Second, figure out what information to respond with, say, the departure time of your flight. And finally, use simple rules to convert that information into a sentence.
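
To make that three-step cycle concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the flight numbers, the `DEPARTURES` table, and the function names are hypothetical, and a real customer-service bot would query an actual airline system rather than a hard-coded dictionary.

```python
import re

# Hypothetical flight data, invented for this example.
DEPARTURES = {"UA100": "9:45 AM", "DL202": "1:30 PM"}


def interpret(message):
    """Step 1: interpret the message (here, just find a flight number)."""
    match = re.search(r"\b([A-Za-z]{2}\d{1,4})\b", message)
    return match.group(1).upper() if match else None


def look_up(flight):
    """Step 2: figure out what information to respond with."""
    return DEPARTURES.get(flight)


def to_sentence(flight, time):
    """Step 3: use simple rules to turn the information into a sentence."""
    if flight is None:
        return "Which flight are you asking about?"
    if time is None:
        return f"Sorry, I can't find flight {flight}."
    return f"Flight {flight} departs at {time}."


def reply(message):
    """Run the full interpret, look up, respond cycle for one message."""
    flight = interpret(message)
    return to_sentence(flight, look_up(flight))


print(reply("When does ua100 leave?"))
```

The sketch only works because the topic is known in advance: the "interpret" step can get away with hunting for a flight number, which is exactly the kind of shortcut that stops working once the conversation is open-ended.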

Many bots, though, are trying to converse more open-endedly, whether for entertainment, for political influence, or just to annoy you. But designers can’t script these interactions as thoroughly because there are just too many possibilities to code for. So, they have found a few ways around that. Sometimes bot builders craft templates for inane responses that rehash what you’ve said. This tactic was made famous by ELIZA, a 1960s psychotherapist chatbot. ELIZA reflected your statements back as questions, like “Does it bother you that you’re sad about your mother?” Another method is to fish through previous conversations for the most relevant responses humans have given in similar contexts. With enough experience, the bot can manage a few lines of coherence. And finally, a bot can try to learn correlations between words you’ve said and words it should say, effectively treating chatting like translation from one to the other.

The thing is, none of these methods work that well for open-ended conversation. That’s because, when we use language, we’re not just spewing words. We’re trying to express thoughts about the world. The computer, on the other hand, has no idea what it’s talking about. All it knows is patterns of words, with no understanding of how they correspond with reality. To participate in the infinite variety of human conversation, it would need to draw on experience with gravity, politics, sleep, and everything else we talk about. It’s a problem so complex that researchers joke it’s AI-complete, meaning you’d need to solve all of AI first.

But for now, the chattier bots do have some tricks to hide their linguistic incompetence. Some change the subject whenever they don’t know what to say. Others might ask lots of questions to avoid saying anything substantive. Most commonly, though, they resort to the Wizard-of-Oz trick: If a bot gets out of its depth, a human swoops in and takes over to maintain the illusion that they were there the whole time.
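
The ELIZA-style template tactic mentioned above can be sketched in a few lines of Python. This is an illustration only, not ELIZA’s original script: the `RULES`, the `REFLECTIONS` table, and the fallback line are all assumptions invented for the example. It shows how a bot can turn your statement into a question without understanding a word of it.

```python
import re

# Swap first-person words for second-person ones so the echo reads naturally.
REFLECTIONS = {"i": "you", "i'm": "you're", "my": "your", "me": "you", "am": "are"}

# (pattern, template) pairs; hypothetical rules written for this sketch.
RULES = [
    (re.compile(r"\bi(?: am|'m) (.+)", re.IGNORECASE),
     "Does it bother you that you're {}?"),
    (re.compile(r"\bi feel (.+)", re.IGNORECASE),
     "Why do you feel {}?"),
]


def reflect(fragment):
    """Rewrite a fragment from the speaker's point of view to the bot's."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement):
    """Return the first matching template, or a stock line if nothing fits."""
    text = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return "Tell me more."


print(respond("I'm sad about my mother."))
```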

So if you spot a bot on Twitter, try asking it a simple question, like whether a shoe box is bigger than Mount Everest. It probably won’t answer intelligibly, but if it does, you can safely assume there’s a human behind the scenes. And ultimately, that’s a nice reminder of who’s really responsible for what happens on social media. Because no matter how many bots there are… it’s still people running the show.