Intel Movidius - Machine Learning Is On Steroids

Have you ever heard of people talking about machine learning or seen advertising for yet another smart device (such as: iPhone X, Huawei P20, Mate 10, LG ThinQ, LG TVs), but you only have a pale idea about the meaning of the term and wonder how something that just needed supercomputers, suddenly working in consumer electronics? Are you tired of nodding and pretending that you know what's going on and what does the term mean? Time to change it!

Machine learning is based on the concept of existing basic algorithms that show interesting characteristics of a given set of data, but without the need to write a computer code specific to a specific task. Instead of writing the code, you provide input data from the basic algorithm and create your own logic that uses this data.

As an example, we can use the classification algorithm. It divides data into different groups. Without changing a single line of code, the same classification algorithm can be used to recognize handwritten numbers or to move an e-mail message to a spam folder or leave it in your inbox. All you need to do is provide other learning data, and the algorithm will provide a different grouping logic for the data set.

Machine learning algorithms are considered in two categories. These are teaching methods with supervision and without supervision. There is a simple but very important difference between them.

Suppose you are a real estate agent. Your business is growing, so you hire a group of interns to help. But you have one problem, because you just have to take a look, and you already have a good idea of how much a property costs, while your helpers because of lack of experience can not make a proper valuation.

So you decide to write a program that will help trainees valuate the value of houses in your area (and you - leave earlier for the holidays). In this program you will take into account the prices of similar properties and such features as the size of the building and the district in which it is located.

To do this, you collect data for three months: you register all information about the sale of houses in your city. With each transaction you note the number of rooms, area, description of the district and, most importantly, the sale price. These are learning data for "training" / machine learning.

This data will be used to teach price prediction for other homes. This teaching is called supervised learning. It consists in the fact that you know the final answer, that is the price. The program allowed you to enter known house data together with the price and you have learned the logic of these valuations with the input data collected.

To build a program, you must enter learning data into the machine learning algorithm. This algorithm tries to estimate which mathematical operations must be performed in order for the results to be correct. It's a bit like a math test with cleared arithmetic symbols in the actions for which you have the answer key.

In teaching with supervision, you let the computer guess these relationships. Once you find out what mathematical activities were needed to solve this specific type of task, you can solve similar ones!

Teaching without supervision

Let's return to the original example with a real estate agent. Let's think what would happen if you did not know the end price of each house sold. The only data you have as an agent is the size, position, etc. These algorithms can make really interesting operations. This is what we call machine learning without supervision.

This is similar to the situation in which someone gives you a number written on a piece of paper and says: "I have no idea what these numbers mean, but maybe you can help me guess if there is a pattern or set of data here. Good luck!".

What can you do with such data? At the beginning, you can have an algorithm that automatically decrypts various market segments from your data. You may find out that the buyers of houses in the vicinity of the local university choose small buildings with fewer rooms, and residents of the suburbs prefer those with three large-size bedrooms (or at least four-room ones)? Knowing the different market segments represented by various clients will help you focus your marketing efforts.

Another interesting use of the algorithm would be automatic detection of real estate with unusual features, which are particularly different from the rest. Perhaps you will learn that these unique homes are huge residences. So you can concentrate on them and send your best helpers who will be able to achieve higher income.

It's great, but is predicting the price of a house being sold really considered "learning"? A person has a brain that, when faced with most situations, is able to adapt to them, even if there are no exact instructions.

A man who sells real estate will instinctively feel and will have a "nose" about the right price in a given market, the best marketing strategy, the type of customer that the house will be interested in, etc. The purpose of research on artificial intelligence is the ability to replicate this behavior.

Existing machine learning algorithms are not yet good enough to behave as multifacetedly as the human brain. They work well if they focus on a limited task. So in our case, instead of the term "teaching" it will be more accurate to "solve equations" to solve some task based on the sample data provided to the system.

Unfortunately, the "machine recognition of what equation solves a specific task based on certain example data and results" is a rather unfortunate name, hence it is briefly referred to as machine learning or machine learning.

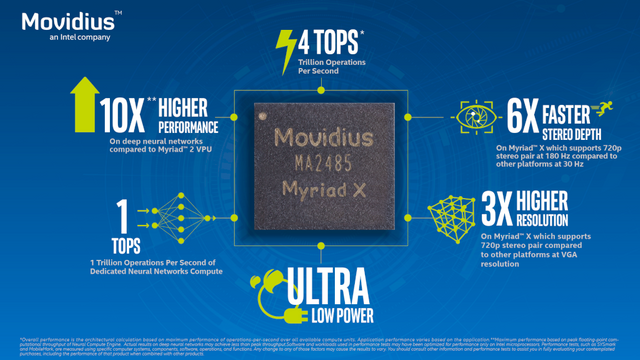

Intel Movidius - inference, specification, properties

Of course, when you read it in 50 years and there are already developed algorithms for strong artificial intelligence, this entire guide will seem rather outdated. However, one of the biggest successes in the SI arena for now is simulation of the brains of animals using supercomputers (an example of IBM and the cat's brain). Undoubtedly, this process requires unimaginable computing resources, which is why supercomputers are still required to train and then to solve queries. For example, Chinese Baidu speech recognition models require not only 4 terabytes of training data, but also 20 computational exaflops, or 20 billion mathematical operations - throughout the training cycle. Try to run it on your smartphone. This is where the inference comes in!

A properly trained neural network is basically a comprehensive database. If anyone intends to use all this training in the real world, let them know that this is unrealistic. What we need is an application that can quickly apply a trained model on specific data that this model has never seen, and instantly return results, often in near-real time. This process is just an inference, inference: downloading smaller batches of real data and returning quickly with the correct answer (in fact, predicting that something is correct or not).

Although this is a relatively new area in the field of computer science, there are already two main approaches to modifying neural networks for speed and latency in applications that run on other networks.

The first approach examines which fragments are not used or are rarely used. It gives you the opportunity to clean the network (so-called Pruning). Of course, in few cases it is paid for with a small loss of prediction precision (generally no more than 1-4%).

The second approach is looking for ways to connect different layers of network to one efficient computing step. It also reduces the number of weights, and hence - the number of parameters and features that need to be passed to the network. It is an approach similar to compression. Graphic designers can work on their huge, beautiful images with a width of even a million pixels, but when they go online, they will change them into JPEG files, which will significantly reduce their size without great loss of readability. They will be almost identical to the original, indistinguishable from the human eye, but with a lower resolution and lossy compression. Similarly, in the case of inference, we get almost the same accuracy of the forecast, but simplified, compressed and optimized in terms of performance of the runtime environment.

Secondly - and perhaps more importantly - inferences can be equated with a function call on an optimized network. There is no problem if the in-training computer does not need anything more, but in autonomous cars, monitoring, image recognition, mobile phones, etc. there are numerous problems. Naturally, despite the introduction of the above-mentioned optimization, it is not possible for the neural network to work on a mobile device. It is necessary to transfer data to servers operating in the cloud, so that they will process the data and return the response.

Intel Movidius

Imagine that our network is supposed to perform face recognition, popular recently in smartphones thanks to the iPhone X. In theory, we could send to the servers in the cloud the entire image from the camera and process it on servers, but it is not a good idea, if only because on the speed of mobile internet, coverage or data transmission fees. In practice, this is done in such a way that the image is pre-processed on a mobile device, and only a list of numeric parameters is passed through the network.

It sounds simple, but it is not. Our example of face recognition means de facto transferring millions of parameters, and several times per second - preferably 60 times, to ensure smoothness. The next factor is the load on the processor, and hence - shortening the operating time on the battery power supply.

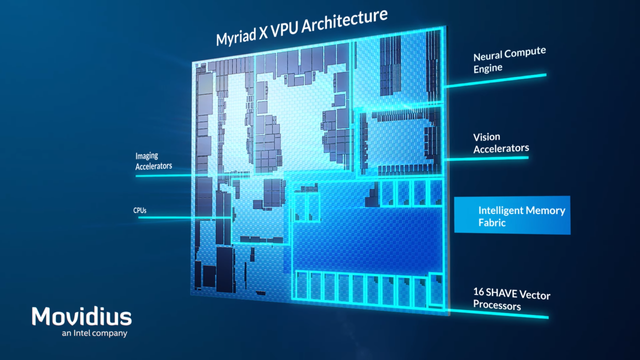

In summary, this process should last for milliseconds, save energy and often perform, 60 or more times per second. Of course, general purpose processors are able to do this, but they do it inefficiently, slowly. That is why special computing units arise, the architecture of which is adapted to specific operations, performing the task in a timely manner, but useless in other applications (similar to ASICs designed for extracting cryptocurrencies).

Thus, specialized units are created, such as the layout in the iPhone X supporting facial recognition and the Intel Movidius device, which we write about in this article.

The basic technical specifications:

- Processor: Intel Movidius VPU

- Built-in support of machine learning platforms: TensorFlow, Caffe

- Connectors: USB 3.0 Type A

- Dimensions: 72.5 mm × 27 mm × 14 mm

- Working temperature: 0-40 ° C

- Hardware requirements: x86_64 class computer with Ubuntu 16.04 or Raspberry Pi 3 B, or VirtualBox virtual machine with Ubuntu 16.04

- Operational memory: 1 GB

- Storage medium: 4 GB.

The advantages of Movidius can certainly include an attractive price, low energy consumption, promised performance, connection with Python 3 and popular machine learning platforms, Raspberry Pi support, good documentation and an open source form. On the other hand, there are no operating systems other than Ubuntu. It is not the only available solution of this type. Before buying it is worth looking at the Fathom Neural Compute Stick model, which also uses the Myriad V2 chip and is longer on the market, as well as Laceli AI Compute Stick, which is a newer device and more efficient, though without the algorithmic facilities for optimizing the network.

If you liked this post - Please resteem it and share good content with others!

Support My Work.

Bitcoin : 1FqpgzPScTn265f1G4YbajCMFamhKeqBJq

Litecoin : LMnVEPNgmdV26oAnwTEHs42Chd1Ci37nG2

Dash : XrGprbs2hiGPja4mYpKo7urew4YXDvrAmC

Resteemed by @resteembot! Good Luck!

Check @resteembot's introduction post or the other great posts I already resteemed.

I had never heard of Intel Movidius until I read this post. Well written and

excellent research.