Everything we do has an impact for the rest of our lives? Decision rules and decision trees

The decision theory

IPWAI [CC BY-SA 3.0 (https://creativecommons.org/licenses/by-sa/3.0)]

This post is about my thoughts on decision theory. It was inspired by a conversation I had elsewhere on the Internet, in which I stated that the quality of my decisions depends on the quality of the information I have access to. Of course there could be more to it, but for the most part my ability to scale up my decision-making capacity is limited by the resources I have or had at the time. This applies not just to a human being asked to make a decision but to any computer that must also arrive at a decision from the information it has access to.

One problem with the Internet is that while it gives us access to a lot of information, it does not necessarily filter that information by quality. As a result we can easily be deceived by false information, also known as disinformation. This disinformation can even include scientific papers whose results simply cannot be reproduced, or bad advice we receive from people who we think know more about a subject than we do. Bad information results in bad decision making, and my hypothesis is that this is the primary limiting factor which distinguishes between what society deems "good" and "bad" persons.

A perfect example? Medical research papers contain a lot of interesting results which are relevant in a certain context. The results are correct, but if you as the patient go down the rabbit hole you will find yourself flooded with data. Diagnosing from these results is a matter of relying on the decision algorithms which doctors tend to use to arrive at a diagnosis. This is a sort of logical deduction process in which, by excluding possibilities over a series of results, they eventually get an answer (a decision or diagnosis).
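The process of deduction by exclusion can be sketched in a few lines of Python. This is only an illustration of the idea, not how any real diagnostic tool works: the condition names, the test names, and the expected findings are all hypothetical placeholders rather than medical data.

```python
# Hypothetical candidates and the finding each one would produce.
# These are placeholders for illustration, not medical data.
CANDIDATES = {
    "condition_a": {"fever": True, "rash": True},
    "condition_b": {"fever": True, "rash": False},
    "condition_c": {"fever": False, "rash": False},
}


def rule_out(candidates, test_name, result):
    """Keep only the candidates consistent with one observed test result."""
    return {
        name: expected
        for name, expected in candidates.items()
        # A candidate with no expectation for this test is never excluded by it.
        if expected.get(test_name, result) == result
    }


def diagnose(candidates, observations):
    """Apply observations one at a time, stopping once at most one candidate is left."""
    remaining = dict(candidates)
    for test_name, result in observations:
        remaining = rule_out(remaining, test_name, result)
        if len(remaining) <= 1:
            break
    return sorted(remaining)


print(diagnose(CANDIDATES, [("fever", True), ("rash", False)]))
```

Each observation shrinks the set of candidates, which is the same narrowing-down a decision tree performs when it follows one branch per answer.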

Doctors also rely on what is known as an index of suspicion. This sort of calculation is based on current or known statistics. For example, certain illnesses occur with known probabilities, and this can inform which diagnostic tests make the most sense. A high index of suspicion means that a diagnosis of a particular illness is strongly probable, but we have to keep in mind that medical decision making is often about measuring and comparing risks. The choice to give one drug rather than another is based on the known risk factors of the drug and the particular risks it brings to that patient given their history. In this way the doctor who knows the patient can figure out, for example, that if a patient has known acid reflux it might not be the best idea to give an NSAID (nonsteroidal anti-inflammatory drug), because of the well-known possible side effect that it can cause ulcers.
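One way to make the role of statistics concrete is Bayes' rule, which updates a starting probability once a test result comes in. The sketch below is my own illustration of that idea and is not a clinical tool: the function name is hypothetical, and the prevalence, sensitivity, and specificity figures are made-up numbers chosen only to show the effect.

```python
def post_test_probability(pre_test, sensitivity, specificity, positive=True):
    """Bayes' rule for a binary test: probability of illness after seeing the result."""
    if positive:
        true_pos = pre_test * sensitivity
        false_pos = (1 - pre_test) * (1 - specificity)
        return true_pos / (true_pos + false_pos)
    false_neg = pre_test * (1 - sensitivity)
    true_neg = (1 - pre_test) * specificity
    return false_neg / (false_neg + true_neg)


# Same hypothetical test (90% sensitive, 90% specific), two different starting suspicions.
low_suspicion = post_test_probability(0.01, 0.90, 0.90)
high_suspicion = post_test_probability(0.30, 0.90, 0.90)
print(round(low_suspicion, 3), round(high_suspicion, 3))
```

With these made-up numbers a positive result means roughly an 8% chance of illness when the starting suspicion is 1%, but roughly 79% when it is 30%. The same test is far more informative when the index of suspicion is already high, which is why the statistics help decide which tests are worth running.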

People are not like robots

When people make decisions they do so under the influence of their emotions, or of something as simple as lack of sleep. A person who usually makes good decisions but did not sleep well could forget things they learned in the past. A sleep-deprived person who is expected to make very high quality decisions is limited by their biology. People are not robots, and the biological capacity to make high quality decisions is limited by what is known as decision fatigue.

Decision fatigue is one of the main reasons why people make bad decisions even with the best of intentions. If, for example, I'm required to make 1000 critical decisions a day, every day, for months at a time, sooner or later I'm going to develop decision fatigue. This is where resource limitations begin to make a big difference, and my hypothesis is that people who are poor often make poor decisions because they have fewer resources to offset the decision fatigue. The clickbait aspect of the title of this post is the question: does everything we do have an impact for the rest of our lives?

Suppose it is true that everything we do has a lifelong impact. The person who has the resources to delegate more of their decisions to others has a greater capacity to avoid decision fatigue. The ability to delegate decisions is not equally distributed in society. People with the resources to delegate more of their decisions have an advantage, provided the persons they delegate to are competent enough to make high quality decisions. For example, if you do not have to decide what your meal plan should be because you can afford a nutritionist who prepares all your meals for you, then you've avoided a lot of decision fatigue on this one matter alone. A person who doesn't have this luxury has to make all of these decisions for themselves.

Having a lot of choice is a good thing, but I also expect a correlation: the more choices you give a decision maker, the more difficult it is to make a high quality decision. For example, if we have a store with a menu offering only two choices of what to eat, and we label those two choices "healthy" and "unhealthy", with the nutritional values proving that the healthy choice supplies all the nutrition a person needs to maintain health, then shouldn't it be quite easy for the health-oriented person to make the right choice? On the other hand, if you have a supermarket with thousands of choices, with nutrition labels in small print, and with thousands of research papers acting as data points on what is or isn't currently considered healthy by the nutritional and dietary establishment, the choice becomes computationally expensive for the typical person.

In my opinion based on this example I could make a controversial claim. Perhaps obesity isn't because the person is morally a "bad person"? Perhaps obesity is simply the result of bad choices. Perhaps those bad choices are the result of decision fatigue in a lot of people who simply are overwhelmed with information on what is or isn't healthy or confused by bad labeling, or they simply are asked to choose what to eat on a daily basis until eventually their internal constitution (discipline) wears down. In this environment because there are so many bad choices it becomes a challenge to filter out the bad choices to find the good and this challenge is the source of the decision fatigue.

The solution could be to delegate decisions to algorithms

If you assume that the typical person in this world has the desire to remain healthy then by this same assumption you can make a similar case that a typical person will want to make high quality decisions all the way around. What exactly do I mean when I make some controversial seemingly futuristic statement such as decisions should be delegated to algorithms?

Let's look at how nutritionists and dietitians make food choices and, to start, discuss the actual purpose of food. Human beings eat primarily to stay alive. If you do not eat you simply cannot live for very long, which means getting nutrition is a requirement for survival. From here humans have different values: some value the enjoyment they get from eating, while others are more focused on the health benefits the fuel (calories) provides. The ability to reach a decision depends ultimately, in my opinion, on the values of the decision maker.

Suppose you care mostly about staying healthy. In this context what you eat has to benefit your health. A simple cost vs benefit analysis algorithm could help you determine what to eat and what not to eat. The cost benefit analysis could include factors such as nutrient density, the impact on the microbiome, digestibility, storage cost, preparation cost, and more. All of these little calculations are taxing, but if the goal is to be healthy then these data points have to be studied and tracked. From these data points a list of the top foods can be produced, and the foods on that list become "healthy".
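As a rough sketch of what such a cost vs benefit algorithm might look like, the Python below scores each food as weighted benefits minus weighted costs and keeps the top of the list. Everything specific here is an assumption made for illustration: the `Food` fields cover only four of the factors mentioned above, the 0 to 1 scales and the weights are arbitrary, and the foods are placeholders with made-up values.

```python
from dataclasses import dataclass


@dataclass
class Food:
    name: str
    nutrient_density: float   # benefit, hypothetical 0..1 scale
    digestibility: float      # benefit, hypothetical 0..1 scale
    storage_cost: float       # cost, hypothetical 0..1 scale
    preparation_cost: float   # cost, hypothetical 0..1 scale


# Arbitrary weights; a real decision maker would set these from their own values.
WEIGHTS = {
    "nutrient_density": 2.0,
    "digestibility": 1.0,
    "storage_cost": 0.5,
    "preparation_cost": 1.0,
}


def score(food, weights=WEIGHTS):
    """Weighted benefits minus weighted costs; higher is better."""
    benefit = (weights["nutrient_density"] * food.nutrient_density
               + weights["digestibility"] * food.digestibility)
    cost = (weights["storage_cost"] * food.storage_cost
            + weights["preparation_cost"] * food.preparation_cost)
    return benefit - cost


def top_foods(foods, n=2):
    """Return the n best-scoring foods, best first."""
    return sorted(foods, key=score, reverse=True)[:n]


# Placeholder foods with made-up values.
foods = [
    Food("food_a", 0.9, 0.8, 0.2, 0.3),
    Food("food_b", 0.3, 0.9, 0.1, 0.1),
    Food("food_c", 0.7, 0.5, 0.6, 0.8),
]
print([f.name for f in top_foods(foods)])
```

The weights are where the values of the decision maker enter: someone who cares more about convenience than nutrient density would raise the cost weights and get a different list.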

It's of course not even that simple because we don't all have equal ability to digest food. If you have food allergies, if you have irritable bowel, if you have any abnormality, you now have to factor in stuff like FODMAP and the kind of fiber in the food. Then of course there is the amount of energy density of the food which determines the rate at which a person can put on or take off weight. If a person is trying to gain healthy weight then the energy sources (calories) must come from healthy sources and the person if they are an adult will likely have to lift weights to make sure the weight is mostly muscle.

As you can see, just managing nutrition requires a lot of thought and calculation. For this reason the idea that we can delegate our nutrition management to an algorithm begins to make sense. We don't have to decide what to eat because our meal planner app can do it for us. We don't have to decide when to work out and how much because our fitness app becomes our personal trainer. These of course are luxuries which the very poor do not have, as they may not have access to these algorithms to act as their nutritionist, their coach, their health maintenance, etc. Yet the decisions about what we put into our bodies and how we use them are among the most important and critical decisions we have to make in our lives.

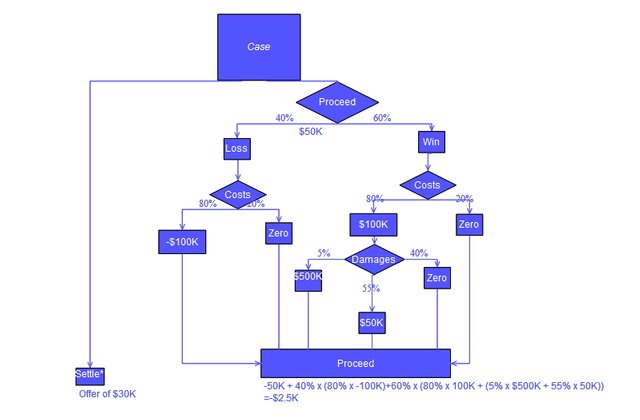

As you can see, most decisions are made on the basis of cost benefit analysis. This can be represented as a ratio where the better decision is the one with the most benefit for the least cost. In ethics this would mean getting the most gain while causing the least harm. This alone is enough to create pro and con lists, to simply weigh every option against every other option, to essentially use an algorithm to compare. The problem? The human brain isn't as good at running a sorting algorithm as a CPU is, and a CPU can compare 100,000 choices in seconds without ever developing any sort of fatigue. The worst case scenario is that your smart phone runs out of juice or overheats, but it's not going to completely bug out from lack of sleep like a human.
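To illustrate the ratio idea, here is a minimal Python sketch that picks the option with the highest benefit-to-cost ratio out of 100,000 randomly generated options. The option fields and the random values are assumptions made purely for the demonstration, and costs are kept above zero so the ratio is always defined.

```python
import random


def best_option(options):
    """Return the option with the highest benefit-to-cost ratio (costs must be > 0)."""
    return max(options, key=lambda option: option["benefit"] / option["cost"])


# Generate 100,000 hypothetical options with a fixed seed so runs are repeatable.
rng = random.Random(0)
options = [
    {"id": i, "benefit": rng.uniform(0, 10), "cost": rng.uniform(0.1, 10)}
    for i in range(100_000)
]

choice = best_option(options)
print(choice["id"], round(choice["benefit"] / choice["cost"], 2))
```

Finding the single best option does not even require a full sort; one pass over the list is enough. A sort only becomes necessary when you want the whole ranking, such as a top ten.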

Why do I bring all of this up in the context of Tau? I'm involved with the Tau project because I believe it can provide an answer to these sorts of problems. Decision support, or decision amplification as I envision it, is simply a matter of delegating an increasing number of decisions to algorithms when there are no qualified humans available for us to delegate to. People who aren't born into a wealthy family or a very wealthy social situation will likely have to rely much more on these algorithms, because they do not have a personal chef, a personal trainer, a fitness instructor, and an unlimited number of trusted experts to turn to for help with all these little decisions.

Ethical decision making also relies primarily on having trusted experts around you. These could be mentors, parents, or whomever, but a lot of people simply don't have people to turn to. Usually people recommend a psychiatrist to those who seek advice, but the problem is that a psychiatrist isn't really an expert in the particular problem area which needs to be solved. A psychiatrist can help a person better understand and manage their own mind, but this will not necessarily help that person make more ethical or higher quality decisions over time. It may help some people, but a psychiatrist will not be able to help a person navigate a legal landscape any better than a doctor or fitness coach can help a person choose an investment.

Agoras promises to connect both people and machines, which I think is a great idea. The ability to delegate to algorithms is, in my opinion, a main use case. If for example you were to ask Siri to help you decide whether you should remain someone's friend, could Siri answer this question? What about Cortana? Would these even be private enough for people to feel comfortable asking these sorts of questions? Until digital assistants can help with the ethical dilemmas that people face, I don't think they will have much impact on ethical decision making.

I do think Tauchain will someday reach a point where people can ask their digital assistant for a solution to an ethical or legal dilemma. When it gets to that point it will, in my opinion, be able to have a major impact on the problem of decision fatigue. If I can ask the computer to create the perfect diet for me without having to spend many, many hours creating spreadsheets and doing research into nutritional science, medicine, health, etc., then there are fewer decisions I have to make, less time I have to spend consumed by the many small yet critical decisions, and more time I can spend on bigger yet just as critical decisions.

Conclusion

A person who is poor and has limited Internet access, for instance only through a smart phone and only at certain times of day, has to fit more research into less time. Their decisions may also be much harder, as every decision they make could seem critical. Of course we know that people who are making really tough decisions still want to watch television, listen to music, enjoy good food, dance, and experience the better sides of life. Realistically, though, the point of this article is to show that the cost of making high quality decisions consistently is prohibitively high: it does not scale once the number of decisions goes beyond the capacity of the individual to cope with. Delegating to algorithms, applying computers, is a means of automating decision making as a stopgap mechanism to avoid decision fatigue, just as a gambler can bet according to some internal algorithm or let their emotions dictate.

People will still make bad decisions, no doubt. The idea is that if you increase the capacity of the average person to make high quality decisions, you allow people who are trying to make increasingly better decisions to do so. This is not going to prevent people who intentionally want to harm others (or themselves) from doing that. Any technology which can be used to help people can also be weaponized by people who seek only to hurt others or themselves. Fire allows us to cook our food but also allowed for the burning of the Library of Alexandria.


We have delegated some heavy lifting to diggers and other machines, time to delegate thinking ;) Which is what slowly but surely we have started to do in the XXI century (how often do we decide the route or leave it to navigation software?).

We try to make the best decisions based on available information and our internal state. Thus we can argue that we always make the best decisions possible.

The Universe seems to be deterministic, maybe with a pinch of randomness, which leaves no space for will and decisions. Do we make any decisions at all, or are we just observers experiencing the illusion of making decisions?

An algorithm is only as good as the person who is "programming" it or setting the rules. Even the most sophisticated AI needs some rules or parameters according to which success is measured, right?

A personal algorithm just for you "to create the perfect diet without having to spend many many hours creating spreadsheets, doing research into nutritional science, medicine, health, etc," - wouldn't that require an equally lengthy period of programming/learning before a meaningful output can be expected?

Here is a hint: the best algorithms don't come from the mind of the programmers. The best algorithms come from nature itself. When I'm looking for an algorithm, I don't decide in a vacuum what it should be. I might have a hypothesis or an idea initially, but the algorithm forms itself through repeated cycles of improvement and measurement, so that over time you arrive at an algorithm which is far more efficient than what your brain could come up with.

Algorithms use the latest data or knowledge to improve themselves. The programmer merely types in what they discovered. Of course you can also say math is invented not discovered and that it's all abstract from the mind of the programmer and this wouldn't necessarily be wrong. I just think it's math and math can be checked by anybody to see if it's correct or incorrect.

The data exists. It's just not being used to improve the diet of the individual. Lots of data exists about what you like to eat, what your blood test results were, what your body composition is, and what your imaging test results are, such as your CIMT, CT, ultrasound, etc.

So the data is out there and you can use that data to for example say that your arteries are 10 years younger than your numerical age or 10 years older than your numerical age. And if you combine all data points you might even be able to estimate the biological age. This is implying that your age is something measurable rather than an arbitrary number.

Rules are needed, but the good news is that most of the rules and formulas are public knowledge already. I can give you lists of formulas where you plug certain data in and get different estimates out. The problem is having to do it manually. A personal trainer knows these formulas, but if you have to build your own spreadsheets and track everything yourself it just takes more time. AI could track the numbers: just wear your Fitbit or your wearable plus app, and it will track certain numbers on its own. Those numbers go into different equations and formulas, and then the output is beautified into what you'd think of as AI, but it's really just a bunch of number crunching and easy stuff.
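To give a sense of how simple this number crunching can be, here is a small Python sketch of two widely published formulas. I am not saying these are the specific formulas a given trainer or app uses; they are picked only as examples, and the sample inputs are hypothetical. The first is the body mass index (weight in kilograms divided by height in metres squared) and the second is the Mifflin-St Jeor estimate of basal metabolic rate.

```python
def bmi(weight_kg, height_m):
    """Body mass index: weight divided by height squared."""
    return weight_kg / height_m ** 2


def bmr_mifflin_st_jeor(weight_kg, height_cm, age_years, male):
    """Mifflin-St Jeor estimate of basal metabolic rate in kcal per day."""
    base = 10 * weight_kg + 6.25 * height_cm - 5 * age_years
    return base + 5 if male else base - 161


# Hypothetical person: 70 kg, 175 cm, 30 years old.
print(round(bmi(70, 1.75), 1))
print(bmr_mifflin_st_jeor(70, 175, 30, male=True))
```

A wearable or app only has to supply the inputs. The formulas themselves are a few lines each, which is why doing this by hand in a spreadsheet costs time rather than expertise.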

More sophisticated AI can do much more and automate more, but it depends on the size of the knowledge base. For example a knowledge base with a lot of knowledge about medicine can act like an expert system if it has things set up right and you ask the right questions. This sort of AI already exists if you search online, but it's just not being used in a way where anyone can access this sort of thing and use it for their sorts of problems. There isn't any AI for example to help most people with moral or social dilemmas.

As in my example, if you ask any personal assistant if you should be friends with a certain person it will not help you. You're suddenly completely on your own here. But it will tell you where to go eat? It will tell you how to get there?

This phrase has reminded me of something that I think Umberto Eco said about the fact that Social Networks on the Internet had given greater visibility and democratized a lot of idiots.

I do not believe that this author was absolutely against the networks, but I do accept that with the "liberal economy of ideas" came a certain production and dissemination of opinions and ideas of very doubtful quality or reliability. That is what I think he refers to when he calls idiots those who generate these things and cause noise in the flow of knowledge...

Yet I don't talk down to the crowd. I think the design of the network and the incentives does not encourage wisdom. In other words the ignorance is not voluntary. If you wanted to not be an idiot but all of the Internet, its information flows, advertising, disinfo campaigns, encourage you to be an idiot, to become more ignorant over time, well then what else should society expect?

In other words the ignorance is being generated by the mechanism design. The problem isn't the outcome (ignorant people spewing bad ideas), the problem is why encourage ignorant idea propagation?

For example, is there any automated fact checking on any of the major social media platforms? No. They seem fine letting people be ignorant if it's favorable to their interests. You don't see fact checking, yet you see posts censored for violating community standards? So they can pay for moderation, for censorship, but not for automated fact checking? Why give priority to one and not the other?

If or better yet, when I am ignorant, I want to discover the source of my ignorance. The source of my ignorance is low quality information. This problem of ignorance is similar to the problem of obesity, where people over time have to learn how to filter out bad foods, it's the same with bad information.

The problem is encouraged ignorance and involuntary ignorance. Sort of like the person who does not know how to read who gets blamed for not having access to books except the bible. If a person does not have access to books they will have a difficult time teaching themselves to read and even if they learn to read they will have a difficult time developing a large vocabulary if they only have one book.

Google gives access to the results of science. The problem is there is bad science out there. That is to say a lot of studies which look interesting but which cannot be replicated or haven't been replicated. A lot of studies on mice for example which never have been tried in humans, but this doesn't stop a supplement company from using the mouse model to sell the supplement as somehow possibly being beneficial to humans. The humans essentially are taking part in a trial by buying and using the supplement.

My point is, facts need to be checked, science papers have to be reviewed, logic has to be mapped, and there is no way around this. This is why I like Tauchain because at least you get the logic mapped so that completely contradictory ideas are identified. It still will not be enough because which facts are going to be the official facts? Some will be able to use Tau or any platform with any source of authority they choose and they could for example say only the facts which agree with their religious beliefs are the true facts. Automatic fact checking only would work if you all agree on the source of authority for what is or isn't true. If you believe God is true, and you believe the word of God is in the scripture, then why would you let some less true facts get in the way of ultimate truth? It's a question of how people prioritize facts as well.