RE: As a rule of thumb, the optimal voting time is before 5 minutes

I think it makes more sense to think of it as scaling down rather than amplifying (the amplification is generally less than 1.0).

That's what I thought, too, but now I don't think so. Here's the example from the linked post:

Alice has 10 rshares which are augmented by the rewards curve to be 33 rshares.

Bob has 5 rshares which are augmented by the rewards curve to be 12 rshares.

Charlie has 20 rshares which are augmented by the rewards curve to be 80 rshares.

All three were scaled up.

As to discouraging Sybil accounts:

This was the stated goal, but...

I agree with you here. I never paid attention at this level of detail before. I just took the claim at face value. But, it seems to me now that this would have had the opposite effect (if any). Basically, a large enough stakeholder can get nearly 5x their voted rshares with a single account or with multiple accounts splitting up their stake.

It also implies that - up to some point - smaller accounts are better off voting at 100% than at smaller percentages. I had never really understood that before. 4x5 at the above numbers would give 48 rshares, whereas 20 gives 80.
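For anyone who wants to check the arithmetic: the numbers above are consistent with the example curve N^2/(1 + N/5) that comes up later in this thread, truncated to whole rshares. The truncation, the function name `example_curve`, and reading "4x5" as four separate 5-rshare votes that are each curved on their own are my assumptions, so treat this as a rough sketch rather than what the chain actually does.

```python
def example_curve(rshares: int) -> int:
    """Example curve N^2 / (1 + N/5), truncated to an integer (assumed)."""
    # N^2 / (1 + N/5) is the same as 5 * N^2 / (5 + N); multiplying first
    # keeps the integer division from throwing away precision too early.
    return (5 * rshares * rshares) // (5 + rshares)


# The three voters from the example.
for name, n in [("Alice", 10), ("Bob", 5), ("Charlie", 20)]:
    print(f"{name}: {n} rshares -> {example_curve(n)}")

# One 20-rshare vote versus four 5-rshare votes, each curved separately.
one_full_vote = example_curve(20)
four_small_votes = 4 * example_curve(5)
print(f"1 x 20 -> {one_full_vote}, 4 x 5 -> {four_small_votes}")
```

Under those assumptions the script reproduces 33, 12, and 80, and the 48 vs. 80 comparison.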

(Updated. Ignore the previous version of this comment. Too many graphs open at once.)

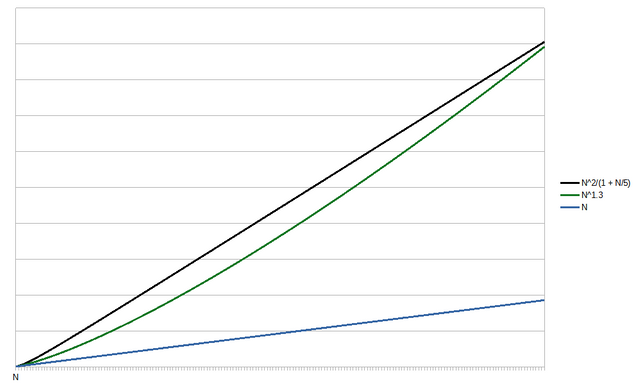

It seems to me now that something like N^1.k would have been far better at discouraging Sybil attacks while still addressing the N^2 inequality problems. For example:

By increasing the value for k, you can make the lines cross at any value that seems reasonable.

All of this is irrelevant to the argument about vote timing, though, since it doesn't depend on the rewards curve.

If I'm reading the code right, for author rewards, it looks like the curve is:

where s is:

My recollection from when I looked into this a few years ago was that rewards get zonked pretty hard until they get somewhere in the $5 range (not sure how accurate that recollection is, or if it would be the same today).

It looks like the reward curve for curation might be "convergent square root" rather than the convergent linear curve, though.

ok. I guess there are two things going on.

N^2/(1 + N/5) was an example, not the formula that they implemented (don't ask me why he used an example that wouldn't be implemented).

n^2 / (n + 1).

Here it is before converging:

And here it is after converging:

(both with log scales on the X axis)

I'll update the original post tomorrow. Out of time for tonight.

I may look into things in more depth today and possibly do a more involved writeup on this topic, but I was poking around and found an old script that I wrote to help comprehend the convergent linear curve. It spits out what the rewards would be for various accounts if they did a 100% vote and were the only vote on a post:

I'm not going to have much time to look into it more today, but FWIW, I saw an HF21 post from Steemit yesterday where they said that they expected the inflection point for post value to be 20 STEEM.

Here's that same table with % of new vs. old added:

As percentages, I'd say minnows, orcas, and whales are generally consistent with what I saw in the spreadsheet yesterday. I'm not sure why it goes down from minnow to dolphin, though - or from minnow to your account? I would not have expected that...

Update: Here's what the spreadsheet says the curve would look like as percentage of linear (rshares across the X axis); looks like the 100% vote for SC01 would be roughly 4.25 * 10^14, so a whale would be in the neighborhood of 2 * 10^12:

Except for the downward movement after minnows, I'd say that's consistent with your script's output.

That's right: posts that generate 20 STEEM or more will have an advantage over posts that generate less than 20 STEEM. That is, the reward will be somewhat higher for posts that generate more than 20 STEEM.

Also, the values displayed on posts before payout are 30-32% higher than the values once the payout takes place. I don't know if it matters for your math.

My guess would be rounding issues and the numbers in the table only having three decimal places.

Maybe I'll play around feeding different numbers into this tomorrow. Looks like that was written before the rule change, though, so it will need to change to your formula from above and/or to N^2/(1 + N/5).

I think that's something like what I described in section 3. As I understand, the square root is based on voting order. From the link above, it used to be "square root". Not sure what the difference is between "square root" and "convergent square root", though. Maybe it just means taking the square root after applying the convergent linear algorithm?

I'm having a hard time following the code, but my current thinking is that only the author reward curve matters (to figure out the total amount of rewards to give to the post), and then it splits into author and curator rewards based on the percentage. Then I'm not seeing it do any scaling of rewards based on the order (maybe it's in there in a way I haven't seen yet). It might be worth checking a block explorer and looking at the curation rewards on a post that has paid out and see if they are just simply related to the rshares that came from each vote without any "it's better to be an earlier voter" element.

One thing that is order-related is that your share of curation rewards seems to be based on the delta between the curved reward before your vote vs. the curved reward after your vote, so there's probably some big bang-for-your-buck potential in being the vote that pushes the rewards on a post from the nonlinear low region to the nicer part of the curve.
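To make the "delta" idea concrete, here is a rough sketch. It is not taken from the Steem code: `marginal_weights` is a hypothetical helper, the votes are made up, and the curve is the N^2/(1 + N/5) example from earlier in the thread (truncated to integers), not whatever curve the chain really uses for curation.

```python
from typing import Callable, List


def example_curve(rshares: int) -> int:
    """Example curve N^2 / (1 + N/5) from this thread, truncated (assumed)."""
    return (5 * rshares * rshares) // (5 + rshares)


def marginal_weights(votes: List[int], curve: Callable[[int], int]) -> List[int]:
    """Give each vote the change in the curved total that it caused.

    `votes` holds the rshares of each vote, in voting order.
    """
    weights = []
    total = 0
    for rshares in votes:
        before = curve(total)
        total += rshares
        weights.append(curve(total) - before)
    return weights


votes = [5, 5, 10]  # made-up rshares, in the order the votes arrived
weights = marginal_weights(votes, example_curve)
per_rshare = [w / r for w, r in zip(weights, votes)]

for r, w, p in zip(votes, weights, per_rshare):
    print(f"{r:>3} rshares -> weight {w:>3} ({p:.2f} per rshare)")
```

With this curve, the later votes pick up more weight per rshare because they land on the steeper part of the curve. A curve that flattens out, such as a square root, would tilt the same calculation toward the earlier votes instead.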

Interesting. Here's the convergent_square_root function from the code:

Along with the marginal changes for that curve:

And here is the mvest / rshares ordered by time of vote from this post.

Noteworthy: the lowest mvest/rshares was the last voter: @steemcurator01 with 0.00077932..., and the highest was the 3rd voter, @curx, who voted after about 4 minutes and received 0.005784... mvests per rshare. So, the 3rd voter received 7x the reward ratio as the last voter. Voters around 5 minutes were scoring around 0.0035. Even the two voters at about 2 & 3 minutes beat the 5 minute score.

Presumably, the zeroes didn't commit enough rshares to get above the rounding threshold.

(The X axis is ordered by time of vote, but it's not properly scaled, due to the concentration of voters near the beginning of the voting period.)

So, yeah, I don't know how it happens in the code, but the order of voting definitely seems to make a difference.

Do you have an easy way to see what the actual curator payouts were for the post? I'm trying to see if my understanding of how it works maps to reality.

Not really. It took me quite some time this morning. The only way I know to do it is to get the rshares from the post itself using the condenser_api/get_content call, get the curation rewards from the virtual operations in condenser_api/get_ops_in_block, and then marry them up using a spreadsheet or a script/program.

It's probably easier to start from the curation rewards and work backwards to the post. I went from the post to the curation rewards, and finding the right block number for the curation rewards was a real chore.
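In case it saves someone the spreadsheet step, here is a minimal sketch of the "marry them up" part. It assumes the two API responses have already been fetched and parsed from JSON. The field names (`active_votes`, `voter`, `rshares`, `time`, and `curation_reward` ops carrying `curator`, `reward`, `comment_author`, `comment_permlink`) are how I believe those responses are shaped, so check them against real output. The function name, account names, and sample values are made up.

```python
from typing import Dict, List


def reward_per_rshare(active_votes: List[dict], ops: List[dict],
                      author: str, permlink: str) -> List[dict]:
    """Join a post's votes with its curation_reward virtual ops.

    Returns one row per vote, ordered by vote time, with the reward
    (in whatever unit the op reports) divided by the vote's rshares.
    """
    rewards: Dict[str, float] = {}
    for entry in ops:
        name, body = entry["op"]
        if name != "curation_reward":
            continue
        if body["comment_author"] != author or body["comment_permlink"] != permlink:
            continue
        amount = float(body["reward"].split()[0])  # e.g. "5.000000 VESTS"
        rewards[body["curator"]] = rewards.get(body["curator"], 0.0) + amount

    rows = []
    # Sort explicitly: don't rely on the API returning votes in time order.
    for vote in sorted(active_votes, key=lambda v: v["time"]):
        rshares = int(vote["rshares"])  # may arrive as a string
        reward = rewards.get(vote["voter"], 0.0)
        ratio = reward / rshares if rshares > 0 else 0.0
        rows.append({"voter": vote["voter"], "time": vote["time"],
                     "rshares": rshares, "reward": reward, "ratio": ratio})
    return rows


# Made-up sample data in the assumed shapes.
sample_votes = [
    {"voter": "alice", "rshares": "2000", "time": "2024-01-01T00:10:00"},
    {"voter": "bob", "rshares": 1000, "time": "2024-01-01T00:04:00"},
    {"voter": "carol", "rshares": 10, "time": "2024-01-01T00:06:00"},
]
sample_ops = [
    {"op": ["curation_reward", {"curator": "alice", "reward": "2.000000 VESTS",
                                "comment_author": "someauthor",
                                "comment_permlink": "some-post"}]},
    {"op": ["curation_reward", {"curator": "bob", "reward": "5.000000 VESTS",
                                "comment_author": "someauthor",
                                "comment_permlink": "some-post"}]},
    {"op": ["author_reward", {"author": "someauthor", "permlink": "some-post"}]},
]

rows = reward_per_rshare(sample_votes, sample_ops, "someauthor", "some-post")
for row in rows:
    print(row["time"], row["voter"], row["rshares"], f"{row['ratio']:.6f}")
```

Voters with no matching curation_reward op come out with a ratio of zero, which is how the small votes that fall below the rounding threshold would show up.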

Having trouble figuring out how to get the info I want from the standard API. But in case this is helpful for you, here's how you can get some useful info about that post from SDS:

https://sds0.steemworld.org/posts_api/getPost/remlaps/programming-diary-24-the-steem/true

I believe the "weight" parameter in the votes represents the share of curation rewards, and seems pretty close to the actual payouts (I think the differences are based on the .001 resolution). Haven't figured out why that number doesn't match what I think it ought to be yet.

OK. I made some progress but am still kind of bewildered. I now think the convergent square root curve is used (in the vote operation itself, I didn't notice at first that it picked the "curation" curve since I was so used to seeing the other curve in the rewards code). I think it sets the curation-payout-weight based on the delta between the new convergent-sqrt of rshares and the old convergent-sqrt of rshares. But my numbers aren't lining up with the actual payouts on the post. I'm wondering if maybe the data structure my script is getting for the votes isn't in order.