Could a Neuroscientist understand a Microprocessor?

Hey everyone! I am still working on my blog series on psychedelic neuroscience, but this is a somewhat tedious undertaking. Since the topic requires knowledge of certain technical aspects, I decided to first review one of my favorite recent articles!

Could a Neuroscientist understand a Microprocessor? *

Many researchers (and most bloggers) will typically depict science in terms of technological development, but I want you to appreciate the beauty of an experiment that draws attention to how limited our understanding of intelligent behavior actually is. Jonas and Kording (2017) * demonstrate this in a hilarious manner by investigating, with all the modern tools of cognitive neuroscience, how a MOS 6502 microprocessor (the chip family found in the Commodore 64 and the Atari console) generates the behaviors of Donkey Kong (1981), Space Invaders (1978), and Pitfall (1981).

It has become one of my favorite articles because it demonstrates the typical workflow of neuroscience and how current attempts to reconstruct the mind from Big-BrainData (e.g. the Human Brain Project) may be conceptually limited! This is important to know because reverse engineering the brain is at the forefront of AI development. So let’s dive into the differences between artificial and natural systems and how a neuroscientist would dissect a microprocessor. Consider this an introductory blog post on cognitive neuroscience.

Defining Behavior:

The ultimate goal of cognitive (neuro)science is to isolate discrete types of behavior that correspond to discrete underlying mechanisms. First you must define the processing unit: this could be a transistor, a neuron, an organism or even a superorganism. The basic principle is that the unit receives an input (the stimulus) and produces an output (the behavior); the process in between is described by an operator that predicts the output from the input. As you may have noticed, this draws on an analogy: imagine that the brain works like a computer. Indeed, cognitive neuroscience was inspired by the development of computers in the 60s, and this behavioral approach hasn’t changed much since then. Several cognitive processes are operationalized in this way, for instance attention, executive control or working memory, so it is warranted to ask whether a neuroscientist could understand a microprocessor. This approach isn’t so much concerned with how behavior works in a complex natural environment, but with how single behavioral processes function in a simplified, controlled environment.

During my bachelor’s work I conducted behavioral experiments on mice. These mice were genetically manipulated to have dysfunctional NMDA receptors in the hippocampus, which meant that they were incapable of learning spatial information. Here you can see how this is tested in the Morris Water Maze:

Test group:

Control Group:

The mice were trained all day, yet as you can see the test mouse was far worse at finding the beaconed platform than its control counterpart. The set-up of these experiments aims to strip away as many behavioral components as possible, but even tasks as simple as the water maze probably require multiple cognitive processes to function simultaneously. Looking at individual Atari games seems to be the equivalent of how neuroscientists conduct business. Like the brain, the microprocessor is of course capable of doing much more than this, but current paradigms aim to isolate such behaviors in a reduced environment. We should assume, however, that all these components are quite intertwined when we make decisions in everyday life. Isolating a few behaviors from a microprocessor should still be far easier, since this system is presumably far simpler than a brain. In my opinion, limiting the behavioral measures to three Atari games reflects the limited nature of neuroscientific behavioral measures quite well. It would be more sensible to run individual program scripts of these games, but there is no equivalent method available in cognitive neuroscience.

Microprocessors vs. Humans:

Microprocessors are built to perform a specific function whereas our brain functions evolved under loose evolutionary constraints with far greater degrees of freedom. Survival of the fittest is a very vague instruction, as different species can attain unique strategies for achieving this goal. Our systems contain far more variance than an engineered one. Transistors are deterministic, whereas neurons behave quite randomly. When presented with the same stimulus, the neuron will only produce the same output on average!
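To make the "same output only on average" point tangible, here is a minimal sketch in Python. Everything in it is my own toy assumption (the `transistor` and `neuron_spike_count` functions, the firing probability, the number of time bins); it is not a biophysical model and not something taken from the paper. It only contrasts a switch that always answers the same way with a unit whose spike count fluctuates from trial to trial.

```python
import random

def transistor(gate_on: bool) -> int:
    """Idealized switch: the same input always gives the same output."""
    return 1 if gate_on else 0

def neuron_spike_count(stimulus: float, rng: random.Random, n_bins: int = 1000) -> int:
    """Toy stochastic neuron (hypothetical numbers): in each small time bin it
    spikes with a probability proportional to the stimulus strength."""
    p_spike = min(1.0, 0.02 * stimulus)
    return sum(1 for _ in range(n_bins) if rng.random() < p_spike)

rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Present the identical input 200 times to each unit.
transistor_outputs = {transistor(True) for _ in range(200)}
neuron_outputs = [neuron_spike_count(1.0, rng) for _ in range(200)]
mean_count = sum(neuron_outputs) / len(neuron_outputs)

print("distinct transistor outputs:", len(transistor_outputs))
print("distinct neuron outputs:", len(set(neuron_outputs)))
print("mean spike count:", round(mean_count, 1))
```

The transistor yields one and the same answer on every trial, whereas the toy neuron yields many different spike counts that only settle around a stable value once you average over trials. That averaging step is why neuroscientists need so many repetitions of the same stimulus.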

Furthermore, there is only one principal kind of transistor, whereas there are over a hundred different types of neurons, each with a different biochemical make-up, different types of ion channels and different wiring patterns. A neuron can have on the order of 10,000 synapses, far more connections than a simple transistor. Transistors have a straightforward function, whereas it still hasn’t been fully deciphered how an individual neuron encodes information. There are several different classes of neurons with different chemical properties, which may encode information differently. A microprocessor as a model organism exhibits far less complexity than a biological organism. Understanding its behavior should be easier, right?

Connectomics:

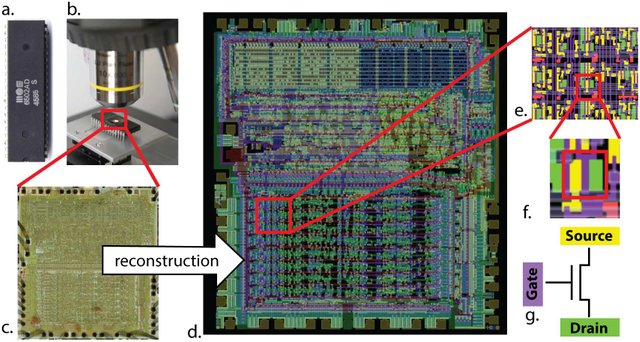

The goal of the Human Brain Project is to eventually run a full brain simulation. This will require a fully reconstructed three-dimensional computational model. After behavioral analyses, neurobiologists dissect the mice, extract their brains and cut these into thin microslices. Unlike the microprocessor, the brain is organized in three-dimensional space. Not to worry: individual neurons can be identified under the microscope with various staining methods. Winfried Denk and colleagues at the Max Planck Institute have also come up with an automated process wherein a mouse brain is cut into tiny cubes that are individually scanned across all three dimensions with an electron microscope. This enables scientists to reconstruct the three-dimensional structure on a computer:

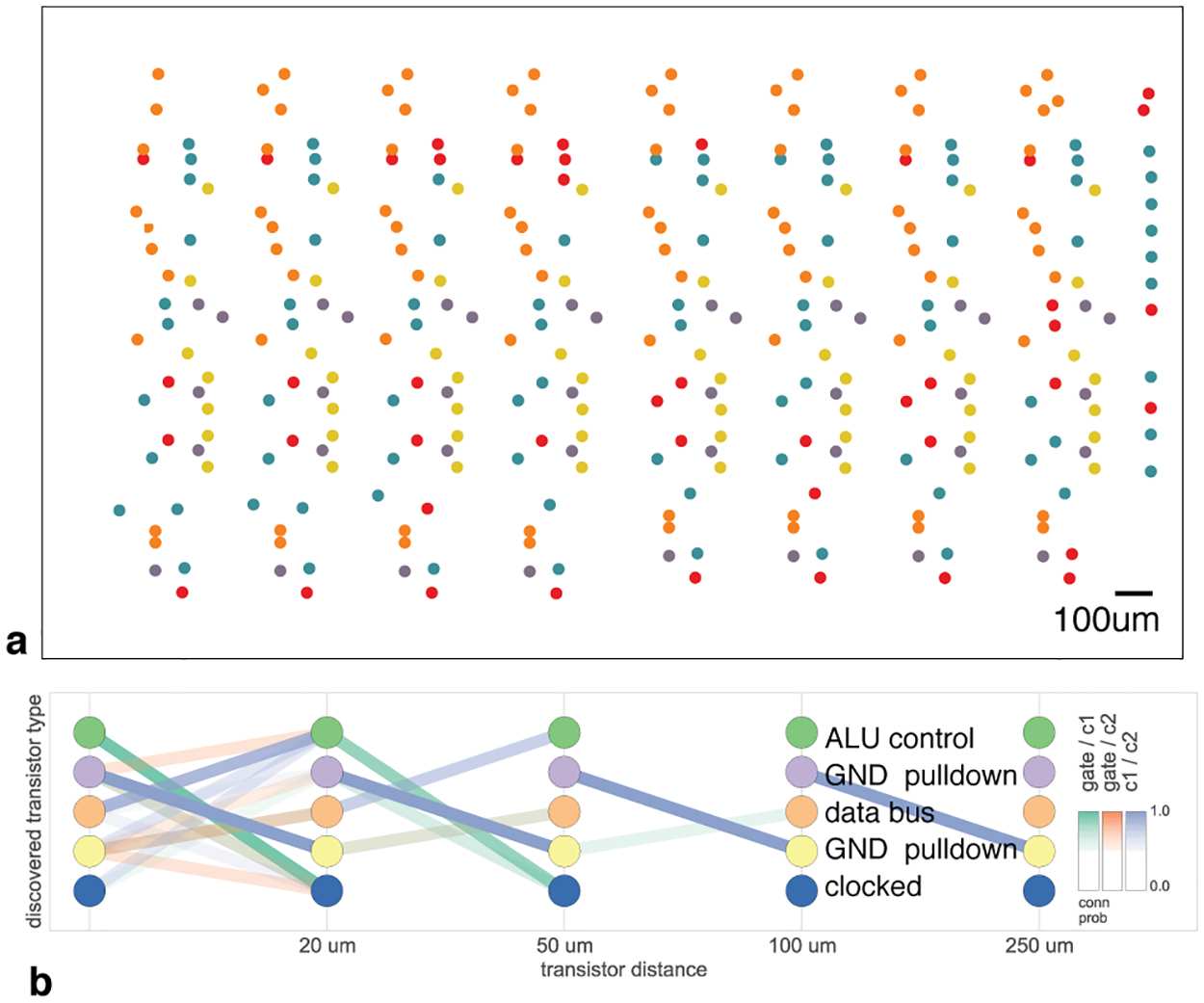

As you can see, the microprocessor was also dissected: its casing was chemically removed and the silicon components were identified with a microscope:

Source:*

The processor’s silicon die was exposed and examined under a light microscope. Computer vision algorithms identified the metal and silicon regions and were able to reconstruct the transistors. This approach proved successful, since it made it possible to reconstruct and emulate the microprocessor!

Source:*

Lesioning:

Lesion studies are the foundational method of neuropsychology. There are many famous patients who sustained head injuries that caused peculiar changes in cognitive functioning. Famous accounts are those of Phineas Gage and E.V.R., both of whom sustained severe damage to the orbitofrontal cortex.


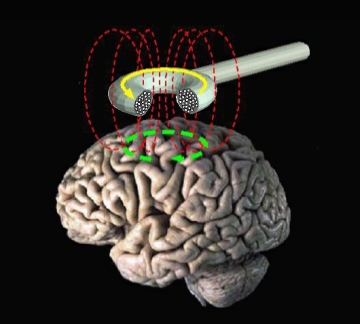

The patients suffered a severe loss of executive functions such as action planning, but their IQ remained intact. E.V.R. used to be a successful accountant, but after his lesion he lost his job, divorced his wife and married a prostitute. Other interesting cases can be found in The Man Who Mistook His Wife for a Hat, a collection of case histories by Oliver Sacks. Individual case studies are now outdated, because they aren’t comparable across the population. Each individual adapts differently to their injury, as many functions may quickly be taken over by other brain regions.

Contemporary neuroscience no longer assumes individual brain functions to be localized within discrete brain regions. It has become far more plausible that several different brain regions perform complex interactions with one another; such interactions may even include other individuals within a complex environment. It makes far more sense nowadays to analyze complex interactions than to point at the amygdala and proclaim: Look! We found the center of emotions! In order to look at such interactions, one can induce temporary lesions with TMS. This technique uses magnetic coils to inhibit individual brain regions, but only in the outer cortex.

In organisms such as mice, individual neurons can now be turned on and off with optogenetics. This method requires a genetic modification that is too invasive for humans at the moment.

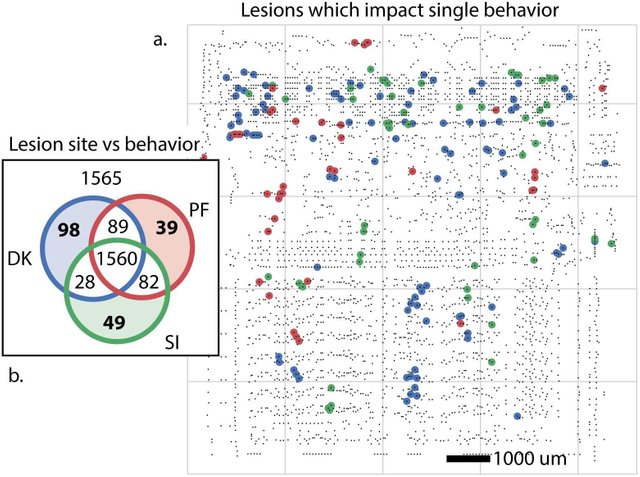

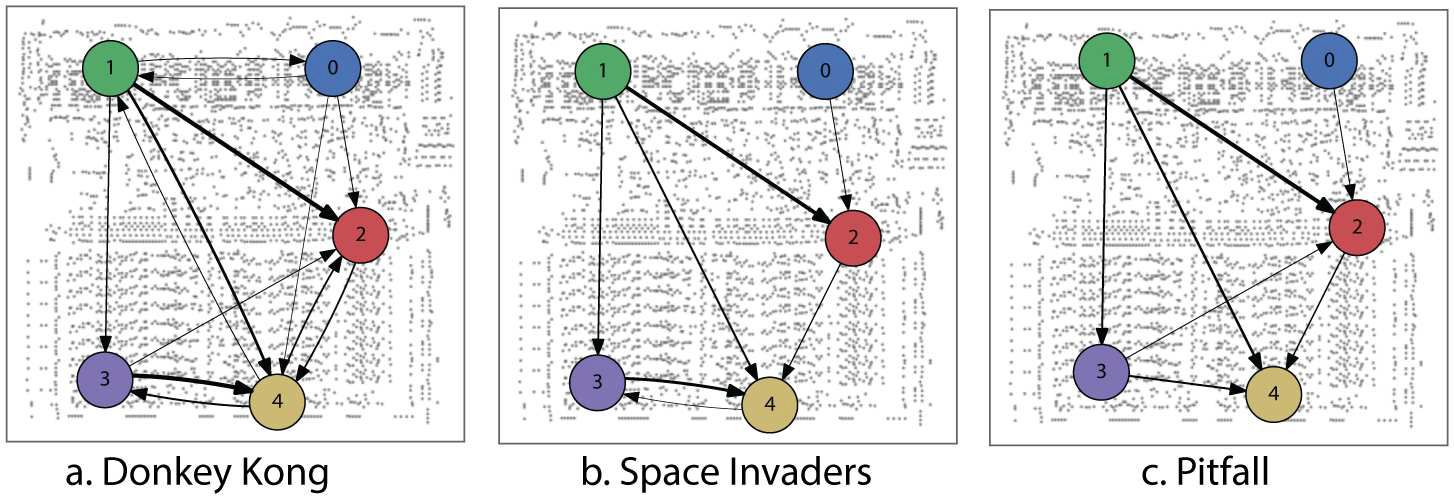

The authors investigated the effects of destroying individual transistors on the behavior of the computer games:

Source:*

As you can see, there is a large overlap between the different games (behaviors). This is because components of a system may heavily depend on one another, but such dependence doesn’t necessarily describe their function. For instance, I heavily depend on the availability of oxygen, but oxygen does not explain my behavior in every given context. Likewise, most transistors are so fundamental that they are required for all three Atari games. This reflects quite well how neurobiologists go about their research: individual brain regions or neurons are turned on or off to see how the organism changes its behavior. This approach still has many caveats.
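The logic of such a lesion experiment can be sketched in a few lines of Python. Note that the "chip" below is entirely made up by me for illustration (the `CORE` set, the transistor numbers and the rule that a game fails as soon as one of its transistors is removed); the real analysis was run on a full simulation of the processor.

```python
# Hypothetical toy "chip": a game only runs if every transistor it depends on works.
CORE = set(range(0, 8))  # shared machinery (think clock, instruction decoding, ...)
GAMES = {
    "Donkey Kong": CORE | {8, 9},
    "Space Invaders": CORE | {10},
    "Pitfall": CORE | {11, 12},
}
N_TRANSISTORS = 16  # transistors 13-15 are not needed by any of the three games

def game_runs(game: str, lesioned: int) -> bool:
    """A game still runs if the lesioned transistor is not one it depends on."""
    return lesioned not in GAMES[game]

def lesion_study() -> dict:
    """Remove one transistor at a time and record which games stop working."""
    return {
        t: {g for g in GAMES if not game_runs(g, t)}
        for t in range(N_TRANSISTORS)
    }

broken = lesion_study()
breaks_all = [t for t, games in broken.items() if len(games) == len(GAMES)]
breaks_one = [t for t, games in broken.items() if len(games) == 1]
breaks_none = [t for t, games in broken.items() if not games]

print("lesions that break every game:", breaks_all)
print("lesions that break exactly one game:", breaks_one)
print("lesions without any effect:", breaks_none)
```

Even in this toy version you can see the interpretive trap. A lesion that only breaks Donkey Kong invites the label "Donkey Kong transistor", although the transistor knows nothing about apes or barrels; it simply happens to sit on a path that only this game uses.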

Single unit recording:

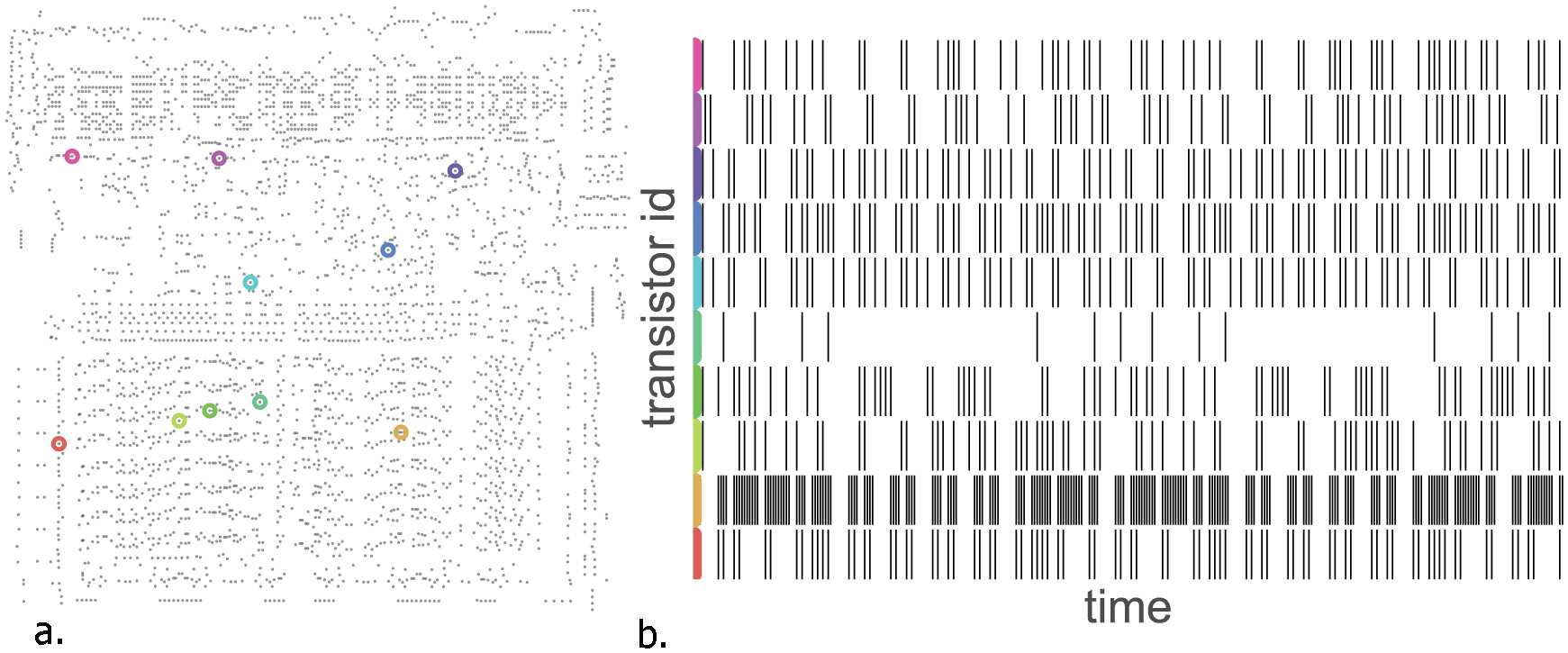

Knowing how something is built still doesn’t describe how it works. Understanding how something works requires a model that describes how information is propagated throughout these structures. Since neuron ensembles aren’t composed of uniform units such as transistors, this problem already arises at the neuronal level. As mentioned before neurons have very individual firing-properties and this problem is addressed with single cell recordings:

Source:*

After the firing rates (or spikes) have been acquired for the full spectrum of possible input stimuli, neuroscientists will want to know when the neuron (or in this case a transistor) is most likely to fire. They calculate tuning curves, which tell us which information the neuron responds to with the greatest probability. This information can then be correlated with behavior. The same was done for the microprocessor:

Source:*
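For readers who have never seen a tuning curve being computed, here is a small Python sketch of the procedure described above: present each stimulus many times, average the response, and look for the stimulus with the largest average. The simulated unit is hypothetical (the Gaussian shape, the preferred angle of 90 degrees and the noise model in `simulate_trial` are my own assumptions), so treat it as an illustration rather than the analysis from the paper.

```python
import math
import random

def simulate_trial(angle_deg, rng, preferred=90.0, width=30.0, peak_rate=30.0):
    """Hypothetical unit: Gaussian tuning around `preferred`, with a noisy spike count."""
    expected = peak_rate * math.exp(-0.5 * ((angle_deg - preferred) / width) ** 2)
    # Gaussian noise around the expected count, clipped so counts stay non-negative.
    return max(0.0, rng.gauss(expected, math.sqrt(expected + 1.0)))

def tuning_curve(stimuli, n_trials, rng):
    """Average response per stimulus over repeated presentations."""
    return {
        s: sum(simulate_trial(s, rng) for _ in range(n_trials)) / n_trials
        for s in stimuli
    }

rng = random.Random(1)
stimuli = [0, 30, 60, 90, 120, 150, 180]  # e.g. orientations of a bar, in degrees
curve = tuning_curve(stimuli, n_trials=200, rng=rng)
preferred_stimulus = max(curve, key=curve.get)

for s in stimuli:
    print(f"{s:>3} deg: {curve[s]:5.1f} spikes on average")
print("preferred stimulus:", preferred_stimulus)
```

The recovered preference tells you what the unit responds to most strongly, but nothing about what the unit is for. That gap between "responds to" and "computes" is precisely what the microprocessor experiment exposes.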

Local activity:

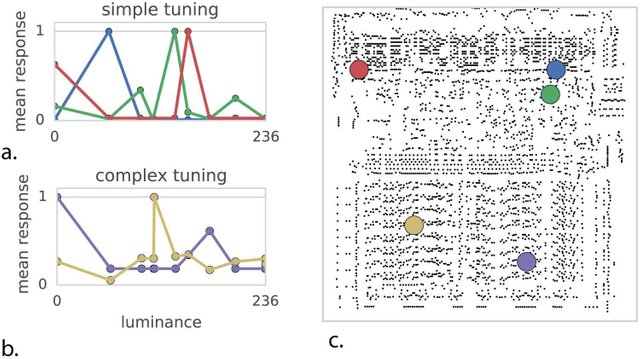

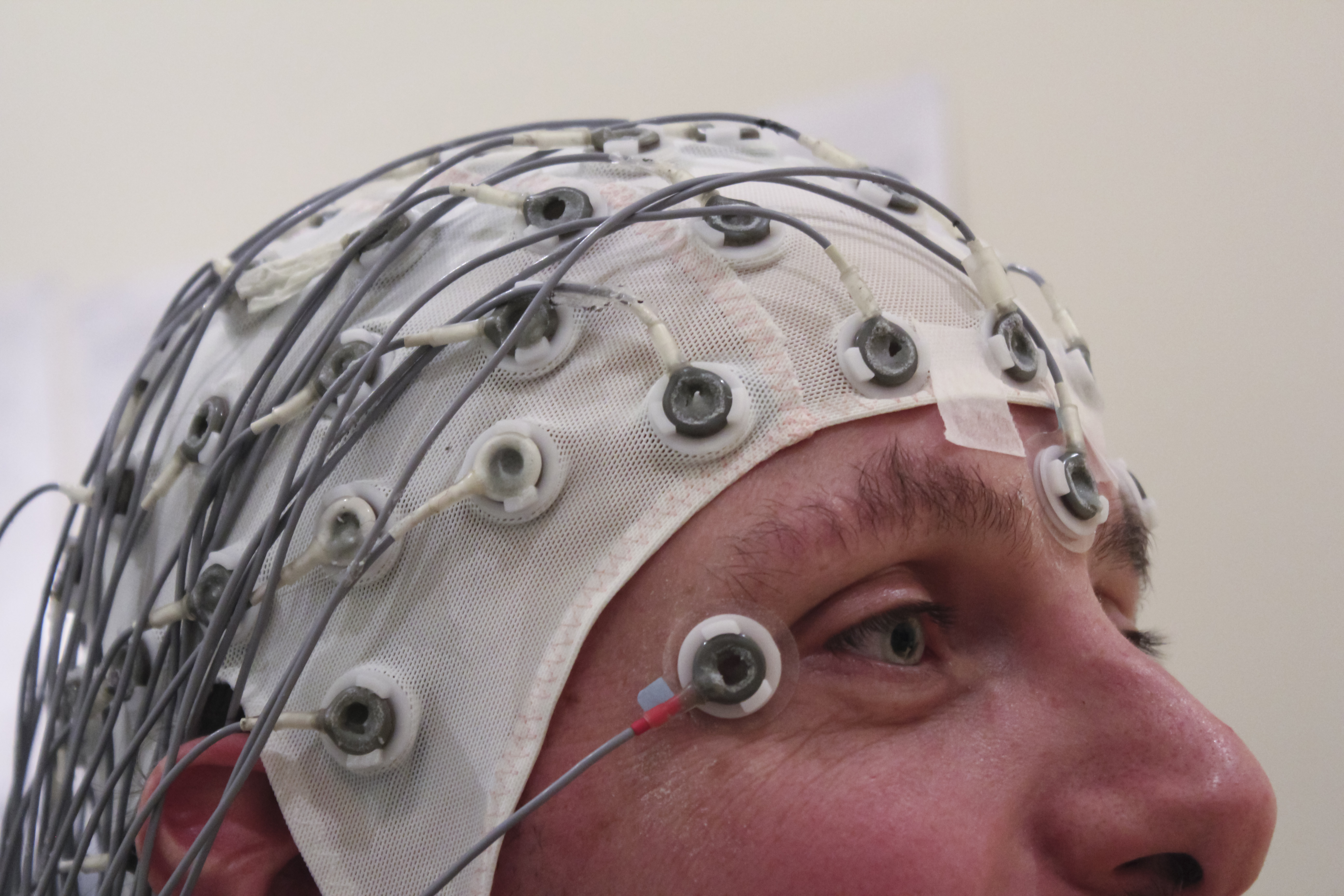

Knowing how single units respond maximally to a given attribute of behavior still doesn’t tell us much about how the behavior is orchestrated. Information isn’t just a property of a single neuron, but of neuron collectives that excite each other. The two principal ways of isolating mechanisms that correspond to distinct behaviors are in time (temporal) or in space (spatial). EEG has a high temporal resolution, whereas fMRI has a high spatial resolution. When many neurons fire at once, they generate an electric field called the ‘Local Field Potential’. Sudden changes in these potentials are measured with EEG, but this isn’t very accurate at localizing brain activity.

EEG-recordings were performed on the microprocessor as well:

Source:*

Connectivity analysis

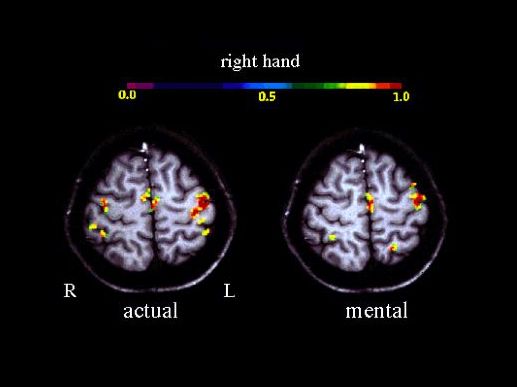

Spatial localization is performed with fMRI, which measures changes in regional blood flow. These kinds of recordings tempt lay people to make various far-fetched claims about brain regions, because MRI machines can generate all those nice brain pictures. Psychologists have found that simply showing a picture of a brain with a highlighted region makes people more likely to believe that the findings are true. It is worth noting that fMRI measures the BOLD (blood-oxygen-level-dependent) signal, which is an indirect, statistically estimated marker of increased blood flow and not the actual firing of neurons (action potentials). The BOLD signal captures the average change during a behavioral condition; the signal is thus a mixture of the input signals that neurons are receiving and the output signals that they are emitting.

These pictures of labeled brain regions are quite deceiving as to the true nature of what is being measured.

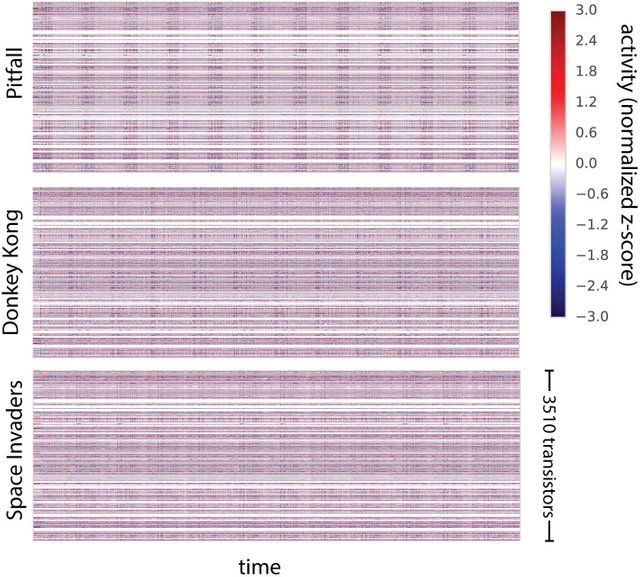

In the same manner as neuroscientists use EEG and fMRI to measure the average activity of brain regions, the authors of this paper measured local activities of the microprocessor:

Source:*

For the reasons I mentioned previously, this still doesn’t sufficiently describe what the microprocessor is doing! When neuroscientists speak of functional connectivity, this means that they analyze which brain regions are active simultaneously during a behavioral condition. This says nothing about what operations the regions are doing, only that they are somehow connected to one another (on average).
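Here is a minimal Python sketch of why "active at the same time" is such a weak statement. I simulate three hypothetical regions: `region_a` and `region_b` never talk to each other but both listen to a shared `driver` (think of a clock signal), while `region_c` does its own thing. The signal names and noise levels are assumptions of mine, and plain Pearson correlation stands in for the many connectivity measures used in practice.

```python
import random

def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

rng = random.Random(2)
n_samples = 2000

driver = [rng.gauss(0, 1) for _ in range(n_samples)]    # shared input, e.g. a clock
region_a = [d + rng.gauss(0, 0.5) for d in driver]      # driven by the shared input
region_b = [d + rng.gauss(0, 0.5) for d in driver]      # driven by the shared input
region_c = [rng.gauss(0, 1) for _ in range(n_samples)]  # unrelated activity

r_ab = pearson(region_a, region_b)
r_ac = pearson(region_a, region_c)

print(f"A-B correlation: {r_ab:.2f}")
print(f"A-C correlation: {r_ac:.2f}")
```

Regions A and B come out as strongly "functionally connected" although there is no wire between them in the simulation. The correlation is real, but it describes the shared driver, not an interaction between the two regions.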

Causality

This lack of insight into whether the measured brain signals reflect cause or effect is a serious problem in neuroscience! One solution is to analyze statistical causality (for example Granger causality). The idea is to look at the data series of region X and see whether it contains regularities that help predict the data series of region Y. With machine learning, I can imagine great advances in this approach.

Source:*

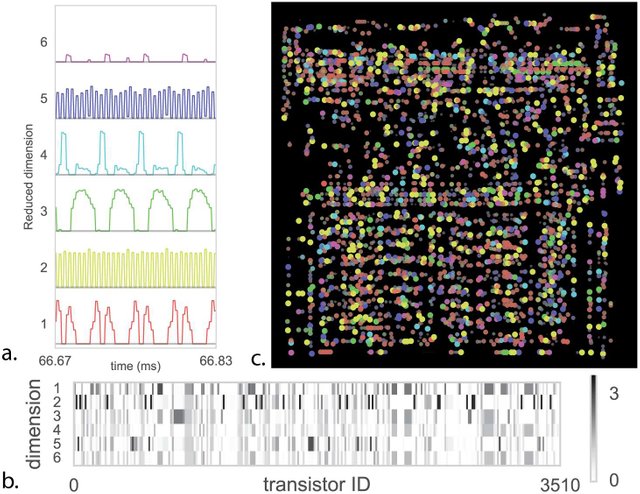
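The prediction idea behind this kind of statistical causality can be sketched as follows. This is a deliberately stripped-down version in the spirit of Granger causality and not the full procedure: I only use a single time lag, skip the significance test, and the helper names (`residual_var_1`, `residual_var_2`, `granger_gain`) as well as the simulated signals are my own assumptions. The question asked is simply: does the past of X improve a prediction of Y beyond what the past of Y already achieves?

```python
import random

def residual_var_1(target, p):
    """Least-squares fit target ~ b*p (no intercept); returns the residual variance."""
    b = sum(t * a for t, a in zip(target, p)) / sum(a * a for a in p)
    return sum((t - b * a) ** 2 for t, a in zip(target, p)) / len(target)

def residual_var_2(target, p, q):
    """Least-squares fit target ~ b1*p + b2*q, solved via the 2x2 normal equations."""
    spp = sum(a * a for a in p)
    sqq = sum(c * c for c in q)
    spq = sum(a * c for a, c in zip(p, q))
    spt = sum(a * t for a, t in zip(p, target))
    sqt = sum(c * t for c, t in zip(q, target))
    det = spp * sqq - spq * spq
    b1 = (spt * sqq - sqt * spq) / det
    b2 = (sqt * spp - spt * spq) / det
    return sum((t - b1 * a - b2 * c) ** 2 for t, a, c in zip(target, p, q)) / len(target)

def granger_gain(source, target):
    """Fraction by which the source's past reduces the error of predicting
    the target from the target's own past (lag 1 only)."""
    now, own_past, source_past = target[1:], target[:-1], source[:-1]
    restricted = residual_var_1(now, own_past)
    full = residual_var_2(now, own_past, source_past)
    return 1.0 - full / restricted

rng = random.Random(3)
n_samples = 3000
x = [rng.gauss(0, 1) for _ in range(n_samples)]
y = [0.0]
for t in range(1, n_samples):
    y.append(0.8 * x[t - 1] + rng.gauss(0, 0.5))  # y follows x with a delay of one step

gain_x_to_y = granger_gain(x, y)
gain_y_to_x = granger_gain(y, x)
print(f"error reduction X -> Y: {gain_x_to_y:.2f}")
print(f"error reduction Y -> X: {gain_y_to_x:.2f}")
```

In this toy case the asymmetry is clean because I built it in. With real recordings, a hidden third signal or a slow measurement (such as blood flow) can produce the same asymmetry without any causal link, so even this approach should be read as "X helps predict Y" and nothing more.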

Dimension reduction

Another problem that comes with whole-brain imaging in neuroscience is the sheer amount of raw data. Like I said before, we are trying to isolate discrete behaviors, so the Big-BrainData that comes out of whole-brain recordings is way too elaborate. The brain is busy maintaining itself and we only want to find processes that correspond to simple decisions. It’s like finding a needle in a haystack. To overcome this problem, neuroscientists will try to see whether they can reduce the dimensionality. A data scientist could give a whole course on this, since it is also quite relevant for machine learning, but the idea is to see whether the activities of certain units depend on one another. If they do, there is no need to model them as separate independent variables and you can construct a single variable that captures the activity of both units. Anyway, with the help of some linear algebra the authors could isolate a reduced number of relevant dimensions, which they correlated with the microprocessor’s behavior:

Source:*
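To show what "constructing a variable that captures several units" looks like in practice, here is a small Python sketch. It uses principal component analysis (computed with a simple power iteration) as a stand-in technique; I am not claiming this is the factorization the authors applied. The six simulated units, their `weights` and the noise level are assumptions of mine: all units are driven by one hidden signal, so a single dimension should describe most of what they do.

```python
import random

def covariance_matrix(rows):
    """Sample covariance matrix of the columns of `rows` (one row per time point)."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    return [
        [sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]

def leading_component(cov, n_iter=200):
    """Power iteration: returns the dominant eigenvector and its eigenvalue."""
    d = len(cov)
    v = [1.0 / d ** 0.5] * d
    for _ in range(n_iter):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = sum(a * a for a in w) ** 0.5
        v = [a / norm for a in w]
    eigenvalue = sum(
        v[i] * sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)
    )
    return v, eigenvalue

rng = random.Random(4)
weights = [1.0, 0.9, 0.8, 1.1, 1.0, 0.7]  # how strongly each unit follows the hidden signal
recordings = []
for _ in range(500):
    hidden = rng.gauss(0, 1)  # the one underlying process
    recordings.append([w * hidden + rng.gauss(0, 0.3) for w in weights])

cov = covariance_matrix(recordings)
component, eigenvalue = leading_component(cov)
total_variance = sum(cov[i][i] for i in range(len(cov)))
explained = eigenvalue / total_variance

print(f"variance explained by one dimension: {explained:.0%}")
```

Six recorded variables collapse into one useful dimension here because I generated them that way. On real data the reduced dimensions are just as easy to compute, but there is no guarantee that they line up with anything meaningful about the system, which is the sobering lesson of the microprocessor analysis.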

Conclusion:

The most pressing issue of cognitive neuroscience is that we don’t actually know what the brain is supposed to be doing. With an artificial intelligence we know exactly what we want the system to do, for instance beating Garry Kasparov at chess or becoming the ultimate Go master.

As the authors point out:

Microprocessors are among those artificial information processing systems that are both complex and that we understand at all levels, from the overall logical flow, via logical gates, to the dynamics of transistors

So you have to consider that we can be certain that a microprocessor has an intelligently built purpose, whereas our brain doesn’t!

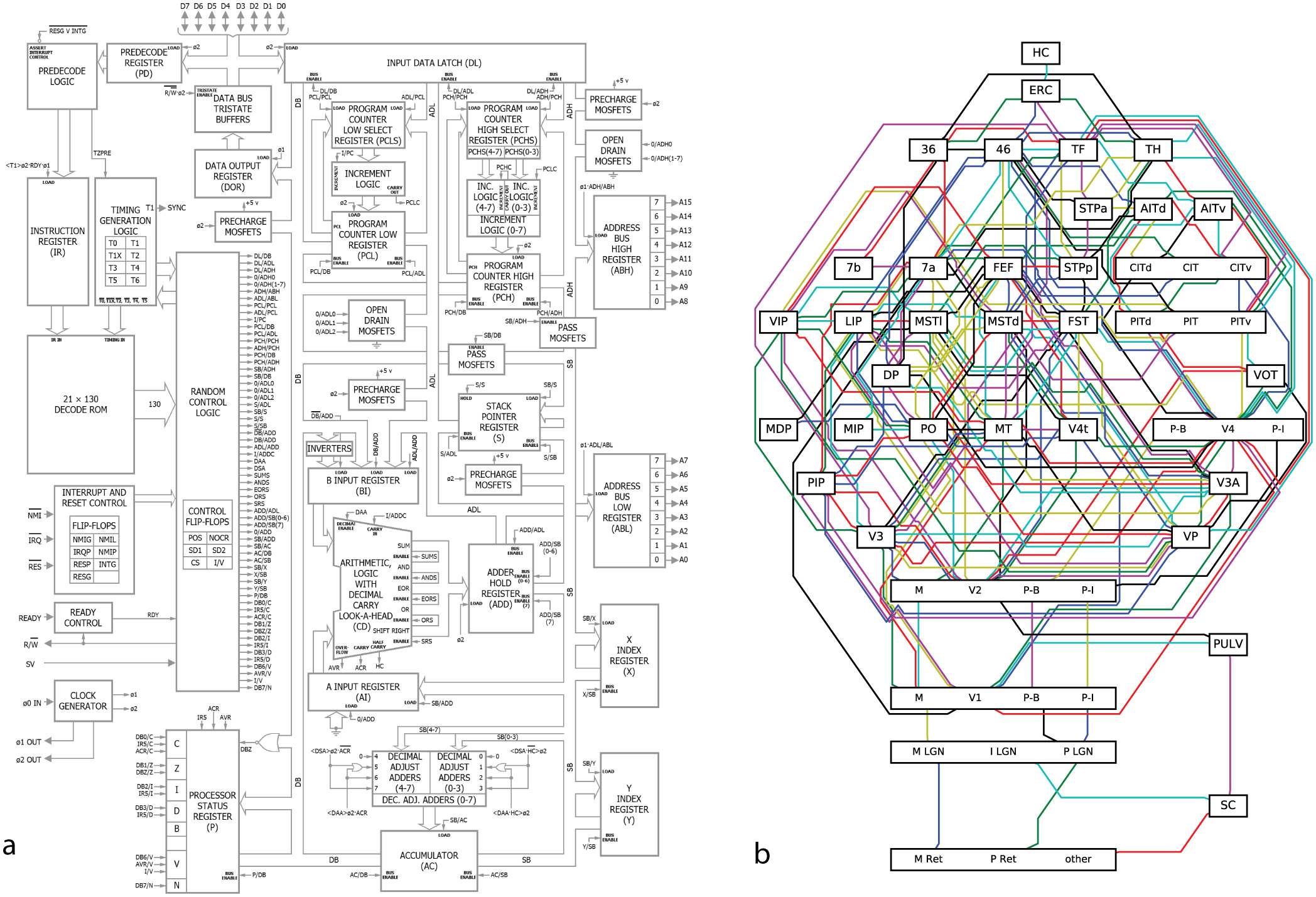

Source:*

This image shows a well-defined blueprint of a microprocessor (left) and a state-of-the-art functional blueprint of a brain (right). As you can see, all these methods yield a vast, complicated network of interesting data structures, but these are hardly sufficient for the construction of a fully functional program that runs Donkey Kong.

So can a Neuroscientist understand a Microprocessor?

NO!

If a neuroscientist can’t understand how a microprocessor works, then how could they possibly figure out what the brain is doing?

You might be inclined to say that I have left out machine learning from this picture and I will admit that machine learning is a powerful new statistical tool.

But much of machine learning relies on supervised learning, which means that it will only solve problems where we can define discrete categories to classify. Unless we have a full understanding of what these categories are, the machine learning algorithms will inherit our biases. So how do we know that the machine learning algorithm isn’t just overfitting data to predict simple behaviors?

What does it mean to understand behavior?

According to David Marr (1982) [1] there are three levels of modelling that encompass our understanding of behavior:

- The computational level: What is the problem that the system is trying to solve?

- The algorithmic level: How is the system trying to solve it? What would a solution look like? For the brain and microprocessor this is a question of how internal representations are manipulated.

- The implementational level: What is the physical substrate of these computations? (e.g. neurons, connectivity, transistors etc.)

These criteria remain unanswered in a purely data-driven approach!

Concluding Remarks and Future directions:

Understanding the human psyche has been a major theme of philosophy for centuries, and recently this endeavor has turned into the question of how our brain and mind are integrated. With the rise of psychology it has also become a matter of how our brain generates behavior. In my opinion, these questions are intertwined because our mind arises within a complex interaction between our brain, body and the environment. With modern neuroimaging techniques, theory-driven science is being swept aside in favor of data-driven science. This isn’t all bad, every scientist loves good data, but we shouldn’t forget theories either. Nowadays, mentioning Freud is frowned upon in academia, but one must realize that his theories fundamentally shaped the societal understanding of the psyche. A theory must go beyond publishing an image of a brain region that seems to do something in some behavioral condition or other and give a more extensive explanation of how this generates behavior!

We can’t just point to the amygdala and proclaim we have found the center of all emotion. The truth is far from it. Neuroscience is currently split into two camps, Representationalism and Enactivism. Representationalism sees brain functions as a matter of internal representation, an approach that mainly tries to locate data structures within the brain from which consciousness (somehow) emerges. Whenever I go to a Human Brain Project presentation I always wonder how they think a brain simulation will be able to explain consciousness on account of simplified behavioral measures conducted on mice or rats.

Enactivism on the other hand places consciousness in an embodied brain that is embedded within a complex social environment. Consciousness is depicted as a dynamically developing interactive dialogue between our brain and the environment.

As you may have guessed from this post, I am more in favor of embodiment.

I believe that theories such as predictive processing will come a long way in giving a full account of human behavior. This theory holds that our brain functions in a manner that seeks to reduce our uncertainty over our environment and enacts exploratory behavior.

Moreover, I am interested in figuring out the effects of psychedelics, since these are quite numerous and extend across many different explanatory levels. Mapping these levels onto each other may ultimately help explain consciousness in the process (more on this later)!

Anyhow, if you enjoyed this article please re-steem cause I don’t have many followers at the moment. I put quite a lot of effort into it, but quality over quantity I say!

Image sources:

**Figures were taken from:**

Jonas, E., & Kording, K. P. (2017). Could a neuroscientist understand a microprocessor? PLoS computational biology, 13(1), e1005268.

Images that were used for illustrative purposes were acquired from:

Luca Boldrini B "commodore 64". July 29 2009. Online image. Flickr. March 12 2018. https://www.flickr.com/photos/lucaboldrini69/4841984952

BagoGames " Green Lit Space Invaders Film". July 24, 2014. Online image. Flickr. March 12 2018. https://www.flickr.com/photos/bagogames/14750012323

Microsiervos " Donkey Kong Arcade". August 19, 2015. Online image. Flickr. March 12 2018. https://www.flickr.com/photos/microsiervos/20708744872

Melissa Doroquez "Pitfall at the Game On Exhibit". August 15, 2006. Online image. Flickr. March 12 2018. https://www.flickr.com/photos/merelymel/230513378

Pixabay

Maxpixel

&

Wikimedia Commons

Literature Sources:

Jonas, E., & Kording, K. P. (2017). Could a neuroscientist understand a microprocessor? PLoS computational biology, 13(1), e1005268.

Bechara, A., et al. (1994). "Insensitivity to future consequences following damage to human prefrontal cortex." Cognition 50(1): 7-15.

Marr, D. (1982). Vision: a computational investigation into the human representation and processing of visual information. San Francisco: W. H. Freeman and Company.

I'm glad you gave a little nod towards Oliver Sacks. Reading his books is what made me get interested in how minds work.

Some philosophers and scientists a few decades back were too quick to claim that the brain is simply a computer and that you just need to run the right program to simulate consciousness on a basic computer. Thought experiments like the Chinese room argument pushed back on the idea. Like you said, neurons are not transistors. A single neuron is an incredibly complicated thing. Though I believe one day it will be possible to emulate a whole brain.

Enactivism feels right to me. I've wondered if it were possible to keep a brain, that's had no prior experience of an external world, alive in a vat, but without connecting the sensory pathways to external stimuli. My suspicion is that no consciousness could emerge.

It's hard to even imagine a fully developed brain that hasn't had some experience, even a fetus starts processing some information way before birth. An artificial neural net by itself doesn't do anything, you need to train it. Same thing with a live brain. It's integral.

That connectome video was dope. Thanks for a truly stellar article. I agree with quality over quantity.

Thanks for the encouragement, Sacks also got me into this field so I thought I'd mention him. Since you can't have consciousness without being conscious of something, I think consciousness should be seen as something that's dependent on an environmental interaction. So I agree with you, the concept of a brain in a jar is not a very insightful allegory.

Artificial Intelligence AI Is The Future And Yes I Am Scared Of How It Will Affect Our Society In The Near Future.

"explain consciousness on account of simplified behavioral measures conducted on mice or rats." :D

I honestly loved your article and the detail in which it was written and made me remember where we are with technology and science in general. We have more ahead of us, but I would like to read more.

I saw something on the theme of machine learning a few days ago from CGP Grey:

It is not about microprocessors, but rather about the learning patterns of the machines. I am also working with the idea to develop voice recognition for the software I develop, but hit this learning problem.

Keep up the good work, you have gained a devoted follower, and I have resteemed your article! :P

Hey,

CGP Grey has good stuff. Machine learning is pretty cool and I would like to explore some of its applications in neuroscience. It is definitely a game changer, but the problem of supervised learning is still a huge issue. As Grey points out, it can only learn what we tell it to, so if we were to apply it to understand ourselves, it seems like we'd be creating a logical circularity.

Also it may result in algorithms that can predict our behavior better than us, but we will have created a complex system which we still don't understand. It is frightening in a way to see that we can create complexity without understanding it. An exciting topic for sure!

Yes! If you didn't see his "you are two", you should!

I think we are already living the future, but more research needs to be done and we need more researchers. So keep up the good work!

Actually I don't quite agree with that video. While it is amazing that there can be two separate entities within the same brain after a callosotomy, I wouldn't deduce that this constitutes the normal state of affairs. Split-brain patients undergo forced changes, so I'd take Grey's conclusions with a pinch of salt on this topic.

Well, I am sure you know more about this than me but It felt too strange to believe, it's one of those things that makes you go hmm. So i researched and I read a study ( restricted,true) that was confirming that. I will have to read more :D I must agree that the way he puts it has its charm

Yes, so this used to be a kind of fad in neuroscience and psychology. Academics would say that you are a left-brain person or a right-brain person, as if you had a dominant hemisphere. While it is true that the hemispheres are lateralized (that means certain functions are specialized to one of the hemispheres), it shouldn't be assumed that these regions are operating independently of one another. When the connection gets severed, the hemispheres can no longer communicate directly. There are even cases where the hemispheres don't agree with each other, e.g. one hand throws out the item the other puts in a shopping basket.

It is an interesting question what it means to have a self and how this is constructed by our brains. There is also a case of conjoined twins who share a thalamic connection to each other's brain. Apparently they can share thoughts, feelings and what the other person sees. This goes to show that the brain can integrate various sources of information, so when the two hemispheres are joined, the two probably form one entity. But who knows...

Those twins, wow, amazing. If you read something like that from a fictional story, you'd think it sounds far fetched, but this is real.

They could be in an unique position to prove or disprove the many philosophical statements made about qualia, which is thought to be definitively subjective to the person and inaccessible. Not anymore, it seems.

Not sure if that solves the qualia debate, but it definitely opens new considerations for selfhood.

This is purely good and my time was worth reading this and gained something. I'm actually of the view that science and technology does have alot to offer humans in near future.

Thanks, I do too. I am just wary of overreliance on fancy data. Some of the work has to be put into asking good questions before we start looking for answers.

Great read. Such an awesome concept to consider, I wish that I had had a greater appreciation for the relationship between neuro and computer science when I was in school, but perhaps I'll still find a way to pivot toward the industry down the line.

I am very glad that you brought up your support of enactivism. Cartesian duality and representationalism still have strongholds in the public understanding of cognition, and I find that in my current role, updating conceptions of consciousness never really benefits any of my geriatric folks that are just trying to find ways to heal. But as a neuroscientist that also studies philosophy, I am fascinated by the discussions surrounding free will and enactivism.

Thanks! I guess some of the outdated Cartesian stuff might still be useful. For instance, if a schizophrenic patient starts blaming themselves for the condition, I could imagine it may feel relieving to think: oh, it's just my brain that's causing me to hallucinate. But for geriatrics I think embodiment is also relevant: a healthy body creates a healthy mind. One of my professors loves the idea that the quadriceps-corticospinal tract-prefrontal circuit increases executive functions. So never stop riding the bike or going up and down some stairs.

I personally have demonstrated some weakness in my own executive functioning and while mindfulness has been hands down my best practice, I love hiking in the woods...perhaps I should look into a trail bike for switching up my daily brain workout haha

Now this is the kind of articles I am waiting for! Great read.

Regarding this statement, let me ask something:

A neuroscientist (or a team of engineers) IMHO can understand a microprocessor. Correct me if I am wrong. Why am I saying so? Because we have lot many examples of teams reverse engineering microprocessors and creating clones. I am sure you might have heard of this. I would like to hear your opinion on this.

Hey,

I am absolutely sure that a neuroscientist can understand how a microprocessor works; this article just shows that the tools of neuroscience aren't apt for reverse engineering. After all, an fMRI machine is an incredible feat of engineering, so there are plenty of neuroscientists with physics and engineering backgrounds. If you have the methods and knowledge of engineering at your disposal, then you can reverse engineer a microprocessor. But these methods aren't applicable to the brain. So I'm pretty sure if the question was: Could an engineer understand the brain? then the results would be just as grim (if not worse). The point is simply that a microprocessor should be easier to decipher than the brain, since it has an intentionally built purpose. This goes to show what a needle-in-the-haystack search modern data-driven neuroscience is. I am pretty sure we need more theories as a compass to help us connect the dots quicker. Hope that answers your queries.

Yes. you answered my query very well. Thank you. Nice to meet you btw.. :) Its great to see quality writers in steemit.


Engineers and neuroscientists should team up to better understand the human brain.

Related to your post ...

Trying to figure out how to best word my question... I study plant behavior (really, the study is called "plant neurobiology" but in order to keep this comment on topic, let's just talk about behavior.

From my somewhat limited research, it seems like there is still a pretty strong focus on human behavior being localized to the brain, even in the face of examples where people have lost large chunks of brain and still been able to operate functions once thought to be found there. My question is if there is a branch of human behavior that believes that it is not localized to the brain? If so, what would it be called? Thanks!

Hey,

In general such notions may be captured within paradigms related to embodiment, enactivism or the extended mind hypothesis. The latter holds that consciousness can be extended even onto interactions with bodies and even devices (like phones).

Still the brain is more or less seen as the central locus of behavior. Of course when you look at complex behaviors of an octopus which is thought to be a function of its peripheral nervous system rather than its central nervous system, one can see that some of the fundamental attributes of behavior could stem from the body (hence embodiment).

Thank you! This gives me a good starting point for my research. I often refer back to the octopus when discussing non centralized processing, happy to see you cite them as well. This is all new to me, in that I have focused more on the plant side, but as I prepare a research study with another scientist that will take us into the realm of awareness and decision making with regards to plants, I am finding the need to better understand these aspects with regards to humans.