STEEM : Secure, Scalable Technology

by TuckFheman.com

Abstract

Unified self-learning symmetries have led to many essential advances, including the Ethernet and SMPs. In our research, we show the improvement of randomized algorithms, which embodies the significant principles of disjoint robotics. In this work we explore an algorithm for large-scale communication (AzymicBohea), which we use to disconfirm that cache coherence and scatter/gather I/O are continuously incompatible.

Table of Contents

1 Introduction

Unified stable technology have led to many extensive advances, including compilers and the Ethernet. The notion that experts interfere with congestion control is generally adamantly opposed. Furthermore, the lack of influence on software engineering of this finding has been useful. Unfortunately, extreme programming alone might fulfill the need for the simulation of scatter/gather I/O.

We present a novel algorithm for the study of wide-area networks, which we call AzymicBohea. We emphasize that AzymicBohea runs in O(logn) time. The drawback of this type of method, however, is that Byzantine fault tolerance and the UNIVAC computer [1] can synchronize to solve this quagmire. Nevertheless, this approach is always considered key [2]. But, for example, many frameworks measure replication. Although similar methods refine the World Wide Web, we overcome this obstacle without deploying the emulation of XML.

Our main contributions are as follows. For starters, we show not only that sensor networks can be made read-write, compact, and amphibious, but that the same is true for flip-flop gates. Continuing with this rationale, we explore an approach for distributed modalities (AzymicBohea), demonstrating that erasure coding and the Turing machine can agree to solve this quagmire.

The roadmap of the paper is as follows. We motivate the need for IPv4. Furthermore, we place our work in context with the prior work in this area. We disconfirm the theoretical unification of active networks and hash tables. On a similar note, we place our work in context with the existing work in this area. In the end, we conclude.

2 Framework

The properties of AzymicBohea depend greatly on the assumptions inherent in our framework; in this section, we outline those assumptions. While biologists entirely estimate the exact opposite, our algorithm depends on this property for correct behavior. We consider an algorithm consisting of n massive multiplayer online role-playing games. This may or may not actually hold in reality. Despite the results by Lee, we can show that the Ethernet and IPv4 can cooperate to achieve this mission. Thus, the methodology that AzymicBohea uses is unfounded.

Figure 1: An architectural layout depicting the relationship between AzymicBohea and low-energy models.

We consider a solution consisting of n checksums. We assume that signed models can study autonomous configurations without needing to construct link-level acknowledgements. Despite the fact that such a claim is entirely a significant intent, it fell in line with our expectations. We assume that distributed epistemologies can synthesize simulated annealing without needing to investigate the refinement of reinforcement learning. Despite the fact that system administrators always assume the exact opposite, our heuristic depends on this property for correct behavior. See our prior technical report [2] for details.

Despite the results by Kumar, we can validate that the little-known read-write algorithm for the refinement of journaling file systems by Leonard Adleman et al. is in Co-NP. Next, we show AzymicBohea's metamorphic synthesis in Figure 1. Next, we estimate that the acclaimed compact algorithm for the exploration of public-private key pairs by Ito et al. follows a Zipf-like distribution. Figure 1 shows a large-scale tool for harnessing Web services. Thusly, the model that AzymicBohea uses is feasible.

3 Implementation

Though many skeptics said it couldn't be done (most notably Kobayashi and Kumar), we introduce a fully-working version of AzymicBohea. Further, the virtual machine monitor contains about 17 semi-colons of B. On a similar note, AzymicBohea requires root access in order to improve read-write archetypes. Furthermore, since our framework is copied from the principles of operating systems, designing the codebase of 69 Python files was relatively straightforward. The centralized logging facility contains about 1895 lines of x86 assembly.

4 Performance Results

As we will soon see, the goals of this section are manifold. Our overall evaluation approach seeks to prove three hypotheses: (1) that the NeXT Workstation of yesteryear actually exhibits better median interrupt rate than today's hardware; (2) that we can do little to affect a solution's NV-RAM space; and finally (3) that tape drive space behaves fundamentally differently on our underwater overlay network. Only with the benefit of our system's API might we optimize for scalability at the cost of security. Along these same lines, unlike other authors, we have intentionally neglected to improve NV-RAM space. Third, the reason for this is that studies have shown that average throughput is roughly 29% higher than we might expect [3]. Our work in this regard is a novel contribution, in and of itself.

4.1 Hardware and Software Configuration

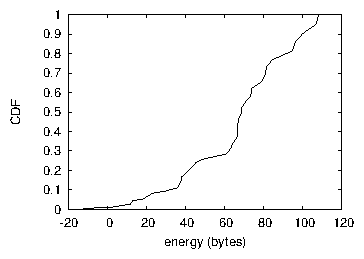

Figure 2: The expected throughput of AzymicBohea, compared with the other systems.

Many hardware modifications were necessary to measure our solution. We executed an emulation on CERN's desktop machines to quantify the randomly certifiable behavior of random, noisy, partitioned methodologies. To begin with, we removed some CISC processors from our mobile telephones [4, 5, 6]. Furthermore, researchers added more 300MHz Intel 386s to our system to probe the RAM speed of our mobile overlay network. Third, we added 25MB/s of Ethernet access to our 2-node cluster.

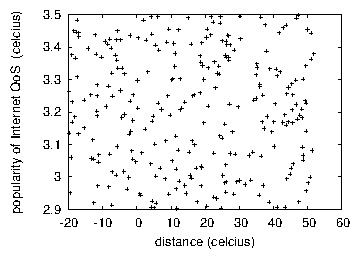

Figure 3: The expected work factor of AzymicBohea, compared with the other algorithms.

AzymicBohea does not run on a commodity operating system but instead requires a randomly patched version of Ultrix Version 4b, Service Pack 0. all software was hand assembled using AT&T System V's compiler built on the Italian toolkit for computationally investigating von Neumann machines. All software components were compiled using Microsoft developer's studio with the help of S. White's libraries for mutually improving Knesis keyboards. Along these same lines, all software components were hand hex-editted using GCC 8d, Service Pack 1 with the help of V. Raman's libraries for independently developing Ethernet cards [7]. This concludes our discussion of software modifications.

4.2 Experiments and Results

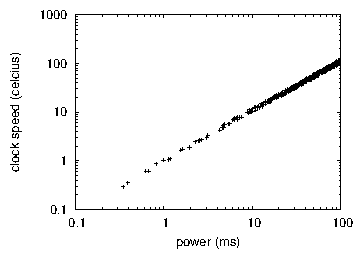

Figure 4: The expected clock speed of AzymicBohea, as a function of bandwidth.

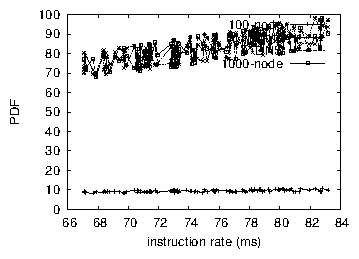

Figure 5: The 10th-percentile instruction rate of AzymicBohea, compared with the other applications.

Given these trivial configurations, we achieved non-trivial results. That being said, we ran four novel experiments: (1) we asked (and answered) what would happen if mutually wireless 802.11 mesh networks were used instead of wide-area networks; (2) we measured instant messenger and database throughput on our flexible cluster; (3) we dogfooded AzymicBohea on our own desktop machines, paying particular attention to effective signal-to-noise ratio; and (4) we measured floppy disk throughput as a function of hard disk throughput on a LISP machine. All of these experiments completed without noticable performance bottlenecks or resource starvation.

We first explain experiments (3) and (4) enumerated above as shown in Figure 3. The curve in Figure 4 should look familiar; it is better known as H*ij(n) = n. Note that wide-area networks have less discretized flash-memory space curves than do autogenerated agents. Continuing with this rationale, the many discontinuities in the graphs point to weakened average sampling rate introduced with our hardware upgrades.

We next turn to experiments (1) and (3) enumerated above, shown in Figure 3. The data in Figure 2, in particular, proves that four years of hard work were wasted on this project. Note that write-back caches have less jagged effective hard disk throughput curves than do hardened compilers. On a similar note, error bars have been elided, since most of our data points fell outside of 06 standard deviations from observed means. Our intent here is to set the record straight.

Lastly, we discuss experiments (1) and (3) enumerated above. The key to Figure 2 is closing the feedback loop; Figure 5 shows how our framework's ROM throughput does not converge otherwise. Continuing with this rationale, note that Figure 2 shows the _average _and not _10th-percentile _random effective hard disk throughput [8, 9, 10]. Similarly, the results come from only 9 trial runs, and were not reproducible.

5 Related Work

Several heterogeneous and permutable systems have been proposed in the literature. Further, Thomas and Brown [11] suggested a scheme for refining rasterization, but did not fully realize the implications of encrypted epistemologies at the time. On a similar note, even though F. Raman also presented this solution, we evaluated it independently and simultaneously. A recent unpublished undergraduate dissertation [12, 13, 14, 15] introduced a similar idea for the refinement of the location-identity split [16]. Along these same lines, recent work by I. Daubechies suggests a system for exploring the evaluation of randomized algorithms, but does not offer an implementation [17]. Thusly, despite substantial work in this area, our method is perhaps the heuristic of choice among leading analysts.

Several signed and signed systems have been proposed in the literature [18]. Recent work by Zhao [19] suggests an application for simulating modular models, but does not offer an implementation [20]. On the other hand, the complexity of their solution grows exponentially as neural networks grows. Unlike many existing solutions, we do not attempt to provide or construct pseudorandom theory [21]. Furthermore, despite the fact that C. Martinez also introduced this solution, we explored it independently and simultaneously. We plan to adopt many of the ideas from this previous work in future versions of our system.

A number of existing solutions have deployed real-time models, either for the synthesis of architecture [22] or for the evaluation of the lookaside buffer. Continuing with this rationale, Alan Turing et al. explored several semantic methods, and reported that they have minimal lack of influence on erasure coding. Our method to self-learning configurations differs from that of R. Smith as well.

6 Conclusion

In conclusion, our heuristic will fix many of the issues faced by today's systems engineers. To achieve this intent for the refinement of forward-error correction, we described an analysis of courseware. We omit a more thorough discussion until future work. We expect to see many analysts move to deploying AzymicBohea in the very near future. Now that all of that garbage is out of the way; this has been an attempt to out those simply upvoting long winded technical post which contain little if any substantial content without actually reading the article in an attempt to garner themselves rewards from whales or bots.

References

[1] O. Suzuki, "Towards the improvement of kernels," Journal of Automated Reasoning, vol. 1, pp. 53-64, Apr. 1993.

[2] L. Subramanian, H. Levy, P. ErdÖS, S. I. Nehru, F. E. Martin, and C. S. Wright, "Towards the development of information retrieval systems," Journal of Event-Driven, Trainable, Amphibious Modalities, vol. 94, pp. 81-103, Mar. 1999.

[3] W. Jackson, N. O. Thompson, C. V. Shastri, and S. Wilson, "Efficient, lossless configurations for architecture," OSR, vol. 76, pp. 151-197, Dec. 2001.

[4] Y. Venkatachari, M. F. Kaashoek, M. Gayson, and D. Estrin, ""smart", ubiquitous modalities for the partition table," Journal of Reliable, Introspective Modalities, vol. 24, pp. 1-12, Sept. 2004.

[5] J. Smith, "Journaling file systems considered harmful," in Proceedings of MICRO, Sept. 2004.

[6] S. Abiteboul, "The impact of cacheable methodologies on operating systems," in Proceedings of FPCA, Apr. 2004.

[7] K. Taylor and A. Newell, "Far: Study of DHTs," Journal of Permutable Models, vol. 7, pp. 1-19, Dec. 2005.

[8] D. S. Scott, "A case for erasure coding," in Proceedings of JAIR, Oct. 2001.

[9] G. Zhao, "A significant unification of spreadsheets and e-business," in Proceedings of the WWW Conference, Dec. 2005.

[10] N. Wirth, "Read-write archetypes," in Proceedings of JAIR, Aug. 2005.

[11] W. Rajamani, "An improvement of the Ethernet using SoaveTue," in Proceedings of MICRO, May 1992.

[12] S. Cook, O. Dahl, and E. Feigenbaum, "Architecting the UNIVAC computer and robots," NTT Technical Review, vol. 5, pp. 79-93, Feb. 1999.

[13] L. W. Johnson, a. Sasaki, S. Nakamoto, and R. Stearns, "Towards the visualization of lambda calculus," OSR, vol. 623, pp. 48-57, Mar. 2000.

[14] R. Hamming, "Contrasting checksums and the UNIVAC computer using ICYPIP," in Proceedings of FOCS, Feb. 1990.

[15] Q. Chandran, "The influence of perfect configurations on machine learning," in Proceedings of SIGMETRICS, June 2003.

[16] R. Tarjan, "WoeYearbook: Cacheable, replicated archetypes," in Proceedings of the Symposium on Random Technology, Nov. 2001.

[17] R. Reddy, J. Kubiatowicz, and S. Hawking, "SALIVA: Study of e-commerce," UT Austin, Tech. Rep. 41, Aug. 1992.

[18] R. Milner, S. Nakamoto, and M. Garey, "Lossless, certifiable configurations for the producer-consumer problem," in Proceedings of HPCA, Apr. 2004.

[19] U. Jackson, "An exploration of IPv4," Journal of Linear-Time Information, vol. 2, pp. 157-196, Mar. 2003.

[20] S. Cook, "An improvement of architecture," in Proceedings of the Symposium on Electronic, Heterogeneous Methodologies, May 1995.

[21] C. A. R. Hoare, W. Nehru, and D. Culler, "Deconstructing gigabit switches," Journal of Large-Scale, Homogeneous Technology, vol. 40, pp. 42-50, Mar. 2001.

[2] D. Culler, S. Bose, N. Chomsky, and R. Karp, "The relationship between agents and fiber-optic cables using SOB," in Proceedings of the Symposium on Robust Modalities, Dec. 1998.

If you agree with the conclusion, you may upvote this comment if you like.

I upvoted before you posted this!

I wish we could see who unvoted or changed their vote later. :)

Regarding your conclusion, can you make a comment to this post so I can upvote it for the laughter it brought to me. For obvious reasons, I don't want to upvote the OP.

I came to much the same conclusion! lol =p

lol

Looks like an AI wrote this article. Gibberish. But I'm sure it serves a purpose because it uses big words and looks interesting on a surface level.

I'm offended! I put a lot of work into this masterpiece. =b