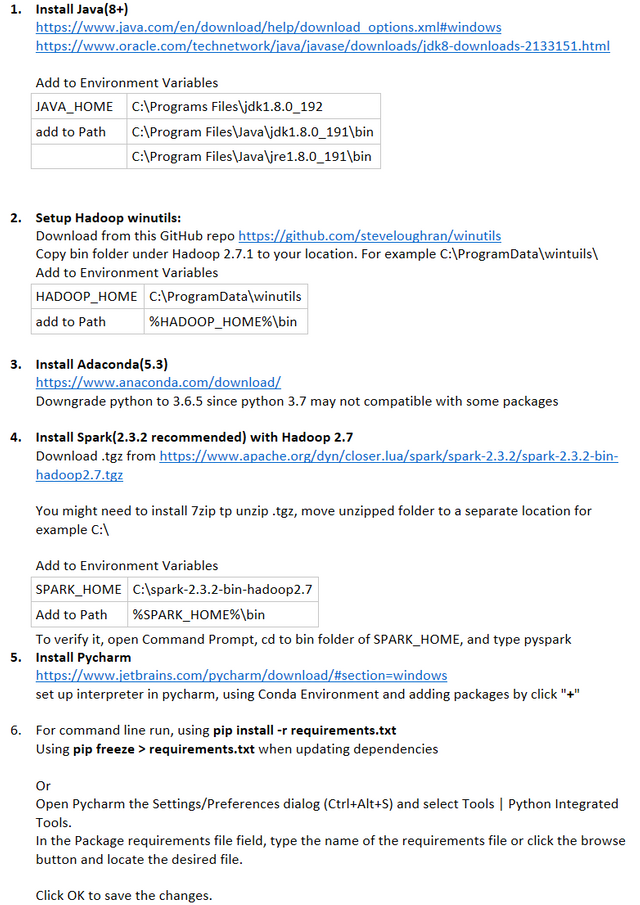

Set up a local development environment for PySpark on Windows

Install Java (8+)

https://www.java.com/en/download/help/download_options.xml#windows

https://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

Add to Environment Variables

JAVA_HOME C:\Program Files\Java\jdk1.8.0_191 (use the folder of the JDK version you actually installed)

Add to Path C:\Program Files\Java\jdk1.8.0_191\bin

C:\Program Files\Java\jre1.8.0_191\bin

Set up Hadoop winutils

Download winutils from this GitHub repo: https://github.com/steveloughran/winutils

Copy the bin folder under Hadoop 2.7.1 to a location of your choice, for example C:\ProgramData\winutils, so that winutils.exe ends up in C:\ProgramData\winutils\bin.
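After copying, it is worth confirming the layout matches what HADOOP_HOME will point to: winutils.exe must sit directly inside a bin subfolder. This is a minimal sketch; has_winutils is a hypothetical helper name, and the path is just the example location used above.

```python
from pathlib import Path


def has_winutils(hadoop_home):
    """Return True if winutils.exe exists at <hadoop_home>/bin/winutils.exe."""
    return (Path(hadoop_home) / "bin" / "winutils.exe").is_file()


# Example folder from the step above (an assumption); adjust it if you used another location.
print(has_winutils(r"C:\ProgramData\winutils"))
```

If this prints False, the bin folder was most likely copied one level too deep or too shallow.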

Add to Environment Variables

HADOOP_HOME C:\ProgramData\winutils

Add to Path %HADOOP_HOME%\bin

Install Anaconda (5.3)

https://www.anaconda.com/download/

Downgrade Python to 3.6.5, since Python 3.7 may not be compatible with some packages (for example, run conda install python=3.6.5 in the Anaconda Prompt).

Install Spark (2.3.2 recommended) with Hadoop 2.7

Download .tgz from https://www.apache.org/dyn/closer.lua/spark/spark-2.3.2/spark-2.3.2-bin-hadoop2.7.tgz

You might need to install 7-Zip to unpack the .tgz file (7-Zip first extracts a .tar, which you then extract again). Move the unpacked folder to a separate location, for example C:\
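If you would rather not install 7-Zip, Python's standard tarfile module (available from the Anaconda install above) can unpack the .tgz in one step. This is a sketch, not the method used in this post; extract_tgz is a hypothetical helper name and the download path in the comment is an assumption.

```python
import tarfile
from pathlib import Path


def extract_tgz(archive, dest):
    """Unpack a .tgz archive into dest and return the sorted top-level entry names."""
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(str(archive), "r:gz") as tf:
        if hasattr(tarfile, "data_filter"):
            # Newer Pythons support extraction filters; "data" rejects unsafe paths like "../".
            tf.extractall(str(dest), filter="data")
        else:
            tf.extractall(str(dest))
        top_level = sorted({name.split("/")[0] for name in tf.getnames()})
    return top_level


# Example (hypothetical download location); this would create C:\spark-2.3.2-bin-hadoop2.7:
# extract_tgz(r"C:\Users\me\Downloads\spark-2.3.2-bin-hadoop2.7.tgz", "C:\\")
```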

Add to Environment Variables

SPARK_HOME C:\spark-2.3.2-bin-hadoop2.7

Add to Path %SPARK_HOME%\bin
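Before trying the shell, a short Python check can catch a missing or misspelled variable. It is a sketch that assumes the three variable names used in this post (JAVA_HOME, HADOOP_HOME, SPARK_HOME); check_env is a hypothetical helper. Environment variable changes only reach newly started programs, so run it from a fresh Command Prompt.

```python
import os
import shutil

REQUIRED_VARS = ("JAVA_HOME", "HADOOP_HOME", "SPARK_HOME")
# Commands that should resolve through Path, with a hint about which folder provides them.
REQUIRED_COMMANDS = (("java", "the JDK bin folder"), ("pyspark", r"%SPARK_HOME%\bin"))


def check_env(environ=os.environ):
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    for name in REQUIRED_VARS:
        value = environ.get(name)
        if not value:
            problems.append(f"{name} is not set")
        elif not os.path.isdir(value):
            problems.append(f"{name} points to a missing folder: {value}")
    path = environ.get("PATH", "")
    for command, folder in REQUIRED_COMMANDS:
        # On Windows, shutil.which also tries the PATHEXT extensions, so pyspark.cmd is found.
        if shutil.which(command, path=path) is None:
            problems.append(f"{command} is not on Path (add {folder})")
    return problems


if __name__ == "__main__":
    issues = check_env()
    for issue in issues:
        print("-", issue)
    print("All checks passed" if not issues else f"{len(issues)} problem(s) found")
```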

To verify it, open Command Prompt, cd to the bin folder of SPARK_HOME, and type pyspark; the PySpark shell should start.

Install PyCharm

https://www.jetbrains.com/pycharm/download/#section=windows

Set up the interpreter in PyCharm using a Conda Environment, and add packages by clicking "+".

For command-line runs, use pip install -r requirements.txt to install the dependencies.

Use pip freeze > requirements.txt to update the file after changing dependencies.

Alternatively, in PyCharm open the Settings/Preferences dialog (Ctrl+Alt+S) and select Tools | Python Integrated Tools.

In the Package requirements file field, type the name of the requirements file or click the browse button and locate the desired file.

Click OK to save the changes.
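Once the interpreter is configured, a small script can confirm that PySpark actually runs from PyCharm. This sketch assumes the pyspark package is available in the selected Conda interpreter (for instance, added with the "+" button); smoke_test and python_version_warning are hypothetical helper names, and the version check simply mirrors this post's Python 3.6 recommendation.

```python
import sys


def python_version_warning(version_info=sys.version_info):
    """Return a warning string if the interpreter is not Python 3.6, else None."""
    major, minor = version_info[0], version_info[1]
    if (major, minor) != (3, 6):
        return "Python %d.%d detected; this guide recommends 3.6 for Spark 2.3.2" % (major, minor)
    return None


def smoke_test():
    """Start a local SparkSession, count a tiny DataFrame, then stop Spark."""
    # Imported here so the version check can still be used if pyspark is missing.
    from pyspark.sql import SparkSession

    warning = python_version_warning()
    if warning:
        print("Warning:", warning)

    spark = (
        SparkSession.builder
        .master("local[*]")  # run Spark locally, using all available cores
        .appName("local-smoke-test")
        .getOrCreate()
    )
    try:
        df = spark.createDataFrame([(1, "a"), (2, "b"), (3, "c")], ["id", "letter"])
        print("Spark version:", spark.version)
        print("Row count:", df.count())
    finally:
        spark.stop()
```

Calling smoke_test() from a script or the PyCharm Python console should print the Spark version followed by "Row count: 3". If the import fails, the interpreter PyCharm is using does not have pyspark installed.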
