Driverless Cars, The Moral Dilemma

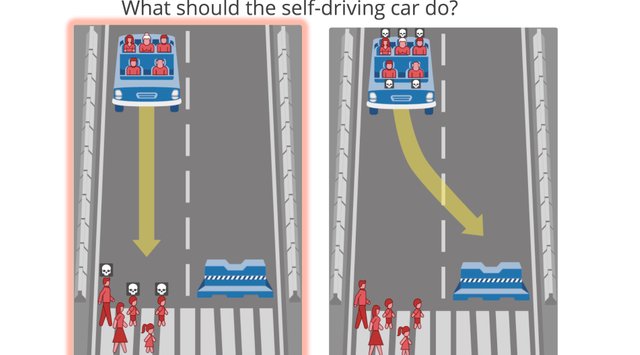

Who makes the decision when a driverless car is in a situation where there is a choice of who gets killed in an impending crash?


The quest for Level 5

Self-driving cars have clocked up millions of kilometres over the last few years with very few crashes. Statistics generally show that 90% of car accidents are caused by the driver, so would a driverless car system that has proved to be significantly safer than a driven car be preferable?

The problem is that the statistics, although very encouraging, can't tell the whole story. Typically these tests aren't conducted in driving rain on a dark and unsafe road. At the same time, an autonomous vehicle will not suffer from fatigue, sore eyes, lack of concentration and all the other weaknesses that mere mortals possess. Also, a study has shown that humans cause the vast majority of accidents involving autonomous cars.

The rate of technological improvement in this area is accelerating and there is no doubt that when autonomous vehicles reach the required threshold of safety—the so-called Level 5—there will be a rapid uptake of them.

Who to blame?

Cars at Level 5 would drive at least as well as a human and would react extremely quickly to dangerous situations and obstacles such as swerving cars and pedestrians. But as we know, not all potential accidents can be avoided. And sometimes we human drivers have to make instant value judgements where there is some choice. Do I plough into the group of pedestrians or risk my own life and crash into the concrete barrier? I'm not sure much thinking can be done in those situations, but an autonomous vehicle would have to be pre-programmed to react in a certain way.

This is the stuff that makes philosophers very excited. The classic “moral dilemma” is often called the trolley problem. It's a thought experiment in ethics with the general form of:

You see a runaway trolley moving toward five tied-up (or otherwise incapacitated) people lying on the tracks. You are standing next to a lever that controls a switch. If you pull the lever, the trolley will be redirected onto a side track, and the five people on the main track will be saved. However, there is a single person lying on the side track. You have two options:

Do nothing and allow the trolley to kill the five people on the main track.

Pull the lever, diverting the trolley onto the side track where it will kill one person.

Which is the more ethical option?

(Wikipedia)

A sample scenario from the Moral Machine: should the user hit the pedestrians or crash into the barrier?

The Moral Machine

To study the problem in the real world, a group of scientists launched the Moral Machine, an online survey that asks people variants of the trolley problem to explore moral decision-making regarding autonomous vehicles. Volunteers have to judge between two bad courses of action in a number of scenarios where driverless cars are involved in unavoidable accidents. Participants have to decide which lives the vehicle would spare or take, based on factors such as the gender, age, fitness and even species of the potential victims.

You can do the test here. (A tip: click on Show Description for each choice to fully understand the situation.)

The experiment collected some 40 million decisions from people in 233 countries and territories, making it one of the largest moral surveys ever conducted.

The study, published in a recent paper in Nature, turned up some intriguing results.

It turns out there are distinct regional differences in the choices made. The regions are:

- “Western,” predominantly North America and Europe, where the researchers argue morality is mainly influenced by Christianity

- “Eastern,” consisting of Japan, Taiwan, and Middle Eastern countries influenced by Confucianism and Islam, respectively

- “Southern” countries including Central and South America, alongside those with a strong French cultural influence.

These are the strongest preferences associated with each region and with certain cultural features:

| Region/Culture | More likely to kill | More likely to spare |
|---|---|---|
| “Western” | Old people | Young people |
| “Eastern” | Young people | Old people |
| “Southern” | Fat people | Athletic people |
| High inequality | Homeless people | Business executives |
| Strong rule of law | Jaywalkers | Lawful pedestrians |

But in most scenarios, there was almost universal agreement:

| More likely to kill | More likely to spare |
|---|---|
| Individuals | Groups |
| Men | Women |
| Cats | Dogs |
| Criminals | Dogs |

This led to the conclusion from one of the researchers that:

Philosophers and lawyers … often have very different understandings of ethical dilemmas than ordinary people do.


The moral calculation

The hard choices will eventually have to be turned into algorithms and programmed into autonomous vehicle systems. The underlying moral calculations will have to be made explicit. Guidelines are already being drawn up, the most comprehensive of them by Germany.

The Germans took the interesting position that no preference should be given among potential victims on the basis of characteristics such as age, gender or build. In fact, they specifically outlaw it.

This seems to me to be the most rational way to approach it. If vehicles were all programmed with the same preferences about whom to spare, life could become very dangerous for fat people (or cats). There is no doubt that customers would want the car companies to prioritise their lives over those of pedestrians, but that would make it much more dangerous to be a pedestrian.
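To show what "making the moral calculation explicit" might look like, here is a minimal Python sketch of an attribute-blind rule. It is only an illustration under my own assumptions, not the wording of the German guidelines and not any manufacturer's code. The names `Person`, `Outcome` and `choose_outcome` are hypothetical, and the rule itself (pick the outcome that harms the fewest people, ignore who they are) is just one possible reading of "no preference".

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class Person:
    # Hypothetical attributes. They are stored only to make the point
    # that choose_outcome() below never reads them.
    age: int
    gender: str


@dataclass(frozen=True)
class Outcome:
    name: str
    people_harmed: Tuple[Person, ...]


def choose_outcome(outcomes: Sequence[Outcome]) -> Outcome:
    """Return the outcome that harms the fewest people.

    Only the head count is used. On a tie, the first outcome listed
    wins, so the caller can put the default path (e.g. staying in
    lane) first.
    """
    if not outcomes:
        raise ValueError("at least one outcome is required")
    # min() returns the first minimal element, which gives the tie rule.
    return min(outcomes, key=lambda o: len(o.people_harmed))


# Usage: two pedestrians ahead, one person on the swerve path.
stay = Outcome("stay in lane", (Person(70, "f"), Person(8, "m")))
swerve = Outcome("swerve", (Person(35, "m"),))
print(choose_outcome([stay, swerve]).name)  # swerve
```

Even this toy version has to take a position: counting heads is itself a moral choice, and so is the tie-breaking rule. That is exactly the kind of decision that would have to be written down rather than left to instinct.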

Will we be ready to cede these ethical, life-and-death decisions to machines?

References:

Singularity Hub: Building a Moral Machine: Who Decides the Ethics of Self-Driving Cars?

Nature: Self-driving car dilemmas reveal that moral choices are not universal

The New Yorker: A Study on Driverless-Car Ethics Offers a Troubling Look Into Our Values

Wikipedia: Trolley problem

Fortune: Humans—Not Technology—Are the Leading Cause of Self-Driving Car Accidents in California

Also posted on Weku, @tim-beck, 2019-01-31

Ah... these "moral machine" type questions are always a lot of fun... well "interesting to debate" at the very least. I know it's caused more than a fair number of "debates" in my circles.

Thanks for an interesting read! \m/

Yes, it is interesting. But usually these discussions don't actually matter. This one does: will my car kill me to save someone else? 😓

The moral debate will never come to a conclusion; it will depend on how the machine is programmed. The thought behind the machine's reaction is human, so it will boil down to region.

I think the Germans are right: there should be no discrimination between potential victims. The only (moral?) position to program into the machine would be to harm fewer people. But with regional preferences it could be like religion: in some areas of the world a particular orthodoxy is enforced regardless of how rational it is.