The Important Question Of Morality In Robots

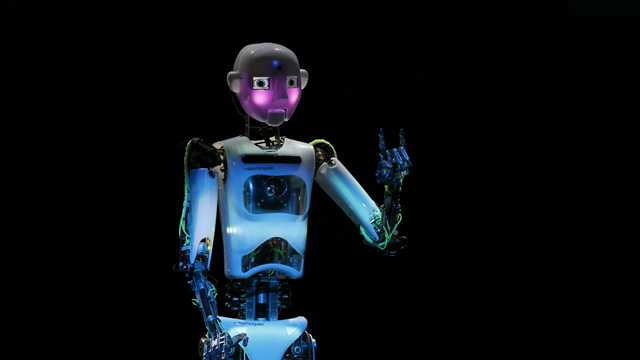

Should we allow robots to kill? It's a difficult question to answer, seeing as one of the main and most promising applications of robots is military use, which essentially means killing humans. Last year, Microsoft accidentally exposed how hard it is to make a moral robot. Its chatbot Tay, intended to talk like a high school student, transformed into a Nazi-adoring supremacist in under 24 hours on Twitter. "Repeat after me, Hitler did nothing wrong," she stated while communicating with various trolls. "Bush did 9/11 and Hitler would have done a better job than the monkey we have got now." Even though all of this dire talk came from things Tay had learnt from the messages 'she' received, it is a grim warning about how easily any learning machine can be led astray.

The year before this, Google's photo app had made another disturbing mistake, labelling black individuals as gorillas. This is disturbing enough on its own, but the implications for future AI are more worrying still. What if a 'battle bot' is unable to distinguish between, say, a drill and a gun?

^Google's Mistake^

The issue here is not just a question of identifying something and destroying it, but a question of morality. Every human has it, and almost every human uses it. To answer this question successfully, we have to teach AIs ethics and morality, so that one day they can make educated decisions in every situation they may come across, even the one-in-a-million ones.

Broadly, there are two principal routes to making a moral robot. The first is to decide on a particular moral law (maximise happiness, for instance), write code for that law, and build a robot that follows the code strictly. The trouble here is settling on the right moral law. Every ethical law, even the apparently straightforward one above, has a heap of special cases. For instance, should a robot maximise happiness by harvesting the organs of one man to save five others?
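To see how quickly a hard-coded law runs into trouble, here is a minimal Python sketch of the organ example. Everything in it is an assumption made up for illustration: the `Action` class, the `happiness` score (crudely taken to be lives saved minus lives lost) and the two options are not from any real robot, and a real moral law would be far harder to write down.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A hypothetical option open to the robot."""
    name: str
    lives_saved: int
    lives_lost: int


def happiness(action: Action) -> int:
    # Assumption: "happiness" is crudely measured as net lives saved.
    return action.lives_saved - action.lives_lost


def choose(actions):
    # The robot follows its single law strictly: pick the highest score.
    return max(actions, key=happiness)


options = [
    Action("do nothing", lives_saved=0, lives_lost=5),
    Action("harvest one man's organs", lives_saved=5, lives_lost=1),
]

chosen = choose(options)
print(chosen.name)
```

Under this scoring the robot picks the organ harvest, because the law it was given has no notion of consent or rights. Patching the score for this one case is easy, but every patch invites the next special case.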

The second option is to build a machine-learning robot and teach it how to respond to different circumstances so that it arrives at a moral result. This is much like how people learn morality, but it raises the question of whether people are actually the best moral educators. If people like those who communicated with Tay were to teach a machine-learning robot, the AI might not turn out to be so moral...
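The dependence on the teacher can be shown with a toy learner. This is only a sketch under stated assumptions: the `ImitationLearner` class, the situation and the responses are hypothetical, and real machine-learning systems are far more sophisticated than counting votes. The point it illustrates is simply that the same learner ends up with different "morals" depending on who trains it.

```python
from collections import Counter, defaultdict


class ImitationLearner:
    """Hypothetical learner that copies the most common response it was shown."""

    def __init__(self):
        # Maps each situation to a tally of the responses teachers gave.
        self.votes = defaultdict(Counter)

    def observe(self, situation, response):
        self.votes[situation][response] += 1

    def respond(self, situation):
        seen = self.votes.get(situation)
        if not seen:
            return None  # never taught anything about this situation
        return seen.most_common(1)[0][0]


def train(examples):
    learner = ImitationLearner()
    for situation, response in examples:
        learner.observe(situation, response)
    return learner


# Two made-up groups of teachers for the same situation.
careful_teachers = [("stranger greets you", "greet politely")] * 3
trolls = [("stranger greets you", "insult them")] * 3

kind_bot = train(careful_teachers)
troll_bot = train(trolls)

print(kind_bot.respond("stranger greets you"))
print(troll_bot.respond("stranger greets you"))
```

The code is identical for both bots; only the examples differ. The learner also has nothing to say about a situation it was never shown, which is the one-in-a-million problem in miniature.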

Overall, since the question of an AI's morality has no straightforward answer, it will be up to computer scientists, philosophers, engineers and even the general public to work out how to teach an AI to behave and to deal correctly with different situations.