Part 2: Smile Recognition in a Real-Time Video Feed using TensorFlow and OpenCV

Hello World!

This is the second and final part of the real-time smile recognition series. In the first part, I explained how to train a logistic/softmax regression model using TensorFlow to predict whether the input is a neutral face or a smiling face.
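As a quick refresher, a softmax regression model computes class probabilities as a softmax over a linear function of the input pixels. The NumPy sketch below shows that forward pass. It assumes a weight matrix `W` of shape (pixels, 2), a bias `b` of shape (2,), a hypothetical class order of neutral then smiling, and a hypothetical 32×32 input; it illustrates the technique and is not the code from the first part.

```python
import numpy as np

# Hypothetical class order; it must match the label encoding used in training.
CLASSES = ("neutral", "smiling")


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def predict(x: np.ndarray, W: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Softmax regression: probabilities = softmax(x @ W + b).

    x: (n_samples, n_pixels) flattened, normalized images
    W: (n_pixels, n_classes) weights
    b: (n_classes,) biases
    """
    return softmax(x @ W + b)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_pixels = 32 * 32  # hypothetical ROI size
    W = rng.normal(scale=0.01, size=(n_pixels, len(CLASSES)))
    b = np.zeros(len(CLASSES))
    x = rng.random((1, n_pixels))
    probs = predict(x, W, b)
    print(dict(zip(CLASSES, probs[0].round(3))))
```

Each row of the result sums to 1, so the "score for both cases" mentioned in the steps is simply this pair of probabilities.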

In this post, I am going to explain how to load the saved model, find the face in the video feed using the Dlib module, extract the mouth area (Region Of Interest), pass it through the saved model to run the prediction, and show the result.
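The overall loop can be sketched as follows, assuming OpenCV (`cv2`) and Dlib's Python bindings are installed along with Dlib's `shape_predictor_68_face_landmarks.dat` model file. Because the details of restoring the TensorFlow model depend on how it was saved in the first part, the classifier is passed in as a hypothetical `classify` callable that takes the mouth crop and returns a label. `process_frame` is likewise a hypothetical helper, kept separate from the camera code so the per-frame logic can be checked with fake detectors.

```python
from typing import Callable, Iterable, List, Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixel coordinates


def process_frame(gray: np.ndarray,
                  detect_faces: Callable[[np.ndarray], Iterable],
                  mouth_box: Callable[[np.ndarray, object], Box],
                  classify: Callable[[np.ndarray], str]) -> List[Tuple[Box, str]]:
    """Detect faces, crop each mouth ROI and classify it.

    Returns one (mouth_box, label) pair per detected face.
    """
    results = []
    for face in detect_faces(gray):
        x0, y0, x1, y1 = mouth_box(gray, face)
        roi = gray[y0:y1, x0:x1]
        if roi.size == 0:  # landmarks fell outside the frame
            continue
        results.append(((x0, y0, x1, y1), classify(roi)))
    return results


def main(classify: Callable[[np.ndarray], str],
         predictor_path: str = "shape_predictor_68_face_landmarks.dat") -> None:
    # Imported here so process_frame can be used without OpenCV/Dlib installed.
    import cv2
    import dlib

    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor(predictor_path)

    def mouth_box(gray: np.ndarray, rect) -> Box:
        shape = predictor(gray, rect)
        # Points 48-67 of the 68-point model outline the mouth.
        xs = [shape.part(i).x for i in range(48, 68)]
        ys = [shape.part(i).y for i in range(48, 68)]
        h, w = gray.shape
        return (max(min(xs), 0), max(min(ys), 0),
                min(max(xs) + 1, w), min(max(ys) + 1, h))

    cap = cv2.VideoCapture(0)  # default webcam
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            for (x0, y0, x1, y1), label in process_frame(
                    gray, lambda g: detector(g, 0), mouth_box, classify):
                cv2.rectangle(frame, (x0, y0), (x1, y1), (0, 255, 0), 2)
                cv2.putText(frame, label, (x0, max(y0 - 10, 0)),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 0), 2)
            cv2.imshow("Smile recognition", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):  # press q to quit
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

Keeping detection, cropping and classification behind plain callables means the TensorFlow part can be swapped out without touching the camera loop.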

About Dlib:

Dlib is a modern C++ toolkit containing machine learning algorithms and tools for creating complex software in C++ to solve real world problems. See http://dlib.net for the main project documentation and API reference.

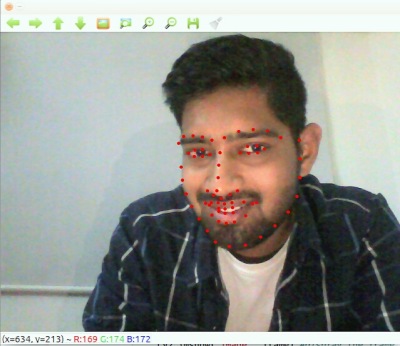

Dlib facial landmarks:
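Dlib's pre-trained `shape_predictor_68_face_landmarks.dat` model returns 68 points per face, and in that scheme points 48 to 67 outline the mouth. The sketch below turns those points into a padded bounding box clipped to the image. `mouth_roi` is a hypothetical helper, not part of Dlib, and the 15% padding is an arbitrary choice; with Dlib, the points would come from `shape.part(i).x` and `shape.part(i).y` on the result of `predictor(gray, rect)`.

```python
from typing import Sequence, Tuple

Point = Tuple[int, int]

# In the 68-point scheme used by shape_predictor_68_face_landmarks.dat,
# indices 48-67 outline the mouth (half-open range below).
MOUTH_START, MOUTH_END = 48, 68


def mouth_roi(landmarks: Sequence[Point], img_w: int, img_h: int,
              pad: float = 0.15) -> Tuple[int, int, int, int]:
    """Return (x0, y0, x1, y1) of a padded box around the mouth landmarks.

    The box is clipped to the image and can be used as frame[y0:y1, x0:x1].
    """
    if len(landmarks) != 68:
        raise ValueError("expected 68 landmarks")
    mouth = landmarks[MOUTH_START:MOUTH_END]
    xs = [p[0] for p in mouth]
    ys = [p[1] for p in mouth]
    x0, x1 = min(xs), max(xs)
    y0, y1 = min(ys), max(ys)
    # Pad by a fraction of the box size so the lips are not cut off.
    dx = int(round((x1 - x0) * pad))
    dy = int(round((y1 - y0) * pad))
    # +1 makes the right/bottom edges exclusive, matching slice semantics.
    return (max(x0 - dx, 0), max(y0 - dy, 0),
            min(x1 + dx + 1, img_w), min(y1 + dy + 1, img_h))
```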

Steps:

- Load the saved model

- Start a TensorFlow session and initialize the camera to read frames

- Use the Dlib library to find the face in the image, then use facial landmark detection to get the Region Of Interest (ROI) of the mouth area.

- Once we have the mouth area, normalize all the pixel values and flatten the image

- Pass the flattened image to the saved model; you'll get a score for each of the two classes (neutral and smiling)
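The last two steps can be sketched in NumPy as below. The post does not say which normalization it uses, so dividing by 255 to scale pixels to [0, 1] is an assumption, as are the 32×32 ROI size and the class order; whatever you choose must match the preprocessing used at training time. `resize_nearest` is a simple stand-in for `cv2.resize` so the example runs with NumPy alone.

```python
import numpy as np

# Hypothetical ROI size (height, width); it must match the training images.
ROI_SIZE = (32, 32)
CLASSES = ("neutral", "smiling")  # hypothetical label order


def resize_nearest(img: np.ndarray, size: tuple) -> np.ndarray:
    """Nearest-neighbour resize of a 2-D grayscale image (stand-in for cv2.resize)."""
    h, w = img.shape
    out_h, out_w = size
    rows = np.arange(out_h) * h // out_h  # source row for each output row
    cols = np.arange(out_w) * w // out_w  # source column for each output column
    return img[rows[:, None], cols[None, :]]


def preprocess(mouth: np.ndarray, size: tuple = ROI_SIZE) -> np.ndarray:
    """Resize the mouth crop, scale pixels to [0, 1] and flatten to (1, H*W)."""
    resized = resize_nearest(mouth, size).astype(np.float32)
    return (resized / 255.0).reshape(1, -1)


def decide(scores) -> tuple:
    """Pick the class with the highest score and return (label, score)."""
    scores = np.asarray(scores).ravel()
    best = int(np.argmax(scores))
    return CLASSES[best], float(scores[best])
```

In the real loop, the output of `preprocess` is what you would feed to the restored model's input placeholder with `sess.run`, then pass the returned scores to `decide`; the tensor names depend on how the model was saved in the first part.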

All of the above steps are explained in detail in the video below:

If you like the video, please give it a thumbs up, and subscribe to the channel if you want more like this: https://www.youtube.com/channel/UC97_pHqfRIp6h-E30jj5JRw
