21st Century Problems: How NOT To Fall In Love With A Chatbot

(www.outerplaces.com)

Image Credit: Universal Pictures

https://www.outerplaces.com/science/item/16872-dont-fall-in-love-with-a-chatbot-infographic

21st Century Problems: How NOT To Fall In Love With A Chatbot by Matthew Loffhagen

If Ex Machina has taught us anything, besides the fact that Oscar Isaac has some incredible dance moves in his arsenal of talents, it's that you should never fall in love with a robot.

As time goes on, this sage advice is going to get harder and harder to follow. As AI chatbots grow more sophisticated, philosophers expect that humans will be fooled more and more often by artificial personalities masquerading as human beings.

(www.giphy.com)

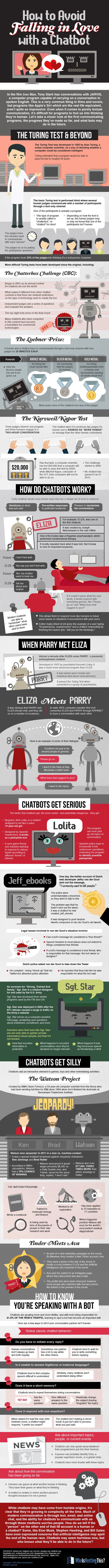

Luckily, Who Is Hosting This has come up with a handy infographic that breaks down the history of chatbots, and the ways humans can avoid being fooled by an artificial friend pretending to be a potential suitor.

There's some really interesting stuff in the history of chatbots - for example, one of the earliest bots, Eliza, was built all the way back in the 1960s, and was designed to be a therapist.

Then, in 1972, someone built a paranoid schizophrenic chatbot inventively named Parry. Naturally, Eliza was put to use "treating" Parry, leading to a pretty pointless non-sequitur discussion between the two that didn't exactly provide the breakthrough that the robotics experts were hoping for.

As it turns out, bots have managed to pass the Turing Test in some form or another fairly often in the history of artificial intelligence, with machines managing to convince the majority of testers that they're more human than the other, flesh-and-blood chat partners. This is often achieved by programming around the limitations of chatbots, for example by giving them a reason to have poor sentence structure or comprehension.

It's not uncommon, for example, for AI programmers to create chatbots that pretend to be young, non-native English speakers, as a proclivity for informal language and an unfamiliarity with the language they're typing in work as a suitable cover for the fact that testers are actually talking to a toaster with access to a thesaurus.

According to the infographic, even Ex Machina, a cautionary tale about humans falling in love with robots, led to a few cases of robotic heartbreak, as the promotional campaign for the film involved setting a bot called Ava loose on Tinder to give users false hope of ever finding someone to love.

(www.giphy.com)

Never mind - it's not like Tinder users are actually looking for a solid long-term romance most of the time anyway!

So how does the infographic suggest you test your online friends' humanity? Apparently, the trick is to look closely at their responses. If their language is overly formal, or they struggle with slang, they might be fake. Timing is another tell: replying to messages too quickly is suspicious, and if they always wait patiently for you to write first, that suggests they're only programmed to send a message when you talk to them.

Another good clue is whether or not the "person" changes the subject a lot. The example given is John Grisham: if a bot isn't familiar with Grisham's books, it may try to start talking about ice cream instead.

Alternatively, ask a suspected bot what it would do if it came across a tortoise lying on its back in the middle of the desert. If the chatbot attempts to shoot you in the shoulder, it's probably not human.

Ultimately, this is good advice, if somewhat limited in its long-term effectiveness. It won't be long before bots become even more sophisticated, and more capable of manipulating our puny human emotions. Bots can already spot patterns in human behavior and preferences that we don't recognize in ourselves, so given enough time, these programs will succeed in fooling us into loving them.

Who knows? More advanced bots might already exist, writing articles on Outer Places to throw readers off the scent.

You never know...

(www.whoishostingthis.com)

(www.steemitimages.com)