Programming - OpenMP API Introduction

[Image 1]

Introduction

Hey it's a me again @drifter1! Today marks the start of a new series of Programming and more specifically Parallel Programming! I already covered basic multi-threading and multi-process parallelization in Networking, and also made a simple example of MPI (Message Passing Interface) for Distributed Programming (which will also be rebooted quite soon!).

As you might have guessed from the title already, this Parallel Programming series is about the OpenMP programming interface. It's a much easier way of getting parallelization up and running quickly, as simple directives to the compiler do the job for us! Today will be a simple introduction, and afterwards we will dive more deeply into each directive and its purpose. And don't worry, I will also write quite a few basic and more advanced example programs, so that everyone can follow along. So, without further ado, let's go straight into it!

Requirements - Prerequisites

- Basic understanding of the Programming Language C, or even C++

- Familiarity with Parallel Computing/Programming in general

- Compiler

- Linux users: GCC (GNU Compiler Collection) installed

- Windows users: MinGW32/64. To avoid unnecessary problems, I suggest using a Linux VM, Cygwin or even a WSL environment on Windows 10

- macOS users: Install GCC using Homebrew, or use the Clang compiler together with the libomp runtime (Apple's Clang does not ship an OpenMP runtime)

- For more Compilers & Tools check out: https://www.openmp.org//resources/openmp-compilers-tools/

OpenMP API

OpenMP (from Open Multi-Processing) is a parallel programming API (Application Programming Interface). It should not be confused with Open MPI, which is an implementation of the Message Passing Interface (MPI) standard. The API is portable and scalable, giving parallel programmers a simple and flexible interface for developing parallel applications in C/C++ and Fortran. In this series I will be using the C/C++ API, though the directives and runtime library routines (functions) are very similar in both languages.

The OpenMP API is explicitly meant for multi-threaded, shared memory parallelism, and only that. It's not meant for use in distributed systems by itself, doesn't guarantee the most efficient use of shared memory, doesn't check for data dependencies, data conflicts, race conditions or deadlocks, and doesn't handle parallel I/O. The programmer is responsible for the synchronization and for defining the parallel code correctly.

So, what exactly does OpenMP provide? The API is made up of three primary components:

- Compiler Directives - Apply to the structured block that follows them to define parallel behavior

- Runtime Library Routines - Various functions that can be called from a parallel or non-parallel region

- Environment Variables - Variables such as OMP_NUM_THREADS that control runtime behavior (for example, the number of threads) without recompiling

Abstraction Benefits

In general, parallel programs can be written using:

- Extensions for classic sequential or serial programming languages

- New programming languages that support parallelism natively

- Automatic parallelization

OpenMP uses thread-based parallelism under the hood. A thread is the smallest unit of processing that the operating system can schedule, and it can be thought of as a subroutine that runs autonomously. Threads exist within the resources of a process and cease to exist when the process terminates. The number of threads that can truly run at the same time equals the number of hardware threads (cores, or logical cores) of the CPU. A program may create more threads than that, but they then have to share the cores, and the application itself defines how it wants to use them. The default number of threads is implementation-defined: GCC's libgomp, for example, uses one thread per available logical core unless told otherwise.

Additionally, OpenMP's parallelism is explicit, which means that the parallelization is not automatic: the API gives the programmer full control over it. The parallelization can be simple, with compiler directives just inserted into a serial program to make specific sections parallel, define critical sections and so on. On the other hand, it can also become quite complex, with runtime library calls (subroutines) that set up multiple levels of parallelism, locks and even more.

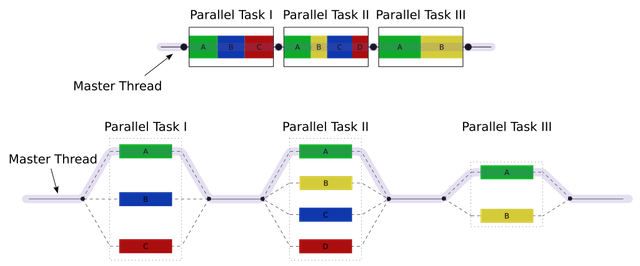

Fork-Join Model

All OpenMP programs begin as a single process with a master thread. The master thread executes sequentially (serial code) until the first parallel region is encountered. At that point, the master thread creates a team of parallel threads, which is called the FORK. The statements inside the parallel region are then executed in parallel by the threads of the team. When the threads complete the statements, they synchronize (an implicit barrier) and terminate, leaving only one thread, the master thread. This is the JOIN behavior.

Inside those parallel regions, most data is shared by default, which means that all threads can access this shared data simultaneously. If this behavior is not desired, the programmer can explicitly specify how the data should be "scoped".

General Directive Format-Syntax

The general format of a directive in the C/C++ implementation of OpenMP is as follows:

```c
#pragma omp directive-name [clause ...]
```

Those directives can be used for various purposes:

- Defining a parallel region

- Dividing blocks of code among threads

- Distributing loop iterations between threads

- Serializing sections of code

- Synchronization of work among threads

Compiling an OpenMP Program

At the time of writing, the latest version of OpenMP is 5.0. Initial support for OpenMP 5.0 in C/C++ was added in GCC 9, GCC 10 added more features, and GCC 11 (currently in development) should make even more of OpenMP 5.0 accessible. GCC 11 will also bring full support for OpenMP 4.5 in Fortran. As you can tell, the Fortran implementation lags quite a bit behind. Either way, the differences between versions 4.5 and 5.0 will not matter much for a simple introductory series.

The general code structure of an OpenMP program is:

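```c
#include <omp.h>
#include <stdio.h>

int main(void) {
    /* Serial code: executed by the master thread only */
    printf("serial part\n");

    /* Beginning of parallel region - Fork */
    #pragma omp parallel
    {
        /* Parallel code: executed by every thread of the team */
        printf("parallel part, thread %d\n", omp_get_thread_num());
    } /* End of parallel region - Join */

    /* Resume serial code */
    printf("serial part again\n");
    return 0;
}
```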

Compiling an OpenMP program is as easy as adding the "-fopenmp" flag to the GNU compiler:

```
gcc -o output-name file-name.c -fopenmp

g++ -o output-name file-name.cpp -fopenmp
```

RESOURCES:

References

- https://www.openmp.org/resources/refguides/

- https://computing.llnl.gov/tutorials/openMP/

- https://bisqwit.iki.fi/story/howto/openmp/

- https://gcc.gnu.org/wiki/openmp


Final words | Next up

And this is actually it for today's post! Next time we will get into how we define Parallel Regions...

See ya!

Keep on drifting!