Planning a training of an AWS DeepRacer autonomous car

Having completed the tutorial and started training, I had to decide on a proper way to get my DeepRacer going, and going well.

Analyzing the track

I started with a bunch of default sample rewards, like sticking to the center of the track, going fast and so on. But that's not really enough: I had to reward the car for its behaviour depending on conditions on the track, and I had to decide how best to optimize my model. As noted previously, I gathered the data for a given state that is the input to the reward function:

    {
        'all_wheels_on_track': True,
        'x': 4,
        'y': 5,
        'distance_from_center': 0.3,
        'heading': 359.9,
        'progress': 50,
        'steps': 100,
        'speed': 1.0,
        'steering_angle': 6,
        'track_width': 0.2,
        'waypoints': [[2.5, 0.75], [3.33, 0.75], [4.17, 0.75], [5.0, 0.75], [5.83, 0.75], [6.67, 0.75], [7.5, 0.75], [8.33, 0.75], [9.17, 0.75], [9.75, 0.94], [10.0, 1.5], [10.0, 1.875], [9.92, 2.125], [9.58, 2.375], [9.17, 2.75], [8.33, 2.5], [7.5, 2.5], [7.08, 2.56], [6.67, 2.625], [5.83, 3.44], [5.0, 4.375], [4.67, 4.69], [4.33, 4.875], [4.0, 5.0], [3.33, 5.0], [2.5, 4.95], [2.08, 4.94], [1.67, 4.875], [1.33, 4.69], [0.92, 4.06], [1.17, 3.185], [1.5, 1.94], [1.6, 1.5], [1.83, 1.125], [2.17, 0.885]],
        'closest_waypoints': [3, 4],
        'is_left_of_center': True,
        'is_reversed': True
    }

It's quite easy to get, because you can use print in your reward function and its output then shows up in CloudWatch (the monitoring and log gathering service), under Logs, in the log group /aws/lambda/AWS-DeepRacer-Test-Reward-Function.
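A minimal sketch of that logging trick, assuming the usual `reward_function(params)` entry point. The `PARAMS` prefix and the constant reward are placeholders made up for this illustration, not part of the original function:

```python
import json


def reward_function(params):
    """Log the input state, then return a placeholder reward."""
    # One JSON line per call is easy to search for and parse in the logs.
    # "PARAMS" is a hypothetical marker; default=str guards against values
    # that JSON cannot serialise directly.
    print("PARAMS " + json.dumps(params, default=str))
    # Placeholder: a real reward would be computed from params here.
    return 1.0
```

Searching the log group for the marker then returns one line per step, each holding the full state dictionary.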

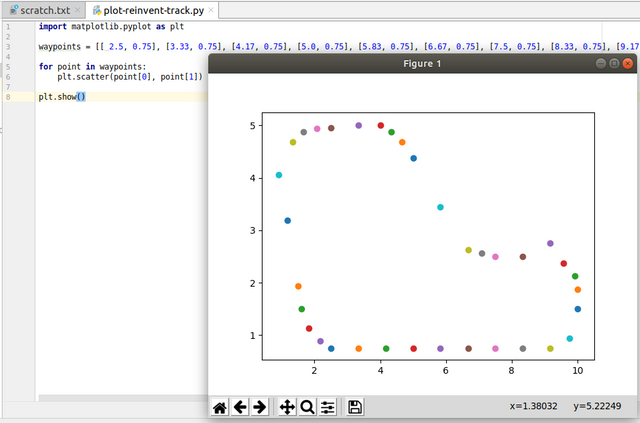

I made a simple Python script to plot the waypoints, just to understand what we can "see" from the data:

According to the developer guide, the waypoints represent the middle line of the track. The purple point after the 180-degree turn got me worried, as it doesn't look like a proper waypoint according to the track images:

I tried waypoints from a different reward run but the outcome was identical. I will need to account for it in my reward function.
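The plotting script itself is not shown here. A plot of this kind can be sketched roughly as follows, assuming matplotlib is installed locally; the function names and the output file name are made up for the illustration:

```python
def split_xy(waypoints):
    """Split [[x, y], ...] into separate x and y lists."""
    xs = [p[0] for p in waypoints]
    ys = [p[1] for p in waypoints]
    return xs, ys


def plot_waypoints(waypoints, path="waypoints.png"):
    """Draw the waypoints, numbered in order, and save the figure to path."""
    # Imported here so split_xy stays usable without matplotlib installed.
    import matplotlib
    matplotlib.use("Agg")  # render to a file, no display needed
    import matplotlib.pyplot as plt

    xs, ys = split_xy(waypoints)
    fig, ax = plt.subplots()
    # Thin grey line for the centre line, closed back to the first point.
    ax.plot(xs + xs[:1], ys + ys[:1], "-", color="lightgray", zorder=1)
    # Colour each point by its index so odd ones stand out.
    ax.scatter(xs, ys, c=list(range(len(xs))), cmap="viridis", zorder=2)
    for i, (x, y) in enumerate(zip(xs, ys)):
        ax.annotate(str(i), (x, y), textcoords="offset points",
                    xytext=(4, 4), fontsize=7)
    ax.set_aspect("equal")  # keep the track geometry undistorted
    fig.savefig(path, dpi=150)
    plt.close(fig)
```

Numbering the points makes it easy to match a suspicious waypoint in the picture with its position in the list.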

Since the training track is the same as the racing track, I could simply let the model learn the best way around it, but I don't really want to do that. Instead I would like to analyze my location on the track and decide the desired behaviour depending on the circumstances. What seems reasonable to me:

- going straight on a straight line

- going full speed on a straight line

- keeping close to the center on a straight line

- cutting corners on a turn

Stuff like that.

A first exercise: could I detect a straight line and a turn?

I already mentioned the waypoints. They are points in a coordinate system, so for any three consecutive waypoints I could calculate the angle at the middle one, between the lines to its two neighbours. If the angle is +/- 180 degrees, the three points lie on a straight line. I actually treated any angle with an absolute value above 172 degrees as a straight line, and below that I told mild and sharp turns apart. I used code like this:

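    import numpy as np

    def get_angle(p0, p1, p2):
        # Vectors from the middle point p1 to each of its neighbours
        v0 = np.array(p0) - np.array(p1)
        v1 = np.array(p2) - np.array(p1)
        # Signed angle between v0 and v1: atan2(cross product, dot product)
        angle = np.arctan2(np.linalg.det([v0, v1]), np.dot(v0, v1))
        return np.degrees(angle)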

It's pretty simple. Unfortunately numpy is not available at the moment, so I had to do the maths manually. Also, for some time the waypoints list had the same point in its first and last position, so I had to guard against that.
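The manual version is not shown here. A sketch of how the same calculation could look with only the standard library follows. The 172-degree threshold comes from the text above, while `SHARP_MAX` is an assumed cut-off between mild and sharp turns, picked only for illustration:

```python
import math

STRAIGHT_MIN = 172.0  # from the text: |angle| above this counts as straight
SHARP_MAX = 150.0     # ASSUMPTION: hypothetical mild/sharp cut-off


def get_angle(p0, p1, p2):
    """Signed angle at p1, in degrees, between the rays p1->p0 and p1->p2."""
    v0 = (p0[0] - p1[0], p0[1] - p1[1])
    v1 = (p2[0] - p1[0], p2[1] - p1[1])
    cross = v0[0] * v1[1] - v0[1] * v1[0]  # 2x2 determinant
    dot = v0[0] * v1[0] + v0[1] * v1[1]
    return math.degrees(math.atan2(cross, dot))


def drop_closing_duplicate(waypoints):
    """Drop the last waypoint when it repeats the first one."""
    if len(waypoints) > 1 and list(waypoints[0]) == list(waypoints[-1]):
        return waypoints[:-1]
    return waypoints


def classify(waypoints):
    """Label each waypoint 'straight', 'mild' or 'sharp' from its neighbours."""
    pts = drop_closing_duplicate(waypoints)
    n = len(pts)
    labels = []
    for i in range(n):
        # The track is a loop, so neighbours wrap around at both ends.
        angle = abs(get_angle(pts[i - 1], pts[i], pts[(i + 1) % n]))
        if angle > STRAIGHT_MIN:
            labels.append("straight")
        elif angle > SHARP_MAX:
            labels.append("mild")
        else:
            labels.append("sharp")
    return labels
```

Dropping the duplicated closing point matters because a repeated point gives a zero-length vector, and the angle at that point is then meaningless.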

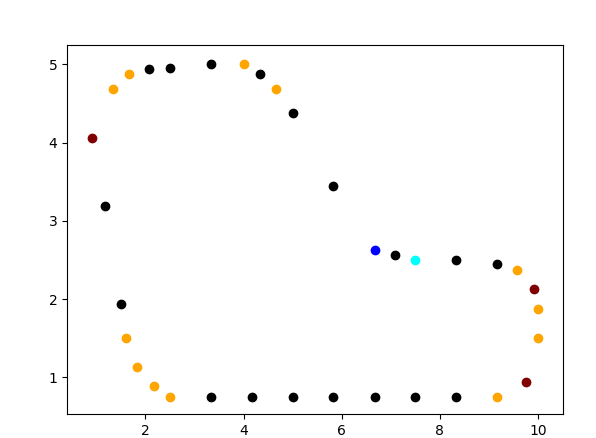

The result is as follows:

Black dots are on straight lines, light dots are mild turns and dark ones are sharper turns.

Having this in mind I could start optimizing the behaviour of the car.

Mistakes

I have learned a few things while performing the optimizations.

Hyperparameters

These are parameters that go into the algorithm and decide on the style of learning. The default values provided are reasonable and work pretty well at first, but then you might want to change them a bit. The video tutorial, AWS Summit workshop and developer guide all provide a bit of information on their impact and recommend some values.

But do not change too many of them, or any of them by a lot. This could knock your model out of reasonable behaviour, like this:

In this case I put more emphasis on learning compared to exploiting current knowledge, at least compared to the default values. I also added way more entropy which pretty much made the training go back to the start.

Speed

Speed is awesome. Everyone wants to make it under eight seconds. That said, when learning, going fast makes it difficult to get the car to behave as desired.

I started getting nice times at the cost of the ability to complete the track. First get some skills, then accelerate (new drivers: this also works in real life).

Not so fast

Not really about the speed of the car, more about the speed of learning. I tried setting a very high incentive for going fast to speed up the learning of a model in one of the iterations only to see this:

I observed that it gave more reward for going fast while getting off the track than for going along the track. I readjusted the incentive and increased the number of experience episodes, and this appears to have fixed things.
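One way to keep a speed incentive in check is sketched below. It is an illustration, not the reward function used for the model above: `MAX_SPEED` and both weights are assumed values. The idea is that the speed bonus is only paid while the car is on the track, and is capped so it can never outweigh staying on it:

```python
MAX_SPEED = 5.0        # ASSUMPTION: top speed configured for the car
ON_TRACK_REWARD = 1.0  # ASSUMPTION: base reward for staying on the track
SPEED_WEIGHT = 0.5     # ASSUMPTION: keeps the bonus below the base reward
OFF_TRACK_REWARD = 1e-3


def reward_function(params):
    """Reward staying on track first, speed second."""
    # Off the track: near-zero reward regardless of speed.
    if not params["all_wheels_on_track"]:
        return OFF_TRACK_REWARD
    # Clamp the speed ratio to [0, 1] so the bonus stays bounded.
    speed_ratio = min(max(params["speed"] / MAX_SPEED, 0.0), 1.0)
    return ON_TRACK_REWARD + SPEED_WEIGHT * speed_ratio
```

With these numbers the slowest on-track step still earns far more than the fastest off-track one.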

Do the maths, then redo the maths. Then redo the redo.

It took me a while to remember all the trigonometry, and it was so draining that I wrote the rest of the reward calculation without much thinking. As a result I saw this:

Because of a bug, the car got the best reward when it was on the right side of the road. Continental Europe will be pleased to hear that. I ended up taking a piece of paper and doing the maths with a pencil to fix it. I started training a new model, which goes well along the track but doesn't go too fast.

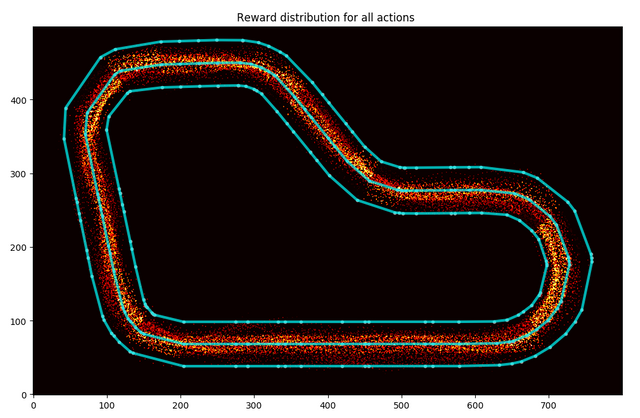

Track analysis

I have learned that staring at a simulation for a couple of hours is not the best way to analyze the behaviour of a car. This was especially painful when the car was fast: it would do three laps in under a minute, while it takes about six minutes to start the evaluation up and four to stop it.

Luckily Amazon's Wonderful Engineers have provided some tools to help with that on the workshop's GitHub account. The notebook they provided leads one through the process. Some learning curve is involved here.

I've learned that to get the stream IDs needed to download the logs, one can use the following code, where client is a Boto3 CloudWatch Logs client, i.e. boto3.client('logs'):

    client.describe_log_streams(logGroupName='/aws/robomaker/SimulationJobs')

Then once you know which stream to choose, use its part before the first slash as the prefix value for the instructions in that notebook.
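The two steps above can be sketched as a pair of small helpers. The function names are made up for this illustration; `describe_log_streams`, its `logGroupName` and `nextToken` parameters, and the `logStreams` / `logStreamName` response keys are part of the Boto3 CloudWatch Logs client:

```python
def list_stream_names(client, log_group="/aws/robomaker/SimulationJobs"):
    """Return the names of all log streams in a CloudWatch Logs group."""
    names = []
    kwargs = {"logGroupName": log_group}
    while True:
        response = client.describe_log_streams(**kwargs)
        names.extend(s["logStreamName"] for s in response.get("logStreams", []))
        # Results are paginated; keep going while a token is returned.
        token = response.get("nextToken")
        if not token:
            return names
        kwargs["nextToken"] = token


def stream_prefix(stream_name):
    """Part of the stream name before the first slash."""
    return stream_name.split("/", 1)[0]
```

With Boto3 installed and credentials configured, `list_stream_names(boto3.client("logs"))` lists the streams, and `stream_prefix(...)` on the chosen name gives the prefix value to paste into the notebook.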

The tools let you plot a couple of pretty graphs, like the distribution of reward on the track throughout the training:

You can see that I finally managed to get the rewards right for the insides of the turns.

There are a number of examples in there, with various visualizations of the data from the logs. I have yet to understand them better.

Learning resources

What I have completed so far:

- watched the video tutorial from Amazon (about 90 minutes) - highly recommended

- read the developers' guide (about 4-5 hours skipping the bits I already knew from the video)

- read the workshop materials (about 30 minutes skipping the bits I already knew from above)

The guide includes an introduction to the learning algorithm used for the DeepRacer. Sadly, I have lost some of my maths skills, which makes it difficult to understand mathematical papers at 2 am after a week of sleep shortage. I will have to get back to it at a later time.

As a side effect of learning the track analysis, I finally set up a separate user with safer access to DeepRacer and nothing else (not that there is anything in there, it's just a reasonable security practice to follow), set up my AWS command line interface tool, and set up programmatic access with Boto3. I also learned a bit about cost evaluation. This month is going to cost me dearly.

Costs

Have I mentioned that this month is going to cost me dearly? SageMaker is not a cheap thing to run. My current AWS meter is at around $40 for this month and I'm being told to expect a total of $230. It won't be the case because:

- Some will get covered by Free Tier services credits

- Some will get covered by credits I got at the AWS re:Invent

- I am hoping to use an AWS Summit workshop account to do some training (that would at least be a day with lower costs)

- After the summit and successful submission to the London Loop virtual track I will not be doing as much training any more

Still, that's a lot of friggin' money so if you want to get into this, do watch your budget. It's easy to get amazed by this and get overly enthusiastic and forget about what's involved in it. I decided to take that hit because I don't remember being so enthusiastic about a piece of technology in a very very long time and I treat it as an investment in the unexplored bits of me rather than a waste of money for a spoiled grown-up kid's entertainment.

Summary

That's about it for now. Today is my last day before the summit and I am working out a model that I could try and load to a car. I'm also preparing for the summit itself since I would like to attend some sessions. I plan to arrive at the venue at 8:00 am, as the doors open, to find my way around and make sure I can get into the DeepRacer workshops.

I will do my best to bring some interesting stories from there. I already warned my manager that my output would be DeepRacer-centric. He approves. Thanks, man!

Loving this post. I may start learning Python. I have the basics. I just can't get over the hurdle between basic and intermediate.

I would definitely not say I know Python. I use it as a tool to get things done here and there.

It is nice though.
