Digital photography: the basics of bits and pixels.

A quantum, according to its definition in physics, is the minimum amount of any physical entity involved in an interaction. In the world of information, the same definition applies to the "bit".

So what is one bit, in other words? Simply put, 1 bit is a piece of information that says yes or no. Nothing more: no maybe, no "I don't know", just a yes or a no. We could equally say 0 or 1, high voltage or low voltage, or even black or white, which is already much closer to our subject.

Now we might have a picture described by a single bit, which is either a black or a white sheet. Of course we need a lot more information for a nice sunset shot, but let's see what happens with 2 bits. Two bits have four possible combinations (00, 01, 10, 11), which we can read as black, a dark gray (33%), a light gray (66%) and white.

Adding one more bit doubles the count again, to 8 tones, and every further bit doubles it once more. The human eye can tell apart roughly 1000 shades. For that we need 10 bits (2 to the power of 10), which gives 1024 different values, or we might say 1024 tones.
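To make the counting concrete, here is a small Python sketch. The function names `tone_count` and `gray_levels` are made up for this illustration, and the gray levels are assumed to be evenly spaced between black and white.

```python
def tone_count(bits: int) -> int:
    """Number of distinct values that fit into the given number of bits."""
    return 2 ** bits


def gray_levels(bits: int) -> list[float]:
    """Evenly spaced brightness values in percent, from 0 (black) to 100 (white)."""
    steps = tone_count(bits) - 1
    return [round(100 * i / steps, 1) for i in range(steps + 1)]


for bits in (1, 2, 3, 8, 10):
    print(f"{bits:>2} bit -> {tone_count(bits)} tones")

print(gray_levels(2))  # [0.0, 33.3, 66.7, 100.0]
```

Each extra bit doubles the number of tones, which is why 10 bits are enough to pass the 1000 mark.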

1024x768 pixel monochrome image with an 8-bit gradient, using dithering to avoid posterization.

OK, if we pick one tiny dot from the picture (a black and white picture for now), it certainly matches one of the 1024 tones. This tiny dot is the pixel. The most common monitors are "Full HD" resolution monitors. If we take a black and white wallpaper picture made for one of them, it consists of 1920x1080 pixels, that is 2,073,600 pixels or about 2.1 megapixels, with 10-bit (or, most likely, 8-bit) depth.
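The arithmetic is easy to check with a few lines of Python. This is only a sketch: the helper names are invented for the example, and the size calculation assumes an uncompressed image with tightly packed pixels and no file header, which real file formats rarely match exactly.

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in a width x height image."""
    return width * height


def megapixels(width: int, height: int) -> float:
    """Pixel count expressed in millions of pixels."""
    return pixel_count(width, height) / 1_000_000


def raw_size_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Uncompressed size in bytes, assuming tightly packed pixels (rounded up)."""
    total_bits = pixel_count(width, height) * bits_per_pixel
    return (total_bits + 7) // 8


print(pixel_count(1920, 1080))           # 2073600
print(round(megapixels(1920, 1080), 1))  # 2.1
print(raw_size_bytes(1920, 1080, 8))     # 2073600
print(raw_size_bytes(1920, 1080, 10))    # 2592000
```

So a grayscale Full HD wallpaper needs about 2 MB at 8-bit depth and about 2.6 MB at 10-bit depth before any compression.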

What about colors?

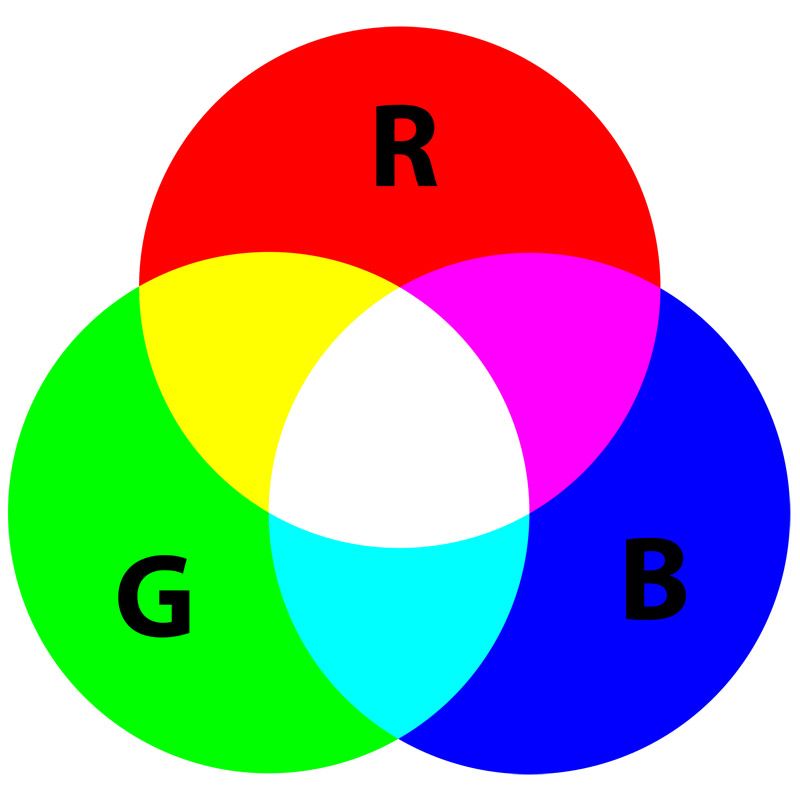

Cameras and monitors use an additive color model in which red, green and blue are added together to reproduce a wide range of colors. Zero intensity for every component gives black, and full intensity of every component gives white.

Additive color mixing.

So we need three channels to reproduce all colors. All colors... how many colors do we need? Again, the human eye can tell apart about 10 million colors. With three channels, we need a depth of 8 bits per channel to get at least 10 million colors. That gives 16,777,216 colors (2 to the power of 24). It's way more than 10 million, but with 7 bits of depth, or 7 BPC (BPC means bits per channel), it would be only about 2 million colors. So an 8-bit image gives us about 16 million colors, also known as true color. But wait a minute: 8 bits permit only 256 shades per channel (2 to the power of 8), and that is not enough. Remember, we would need at least 1000 tones, or 10 BPC.

Well, unfortunately most typical desktop display devices, including monitors and graphics cards, support only 8 bits of color data per channel. Even if you choose to edit in 16-bit, what you see is going to be limited to 8-bit by your computer and display. Most operating systems are limited to 8-bit color depth as well. To avoid the posterization caused by the 8-bit limit, imaging software uses various methods known as dithering.
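The color counts follow from the same power-of-two rule, applied to all three channels at once. A minimal sketch, where `color_count` is a name chosen just for this example:

```python
def color_count(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors for a given depth per channel (RGB by default)."""
    return 2 ** (bits_per_channel * channels)


for bpc in (7, 8, 10):
    print(f"{bpc:>2} BPC -> {color_count(bpc):,} colors")

# 7 BPC falls short of the ~10 million target, 8 BPC is the first depth that passes it.
print(color_count(7) < 10_000_000 <= color_count(8))  # True
```

Note that the total number of colors and the number of shades per channel are two different figures: 8 BPC passes the 10 million mark in total, yet each single channel still has only 256 steps.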
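To get a feeling for what dithering does, here is a rough Python sketch. It uses the simplest variant, adding random noise before rounding; this is an assumption made for illustration, and real imaging software may well use other methods such as error diffusion. All names in it are invented for the example, and the output is reduced to only 4 tones so that the effect is easy to see.

```python
import random


def quantize(value: float, levels: int) -> float:
    """Snap a brightness in [0, 1] to the nearest of `levels` evenly spaced tones."""
    steps = levels - 1
    return round(value * steps) / steps


def quantize_dithered(value: float, levels: int, rng: random.Random) -> float:
    """Add up to half a step of random noise, then snap to the nearest tone."""
    steps = levels - 1
    noisy = value + (rng.random() - 0.5) / steps
    noisy = min(1.0, max(0.0, noisy))  # keep the result inside [0, 1]
    return round(noisy * steps) / steps


def mean(values: list[float]) -> float:
    return sum(values) / len(values)


rng = random.Random(0)                      # fixed seed so the run is repeatable
gradient = [i / 999 for i in range(1000)]   # smooth ramp from black to white
levels = 4                                  # a 2-bit output, for a visible effect

plain = [quantize(v, levels) for v in gradient]
dithered = [quantize_dithered(v, levels, rng) for v in gradient]

# Compare a stretch of the ramp that lies between two output tones.
window = slice(200, 300)
true_mean = mean(gradient[window])
plain_error = abs(mean(plain[window]) - true_mean)
dithered_error = abs(mean(dithered[window]) - true_mean)

print("distinct tones in this stretch without dithering:", len(set(plain[window])))
print("average error without dithering:", round(plain_error, 3))
print("average error with dithering:   ", round(dithered_error, 3))
```

Without dithering the whole stretch collapses into one flat band, which is what posterization looks like. With dithering the pixels flicker between the two neighboring tones, so their average brightness stays much closer to the original gradient, at the price of some visible noise.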

Camera sensors, on the other hand, store data in 12, 14 or 16 bits per channel. This fact leads to many more questions that have to be answered, such as:

- When we upload the images from the camera, are we already throwing away a good amount of data?

- What is the difference between JPEG and RAW?

- Can I save more information if I use RAW image data?

- 8-bit or 16-bit: what color depth should I use anyway?

And so on... But the answers will have to wait, perhaps for another time.

I hope these few bits of information were useful...