NUMERAI: Takeaways and Advice as a Data Scientist on Numerai

What is Numerai?

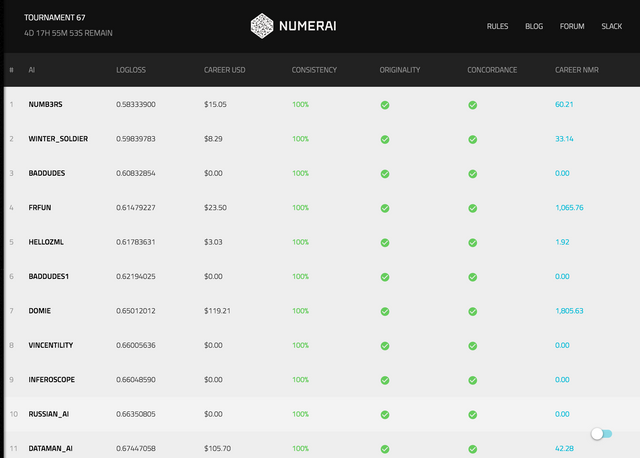

Numer.ai is a hedge fund that crowdsources stock market predictions from anonymous data scientists like me. Data scientists download a new data set each week, train their machine learning models, and submit predictions. The top 100 prediction sets, in terms of performance on live data, receive payouts in USD and NMR, Numerai's cryptocurrency. Currently, the total payout per week is approximately $40,000 worth of NMR and $4,000 USD, plus an additional $3,000 USD in a separate "staking" tournament.

How I've Done So Far

Tournament results arrive 4 weeks after submission, because the predictions need time to play out on the real stock market. I've only been at it for 5 weeks, so I just got my first results ... they weren't very good, but I knew that was coming: after my first submission, I found an error that had caused me to substantially overfit. Since then, I've done a lot of development and built an efficient, powerful pipeline that lets me submit high-quality predictions very quickly. I'm cautiously optimistic that some of my entries from the past month will land in the top 100 and earn me a payout.

The Challenges of the Numerai Tournament (and how I've approached solving them)

1. The Dataset is Homomorphically Encrypted

Ok, so what does that mean? It means that the historical data we train our models on has been encrypted in a way that preserves the ability to do computation on it, while disguising what the data really means. This is likely done to protect proprietary data provided by the hedge fund. We get a CSV with 21 columns of features (useful data points that were known prior to the outcome) and a binary target (the outcome we are trying to predict). The features, however, all lie between 0 and 1 and are named feature1 through feature21, with no information about their meaning. In some ways this can be frustrating, but I also find it kind of awesome from a technical perspective, and it presents a unique challenge. It eliminates any chance of feature engineering based on domain knowledge, but there are still ways to squeeze more value out of the features without knowing what they mean.

My Approach: Using a combination of brute force and some careful measures to avoid overfitting, I used the existing feature set as building blocks to create my own unique, better-performing feature set.

The idea was to generate a large number of candidate features, start with a clean slate, and add them one at a time, keeping each one only if it improved cross-validation results. First, I created an exhaustive list of all 840 two-feature combinations (210 feature pairs × 4 operations: addition, subtraction, multiplication and division, e.g. feature1-feature2, feature1+feature2, ...). Feature interactions can often be significantly more predictive than the individual features themselves. I then calculated the Pearson correlation coefficient between each of these new variables and the target, as a very rough estimate of its predictive power, and sorted them with the highest correlation at the top. Then, starting with an empty feature set, I tried adding each feature in turn, re-training and cross-validating each time. If the logloss decreased, I kept that feature and used the improved logloss as my new baseline; otherwise I discarded it. Depending on the algorithm, I would end up with anywhere from 10 to 50 features in my new feature set. This gave me a small but meaningful edge over the default feature set, and also gave my predictions some originality. Which leads me to the next challenge ...
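The selection loop above can be sketched in Python. This is a minimal sketch under several assumptions, not the author's actual code. It uses pandas and scikit-learn and scores candidates with the 5-fold cross-validated logloss of a plain `LogisticRegression`, whereas the author varied the algorithm. It also ranks candidates by the *absolute* Pearson correlation, pads divisors with a small epsilon to avoid division by zero, and treats the base-rate logloss as the "clean slate" baseline. The helper names (`pairwise_features`, `rank_by_correlation`, `cv_logloss`, `greedy_forward_selection`) are hypothetical.

```python
import itertools

import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import log_loss
from sklearn.model_selection import cross_val_score


def pairwise_features(X: pd.DataFrame, eps: float = 1e-6) -> pd.DataFrame:
    """Combine every unordered pair of columns with +, -, * and /.

    For 21 input columns this yields 210 pairs x 4 operations = 840 features.
    """
    new = {}
    for a, b in itertools.combinations(X.columns, 2):
        new[f"{a}+{b}"] = X[a] + X[b]
        new[f"{a}-{b}"] = X[a] - X[b]
        new[f"{a}*{b}"] = X[a] * X[b]
        # Assumption: features can be 0, so pad the divisor with a small eps.
        new[f"{a}/{b}"] = X[a] / (X[b] + eps)
    return pd.DataFrame(new, index=X.index)


def rank_by_correlation(F: pd.DataFrame, y: pd.Series) -> list:
    """Sort columns by |Pearson r| with the target, strongest first."""
    corr = F.corrwith(y).abs()  # Pearson is the corrwith default
    return corr.sort_values(ascending=False).index.tolist()


def cv_logloss(X: pd.DataFrame, y: pd.Series, folds: int = 5) -> float:
    """Mean cross-validated logloss (lower is better)."""
    model = LogisticRegression(max_iter=1000)
    scores = cross_val_score(model, X, y, cv=folds, scoring="neg_log_loss")
    return -scores.mean()


def greedy_forward_selection(F: pd.DataFrame, y: pd.Series, candidates: list):
    """Add candidates one at a time, keeping each only if CV logloss drops."""
    selected = []
    # "Clean slate" baseline: always predict the base rate of the target.
    best = log_loss(y, np.full(len(y), y.mean()))
    for name in candidates:
        score = cv_logloss(F[selected + [name]], y)
        if score < best:
            selected.append(name)
            best = score  # the improved logloss becomes the new baseline
    return selected, best


if __name__ == "__main__":
    # Synthetic stand-in for the tournament data: 21 features in [0, 1].
    rng = np.random.default_rng(0)
    X = pd.DataFrame(rng.random((500, 21)),
                     columns=[f"feature{i}" for i in range(1, 22)])
    y = pd.Series((X["feature1"] * X["feature2"] > 0.25).astype(int))
    F = pd.concat([X, pairwise_features(X)], axis=1)
    # Only the 30 best-ranked candidates are tried here, to keep the demo fast.
    selected, best = greedy_forward_selection(F, y, rank_by_correlation(F, y)[:30])
    print(len(selected), "features kept, CV logloss", round(best, 4))
```

Greedy forward selection depends on the order in which candidates are tried. Ranking by correlation first lets the strongest features set a tight baseline early, so later and weaker candidates must clear a higher bar.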

2. The Fight for Originality

Creating a model that performs decently on Numerai is not actually that difficult. I get the sense that the 21 features provided to us are pretty good predictors, since Numerai wants us to perform well. As long as you follow best practices to avoid overfitting (which I screwed up in my first week), you can get acceptable results using an out-of-the-box algorithm like logistic regression without applying any real strategy. I now use a wide array of algorithms and combine them into ensembles that out-predict every individual algorithm. My results have steadily improved, putting me solidly in the top 25% of data scientists on Numerai in terms of validation logloss.

The real difficulty, however, is originality. One of the tournament's criteria is passing an originality check. The hedge fund doesn't need thousands of very similar prediction sets; the reason they crowdsource is to build a very strong ensemble of unique prediction sets. For this reason, your submission is only accepted if its correlation with the prediction sets already submitted falls below a certain cutoff. This leads to the originality scramble: the longer you wait to submit, the more likely it is that your predictions will be too correlated with the existing ones. It becomes very difficult to get a high-quality set of predictions accepted even 30 minutes after the contest opens. So, the instant each weekly contest opens, there is a scramble to download the dataset, train your models, and submit your predictions ... while still keeping them high-quality.
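To make the originality scramble concrete, here is a hypothetical sketch of a correlation-based check. We don't know exactly how Numerai implements its check, and participants can't see other submissions. The function names and the 0.95 cutoff below are therefore illustrative assumptions only. Under this rule, a candidate passes if its Pearson correlation with every prediction set already accepted stays below the cutoff.

```python
import numpy as np


def max_correlation(candidate: np.ndarray, existing: np.ndarray) -> float:
    """Largest Pearson correlation between `candidate` (n,) and any row of `existing` (k, n)."""
    existing = np.atleast_2d(existing)
    if existing.shape[0] == 0:
        return 0.0  # nothing submitted yet, so nothing to collide with
    # np.corrcoef treats each row as a variable; row 0 is the candidate.
    corr = np.corrcoef(np.vstack([candidate, existing]))
    return float(corr[0, 1:].max())


def passes_originality(candidate, existing, cutoff: float = 0.95) -> bool:
    """Hypothetical rule: accept only if no existing set is correlated above `cutoff`."""
    return max_correlation(candidate, existing) < cutoff
```

Because the maximum is taken over every set accepted so far, it can only grow as more submissions arrive. That is why, under a rule like this, submitting later makes it harder to pass.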

My Approach: I created an efficient pipeline to train many unique classifiers on the new data simultaneously, and then run hundreds of pre-defined ensembles off of the results of those individual classifiers.

After I download the new data, I run a single shell script that starts my top 15 classifiers (including multiple variants of Random Forest, Logistic Regression, Gradient Boosting, Neural Network, and even Linear Regression). Within a couple of minutes they all finish and write their predictions to CSVs named after each classifier. The shell script then kicks off my ensembles, which simply take all the CSVs and combine the prediction columns with various weightings. With just 15 classifiers, I can create hundreds of uniquely weighted ensembles almost instantly: all that needs to be done is to take the 15 prediction columns, weight them according to an array of 15 integers, take the weighted average, and write the new set of predictions to another CSV. This approach actually improves my accuracy (the different classifiers balance out each other's weaknesses) and gives me hundreds of options to submit in a very short period of time. So, even if I'm not the first to submit and I fail the originality check on my first try, I can generally iterate through high-quality options much more quickly than most. Within the first 10-15 minutes, I can usually get 3 very solid submissions in. Then I get to relax.
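The ensembling step lends itself to a vectorized sketch. Assume the 15 classifiers' probability columns have already been loaded (for example with `pandas.read_csv`, one file per classifier) and stacked into an array of shape (rows, 15). Each pre-defined ensemble is then one row of integer weights, and a single matrix product computes every weighted average at once, which is why hundreds of ensembles take almost no time. The choice to draw the weight rows from a seeded random generator is an illustrative assumption rather than the author's actual scheme, and so are the names `make_weight_grid` and `weighted_ensembles`.

```python
import numpy as np


def make_weight_grid(n_models: int, n_ensembles: int,
                     max_weight: int = 5, seed: int = 0) -> np.ndarray:
    """Reproducible integer weight vectors, one row per ensemble (hypothetical scheme)."""
    rng = np.random.default_rng(seed)
    weights = rng.integers(0, max_weight + 1, size=(n_ensembles, n_models))
    # Make sure no row is all zeros, so every weighted average is defined.
    weights[weights.sum(axis=1) == 0, 0] = 1
    return weights


def weighted_ensembles(preds: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Weighted average of the model columns for every weight row at once.

    preds:   (n_rows, n_models) predicted probabilities, one column per classifier
    weights: (n_ensembles, n_models) non-negative integer weights
    returns: (n_rows, n_ensembles), column j is the ensemble for weight row j
    """
    return preds @ weights.T / weights.sum(axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    preds = rng.random((1000, 15))  # stand-in for 15 classifiers' probabilities
    weights = make_weight_grid(n_models=15, n_ensembles=300)
    ensembles = weighted_ensembles(preds, weights)
    print(ensembles.shape)  # (1000, 300): one column per candidate submission
```

Each output column can then be written to its own CSV and submitted in turn until one passes the originality check. Because the weights are non-negative, every ensemble prediction stays within the range of the individual classifiers' predictions for that row.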

Conclusion

I'm hooked. If you're a data scientist, or just interested in giving it a try, I highly recommend Numerai. It's a great way to improve your skill set and get real practice as a data scientist, and there's a lot of money to be made if you do well. As for the NMR token, I don't have a good sense of whether it will go up or down; there are some very interesting mechanics behind it that I can discuss in a later post. I'll continue to post updates on my results and experiences with Numerai as I go. Thanks for reading! Please give me an upvote and a resteem if you made it this far :)

Great. Looking forward to knowing more about your experience with Numerai. I have attempted to participate, but the few ideas I've tried have not shown promising results.

Cool! I wasn't sure if any other Numerai users would actually see this. I didn't go too deep into the technical details in this post, but I might post some more detailed examples later.

Resteemed

Interesting!

Great article! I thought about entering this as I find the idea intriguing. The payouts seem to have gone up since I last investigated so maybe I'll take another look! It sounds like it needs an industrialised solution though if it comes down as much to speed as mathematics. Thanks for posting!

I just wish I had gotten in before they did their initial coin distribution (it wasn't really an ICO). They distributed 1 million NMR to 12,000 data scientists.

Just based on their performance, no financial investment needed

I saw. Plenty of wishes like that in the crypto space though! Hopefully still some opportunities left to come!

How would you suggest a complete newbie start in learning to do this?