Google Employees Revolt, Refuse To Work On Clandestine AI Drone Project For The Pentagon

Around a dozen Google employees have quit and close to 4,000 have signed a petition over the company's involvement in a controversial military pilot program known as "Project Maven," which will use artificial intelligence to speed up analysis of drone footage.

Project Maven, a fast-moving Pentagon project also known as the Algorithmic Warfare Cross-Functional Team (AWCFT), was established in April 2017. Maven’s stated mission is to “accelerate DoD’s integration of big data and machine learning.” In total, the Defense Department spent $7.4 billion on artificial intelligence-related areas in 2017, the Wall Street Journal reported.

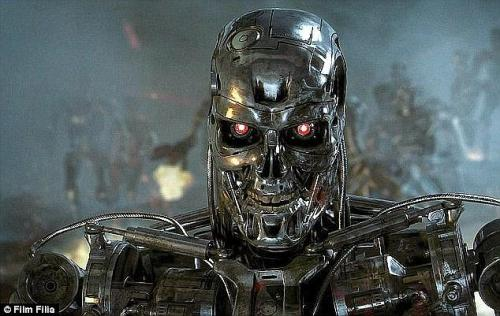

The project’s first assignment was to help the Pentagon efficiently process the deluge of video footage collected daily by its aerial drones—an amount of footage so vast that human analysts can’t keep up. -Gizmodo

Project Maven will use machine learning to identify vehicles and other objects from drone footage - with the ultimate goal of enabling the automated detection and identification of objects in up to 38 categories - including the ability to track individuals as they come and go from different locations.

Project Maven’s objective, according to Air Force Lt. Gen. John N.T. “Jack” Shanahan, director for Defense Intelligence for Warfighter Support in the Office of the Undersecretary of Defense for Intelligence, “is to turn the enormous volume of data available to DoD into actionable intelligence and insights.” -DoD

The internal revolt began shortly after Google revealed its involvement in the project nearly three months ago.

Some Google employees were outraged that the company would offer resources to the military for surveillance technology involved in drone operations, sources said, while others argued that the project raised important ethical questions about the development and use of machine learning. -Gizmodo

The employees who resigned cited a range of frustrations, from ethical concerns over the use of AI in a battlefield setting to larger concerns over Google's overall political decisions.

The disgruntled ex-employees, apparently unaware that Google was seed-funded by the NSA and CIA, have compiled a master document of personal accounts detailing their decisions to leave, which multiple sources have described to Gizmodo.

The employees who are resigning in protest, several of whom discussed their decision to leave with Gizmodo, say that executives have become less transparent with their workforce about controversial business decisions and seem less interested in listening to workers’ objections than they once did. In the case of Maven, Google is helping the Defense Department implement machine learning to classify images gathered by drones. But some employees believe humans, not algorithms, should be responsible for this sensitive and potentially lethal work—and that Google shouldn’t be involved in military work at all.

Historically, Google has promoted an open culture that encourages employees to challenge and debate product decisions. But some employees feel that their leadership is no longer as attentive to their concerns, leaving them to face the fallout. “Over the last couple of months, I’ve been less and less impressed with the response and the way people’s concerns are being treated and listened to,” one employee who resigned said. -Gizmodo

Ironically, the development of Google's original algorithm at Stanford was partly funded by a joint CIA-NSA program in which founder Sergey Brin created a method to quickly mine large amounts of data stored in databases.

“Google founder Mr. Sergey Brin was partly funded by this program while he was a PhD student at Stanford. He together with his advisor Prof. Jeffrey Ullman and my colleague at MITRE, Dr. Chris Clifton [Mitre’s chief scientist in IT], developed the Query Flocks System which produced solutions for mining large amounts of data stored in databases. I remember visiting Stanford with Dr. Rick Steinheiser from the Intelligence Community and Mr. Brin would rush in on roller blades, give his presentation and rush out. In fact the last time we met in September 1998, Mr. Brin demonstrated to us his search engine which became Google soon after.” -Nafeez Ahmed

In its defense of Project Maven, Google notes that its AI won't actually be used to kill anyone (it will just help the military ID targets to "service"). That isn't good enough for workers and academics opposed to the use of machine learning in a military application.

In addition to the petition circulating inside Google, the Tech Workers Coalition launched a petition in April demanding that Google abandon its work on Maven and that other major tech companies, including IBM and Amazon, refuse to work with the U.S. Defense Department. -Gizmodo

“We can no longer ignore our industry’s and our technologies’ harmful biases, large-scale breaches of trust, and lack of ethical safeguards,” the petition reads. “These are life and death stakes.”

Over 90 academics in AI, ethics and computer science released an open letter on Monday, calling on Google to end its involvement with Project Maven and support an international treaty which would prohibit the use of autonomous weapons systems.

Peter Asaro and Lucy Suchman, two of the authors of the letter, have testified before the United Nations about autonomous weapons; a third author, Lilly Irani, is a professor of science and a former Google employee.

Google’s contributions to Project Maven could accelerate the development of fully autonomous weapons, Suchman told Gizmodo. Although Google is based in the U.S., it has an obligation to protect its global user base that outweighs its alignment with any single nation’s military, she said. -Gizmodo

“If ethical action on the part of tech companies requires consideration of who might benefit from a technology and who might be harmed, then we can say with certainty that no topic deserves more sober reflection—no technology has higher stakes—than algorithms meant to target and kill at a distance and without public accountability,” the letter states. “Google has moved into military work without subjecting itself to public debate or deliberation, either domestically or internationally. While Google regularly decides the future of technology without democratic public engagement, its entry into military technologies casts the problems of private control of information infrastructure into high relief.”

We're sure employees have nothing to worry about and their concerns are overblown - as Google's "Don't be evil" motto prevents them from ever participating in some scary program that could kill more innocent people than a Tesla autopilot.
