Don't know Programming? Here are some popular tools for Data Science and Machine Learning:

Introduction:

Programming plays a vital role in data science. It is often assumed that a person who understands programming, loops, functions and logic has a higher chance of succeeding in this field.

Is there a way in for those who don't know programming?

Yes!

With recent advances in technology, lots of people are showing interest in this domain.

Today I will be talking about tools you can use to become successful in this domain.

Before getting into today's topic, I would like you to visit my blog post on the

"mistakes which amateur data scientists make"

Here is the link, have a look:

https://steemit.com/mgsc/@ankit-singh/13-mistakes-of-data-scientists-and-how-to-avoid-them

OK guys, let's begin!

Once upon a time, I too wasn't very good at programming, so I understand how horrible it feels when it haunts you at every step of your job. Still, there are ways for you to become a data scientist. There are tools that provide a user-friendly Graphical User Interface (GUI), so that only basic programming skills are needed.

Even if you have very little knowledge of algorithms, you can develop high-end machine learning models. Nowadays, companies are launching GUI-driven tools, and here I will cover a few important ones.

Note: All information here is gathered from open sources. I am just presenting my opinions based on my experience.

List of Tools:

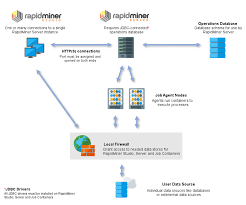

1)RapidMiner:

RapidMiner (RM) was started in 2006, under the name Rapid-I, as a standalone open-source software. It has also gathered 35+ million USD in funding. The newer version comes with a 14-day trial period, after which you need to purchase a licensed version.

RM focuses on the total life cycle of predictive modelling, from data preparation and presentation through to modelling, development and validation.

Its GUI works much like MATLAB Simulink and is based on a block-diagram approach. The blocks are predefined in the GUI and work in a plug-and-play fashion: you just need to connect them in the right manner, and a wide variety of algorithms can be run without writing a single line of code. You can also integrate custom R and Python scripts into the system.
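For the curious, here is a rough idea of what "connecting blocks" means under the hood. This is only a minimal sketch in plain Python, not RapidMiner's actual API: the block names (`drop_missing`, `add_ratio`), the `connect` helper and the sample data are all made up for illustration.

```python
from functools import reduce
from typing import Callable, List

Rows = List[dict]
Block = Callable[[Rows], Rows]

def drop_missing(rows: Rows) -> Rows:
    """Hypothetical block 1: keep only rows where every field has a value."""
    return [r for r in rows if all(v is not None for v in r.values())]

def add_ratio(rows: Rows) -> Rows:
    """Hypothetical block 2: derive a new column from two existing ones."""
    return [{**r, "spend_per_visit": r["spend"] / r["visits"]} for r in rows]

def connect(*blocks: Block) -> Block:
    """Wire blocks together so that each block's output feeds the next one."""
    return lambda rows: reduce(lambda data, block: block(data), blocks, rows)

# Made-up sample data, one dictionary per row.
data = [
    {"spend": 120.0, "visits": 4},
    {"spend": None, "visits": 2},
    {"spend": 90.0, "visits": 3},
]

pipeline = connect(drop_missing, add_ratio)
result = pipeline(data)
print(result)
```

In a GUI tool you would draw the arrow between the two blocks with your mouse; here the `connect` call plays that role.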

Their current products are:

- RapidMiner Studio: Use this for data preparation, statistical modelling and visualization.

- RapidMiner Server: Use this for project management and model development.

- RapidMiner Radoop: Use this to implement big-data analytics.

- RapidMiner Cloud: Use this for easy sharing of information across different devices using the cloud.

RM is actively used in banking, insurance, life sciences, manufacturing, the automobile industry, oil and gas, retail, telecommunications and utilities.

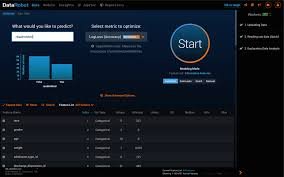

2)DataRobot:

DataRobot (DR) is a completely automated machine learning platform. It claims to cater to all the needs of data scientists.


This is what they say: "Data science requires math and stats aptitude, programming skills and business knowledge. With DataRobot, you bring the business knowledge and data, and our cutting-edge automation takes care of the rest."


Benefits of using DR:

Parallel Processing:

1) Scales to big datasets by using distributed algorithms.

2) Uses multi-core servers to divide computations.

Deployment:

1) Easy deployment without writing any code.

Model Optimization:

1) Automatically selects hyperparameters and detects the best-suited data pre-processing.

2) Uses imputation, scaling, transformation, variable type detection, encoding and text mining.

For Software Engineers:

1) A Python SDK and APIs are available for quickly converting models into tools and software.
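To give a feel for the pre-processing steps that such a platform automates, here is a minimal sketch of imputation, scaling and encoding in plain Python. It is not DataRobot code; the function names and the tiny sample columns are my own assumptions, chosen only to show the idea.

```python
from statistics import mean

def impute_mean(values):
    """Imputation: replace missing entries (None) with the mean of the rest."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]

def min_max_scale(values):
    """Scaling: rescale numbers linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no spread, so map everything to 0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def one_hot(values):
    """Encoding: turn a categorical column into one 0/1 column per category."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]

# Made-up sample columns for illustration.
ages = [20, None, 40, 30]
cities = ["Pune", "Delhi", "Pune", "Mumbai"]

ages_filled = impute_mean(ages)
ages_scaled = min_max_scale(ages_filled)
cities_encoded = one_hot(cities)

print(ages_filled)
print(ages_scaled)
print(cities_encoded)
```

An automated tool would pick which of these steps to apply to each column for you; in the sketch that choice is made by hand.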

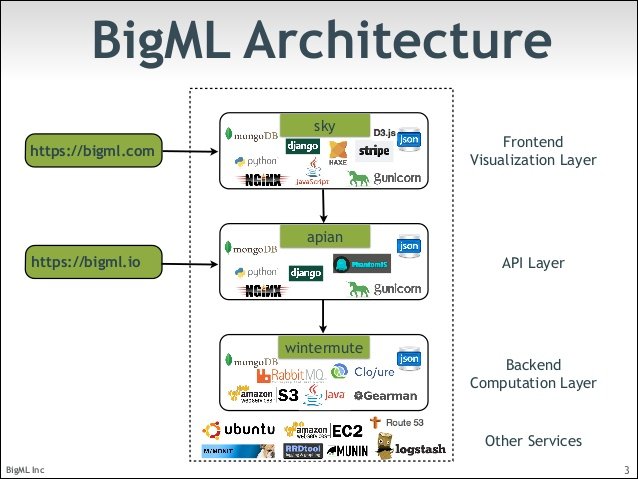

3)BigML:

It provides a good GUI that takes you through the following steps:

- Sources: Use information from various sources.

- Datasets: Create a dataset from the defined sources.

- Models: Build predictive models here.

- Predictions: Here, you generate predictions based on the models.

- Ensembles: Ensembles of various models are created here.

- Evaluation: Verify the model against validation sets.
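The last two steps, ensembles and evaluation, can be sketched in a few lines of plain Python. This is an illustration only and has nothing to do with BigML's own API: the three "models" are hand-picked one-rule classifiers standing in for trained models, and the validation rows and labels are invented.

```python
from collections import Counter

def make_stump(feature, threshold):
    """A one-rule model: predict 1 when the feature exceeds the threshold."""
    return lambda row: 1 if row[feature] > threshold else 0

def ensemble_predict(models, row):
    """Ensemble step: combine several models by majority vote."""
    votes = Counter(model(row) for model in models)
    return votes.most_common(1)[0][0]

def accuracy(predict, rows, labels):
    """Evaluation step: share of validation rows predicted correctly."""
    hits = sum(predict(row) == label for row, label in zip(rows, labels))
    return hits / len(rows)

# Hand-picked rules standing in for trained models (illustrative only).
models = [
    make_stump("income", 50),
    make_stump("age", 30),
    make_stump("visits", 5),
]

# Invented validation set with known labels.
validation_rows = [
    {"income": 70, "age": 45, "visits": 2},
    {"income": 30, "age": 25, "visits": 8},
    {"income": 60, "age": 28, "visits": 9},
    {"income": 20, "age": 35, "visits": 1},
]
validation_labels = [1, 0, 1, 0]

score = accuracy(
    lambda row: ensemble_predict(models, row),
    validation_rows,
    validation_labels,
)
print(f"Ensemble accuracy on the validation set: {score:.2f}")
```

Using an odd number of models keeps the vote free of ties, which is why the sketch uses three.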

This platform provides unique visualizations and has algorithms for regression, clustering, classification and anomaly detection.


They offer different subscription packages. In the free tier, you can only upload datasets of up to 16 MB.

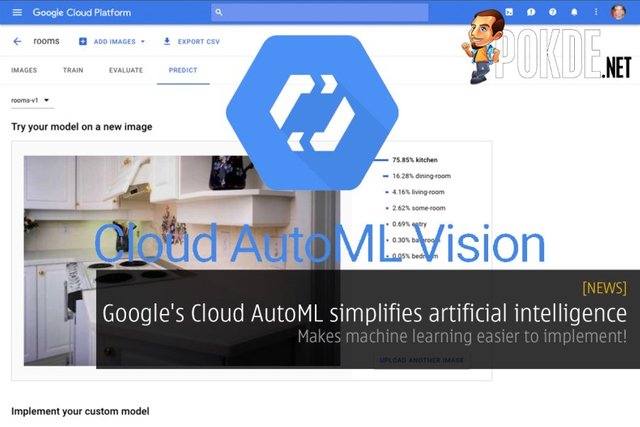

4)Google Cloud AutoML:

It is part of Google's machine learning program and allows users to develop high-end models. Its first product is Cloud AutoML Vision.

It makes building image recognition models easier. It also has a drag-and-drop interface that lets users upload images, train models and then deploy those models directly to Google Cloud.

It is built on Google's neural architecture search technologies. Many organizations are currently using this platform.

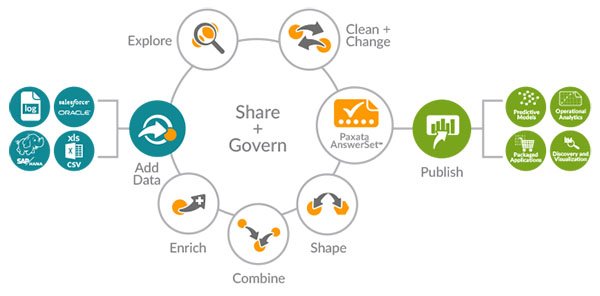

5)Paxata:

This organisation is one of the few that focus on data cleaning and preparation rather than on statistical modelling and machine learning. Its application is similar to MS Excel and is therefore easy to use. It also provides visual guidance and eliminates scripting and coding, which helps users overcome technical barriers.
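To show the kind of cleaning a data-preparation tool takes off your hands, here is a minimal sketch of text normalisation and duplicate detection in plain Python. It is not Paxata code; the field names, helper functions and sample records are assumptions made up for this example.

```python
def normalise(record):
    """Trim whitespace and unify letter case so equal values compare equal."""
    return {
        "name": " ".join(record["name"].split()).title(),
        "email": record["email"].strip().lower(),
    }

def drop_duplicates(records, key):
    """Keep only the first record seen for each value of `key`."""
    seen = set()
    unique = []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique

# Made-up messy input: the first two rows are the same person.
raw = [
    {"name": "  asha  rao ", "email": "Asha.Rao@Example.com "},
    {"name": "Asha Rao", "email": "asha.rao@example.com"},
    {"name": "vikram shah", "email": "vikram@example.com"},
]

cleaned = drop_duplicates([normalise(r) for r in raw], key="email")
print(cleaned)
```

Note that the duplicates only become visible after normalisation, which is why cleaning tools usually run the two steps in that order.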

Processes followed by Paxata Platform:

Add Data:

Wide range of sources are used to acquire data.Explore:

Powerful visuals are used to perform data exploration.Change+Clean:

Steps like normalization,detecting duplicates,data cleaning are performed.Shape:

Grouping and aggregation are performed.Govern+Share:

Allows collaborating and sharing across sharing.Combine:

Using SmartFusion technology,it automatically detects the best combination of combining data frames.BI Tools:

Final AnswerSet is visualized here and iterations between visualization and data preprocessing.- Wrangler:

Its a free standalone software and allows upto 100MB of data. - Wrangler Pro:

Now it allows both single and multi-user and the data volume limit is 40GB. - Wrangler Enterprise:

It doesn't have any limit on data you process.Hence,its ideal for big industries. Discovering:

Looks at the data and quickly distributes it.Structure:

Assigns proper variable types and shapes.Cleaning:

Includes imputations,text standardization which makes data model ready.Enriching:

Performs feature engineering and adds data from other sources to the existing data.Validating:

Performs final checking on the data.Publishing:

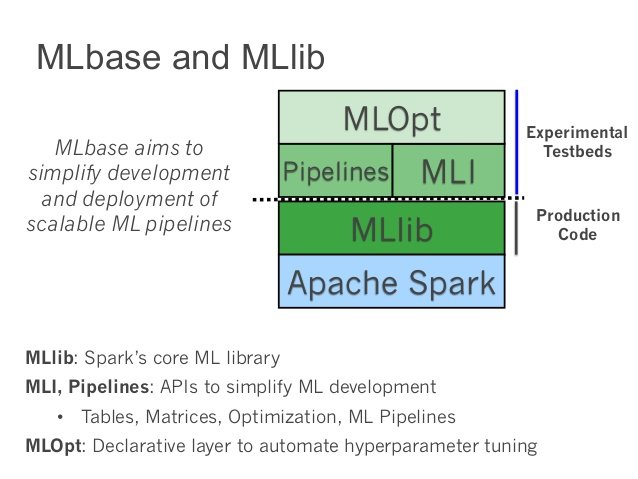

Now data is ready to be exported.MLlib:

In the support of Spark community,it works as core distributed ML library in Apache Spark.MLI:

Works on algorithm development and extraction,that introduces high-level ML programming abstractions.ML Optimizer:

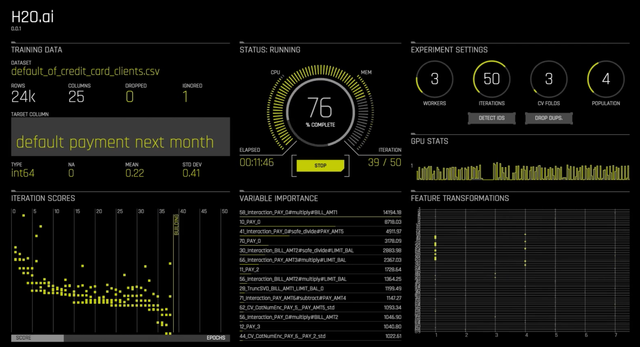

It automates the task of pipeline construction and also solves the search problems.Supports multi GPU for K-Means,GLM,XGBOOST:

Which improves speed of complex datasets.

Automatic featured engineering:

Produces highly accurate predictions.

Interprets the models:

Includes real time analysis by the featured panels.

Praxata also handles financial services,consumer goods and networking domains.Its good for you if your work requires data cleaning.

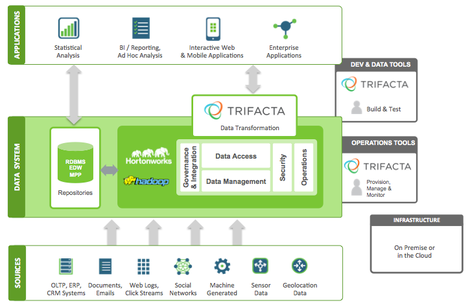

6)Trifacta:

Its another startup and focuses on data preparation.

Its GUI performs data cleaning automatically.For the input data,it provides summary of it along with the statistics column-wise.It automatically recommends transformations for the columns by using predefined functions which are easy to be called in the interface.

It uses these steps for preparing data:

It is used in life sciences,telecom and financial sectors.

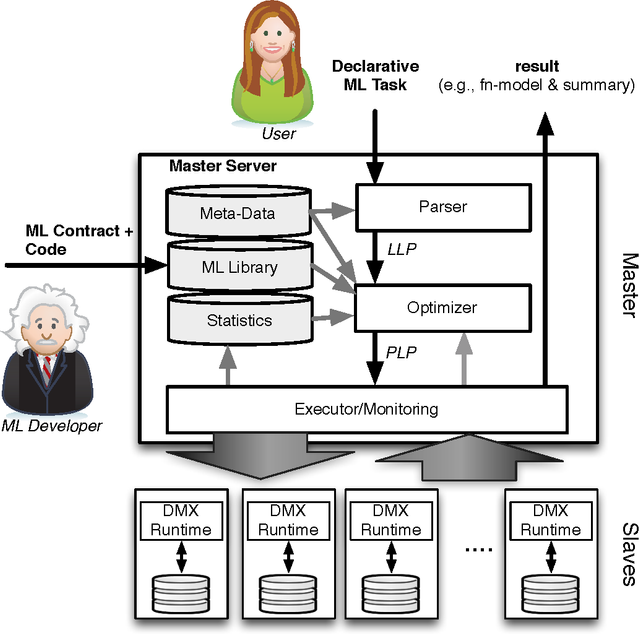

7)MLBase:

Its an open source project developed by Algorithms Machines People(AMP) in Berkeley,at University of California.

The goal of this company is to provide easy tools for machine learning,especially for large scale applications.

Its offerings:

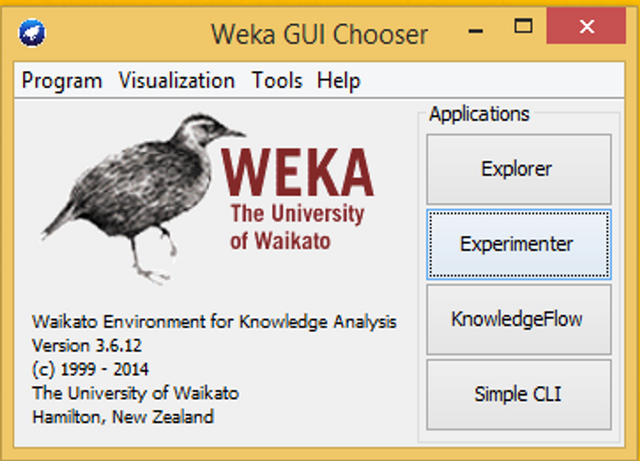

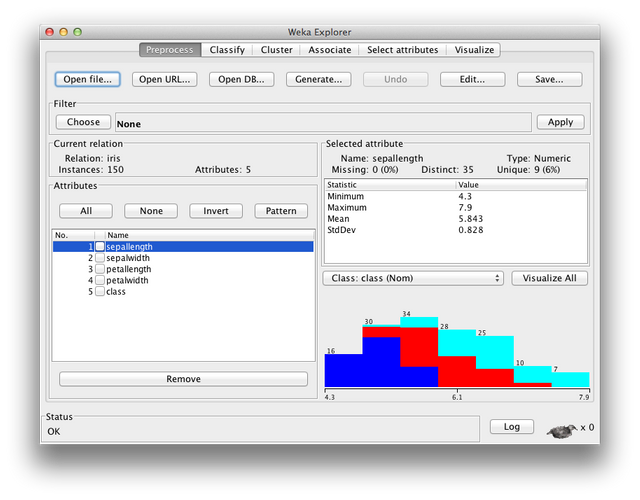

8)Auto-WEKA:

Its developed in New Zealand by the Machine Learning Group of the University of Waikato .Its a open-source data mining software and is based on java.It is also based on GUI,hence is good for amateur data scientists.To help you get started,its developers had provided papers and tutorials for the same.

Its used for academic and educational purposes.

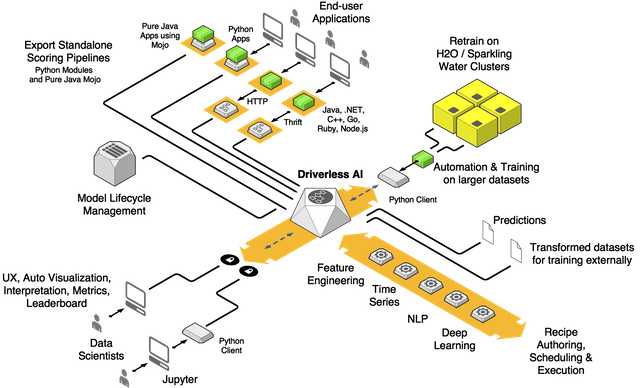

9)Driverless AI:

Its an amazing platform for companies that incorporates machine learning.It provides a 1 month of trail version.It uses drag and drop mechanism,using which you can track model's performance.

Mindblowing features:

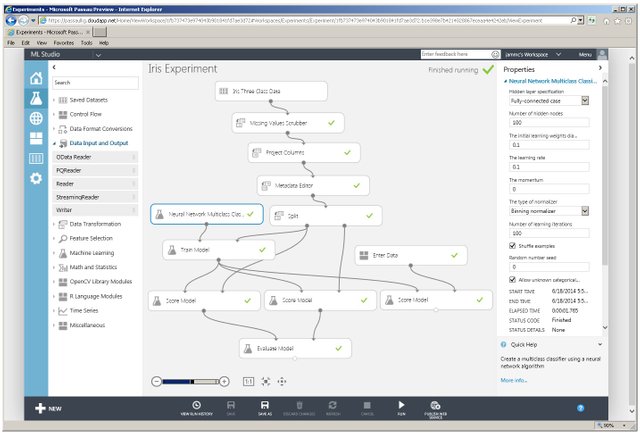

10)Microsoft Azure ML Studio:

It is a simple yet powerful browser-based ML platform.

It includes a visual drag-and-drop environment.

Hence, there is no need for coding. Microsoft has also published comprehensive tutorials and sample experiments for beginners.

End Note:

There are many more GUI-based tools, but these were the top ones.

I would love to hear your thoughts and personal experiences. Use the comment section below to let me know.

Thanks

@ankit-singh


