Does AI threaten people?

Many experts believe that artificial intelligence is almost the main threat to humanity. But how justified are these fears?

Machine against man

We hear almost every day that robots are replacing humans everywhere. According to even the most optimistic forecasts, machines will leave millions of people without work in the coming decades. But in most cases we are talking about highly specialized programs that may replace an individual specialist but certainly cannot be an "alternative" to Homo sapiens.

It is no secret that artificial intelligence can already compete with humans. The most striking example is AlphaGo, a program created by Google's DeepMind. Programs had previously beaten humans at checkers, chess and backgammon, but the ancient Chinese strategy game Go was long considered "too difficult for AI". The game does indeed have profound strategic depth: it is practically impossible to enumerate all the possible combinations, so it is hard for an AI to calculate how a game might unfold. Nevertheless, in a match played in October 2015 and made public in 2016, AlphaGo beat the European Go champion Fan Hui, and it did so with a crushing score of 5:0.
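A rough back-of-the-envelope calculation (not part of the original article) shows why brute-force enumeration is hopeless for Go. It assumes the standard 19 × 19 board and uses a crude upper bound in which each of the 361 intersections is empty, black or white; many of these arrangements are illegal, so the real number of legal positions is somewhat smaller, but the order of magnitude is the point.

```python
import math

# Go is played on a 19 x 19 board, i.e. 361 intersections.
POINTS = 19 * 19

# Crude upper bound: every intersection is empty, black or white.
# This also counts illegal arrangements, so it overestimates the
# number of legal positions, but only the order of magnitude matters here.
upper_bound = 3 ** POINTS

# Python integers have arbitrary precision, so the digits can be counted exactly.
digits = len(str(upper_bound))

# The same magnitude expressed as a power of ten.
exponent = POINTS * math.log10(3)

print(f"3^{POINTS} has {digits} digits (about 10^{exponent:.1f})")
# → 3^361 has 173 digits (about 10^172.2)
```

Even this rough bound dwarfs the roughly 10^80 atoms commonly estimated for the observable universe, which is why a Go program cannot simply check every possibility.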

But how was this result achieved? After all, as we have said, conventional algorithms cannot be applied here. AlphaGo's creators developed two neural networks, algorithms loosely modeled on chains of neurons in the human brain. One network (the "value network") evaluated the current position on the board, while the other (the "policy network") proposed promising next moves; the program combined both while searching for the best move.
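To make this division of labor concrete, here is a deliberately simplified Python sketch of a one-move lookahead that blends a policy score for each move with a value score for the position that move produces. It is not AlphaGo's actual algorithm, which combines its networks with Monte Carlo tree search over many simulated continuations. The functions `policy_net`, `value_net` and `play`, the move names and all the numbers are hypothetical stand-ins, and the 50/50 blending weight is an illustrative assumption.

```python
from typing import Dict, List

Board = str  # hypothetical: a position is just an opaque string here
Move = str


def policy_net(board: Board, legal_moves: List[Move]) -> Dict[Move, float]:
    """Hypothetical stand-in for a policy network: a probability for each legal move."""
    # A real network would compute these from the board; these are made-up numbers.
    toy_priors = {"D4": 0.5, "Q16": 0.3, "K10": 0.2}
    return {m: toy_priors.get(m, 0.0) for m in legal_moves}


def value_net(board: Board) -> float:
    """Hypothetical stand-in for a value network: estimated chance of winning, 0..1."""
    toy_values = {"start+D4": 0.48, "start+Q16": 0.75, "start+K10": 0.40}
    return toy_values.get(board, 0.5)  # unknown positions are treated as even


def play(board: Board, move: Move) -> Board:
    """Hypothetical move application: append the move to the position string."""
    return f"{board}+{move}"


def choose_move(board: Board, legal_moves: List[Move], weight: float = 0.5) -> Move:
    """Pick the move with the best blend of policy prior and resulting position value."""
    priors = policy_net(board, legal_moves)

    def score(move: Move) -> float:
        # weight=1.0 trusts only the policy; weight=0.0 trusts only the value estimate.
        return weight * priors[move] + (1 - weight) * value_net(play(board, move))

    return max(legal_moves, key=score)


moves = ["D4", "Q16", "K10"]
print(choose_move("start", moves))  # → Q16
```

With these toy numbers the policy alone would favor D4, but the value estimate of the resulting position tips the blended choice to Q16, which illustrates why combining the two kinds of judgment can beat either one used alone.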

"Nobel laureate"

This remarkable example is not the only evidence of what AI can do. Recently, a group of Australian physicists created a program that can carry out research on its own. Moreover, it managed to reproduce the experiment for which the 2001 Nobel Prize in Physics was awarded. That prize went to Eric Cornell, Wolfgang Ketterle and Carl Wieman "for the achievement of Bose-Einstein condensation in dilute gases of alkali atoms, and for early fundamental studies of the properties of the condensates". In the experiment, the researchers cooled a gas to about a microkelvin and then handed control of several laser beams to the artificial intelligence, which had to cool the trapped gas further, down to nanokelvin temperatures. The machine learned to run the experiment in less than an hour. What is more, it approached the process "creatively": for example, it changed the power of one laser and compensated for it with another. The authors of the study say that "a person would not have thought of this."

Who is who

As we can see, progress in creating AI is obvious, and the cases described above are only a few of many. Another question is what counts as artificial intelligence at all, and what should be included in the concept of "full-fledged AI". This question has troubled great minds for decades, but even now, in our advanced information age, it is very hard to answer. In 1980 the American philosopher John Searle coined the term "strong artificial intelligence": a program that would be not merely a model of the mind but a mind in the literal sense of the word. In contrast, proponents of "weak artificial intelligence" view AI only in the context of solving specific tasks. Simply put, they cannot imagine a machine operating on a par with a human.

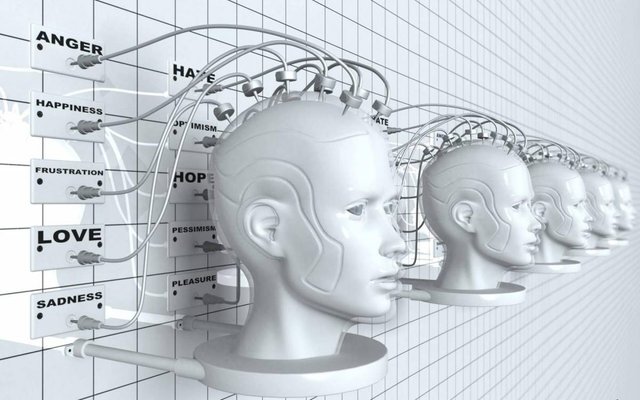

The moral and ethical aspect is equally important. Suppose scientists create an advanced AI that replaces humans in virtually every area. Would such a program share the morality of human society? Or is it fundamentally impossible to give a machine such qualities? Interestingly, a special term was introduced earlier: "pure artificial intelligence". In this case the machine understands and deals with problems as a real person would, but lacks the emotions characteristic of humans. The latter, incidentally, could give the machine a competitive advantage over humans, but more on that later.

Threat to survival?

"Weak AI" can, of course, deprive people of their jobs, but it is unlikely to threaten the survival of Homo sapiens as a species. Experts name as the main threat an advanced, full-fledged artificial intelligence for which humans would simply be unnecessary. A rather frightening forecast was made in 2015 by the famous British physicist Stephen Hawking, who declared that within a hundred years machines would get the upper hand over humans. "Within the next 100 years, artificial intelligence will overtake humans. And before that happens, we must do everything we can to make sure the machines' goals coincide with ours," Hawking said. He was concerned about the short-sightedness of AI developers. Earlier, Hawking had named the reason AI would surpass Homo sapiens: in his view, humans evolve very slowly, whereas machines will be able to improve themselves incredibly fast.

Hawking's fears are shared by Microsoft co-founder Bill Gates. "I am in the camp that is concerned about superintelligence. First, machines will do a lot of work for us without being superintelligent. That should be good if we manage it well. A few decades after that, though, artificial intelligence will be developed enough to be a cause for concern," he said in 2015.

Interestingly, even Elon Musk, perhaps the leading innovator of our time, who, one would think, ought to see the upside of every new technology, warns about the threat of AI. He has spoken of the possibility that full-fledged artificial intelligence could appear within the next few years. "Artificial intelligence is progressing very rapidly. You have no idea how fast. It is happening at an exponential rate," he said in 2015.

Fantasy and Reality

Fiction, of course, has also contributed to spreading the idea that AI is a threat. Perhaps the most frightening doomsday scenario is "gray goo": uncontrolled self-replicating nanorobots, carrying out a program to reproduce themselves, consume all the available matter on the planet.

Today this sounds like nonsense, but to many people the scenario does not seem so unlikely. In 2015 the Scottish scientist Duncan Forgan even proposed searching for extinct alien civilizations by looking for "gray goo". If such a doomsday scenario played out, he argued, the alien planet would be covered with a "sand" of tiny machines. They would reflect light and sharply increase the planet's brightness as it passes across the disk of its star.

In any case, AI does far more good than harm, and there is no reason to think that "robots are plotting something bad against humans". The main enemy of Homo sapiens is not machines, natural disasters, or even aliens from other worlds. The main danger to a human is another human. Whether artificial intelligence brings benefit or harm will depend entirely on how people themselves use new advances in science and technology.

Material taken from https://naked-science.ru/article/nakedscience/ugrozhaet-li-lyudyam-ii