The concepts of reputational risk and feedback in decentralized governance

As some may know from my previous blogposts, I have discussed governance. Some may also know that I've been thinking about this topic for years now (since 2015 at least) and have reached some conclusions in my own research. Some of those conclusions have been hinted at in the series of blogposts listed below:

- Global sentiment analysis - public sentiment as the invisible hand behind democracy and how it relates to DPOS

- The key to long term success for any crypto project is winning global sentiment (data analytics)

- Self monitoring, Dramaturgy, Microsociology, and Morality

- Consequentialism and big data, the need for intelligent machines to assist with moral decision making

- Is "Don't be Evil" enough or should we strive to be wise and good?

In this blogpost I will go into some detail defining my philosophical stances. I will explain why I think as I do, how I arrived at certain conclusions and/or opinions, and what I think are the biggest problems to tackle for improving governance.

The consequence based perspective

The perspective I take on "right" and "wrong" is consequence based. In addition, I hold no belief in a fixed ideology, dogma, or religion to inform me. I do not consider myself specifically to be a libertarian, anarchist, socialist, liberal, or conservative. I do not really believe in these brands or "schools of thought" as I see them all as limiting. These artificial limitations can prevent the rational investigator from exploring any possible solution and as a result reduce the innovative capacity of the rational investigator.

While my views do not include any fixed ideological structure there are what I consider to be "best practices". These are policies which have been shown to work a majority of the time, in most instances, and this would most resemble a form of rule utilitarianism if we seek to place it into the consequentialist box.

Some of these ideals or best practices are below:

- I currently think a world with human rights is a better (happier) world than a world without human rights.

- I currently think transcending the limits and barriers stifling individual self improvement is a good thing.

- I currently think science and wisdom building are of critical importance to self improvement.

- I think we should do what we can to minimize the involuntary and unnecessary suffering that exists in the world.

In terms of best practices measured in human rights, we can debate which human rights are indeed best practices, but for me it is clear that protecting life (saving lives) is ideal. This is the central right: if it is not protected then none of the other rights matter. So life always comes first. Protecting liberty comes second because being a person as we know it requires a level of freedom. Protecting the ability of people to pursue happiness is the logical consequence, because quality of life does matter. Of course there may be more best practices than this but to sum it up, keeping people in the world safe (physically), allowing or encouraging people to live freely, and enabling and allowing people to do their own thing, are all critically important.

How do I reach the conclusion that these values are important?

If I examine only my own life and desires I could reach the conclusion rationally that these values are important. I would like to be kept safe. I would like to be free. I would like to pursue my own happiness. This of course isn't enough for me to determine that these are best practices. What actually matters is do most humans want this? In order for it to be best practice it has to be shown that these values align with human nature (not just my own nature).

The fact is, humans are willing to risk their lives, fight, and die for these principles, for these rights, for these values. It is these actions backing these values which show me historically that human beings on some level deeply desire to be safe, to be free, to be happy. To call it best practices is to say that in order for any system of governance to truly consider itself to be meeting a certain quality standard of correctly functioning it is a must that it at minimum protect the fundamental rights that matter for a majority of people.

What if I'm wrong?

Just as with any best practices, if I find from new knowledge that my current best practices are wrong then they'll be modified or changed. To have and to follow static rules is to have a way of thinking which cannot evolve. If it cannot evolve then things cannot improve. On the individual level, I do not assume any current opinion is the final opinion. I do not assume anything I think today is something I'll think tomorrow after I've been exposed to new or perhaps higher quality information and knowledge.

Improving decentralized governance starts with recognizing the limitations of human nature

When I blogged about an exocortex, I hinted at a very pragmatic approach to improving governance. This approach was arrived at based on certain assumptions which so far neuroscience has shown evidence to support. One of the assumptions is that the human brain itself is a bottleneck. The human brain for example is limited in its ability to multitask. This limit in the ability of the human brain to multitask is, in my opinion, evidence in support of the idea that attention is a scarce resource. Although science has revealed that the vast majority of humans cannot multitask, most of us still try to do it. Why do we try to multitask even when according to neuroscientists we aren't suited for it? A possible explanation is that the world requires more from us than we can keep up with, so in order to remain competitive we spread our attention across multiple tasks thinking it will improve our productivity.

When I posted the blog titled: Attention-Based Stigmergic Distributed Collaborative Organizations, there was a deep yet important explanation contained in the quote:

"Attention is a scarce resource, information is not!"

In other words, as information grows exponentially our attention for our entire lifetime is a fixed amount. We simply do not have enough attention to keep up with the information being pushed at us. This information is pushed at us by advertisers. This information comes in the form of memes. These memes can be thought of as mind viruses and while some are helpful others can actually not be so helpful. For example, there are anti-science memes which encourage people to embrace ignorance, rebel against science, and reject the pursuit of knowledge. Because there is so much information hitting the conscious mind, the subconscious ends up taking on the task of absorbing the information which the conscious mind doesn't.

Attention scarcity in the context of a firm is made understandable from this quote from my previous post:

As human beings we are guided by our attention. It is also a fact that our attention is a scarce resource which many competing entities seek to capture. In an attention based view (ABV) of a firm it is attention which is the most precious resource and the allocation of attention is critical to the successful management of the firm. In a traditional top down hierarchical firm managerial attention is considered to be the most precious (Tseng & Chen, 2009), and is a very scarce resource. The allocation of attention within a firm can facilitate knowledge search. Knowledge search is part of the process of producing innovation and is effective or ineffective based on how management allocates their attention.

So the first limitation we can clearly see which is backed by the scientific literature (neuroscience and psychology) is that there is such a thing as attention scarcity. In managerial science literature we find that the ability of a firm to conduct a knowledge search (a critical process in generating innovation) is itself limited by ineffective use of the very scarce attention available. Misallocation of attention can slow the rate of innovation.

Attention scarcity is not the only problem. Another major problem of the human brain which platforms (and platform developers) do not seem to recognize is that the human brain is also limited in the number of relationships which it can manage. On platforms like Facebook people may have 1000 friends but the brain can only really manage a fixed number of relationships effectively. The scientific literature arrived at an approximation called Dunbar's number which represents how well the human brain itself can scale socially. This number represents the fact that we are socially limited.

Transparency advocates will often make the statement that freedom requires radical transparency. In fact Dan Larimer himself blogged about this in his post titled: "Does Freedom Require Radical Transparency or Radical Privacy?". In this school of thought, information symmetry can help us to reduce the "evils" and enhance the "goods". In fact we can see his argument from this quote:

Transparency is the foundation necessary to secure the moral high ground. Absent transparency, governments use their control over information to obscure reality and slander whoever is necessary in order to justify their actions. It is only through secrecy and lies that governments can maintain the illusion of the moral high ground.

In this quote Dan Larimer communicates the concept of "moral high ground". I interpret this term to mean "global sentiment" because the only thing a moral high ground represents in practice is the current global moral sentiment. The problem here is there is no way to predict where that might land. Another problem which I've identified in my own response posts is that the crowd for the most part isn't truly moral. There is a distinction to be made between "publicly recognized as moral", meaning in line with current public sentiment, and the more difficult kind of moral, which actually produces better consequences. In other words, if the crowd sentiment determines everything but the crowd is not wise, or moral in the consequence based sense of the word, then we could very well end up with a dystopian outcome where the crowd at the time thought it was right because everyone else thought it was right, but perhaps it was nothing more than mass hysteria.

We know propaganda can lead crowds into mass hysteria. We have seen this happen in Nazi Germany. We have seen this happen in the United States. The problem isn't in my opinion reducible to mere information asymmetry, but it's also a matter of what the hell are we supposed to do with the information once we have access to it? We simply do not have the attention spans (proven by neuroscience) nor do we have the social capacity (proven by neuroscience) to actually scale our ability to be moral.

If we look at the current Facebook scandal then we can see for instance that even while Facebook has us more connected to each other than ever in human history we still don't have access to quality information from which to make decisions. We are fed targeted information from central entities which seek to encourage ignorance in order to grow their wealth and power. This information may in fact even be disinformation designed to feed us harmful memes. Once again, we simply do not have the ability currently to make use of the massive amounts of big data we currently have access to. Instead people see Tweets on social media, declare it racist or sexist, and then seek to get those people fired in the belief that it is fighting racism/sexism (aka bigotry).

A common idea among those who believe in radical transparency as a solution is that if we just make the digital village like a small town we can trust each other again. The idea is that somehow, if we can make Steem like a small town where everyone knows everyone, things will be fine. The problem again is in Dunbar's number, which holds that the human brain can only manage a mean community size of 150. In other words the human brain itself is not designed to handle the level of transparency where 1000 or 10,000 or 100,000 different people make up a community and can see everything you do.

It's one thing if you are talking about a neighborhood of 150 people but it is another thing completely to think 1000 people, or 100,000 people, or 1 million people can somehow act as a community. There is no evidence that community itself scales beyond the mean of 150 due to the limitations of the human brain. Facebook does not change this, EOS will not change this, as this is a hard limit. Greater transparency in a hyper connected community of 1 million people who have no way of cognitively managing more than an average of 150 relationships seems to be a recipe for disaster.

What can we do about this attention scarcity problem?

When thinking about this problem in my own life, I recognized that the only possible solution is to delegate certain tasks to others. In fact the only way DPOS works on platforms like Steem and Bitshares is due to this ability to have proxy voters. This is an example of task delegation, but in these examples it's delegating to a human agent. While this may be necessary because someone has to be a witness, it also opens up the governance process to the same problems which we always see when humans act as politicians. In other words, DPOS is fueled by politics and the more transparent the platform becomes the more this will be the case. This does not mean DPOS is not useful, or that Steem is not of utility for the world, but it does mean as a governance platform it's got issues.

The solution I proposed for solving the attention scarcity issue is to delegate tasks to bots and humans depending on the nature of the task. Steem to a certain extent has incorporated this. The reason bots exist on Steem is because there isn't enough human attention available at current prices to make it "worth it" to do curation. Even if it were somehow worth it, the attention is still very limited and this is why bots are all over Steem. Specifically, I'm in favor of using bots on an even more fundamental level and my blog post titled: "Personal Preference Bot Nets and The Quantification of Intention" communicates the idea of turning intention itself into an abstraction.

What does it mean to turn intention into an abstraction? The core of the mind is the will. An intention is what you want to do or want to happen, and is based on your will. To quantify your intention is to take your will and communicate it in a language understandable by current (and future) artificial intelligence and/or humans. So if you've got your network of bots which do tasks which you cleverly defined then these bots can be thought of philosophically as an extension of your mind. The extended mind hypothesis is controversial and a majority of people in the cryptospace either have no understanding of it or reject it outright. It simply means that the bots which are under your control (attempting to act out your will) are as much a part of you as your limbs, hands, fingers, and the tongue you speak with.

In my old blogpost the quote below summarizes an important concept:

Value is produced by services which save time or attention

The more time a bot saves, the more value the bot adds. The less scarce attention a human being has to spend on mundane tasks, the more value a bot adds. This simple equation can allow you to measure how much value a particular bot is adding or taking away. If a bot is spreading advertisements which are unwanted then it's noise, it takes away value, it makes it harder to find truly valuable content. Beneficial bots should do the exact opposite of spam and should always seek to add as much value as possible to Steemit and to their owner(s).
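As a rough illustration of the "simple equation" in this quote, here is a minimal sketch in Python. It is not taken from the original post: the `BotActivity` record, the `net_value` function, and the choice of minutes as the unit are all assumptions made for the example. The sketch treats value as attention saved for the owner minus attention taken from others.

```python
from dataclasses import dataclass


@dataclass
class BotActivity:
    """Hypothetical record of what one bot did over some period."""
    minutes_saved: float     # attention the owner no longer spends on mundane tasks
    minutes_consumed: float  # attention the bot costs others (unwanted ads, noise)


def net_value(activity: BotActivity) -> float:
    """Net attention added (positive) or taken away (negative), in minutes."""
    return activity.minutes_saved - activity.minutes_consumed


# Made-up numbers for a helpful bot and a spam bot.
curation_bot = BotActivity(minutes_saved=120.0, minutes_consumed=5.0)
spam_bot = BotActivity(minutes_saved=0.0, minutes_consumed=45.0)

print(net_value(curation_bot))  # 115.0
print(net_value(spam_bot))      # -45.0
```

A bot with a negative result is noise under this measure, and a beneficial bot is one whose result stays positive.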

The quote above is the justification for Qbots or quantified intent bots. Attention scarcity, in my opinion, is solvable using agent based networks. In the case where the agent is an intelligent machine, the intent (will) is relayed to these machines in such a way that they receive it as commands. These commands could contain an attached fee so that a market can form, allowing many people to create bots which can satisfy the market. This is similar to what we currently see on Steemit already, so for the most part we are seeing the birth of this ecosystem in its infant stage. The other part of this puzzle of course is the case where the agent is an intelligent person and in this case we have what some might call an Oracle. @ned discussed the concept of SMT Oracles and my thinking is very similar in that just as bots can accept a task, so too can a person. In fact in a functioning ecosystem as I imagine it, a bot can receive a task, break it into pieces, rent out the services of humans and other bots, and then relay the completed result back to the customer.

To put my vision and Ned's vision into context, here is a quote from Ned:

The economic and political mechanics of SMT Oracles are similar to Steem DPoS Witnesses - where instead of block and USD price-feed producing Witnesses, Oracles are paid for with SMT subsidies/new token emissions for pre-defined event-verification jobs and participants are voted into Oracle positions by stake-weighted voting of an SMT or a combination of SMTs. There are some other major differences, including the possibility of second levels of Oracles whose jobs are Quality Assurance, and the need for data consistency thresholds when determining consensus over how SMTs may be distributed.

On this vision Ned and I are in agreement. We may disagree a bit on technical details but we both conceive of the same idea. Humans currently only function as witnesses but in theory there are a lot of roles/tasks which only humans can do well. AI cannot yet do certain kinds of tasks. As I've also put it, the role of AI is to do the tasks the humans cannot do well and the role of humans is to do the tasks that the AI cannot do well or at all. The vision I see is for agents (intelligent persons or intelligent machines) to act as the foundation for what I call a decentralized exocortex.

What is an exocortex as I've defined it? It's a technical solution to the problem of attention scarcity. It amplifies the intelligence of the fundamentally limited human brain.

What can we do about the limited social intelligence of the human brain?

Attention and social intelligence are fundamentally limited. In recognizing these hard limits (of my own brain and every brain), it became necessary to search for technical means to transcend these limits. The mechanism I arrived at for solving this problem effectively is to build a decentralized exocortex. One of the first questions I could be asked is why does it have to be decentralized? Why not merely trust centralized companies in China, Russia, or the US, to build this capacity for us?

Prior to the current Facebook scandal, I did not have a very strong argument to refute people who would say I'm merely being paranoid and that I should trust these companies. This is because there weren't a lot of strong rational arguments based on facts (not conspiracy theories) for why we shouldn't just let Exocortex Corp build the global wisdom machine. The problem is becoming clearer over time: whichever company or entity has central control of (and thus access to) our data is in a better position to manipulate how we think, keep us ignorant, and weaponize that data. This scenario may seem like a good thing for governments at war with each other, and for the entities designated with the task of spreading harmful memes in the national interest, but for the civilians across the globe who do not want cold war style propaganda to dictate the fate of their lives, perhaps an alternative means of communication is necessary.

The Internet itself was created by ARPA to allow for communication channels to remain open even in the context of a nuclear war. This was because during the cold war scenarios the first thing which would likely be attacked are the means of communication (this is true in any war). If there are centralized communications, then whomever you deem to be the enemy at the time is likely to attack that with priority. The Internet preserves communication but the major issue now is that there is nothing to build and preserve wisdom. We can connect all we want, we can communicate as much as we like, but if all we communicate is disinformation, propaganda, "fake news", etc, then our ability to function as a community is effectively wiped out. In order to have a community there is a requirement to not just connect and communicate, but to produce and share knowledge, to grow wisdom, and to collectively make increasingly wise decisions over time.

The purpose behind a decentralized Exocortex

By Longlivetheux [CC BY-SA 4.0 (https://creativecommons.org/licenses/by-sa/4.0)], from Wikimedia Commons

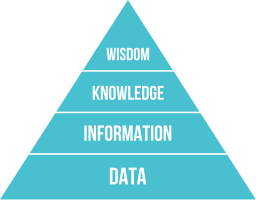

A decentralized exocortex is more than just a shared storage mechanism. We have that already with the Internet. It's more than just another decentralized storage like a DHT or IPFS. A decentralized exocortex must be a wisdom machine (or wisdom booster). It must allow anyone to contribute knowledge to it. It must have some mechanism of distributing the most current knowledge to all participants. It must have the ability to actually analyze information in such a way that it can transform the bits of information into knowledge, and from that knowledge produce wisdom. The DIKW pyramid reveals a critical functionality necessary for a decentralized exocortex.

To put it in a different way, the concept of "mind uploading" is very popular in some circles. The global mind is currently being uploaded onto the Internet. The problem is that the mind is uploaded currently as incoherent barely organizable pieces of information. This information is searchable by search engines run by companies but the ability to search is not built into the web itself. The ability to process data, to process information, is also not built into the network itself. As a result, data analytics companies are paid and these companies then provide unfair advantages to other companies in the areas which actually matter with regard to processing information. The idea behind a decentralized exocortex is to democratize the capabilities of knowledge management, information processing, and wisdom building (among many other functions).

The concept of feedback in decentralized governance

The concept of feedback was first introduced by the field of Cybernetics. Cybernetics is defined below:

Cybernetics is a transdisciplinary approach for exploring regulatory systems: their structures, constraints, and possibilities. Norbert Wiener defined cybernetics in 1948 as "the scientific study of control and communication in the animal and the machine." In the 21st century, the term is often used in a rather loose way to imply "control of any system using technology." In other words, it is the scientific study of how humans, animals and machines control and communicate with each other.

In order to do governance properly we require a mechanism of adapting the government to the needs of its members. Democracy is the method of allowing all members to vote in such a way that their vote is anonymous. Their vote must be anonymous in order to discourage coercion (coercion resistance). If there is no coercion resistance then just like in dictatorships, all votes will go to the strong power and to vote any other way is met with harsh consequences. This coercion can happen under capitalism if, for example, people are afraid to vote the opposite of how their boss is voting: if their boss can simply look at how everyone in the company voted, then the boss can simply fire and permanently ban all employees who voted the wrong way.

Another problem we see with voting in a democracy is that most of the time there is the assumption that the voter will be informed. The voters are often misinformed. There is the assumption that the voters will be wise, but often the voters are not wise. In democracy, voters often merely vote how their tribe votes, for parties, without deep understanding of policies or the impact of those policies on themselves or their community. In other words, there is not always any incentive to promote wisdom and discourage the growth of ignorance because ignorant voters can be made to vote for a party which may only want to collect votes to win elections.

The concept of feedback is much broader than voting. Feedback is a matter of collecting information. This information can be collected in an anonymous manner. This information could be for example what people really believe, what people really want, what people upvoted or downvoted, what people said in conversations, it can literally include all sources. In other words, big data collection and analysis can inform a network. Data analysis can determine the current sentiment on any issue, it can determine spending habits, it can determine based on the values each participant inputted as their values in their profile what policies are aligned with those values. So the concept of feedback in a technical sense goes far beyond democracy and the goal is understanding the mind of each participant.
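To make the last point concrete, here is a minimal sketch of matching declared values to policies. Everything in it is an assumption for illustration: the `alignment` function, the value names, the weights, and the choice of cosine similarity as the measure are mine, not a description of any existing platform.

```python
from math import sqrt


def alignment(profile: dict[str, float], policy: dict[str, float]) -> float:
    """Cosine similarity between declared values and a policy's value tags."""
    keys = set(profile) | set(policy)
    dot = sum(profile.get(k, 0.0) * policy.get(k, 0.0) for k in keys)
    norm_profile = sqrt(sum(v * v for v in profile.values()))
    norm_policy = sqrt(sum(v * v for v in policy.values()))
    if norm_profile == 0.0 or norm_policy == 0.0:
        return 0.0  # nothing declared on one side, so no measurable alignment
    return dot / (norm_profile * norm_policy)


# Hypothetical participant profile: how strongly each value is held.
profile = {"safety": 1.0, "liberty": 0.8, "transparency": 0.2}

# Hypothetical policies, tagged with the values they mainly serve.
policies = {
    "policy_a": {"safety": 0.9, "liberty": 0.7},
    "policy_b": {"transparency": 1.0},
}

ranked = sorted(
    policies,
    key=lambda name: alignment(profile, policies[name]),
    reverse=True,
)
print(ranked)  # ['policy_a', 'policy_b']
```

In a privacy-preserving design the profile would stay on the participant's side and only the ranking would need to be computed, but that part is outside the scope of this sketch.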

As machine intelligence improves over time then this understanding can eventually reach a point where the network itself has a better digital profile of the political interests of the community participant than the community participant can actively communicate or understand about themselves. At this point the feedback loop is that the network can inform the participant so as to enhance their understanding of themselves, their true interests, their current values, in other words providing the user with a mirror to show them a digital representation of themselves.

Unlike with centralized social media, if this functionality is decentralized then the participant at least theoretically will have less to fear about being exploited by this deep understanding. It's one thing if a company knows you better than you know yourself vs if a decentralized peer to peer network knows you better than you know yourself. That decentralized network can be designed in a way that none of the other participants in the network know who you are, or can link your anonymous data back to you, or perhaps the data simply can remain encrypted in a form which no one but you can access. In any of these cases, the problems of data breaches, of hackers exploiting the knowledge, of it being sold to foreign intelligence agencies, all are reduced.

This does not mean that decentralized networks cannot be hacked, or that encryption is foolproof. It simply means that people who do not want to trust their digital lives, their digital selves, to a US company will not be required to do so. If you'd prefer to trust the peer to peer environment instead of the centralized environment then you're putting your trust now in the ability of the hardware to keep things secure. You're putting trust in the ability of programmers to produce secure code and of course the math which forms the basis of the cryptography which these programmers rely on. In summary, a decentralized alternative is not risk free and it is not trustless; the distinction is that your trust is placed in different people, in different areas. It is minimized trust rather than trustlessness.

Reputational risk and the reputation economy

The concept of reputational risk is central to understanding how a reputation economy can work in a decentralized context. If we think about things from a consequence based perspective then the reputation of a business is critical to the success or failure of that business. Competitor businesses will be able to gain an advantage if they can discredit or diminish the reputations of their competition. If a business is going to make a decision it must often run a risk assessment which includes the reputational risk to the business.

For example, a company in 2018 cannot appear racist. As a result, that company may adopt policies which reduce the risk that the company could suffer a scandal which could be leveraged in such a way so as to smear the company with a racist reputation. This means companies which have shareholders, which are public, and which are global, have a tendency to be risk averse. In particular, a company with global shareholders must always track global sentiment so as to avoid ever being on the wrong side of it. This concept applies to companies, and if we think of companies as mere "agents" in an economic context, we can also think of persons and bots as agents as well. Agents whether in an economic or social context, have to deal with/manage reputational risks. These reputation metrics make up the reputation economy, and as this reputation economy becomes more complex, more sophisticated, more automated (less biased), then we will soon in my opinion have trust even in a decentralized global environment.

How do you know who you can trust in a decentralized global environment?

People who are accustomed to a local mentality may not understand how metrics (from data analysis) can become trust. To put it simply, the more relevant metrics you have about a potential business partner, the better your decisions can become. These can be metrics which determine whether or not you should give someone a loan. These can be metrics which determine whether or not a service provider is likely to honor a contract. These metrics are trust in the decentralized context.

To illustrate, if we look at the metrics which allow for witnesses to remain a witness without being booted from that privileged position, then we know that being able to run a server flawlessly with no downtime is a metric to track. These sorts of metrics ultimately produce a kind of picture, a kind of digital identity, and over time an automated method can simply analyze these metrics to tell each of us who to trust and how. The problem currently is that without privacy these metrics cannot be collected because it's simply not safe to trust the entire Internet to prove yourself trustworthy. For this reason privacy remains a necessity for building trust in a safe manner, and this can be accomplished potentially using advanced cryptography.

The conclusion is that once the technical capacity passes a certain threshold then the problem of trust in a decentralized context will cease being a problem. We will be able to know a certain pseudonym is owned by a person who can be trusted without having to know who the person behind it is. The pseudonym could be thought of as a business, and the business could be owned by whomever, but the metrics would show that the pseudonym has a perfect track record, and that all the owners of the private keys behind it, are indeed trusted members of the community, without any more knowledge being necessary.

All this is possible via scores. Scores could be thought of as fitness criteria. Just as there are credit scores, there will eventually be scores for just about anything imaginable. The only way to get a high score will be to have earned it by track record. This means a person can in theory try to set up a Sybil attack on the reputation economy but it would fail because it is extremely time consuming to build up a good reputation but very easy to lose it. Just as you can set up bad witnesses in DPOS, or on Casper you can have people who try to betray the protocol, the fact is that those who attempt to attack it will receive a negative cost (negative score), which means they could lose their money, or lose their position and have to start over.
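The asymmetry described here (slow to earn, quick to lose) can be sketched in a few lines. This is only an illustration under stated assumptions: the `Reputation` class, the `gain` and `penalty` parameters, and their default values are hypothetical and do not describe how Steem, DPOS, or Casper actually score participants.

```python
class Reputation:
    """Hypothetical score in [0, 1] that rises slowly and falls quickly."""

    def __init__(self, gain: float = 0.01, penalty: float = 0.5) -> None:
        self.score = 0.0
        self.gain = gain        # share of the remaining headroom earned per good outcome
        self.penalty = penalty  # share of the current score lost per bad outcome

    def record(self, honored: bool) -> None:
        """Update the score after one observed outcome (e.g. a contract)."""
        if honored:
            self.score += self.gain * (1.0 - self.score)
        else:
            self.score *= 1.0 - self.penalty


# A long-standing pseudonym with 300 honored outcomes.
veteran = Reputation()
for _ in range(300):
    veteran.record(honored=True)

# A freshly created identity, as in a Sybil attempt, with only 5.
newcomer = Reputation()
for _ in range(5):
    newcomer.record(honored=True)

print(round(veteran.score, 2), round(newcomer.score, 2))

# One betrayal wipes out half of what took hundreds of outcomes to earn.
veteran.record(honored=False)
print(round(veteran.score, 2))
```

Under these assumed parameters a new identity cannot shortcut its way to a high score, and an attacker who burns a reputation has to start the slow climb again.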

The solutions I present and technological trajectory in progress represent a massive change in how human beings will interact with each other. The current trajectory is leading to a world where algorithms potentially judge us all. We have the option to shape that world into a world where these algorithms benefit us as individuals (and not just companies trying to sell stuff or governments trying to win wars). As individuals we would like to know who we can trust, but our attention is limited. Some of us would like to be wise, but our ability to analyze the vast amount of information is also limited. If we build the technical means to transcend these issues then we may develop a new way of communicating, not just communicating in the very limited ways that our brain allows, but in new ways.

It seems inevitable that as the world becomes more connected, markets will form for judging. These judging markets will develop the AI and tools that allow our exocortex (unless such tools remain restricted to big companies and governments) to filter and determine who is a "good" or "bad" person according to the search criteria. Trust, good, and bad are concepts that our limited brains currently struggle to negotiate by interpreting situations and behaviors we barely understand. They will likely be outsourced to machines, algorithms, and AI, hopefully unbiased, that will score everything.

Moral judgment? That may become automated, just as the decision to lend someone money is based on a FICO score. What you view as moral and immoral will eventually be formalized and quantified, and once that happens there will be a way to turn your moral expectations into a score. Is this something we want? It may be unavoidable in a hyperconnected, transparent world.

References

- Rosen, C. (2008). The myth of multitasking. The New Atlantis, (20), 105-110.

- As AI Meets the Reputation Economy, We’re All Being Silently Judged

Frankly, I do not have the ability to respond point by point to the contents of this post. I was absolutely stunned. Reading this content is like completing 3 books or 3 semesters of lectures. Very brilliant. This is the first time I have found content this complete.

A senior citizen (don't mind the name, I don't know how to refer to you yet) who doesn't take advantage of his reputation and steempower by posting just pictures or a five line post.

I really appreciate knowing that there are people out there who don't take advantage of power.

Masterpiece. 👏💖

Captivating in its brilliance. Thank you @dana-edwards because you're helping me get to that next level.

I still need to digest some of this, but I will speak from my own experience and approach to back up your case. I've reached that point here on STEEM where I am feeling attention scarcity kick in, and I have only 225 followers. My feed has become overwhelming. So I am trying to defer some of my thinking, identify those I can trust on a particular topic or subject, and consolidate my attention via them. It feels like we are building a kind of Hive Mind on here, but maybe my terminology is wrong as I am not well read.

Two things I have been exploring that you touched on. The concept of the Proxy here is poorly utilised and understood IMHO and as a concept it seems in a very formative stage. I can see a time when we might nominate different proxies on different topics rather than an "all knowing" proxy that is also inevitably limited by attention scarcity. I might trust Person A to represent me on Subject X which could be a specialty of theirs, but on Subject Y I know they are uninformed. The other is the use of Delegation which I see as another form of Proxying, but only economic power rather than political influence. Perhaps on a technology level these proxy features will meet somewhere down the road in a form of hybrid.

I think that the problem of attention deficit is getting worse and worse as the amount of information we are bombarded with constantly increases.

To be honest I'm not sure software can really solve this. The human adaptation to this is to form small communities (you mentioned 150) where the attention span of the individual can concentrate and each amount of information takes value.

I'm not sure the wisdom of the masses works when it comes to morality. I feel like every day brings more political correctness, to the point where divergent views are muted or judged amoral.

True debate is hard when the number of participants involved is huge because there is always a trend to alignment towards a general moral consensus.

Thanks for a great study into the subject.

Thank you for the share @dana-edwards I hope so maybe share for one topic

Hi, I just followed you :-)

Follow back and we can help each other succeed!!@romyjaykar

Nice post..... improving decentralized governance is of the essence

In a world where nothing is private, where every behavior is "counted", we must now consider this:

There is no way in hell we can expect Alice to realistically know everything about everyone she ever interacts with. Nor would it make sense for her to want to know this as it would potentially bias her in dumb ways. What Alice would need to know is the answer to her query to the network which could be as simple as "Can I trust Bob?".

The return would be a score: if the score is beyond a certain threshold the answer is yes, otherwise it's no. Alice does not need to know what Bob eats for breakfast each day, but if she wanted that to be a factor in the score then an algorithm could capture this via Instagram tags if Bob films himself eating breakfast each day.

Basically, the whole idea of Bob gets quantified. It then gets analyzed by algorithms produced by a market, a market where the fittest algorithms get the most use and earn the most money for their creators. The end result is that Alice gets her question answered by her exocortex.
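As a rough sketch of that query in Python: Alice picks a scoring algorithm and a threshold, and gets back only yes or no. Everything here is hypothetical and chosen for illustration, including the `can_trust` function, the `track_record_scorer` algorithm, the profile fields, and the 0.9 threshold; a real system would pull Bob's data from the network rather than a local dictionary.

```python
from typing import Callable, Dict

# A scorer is any algorithm that maps a quantified profile to a number.
Scorer = Callable[[Dict[str, float]], float]


def track_record_scorer(profile: Dict[str, float]) -> float:
    """Hypothetical scorer: fraction of agreements the pseudonym kept."""
    kept = profile.get("agreements_kept", 0.0)
    broken = profile.get("agreements_broken", 0.0)
    total = kept + broken
    # A pseudonym with no history has earned no score yet.
    return kept / total if total else 0.0


def can_trust(profile: Dict[str, float], scorer: Scorer,
              threshold: float = 0.9) -> bool:
    """Answer 'Can I trust Bob?' without exposing the underlying data."""
    return scorer(profile) > threshold


# Assumed example data for Bob's pseudonym.
bob = {"agreements_kept": 95, "agreements_broken": 5}
newcomer: Dict[str, float] = {}

print(can_trust(bob, track_record_scorer))
print(can_trust(newcomer, track_record_scorer))
```

Passing the scorer in as a parameter mirrors the idea of a market of algorithms: Alice's exocortex could swap in whichever scoring algorithm she prefers without changing the question she asks.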