How to run ZenTao in Kubernetes with NFS

- Test on a virtual host

Files that need to be edited

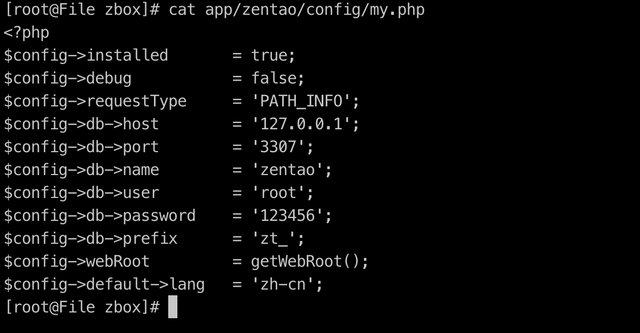

/opt/zbox/app/zentao/config/my.php

/opt/zbox/app/zentaoep/config/my.php

/opt/zbox/app/zentaopro/config/my.php

/opt/zbox/etc/php/php.ini

Edit the port in the Apache configuration

/opt/zbox/etc/apache/httpd.conf
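The port change can also be scripted. The following is a minimal sketch, assuming the port is set by a plain "Listen <port>" line in httpd.conf; set_listen_port is a hypothetical helper name, not something shipped with zbox, and a directive written as "Listen address:port" is not handled.

```shell
#!/bin/sh
# Hypothetical helper (not part of zbox): rewrite the port of a plain
# "Listen <port>" directive in an Apache config file.
set_listen_port() {
  conf_file=$1
  new_port=$2
  # Keep a .bak copy of the file, then replace the number on every line that
  # consists only of "Listen <digits>". Commented-out lines are left alone.
  sed -i.bak -E "s/^([[:space:]]*Listen[[:space:]]+)[0-9]+[[:space:]]*\$/\1${new_port}/" "$conf_file"
}

# Example (assumed path and port):
#   set_listen_port /opt/zbox/etc/apache/httpd.conf 80
```

The backup file makes it easy to compare the result with the original before restarting Apache.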

Create the group and user

Otherwise Apache may fail with "AH00544: httpd: bad group name nogroup".

    useradd nobody
    groupadd nogroup

Keep or remove them afterwards as you see fit.

Where attachments are stored

/opt/zbox/app/zentao/www/data/upload

ZenTao auto backup

root/opt/zbox/app/zentao/tmp/backup

Edit the config files listed above and replace the database settings with your own. I am using Alicloud RDS here.

After editing, start the services with

    /opt/zbox/zbox start

or start Apache alone with either of

    sh run/apache/apachectl start
    /opt/zbox/run/apache/httpd -k start

- Preparation before running the container

Since I had already uploaded some pictures, I need persistent storage here.

Create a script in /opt/run and link the storage directory to /opt/zbox.

Install NFS

Install nfs-utils

    yum install -y nfs-utils

Edit /etc/exports and grant access

    cat >> /etc/exports <<EOF
    /opt/ 192.168.0.0/16(rw,no_root_squash,sync)
    EOF
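Running the heredoc a second time appends the same export line again. A minimal sketch of an idempotent variant follows; add_nfs_export is a hypothetical helper name, and the optional second argument exists only so the function can be tried against a scratch file before touching /etc/exports.

```shell
#!/bin/sh
# Hypothetical helper: append an export line only if the exact line is not
# already present in the exports file.
add_nfs_export() {
  export_line=$1
  exports_file=${2:-/etc/exports}
  touch "$exports_file"
  # -x matches whole lines, -F treats the line as a fixed string, not a regex.
  if ! grep -qxF -- "$export_line" "$exports_file"; then
    printf '%s\n' "$export_line" >> "$exports_file"
  fi
}

# Example, using the export from this article:
#   add_nfs_export '/opt/ 192.168.0.0/16(rw,no_root_squash,sync)'
```

After changing the file, the exports still have to be reloaded with exportfs -r.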

Start the NFS service

    systemctl enable rpcbind.service
    systemctl enable nfs-server.service
    systemctl start rpcbind.service
    systemctl start nfs-server.service
    systemctl stop firewalld
    systemctl disable firewalld
    rpcinfo -p

Export the NFS share

    exportfs -r
    exportfs

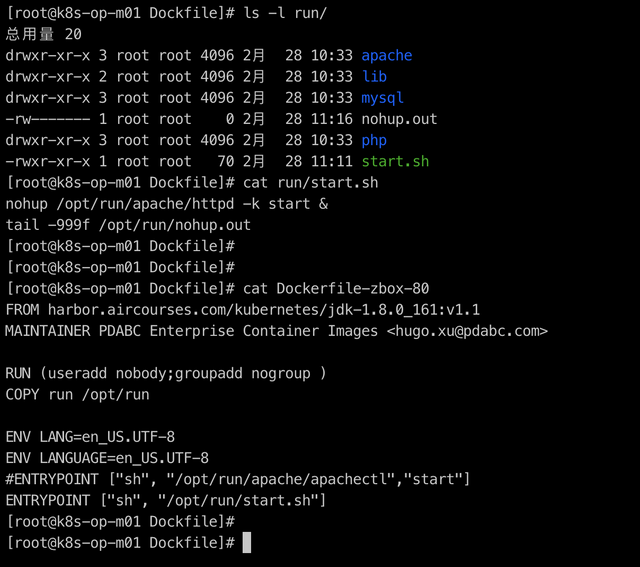

Create the Dockerfile

I want to link /opt/zbox/run with /opt/, so I need to keep one process running, otherwise the container exits.

Create start.sh in /opt/run/

    nohup /opt/run/apache/httpd -k start &
    tail -n 999 -f /opt/run/nohup.out
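One detail worth knowing: nohup only writes nohup.out when its standard output is a terminal, and it writes the file into the current directory, so in a container the log may not end up at /opt/run/nohup.out by itself. A hedged sketch of a start.sh variant that redirects the output explicitly is shown below; start_with_log and STARTED_PID are hypothetical names introduced for illustration.

```shell
#!/bin/sh
# Hypothetical helper: start a command in the background and append its
# stdout and stderr to a log file at a known location.
start_with_log() {
  log_file=$1
  shift
  mkdir -p "$(dirname "$log_file")"
  nohup "$@" >>"$log_file" 2>&1 &
  # PID of the background job, so the caller can wait for it or inspect it.
  STARTED_PID=$!
}

# In start.sh this could be used as follows (paths taken from the article):
#   start_with_log /opt/run/nohup.out /opt/run/apache/httpd -k start
#   exec tail -n 999 -f /opt/run/nohup.out
```

The final tail stays in the foreground as the container's main process, which is what keeps the container alive.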

Create the Dockerfile in /opt/run/

    FROM harbor.aircourses.com/kubernetes/jdk-1.8.0_161:v1.1
    MAINTAINER PDABC Enterprise Container Images [email protected]
    RUN (useradd nobody; groupadd nogroup)
    COPY run /opt/run
    ENV LANG=en_US.UTF-8
    ENV LANGUAGE=en_US.UTF-8
    ENTRYPOINT ["sh", "/opt/run/start.sh"]

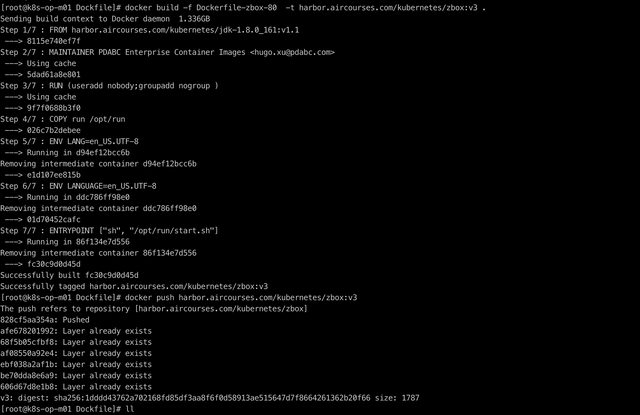

Build and push the image

    docker build -f Dockerfile-zbox-80 -t harbor.aircourses.com/kubernetes/zbox:v3 .
    docker push harbor.aircourses.com/kubernetes/zbox:v3

- Test in Kubernetes

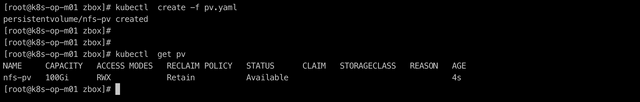

Create pv.yaml

Create the NFS PersistentVolume

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv
      namespace: default
      labels:
        pv: nfs-pv
    spec:
      capacity:
        storage: 100Gi
      accessModes:
        - ReadWriteMany
      persistentVolumeReclaimPolicy: Retain
      nfs:
        path: /opt/zbox
        server: 192.168.13.212

Create it:

    kubectl create -f pv.yaml
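If the NFS server address or export path differs between environments, the manifest can be generated instead of edited by hand. This is a minimal sketch under that assumption; render_pv is a hypothetical helper, and the namespace field is left out because a PersistentVolume is a cluster-scoped resource.

```shell
#!/bin/sh
# Hypothetical helper: print a PersistentVolume manifest for an NFS share.
# Arguments: NFS server, exported path, optional capacity (default 100Gi).
render_pv() {
  nfs_server=$1
  nfs_path=$2
  capacity=${3:-100Gi}
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv
  labels:
    pv: nfs-pv
spec:
  capacity:
    storage: ${capacity}
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: ${nfs_path}
    server: ${nfs_server}
EOF
}

# Example, with the values used in this article:
#   render_pv 192.168.13.212 /opt/zbox > pv.yaml
```

The generated file can then be created with kubectl exactly as above.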

Create pvc.yaml

Create the NFS PersistentVolumeClaim

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: nfs-pvc
      namespace: default
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 100Gi
      selector:
        matchLabels:
          pv: nfs-pv

Apply it:

    kubectl apply -f pvc.yaml

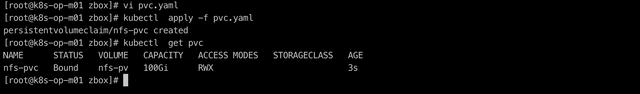

Create deployment.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      # The Deployment name has nothing to do with the Service and Ingress binding
      name: zbox-dp
      namespace: default
    spec:
      replicas: 1
      # Labels are necessary in the template because Deployment.spec.selector is a required field and must match template.metadata.labels
      selector:
        matchLabels:
          app: zbox
      # Everything defined in the template applies to every replica (each pod of the Deployment). Labels cannot be defined in template.spec.containers. Use "kubectl get pods --show-labels" to check
      template:
        metadata:
          labels:
            app: zbox
        spec:
          containers:
            # The container name does not matter for the Service and Ingress binding
            - name: zbox
              image: harbor.aircourses.com/kubernetes/zbox:v4
              volumeMounts:
                - mountPath: /opt/zbox
                  name: zbox-data
              ports:
                # Port names must be lowercase
                - name: http
                  containerPort: 80
          volumes:
            - name: zbox-data
              persistentVolumeClaim:
                claimName: nfs-pvc
          imagePullSecrets:
            - name: myregistrykey

Apply it:

    kubectl apply -f deployment.yaml

Create svc-zbox.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: zbox
      namespace: default
    spec:
      type: ClusterIP
      selector:
        app: zbox
      ports:
        - name: http
          port: 80
          targetPort: 80

Apply it:

    kubectl apply -f svc-zbox.yaml

Create ingress-zbox.yaml

The Ingress routes external HTTP traffic to the zbox Service on port 80. It binds to the Service by name; the Deployment and container names play no part in that binding.

kubectl apply -f ingress-zbox.yaml
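No Ingress resource is listed for this step, so here is a hedged sketch of what ingress-zbox.yaml could contain. It assumes a cluster that serves the networking.k8s.io/v1 Ingress API and has a default ingress controller; the host name zentao.example.com and the helper render_ingress are hypothetical, and the original setup may well have used a different API version, host, or annotations.

```shell
#!/bin/sh
# Hypothetical helper: print an Ingress manifest that sends all HTTP traffic
# for one host to a Service. Arguments: host, optional Service name
# (default zbox), optional Service port (default 80).
render_ingress() {
  ingress_host=$1
  service_name=${2:-zbox}
  service_port=${3:-80}
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ${service_name}
  namespace: default
spec:
  rules:
    - host: ${ingress_host}
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: ${service_name}
                port:
                  number: ${service_port}
EOF
}

# Example (the host name is a placeholder):
#   render_ingress zentao.example.com > ingress-zbox.yaml
```

The backend refers to the Service by name and port, which is why the Deployment and container names do not affect the routing.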

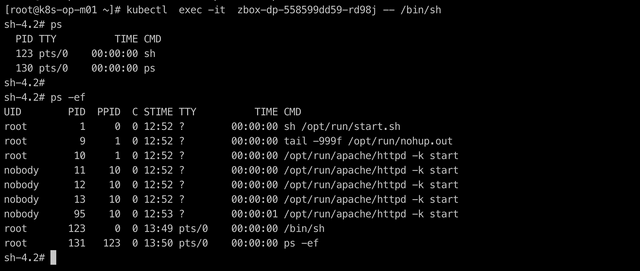

Visit ZenTao

Upload a picture and check that it is written to the NFS share.

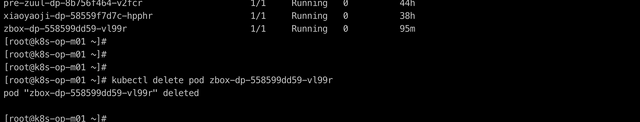

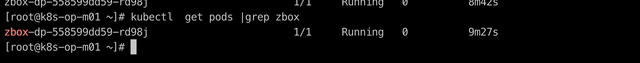

When the pod is deleted, the Deployment automatically starts a replacement pod.

Check the record again: the picture is still there, which shows that the container and the NFS storage are working correctly.
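The same check can be made from the command line on the NFS server by comparing the upload directory before and after the pod is replaced. A minimal sketch, assuming the attachments live under /opt/zbox/app/zentao/www/data/upload as noted earlier; snapshot_uploads is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print one "checksum size path" line per file under a
# directory, sorted by path, so two snapshots can be compared with diff.
snapshot_uploads() {
  upload_dir=$1
  ( cd "$upload_dir" && find . -type f -exec cksum {} \; | sort -k3 )
}

# Example workflow (run on the NFS server):
#   snapshot_uploads /opt/zbox/app/zentao/www/data/upload > before.txt
#   ... delete the pod and wait for the replacement ...
#   snapshot_uploads /opt/zbox/app/zentao/www/data/upload > after.txt
#   diff before.txt after.txt && echo "uploads unchanged"
```

An empty diff means every uploaded file survived the pod replacement unchanged.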