AI Network X Open Resource

Dear AI Network Community,

In this post, I’m going to explain what problem AI Network is trying to solve and which factors matter most in solving it.

There are many kinds of clouds in the world. You’re probably thinking of clouds for large-scale machine learning computation, or clouds that host servers for commercial services. But each of these environments is slightly different, so even the largest companies can’t afford to provide all of them. Change just a few versions in your hardware, your OS, your hardware libraries, your language, or your framework, and your code may no longer run.

Part of the solution is our Backend.AI Cloud Service. It currently provides 13 languages and 4 popular ML development environments, and it manages versioning internally, so developers can focus only on coding. But about 30 million pieces of code have been released publicly, and the environments in which that code runs are so diverse that no single company can provide everything. AI Network is trying to address this issue through decentralization. Anyone can become a cloud operator, and anyone can use the AIN coin to access a variety of cloud environments broader than anything that has existed before. We describe this as “the movement from open source to open resource.”

Open Source

The open source community is now important not only to individual developers but also to businesses: 78 percent of businesses are tied to open source, and that figure is growing by 14 percent each year. Open source is becoming very professional in terms of code quality, and the process of participating in it is becoming more organized.

There are three main values that developers get from open source.

Reproducibility, which allows others to reproduce the results that I produced.

Reusability, which makes something built once available for use again, saving time and money.

Transparency, which makes it possible for anyone to see how the code works and contribute to it.

Machine learning code is among the most important parts of open source, yet it is often hard to reproduce because setting up the environment is difficult.

Open Resource

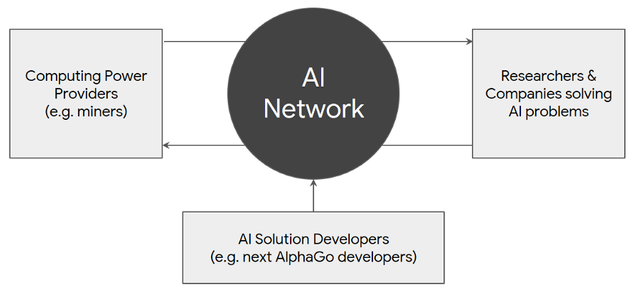

AIN is probably the first to use the term Open Resource. It combines “open source” with the prefix “Re,” and we continue to use it because we think it intuitively represents what we’re trying to do. We call something an Open Resource when the code is published together with its execution environment, so anyone can try it right away. This requires an environment in which the source code runs, but unlike source code, an execution environment is hard to keep available. It is expensive and time-consuming to manage, especially if the people who produce the code also have to maintain an environment to run it. Just as open source can be maintained by someone other than the original creator, open resources should be managed by the stakeholders who need them. So instead of the traditional approach, in which one developer handles everything from idea to resource preparation to execution, Open Resource divides the work among three roles:

• Author

Authors are the people who write and share open source code, as they do today. Typically, an author describes how to set up the development environment in a Readme.md file, but in an Open Resource environment the author can simply upload a cloud setup file.

• Resource Provider

Resource Providers are people who earn money by managing and delivering computing resources. They are mainly interested in managing resources, so they may not know what ideas are running in their environment.

• Code Executor

Code Executors are the ones who want to discover an author’s code and put it into action. Based on the cloud setup file created by the Author, the Resource Provider provides resources and the Code Executor executes the code on them.
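To make the three roles a little more concrete, here is a minimal sketch of how a cloud setup file might be matched against resource providers. This is only an illustration: the `CloudSetup` fields, the `ResourceProvider` class, and the provider names are all hypothetical and do not describe the actual AI Network or Backend.AI format.

```python
from dataclasses import dataclass


@dataclass
class CloudSetup:
    """Hypothetical stand-in for the cloud setup file an Author uploads."""
    language: str
    framework: str
    min_gpus: int = 0


@dataclass
class ResourceProvider:
    """Hypothetical description of what one provider's environment offers."""
    name: str
    languages: set
    frameworks: set
    gpus: int = 0

    def can_host(self, setup: CloudSetup) -> bool:
        # A provider qualifies only if it meets every requirement in the setup.
        return (setup.language in self.languages
                and setup.framework in self.frameworks
                and self.gpus >= setup.min_gpus)


def find_providers(setup, providers):
    """Return the names of providers whose environment satisfies the setup."""
    return [p.name for p in providers if p.can_host(setup)]


# The Author publishes the requirements once, next to the code.
setup = CloudSetup(language="python3.6", framework="tensorflow", min_gpus=1)

# Resource Providers advertise what they can run.
providers = [
    ResourceProvider("provider-a", {"python3.6"}, {"tensorflow"}, gpus=2),
    ResourceProvider("provider-b", {"python3.6"}, {"pytorch"}, gpus=4),
    ResourceProvider("provider-c", {"python3.6"}, {"tensorflow"}, gpus=0),
]

# The Code Executor only needs the setup to find a place to run the code.
matches = find_providers(setup, providers)
print(matches)
```

The point of the sketch is that the Code Executor never has to read setup instructions by hand; the requirements travel with the code in a form that providers can check.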

The difference between a centralized cloud environment and a P2P cloud environment

In a trusted, centralized cloud, the results come back as expected unless something goes wrong. In a P2P network, however, you have to keep in mind that bad nodes may be present. A node might not execute the code as it received it, might return a falsified result, or might accept the task and disappear. And even if the results are correct, a node could reuse the data behind them without permission, or steal the results.

These problems come down to two issues: Verifiability, which means confirming that the results are correct, and Security, which means my data is not leaked during the computation. In this post, I will focus on Verifiable Computing.

Verifiable Computing

To solve the first problem, we need to look at verifiable computation techniques. It’s a problem that we face a lot in our daily lives. To check whether a very distracted child is doing his homework, you have to sit next to him and examine every line he writes (Execution Verification). If the child is more mature, you can check the homework the next day (Checkpoint Verification). If you’re a professor, you can assign a year’s worth of work and check it only once, at the end (Solution Verification). As you move from the first approach to the last, the cost of verification decreases.
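The difference in cost can be sketched with a simple count of how many times the verifier has to step in. This is a rough illustration only: the strategy names follow the three labels above, while the function name, the step counts, and the checkpoint interval are assumptions I made up for the example.

```python
import math


def verification_checks(total_steps, strategy, checkpoint_every=100):
    """Count how many checks a verifier performs for a job of total_steps steps.

    Assumes, for illustration, that every check costs the same.
    """
    if strategy == "execution":
        # Check every single step, like watching each line of homework.
        return total_steps
    if strategy == "checkpoint":
        # Check once per interval, like reviewing the homework the next day.
        return math.ceil(total_steps / checkpoint_every)
    if strategy == "solution":
        # Check only the final answer.
        return 1
    raise ValueError(f"unknown strategy: {strategy}")


costs = {s: verification_checks(1000, s)
         for s in ("execution", "checkpoint", "solution")}
print(costs)
```

In practice a single check of a final solution may cost more than a single per-step check, so the real trade-off depends on how cheap the evaluation is.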

Smart contract platforms such as Ethereum have every node run the same code and reach consensus to ensure that everyone is in the same state. However, as the example above shows, if there is a proper evaluation function, not all nodes need to execute the same code. Evaluating the results at the right point can greatly reduce the cost of computation. Of course, designing these evaluation functions is additional work, a carelessly designed one is itself a security risk, and the approach may not suit general code execution such as ordinary smart contracts. But machine learning has an objective function, which is perfect for this. We chose machine learning as our first target not because we wanted to look good, but because its results are easy to evaluate.
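Here is a minimal sketch of what checking a result with an objective function could look like. It assumes a toy linear model and a mean squared error objective; the function names, the validation data, and the tolerance are hypothetical and are not AI Network’s actual protocol.

```python
def mean_squared_error(weights, data):
    """Objective function: average squared error of the model y = w * x + b."""
    w, b = weights
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def verify_result(weights, claimed_loss, validation_data, tolerance=1e-6):
    """Accept a submission only if re-evaluating it reproduces the claimed loss."""
    actual = mean_squared_error(weights, validation_data)
    return abs(actual - claimed_loss) <= tolerance


# Validation points held by the verifier, all on the line y = 2x + 1.
validation_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]

# An honest node submits trained weights together with the loss it measured.
honest = verify_result((2.0, 1.0), claimed_loss=0.0,
                       validation_data=validation_data)

# A dishonest node submits arbitrary weights but claims a perfect loss.
dishonest = verify_result((0.5, 0.0), claimed_loss=0.0,
                          validation_data=validation_data)

print(honest, dishonest)
```

The verifier never repeats the training. It only evaluates the submitted model once, which is far cheaper than having every node run the same code.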

With the AI Network architecture, complex and time-consuming operations are performed outside the chain (off-chain), and only the communication needed to evaluate the results is recorded on the blockchain, which makes efficient use of the chain’s transaction capacity. Many people misunderstand this point, and it is what differentiates us from other projects. This is not a project that tries to solve AI problems on a blockchain by increasing blockchain performance. It is a project that uses the blockchain as a communication channel to achieve machine learning through the connection and collaboration of multiple components.
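The off-chain and on-chain split can be sketched as follows. This is an assumption-heavy illustration: the `ledger` list stands in for the blockchain, and the record fields (`task_id`, `result_hash`, `score`) are hypothetical names, not the actual AI Network transaction format.

```python
import hashlib
import json


def run_off_chain(task):
    """Stand-in for an expensive job; here it just sums squares."""
    return {"task_id": task["task_id"],
            "output": sum(x * x for x in task["inputs"])}


def make_chain_record(result, score):
    """Build the small record that would be written to the chain.

    Only a hash of the result and its evaluation score are recorded,
    never the full output.
    """
    payload = json.dumps(result, sort_keys=True).encode("utf-8")
    digest = hashlib.sha256(payload).hexdigest()
    return {"task_id": result["task_id"], "result_hash": digest, "score": score}


ledger = []  # hypothetical stand-in for the blockchain

task = {"task_id": "job-1", "inputs": [1, 2, 3]}
result = run_off_chain(task)                          # heavy work, off-chain
ledger.append(make_chain_record(result, score=1.0))   # small record, on-chain

print(ledger[0]["task_id"], ledger[0]["score"])
```

Anyone holding the full result can recompute the hash and compare it with the recorded one, so the chain stays small while the result remains checkable.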

Do you now have an idea of where AI Network is going and what its core technology, Verifiable Computing, is? Security issues can be divided into communication channel security and the encryption of data and models. There are a lot of technologies available here, and I’ll summarize them in more detail next time.

Thank you.

Telegram (English): https://t.me/ainetwork_en

Email: [email protected]

Homepage: http://ainetwork.ai/

Twitter: https://twitter.com/AINetwork1

Facebook: https://www.facebook.com/AINETWORK0/

Steemit: https://steemit.com/@ai-network

Brunch: https://brunch.co.kr/@ainetwork

GitHub: https://github.com/lablup/backend.ai