Killer robots!!! This deserves some serious attention


Fully autonomous weapons, also known as "killer robots," would be able to select and engage targets without human intervention. Precursors to these weapons, such as armed drones, are being developed and deployed by nations including China, Israel, South Korea, Russia, the United Kingdom and the United States. It is doubtful that fully autonomous weapons could meet international humanitarian law standards, including the rules of distinction, proportionality, and military necessity, and they would threaten the fundamental right to life and the principle of human dignity. Human Rights Watch calls for a preemptive ban on the development, production, and use of fully autonomous weapons.

Elon Musk, along with over 100 robotics and artificial intelligence (AI) leaders, has written an open letter to the UN Convention on Certain Conventional Weapons warning about "killer robots".

The letter cautions that autonomous weapons will create "the third revolution in warfare" and need to be closely monitored if not banned outright.

"As companies building the technologies in Artificial Intelligence and Robotics that may be repurposed to develop autonomous weapons, we feel especially responsible in raising this alarm," reads the letter.

"Lethal autonomous weapons threaten to become the third revolution in warfare.

"Once developed, they will permit armed conflict to be fought at a scale greater than ever, and at timescales faster than humans can comprehend.

"These can be weapons of terror, weapons that despots and terrorists use against innocent populations, and weapons hacked to behave in undesirable ways."

The letter has been signed by 116 leaders from around the world who are involved with the development of artificial intelligence.

Musk in particular has long been a critic of AI and the dangers it may pose.

"AI's a rare case where I think we need to be proactive in regulation instead of reactive," he said in July.

"Because I think by the time we are reactive in AI regulation, it’s too late."

A special group of experts from the UN was due to meet for the first time this week to discuss lethal autonomous weapons systems, but the meeting has been delayed until November.

The letter closes with the warning: "Once this Pandora’s box is opened, it will be hard to close."

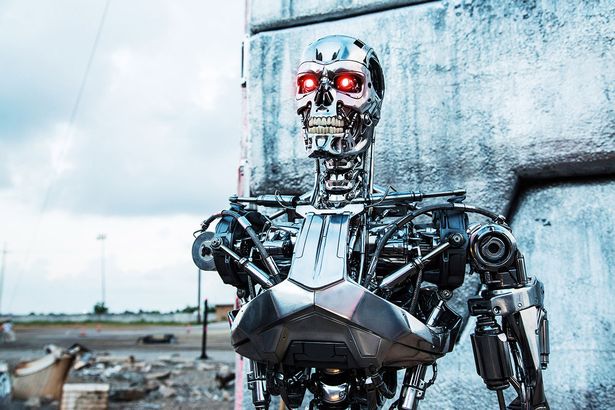

The 'Terminator' scenario

Experts are convinced that scientists are well aware of the threat of artificial intelligence and take it into account when developing systems.

"Everybody in AI is very familiar with this idea - they call it the 'Terminator scenario'," explains futurologist Dr. Ian Pearson, the author of You Tomorrow.

"It has a huge impact on AI researchers who are aware of the possibility of making [robots] smarter than people," he told Mirror Tech.

"But, the pattern for the next 10-15 years will be various companies looking towards consciousness.

"There is absolutely no reason to assume that a super-smart machine will be hostile to us.

"But just because it doesn’t have to be bad, that doesn’t mean it can’t be. You don’t have to be bad but sometimes you are.

"It is also the case that even if it means us no harm, we could just happen to be in the way when it wants to do something, and it might not care enough to protect us."
