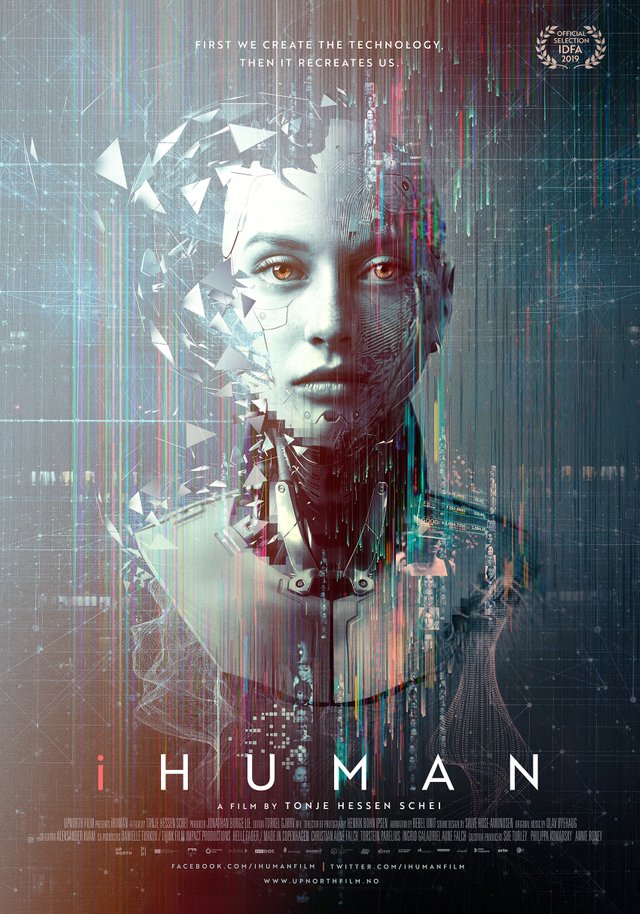

iHuman (personal reflections)

An essential and intense documentary about Artificial General Intelligence (AGI). It features interviews with renowned figures from the AI scene, who talk about the coming benefits of AI and, of course, its threats.

You will learn some nasty facts you probably did not know about Google:

- Google's motto "Don't Be Evil" was replaced by Alphabet's "Do the Right Thing" (be aware that "the right thing" is very subjective, though "evil" probably is too).

- Google collaborated (still collaborates?) with the US military on military AI; look up Project Maven. Because of this project, more than 3,000 Google employees signed a letter protesting it, and about a dozen resigned.

The unspoken question to Google's owners, why they help the US military improve its dangerous AI, is answered with: "Because we want to prevent dictatorial regimes from misusing AI technology."

It reminds me of Peter Thiel's answer to the question:

"Why do you offer the services of Palantir (the most advanced big data analytics company) to US intelligence agencies, the US Army, ...?"

"Because I am afraid of the Chinese totalitarian regime."

It is similar to the question:

"Why do you develop nuclear weapons?"

with the expected answer:

"Because everybody else does it too. We have to defend ourselves."

I am trying to imagine the perspective of Larry Page and Sergey Brin. Do they feel "so much responsibility" toward the world that they need to collaborate with the US military? (They could also say: if we do not do it, someone else will.)

- Google has participated in developing a censored search engine for China (Project Dragonfly), helping to build China's surveillance state.

Google's "Don't Be Evil" no longer applies. And of course, they do the right thing: for the Chinese government.

I like the idea that humans are not the kings of creation; we should be aware that we are not the pinnacle of evolution, just one step in a biological-technological evolution toward higher complexity. And soon, we should expect something that will transcend us as human beings: more developed, more complex, smarter, something that may perceive us the way we perceive animals. We love them (at least some of them), but to achieve bigger goals (e.g., building a city), we are always prepared to sacrifice them. We have full control over them, and we never ask them for permission. An AGI might take a similar approach in the future.

I admit we should probably expect a "post-privacy age," at least for most of the population. Despite desperate legislative attempts to protect our privacy (e.g., the GDPR), governments of all countries keep gathering more information about their citizens. There are millions of reasons why they "should do it," mainly "safety reasons" demanded by a frightened majority.

It is stupid to think that AI in the hands of governments will lead to more freedom. It will always lead to the opposite.

Finally, if you were disgusted by "The Social Dilemma" (for me, it was too anti-capitalist, biased propaganda), watch iHuman. It is more politically neutral, focuses more on human rights, and is more philosophical, questioning the future direction of human society.