Application for Season 23 Learning Challenge: Machine Learning Team

Hello, everyone. Before I proceed with my application, I would like to thank the Steemit team for this great initiative, which encourages us to participate and teach others in our areas of expertise. I would also like to thank the teachers from previous seasons for the hard work and effort they put into bringing us new courses every week and into reviewing and scoring the homework posts. They have done well.

I am @kinkyamiee from Nigeria, a certified data scientist and machine learning expert. In practice, this means I build predictive models that forecast outcomes and reveal patterns for easy and insightful decision-making. I joined the Steemit platform in December 2020, and ever since, it has been an amazing journey of learning and improving my knowledge. I did leave for some time because I misplaced my keys, but I later found them and came back to the platform. I am a statistician by profession, and joining Steemit has really helped me put my statistical skills to use. I have been able to study technical analysis and the chart patterns of blockchain networks. With my knowledge of probability, I have also been able to compare coins, analyze them, and predict which ones have great potential in the market.

My expertise lies in applying machine learning techniques that provide real-life solutions, including predictive modeling, classification, and clustering. I have worked with datasets from the healthcare industry, the agricultural field, and the business analytics industry, where I developed an end-to-end machine learning pipeline. I am very passionate about sharing my knowledge and helping others learn to leverage data science and machine learning to solve challenging problems.

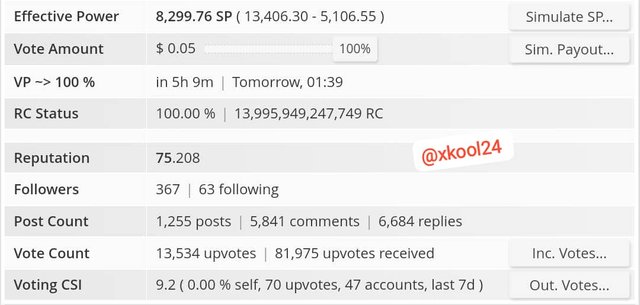

I am @xkool24, also from Nigeria. I joined the Steemit platform in November 2020, and it has been quite an interesting journey for me, given the different roles I have taken on along the way. Among them was helping build the country's community to its enviable position, where it now almost runs on autopilot, and doing the same in the Steem-Agro community.

Steemit is part of my daily life. I am always active, both as an administrator/moderator sponsoring contests and other activities and as a user taking part in the contests and activities of different communities. I may not be over-ambitious, but I am determined to work hard, to be inclusive in my approach, and to serve well.

I was a member of the Greeters team a few years ago, which was a very interesting experience for me. The role carried the responsibility of training, guiding, and reviewing newcomers to the platform, especially those from member countries. I am also an admin in the @steem4nigeria community, the largest community of Nigerians on the Steem blockchain, and an admin in #Steem-agro, a hub for all agricultural activities.

I am an Agricultural Economist with a decade of experience in the telecommunications business and retail sales. Before starting a career in the telecommunications industry, I participated in several agricultural projects, such as snail production, farm practice, yield growth, and appraisals of poultry farmers' access to micro-credit. As policymakers, we are always keen on agri-based developments so that we can formulate policies that promote agricultural sustainability, food security, and overall sector growth.

Our practical knowledge of econometrics and its convergence with machine learning puts us in an ideal position to teach students how to analyze datasets and make accurate predictions in the sector. This hands-on knowledge would primarily strengthen both our methodology and our application of the two disciplines.

Below are the tasks for the first three weeks.

Week 1 tasks:

- Environment Setup

• Install Python, TensorFlow/PyTorch, and Streamlit.

• Create a virtual environment for the project

- Data Import and Initial Exploration

• Download the dataset.

• Load the data into a Pandas DataFrame

• Perform initial data exploration (shape, data types, summary statistics)

- Data Cleaning and Validation

• Handle missing values (imputation or deletion)

• Identify and handle outliers

• Correct data types (e.g., ensure dates are in DateTime format)

• Remove duplicate entries (if any)

- Data Transformation

• Normalize numerical features

• Encode categorical variables (one-hot encoding or label encoding)

• Create derived features (e.g., age from date of birth)

- Statistical Analysis

• Perform descriptive statistics on all variables

• Conduct correlation analysis

• Perform hypothesis tests (e.g., t-tests, chi-square tests) to validate initial hypotheses.
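The first week's tasks could be sketched in pandas roughly as follows. This is a minimal illustration, not the course's reference solution. The post does not name the dataset, so the small DataFrame and its columns (`student_id`, `date_of_birth`, `gender`, `study_hours`, `score`) are hypothetical. Median imputation, IQR capping, min-max scaling, and Welch's t-test are each just one reasonable choice for their task.

```python
import numpy as np
import pandas as pd
from scipy import stats

# Hypothetical toy data standing in for the (unnamed) course dataset.
raw = pd.DataFrame({
    "student_id": [1, 2, 3, 4, 5, 5, 6, 7],
    "date_of_birth": ["2001-03-14", "2000-07-02", "1999-11-30", "2002-01-19",
                      "2001-05-05", "2001-05-05", "2000-09-09", "2001-12-25"],
    "gender": ["F", "M", "F", "M", "F", "F", "M", "F"],
    "study_hours": [12.0, np.nan, 9.5, 15.0, 11.0, 11.0, 60.0, 8.0],
    "score": [78, 65, 70, 88, 74, 74, 81, 69],
})

# Initial exploration: shape, data types, summary statistics.
print(raw.shape)
print(raw.dtypes)
print(raw.describe(include="all"))

# Remove exact duplicate rows (student 5 appears twice).
df = raw.drop_duplicates().copy()

# Correct data types: parse date strings into datetime64.
df["date_of_birth"] = pd.to_datetime(df["date_of_birth"])

# Handle missing values: impute with the median (deletion is the alternative).
df["study_hours"] = df["study_hours"].fillna(df["study_hours"].median())

# Identify outliers with the 1.5 * IQR rule and cap them at the fences.
q1, q3 = df["study_hours"].quantile([0.25, 0.75])
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
df["study_hours"] = df["study_hours"].clip(lower, upper)

# Derived feature: approximate age in whole years (days // 365) at a fixed date.
reference_date = pd.Timestamp("2024-01-01")
df["age"] = (reference_date - df["date_of_birth"]).dt.days // 365

# Normalize a numerical feature to the [0, 1] range (min-max scaling).
hours = df["study_hours"]
df["study_hours_norm"] = (hours - hours.min()) / (hours.max() - hours.min())

# Encode the categorical variable with one-hot columns (gender_F, gender_M).
encoded = pd.get_dummies(df, columns=["gender"], dtype=int)

# Correlation analysis between numerical columns.
print(df[["study_hours", "score", "age"]].corr())

# Hypothesis test: do mean scores differ by gender? (Welch's t-test)
t_stat, p_value = stats.ttest_ind(
    df.loc[df["gender"] == "F", "score"],
    df.loc[df["gender"] == "M", "score"],
    equal_var=False,
)
print(f"t = {t_stat:.3f}, p = {p_value:.3f}")
```

Capping (rather than dropping) the outlier keeps every student in the data while limiting the influence of the extreme `study_hours` value.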

Week 2 tasks:

- Univariate Analysis

• Create histograms and box plots for numerical variables

• Create bar charts for categorical variables

• Compute and visualize descriptive statistics

- Bivariate Analysis

• Create scatter plots for pairs of numerical variables

• Create box plots of numerical variables grouped by categorical variables

• Perform and visualize correlation analysis

• Conduct chi-square tests for categorical variables

- Multivariate Analysis

• Create pair plots

• Perform and visualize principal component analysis (PCA)

• Create parallel coordinates plots

- Advanced Visualization

• Create interactive visualizations using Plotly or Bokeh

• Develop a dashboard summarizing key insights using Streamlit

- Insight Generation

• Identify and document key patterns and relationships in the data

• Formulate new hypotheses based on exploratory analysis.
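The second week's analysis steps could look roughly like this. It is a sketch on synthetic, hypothetical data (columns `study_hours`, `attendance`, `program`, `passed`, `score`). It computes the numbers behind the planned charts rather than drawing them, so it runs without a display. The chi-square test uses SciPy's `chi2_contingency`, and the PCA uses scikit-learn.

```python
import numpy as np
import pandas as pd
from scipy import stats
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Hypothetical synthetic data; the post does not specify the dataset.
rng = np.random.default_rng(42)
n = 200
df = pd.DataFrame({
    "study_hours": rng.normal(12, 3, n),
    "attendance": rng.uniform(0.5, 1.0, n),
    "program": rng.choice(["science", "arts"], n),
    "passed": rng.choice(["yes", "no"], n),
})
df["score"] = 40 + 2.5 * df["study_hours"] + 20 * df["attendance"] + rng.normal(0, 5, n)

numeric = ["study_hours", "attendance", "score"]

# Univariate: descriptive statistics and the bin counts a histogram would show.
summary = df[numeric].describe()
counts, edges = np.histogram(df["score"], bins=10)

# Bivariate: Pearson correlation matrix for all numerical pairs.
corr = df[numeric].corr()

# Bivariate: per-group summaries (the numbers a grouped box plot displays).
by_program = df.groupby("program")["score"].describe()

# Chi-square test of independence between two categorical variables.
table = pd.crosstab(df["program"], df["passed"])
chi2, p, dof, expected = stats.chi2_contingency(table)

# Multivariate: PCA on standardized numerical features.
X = StandardScaler().fit_transform(df[numeric])
pca = PCA(n_components=2)
components = pca.fit_transform(X)

print(summary, corr, by_program, table, sep="\n\n")
print(f"chi2 = {chi2:.3f}, p = {p:.3f}, dof = {dof}")
print("explained variance ratio:", pca.explained_variance_ratio_)
```

In a notebook, the same DataFrame can be passed to plotting functions such as `seaborn.pairplot`, `pandas.plotting.parallel_coordinates`, or `plotly.express.scatter_matrix` to produce the visual versions of these checks.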

Week 3 tasks:

- Feature Creation

• Develop new features based on domain knowledge (e.g., study time per credit)

• Create interaction terms between existing features

• Implement polynomial features for numerical variables

• Develop time-based features (e.g., time since the last exam)

- Feature Transformation

• Apply log transformation to skewed numerical features

• Bin continuous variables into categorical ones where appropriate

• Standardize numerical features

- Feature Selection

• Implement filter methods (e.g., correlation analysis, chi-square test)

• Apply wrapper methods (e.g., recursive feature elimination)

• Use embedded methods (e.g., Lasso, Random Forest importance)

• Perform stability selection to identify robust feature subsets

- Dimensionality Reduction

• Apply Principal Component Analysis (PCA)

• Implement t-SNE for visualization of high-dimensional data.

For this criterion, we have already taken precautionary measures by making all tasks practical. We have deliberately minimized theoretical tasks in favor of hands-on practicals, with screenshots required as evidence for the answers.

Given this control measure, we expect less need for AI-content checks, but we will still watch out for content farming.

The weekly topics listed above are subject to change based on participation and the feedback we receive. We have additional topics available to fill the six-week duration, as well as supplementary ones in case we need to make changes.

We are optimistic that we will be given this opportunity to make an impact on learning in this subject area.

Thanks.
