Temporary Public Node Outage (eu2.eosdac.io): Technical Story and Improvements

We recently had an issue with one of our public nodes and thought it would be a good opportunity to write up our technical response. At no time was block production compromised, as our primary and backup block-producing nodes were functioning as expected.

Public monitoring systems only see our public endpoints, so the red line in the chart below appeared because one of our public nodes, eu2, experienced an outage.

We have a load balancer in front of our public nodes, so some requests to eu.eosdac.io worked and some did not. Because the eu2 server itself was still up (even though nodeos was not responding correctly), the load balancer kept it in rotation. We'll be improving this with scripts that automatically remove a non-functioning node from the load balancer.

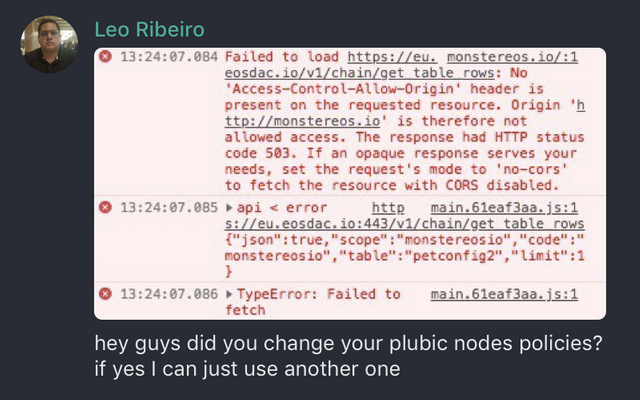
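The gap here was between a server-level check (the host is up) and an application-level check (nodeos actually answers). As a minimal sketch, assuming the balancer can run an external command per backend and treats exit status 0 as healthy, a check could query nodeos's `/v1/chain/get_info` endpoint and require both an HTTP 200 and a body that contains `head_block_num`. The function names, the 5-second timeout, and the choice of `get_info` as the probe are illustrative assumptions, not a description of our actual balancer configuration.

```bash
#!/usr/bin/env bash
# Sketch of an application-level health check for a nodeos backend.
# Assumption: the load balancer runs this per backend and treats exit
# status 0 as healthy. Function names and timeout are hypothetical.

# response_ok CODE BODY: healthy only if nodeos returned HTTP 200 and a
# get_info body that actually contains chain state.
response_ok() {
  local code=$1 body=$2
  [ "$code" = "200" ] || return 1
  case "$body" in
    *'"head_block_num"'*) return 0 ;;
    *) return 1 ;;
  esac
}

# check_nodeos BASE_URL: fetch get_info and classify the reply.
# curl appends the status code on its own line; 000 means no response.
check_nodeos() {
  local out code body
  out=$(curl -s --max-time 5 -w '\n%{http_code}' "$1/v1/chain/get_info")
  code=${out##*$'\n'}
  body=${out%$'\n'*}
  response_ok "$code" "$body"
}
```

For example, `check_nodeos http://127.0.0.1:8888` (nodeos's default local HTTP address) would exit non-zero if nodeos times out, returns an error status, or returns a body without chain state, even while the host itself is still reachable.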

We were alerted to the issue when Leo from MonsterEOS messaged us in our Telegram channel:

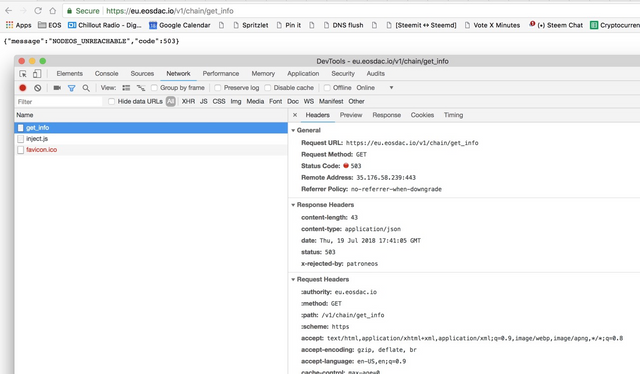

They've been using our public endpoints to power their game. We were able to quickly reproduce the problem ourselves:

NODEOS_UNREACHABLE showed up on about half of the requests because eu1 was working while eu2 was not. The chain data on eu2 had been corrupted by a bad alloc error (something that can occasionally happen with blockchain systems, and which we monitor for closely).

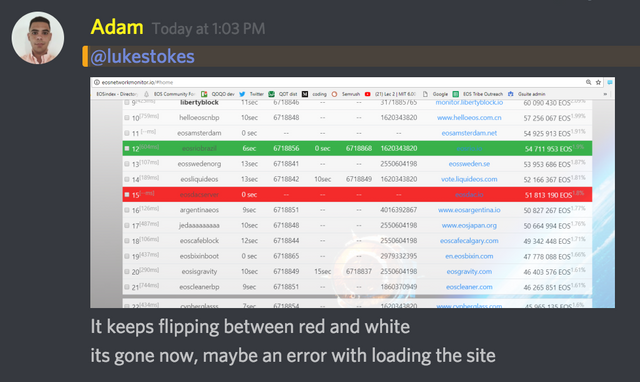
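Reproducing this kind of split failure mostly comes down to sending repeated requests to the balanced hostname and counting the failures. A minimal sketch, assuming `curl` is available; `probe_codes` and `tally_codes` are hypothetical helper names, and any status other than 200 (including curl's `000` for no response) counts as a failure:

```bash
#!/usr/bin/env bash
# tally_codes: read one HTTP status per line on stdin and report how many
# requests failed. Anything other than 200 counts as a failure.
tally_codes() {
  local total=0 failed=0 code
  while IFS= read -r code; do
    total=$((total + 1))
    [ "$code" = "200" ] || failed=$((failed + 1))
  done
  echo "$failed/$total failed"
}

# probe_codes BASE_URL N: hit get_info N times and print each status code.
probe_codes() {
  local url=$1 n=$2 i
  for ((i = 0; i < n; i++)); do
    curl -s -o /dev/null --max-time 5 -w '%{http_code}\n' "$url/v1/chain/get_info"
  done
}
```

Running `probe_codes http://eu.eosdac.io 20 | tally_codes` (the `http://` scheme is an assumption) during an outage like this one would be expected to report roughly half of the requests failing, provided the balancer spreads requests evenly across one healthy and one broken backend.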

We also got a message from Adam in our Discord:

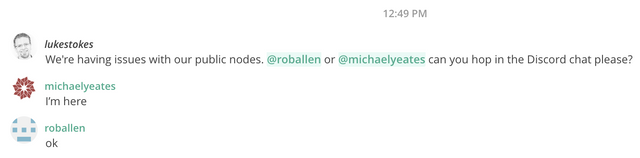

Although Michael Yates is currently in Korea for a conference, Rob Allen is in the UK, and Luke Stokes is in the US, we quickly got together in our Discord live chat to assess the situation.

While Rob and Michael worked on removing eu2 from the load balancer, Luke tried several replay strategies before deleting the block and state data and starting a resync with the correct genesis file:

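```bash
# With nodeos stopped, wipe the corrupted block log and chain state
rm -rf data-dir/blocks/* data-dir/state/*
# Restart from the genesis file so eu2 resyncs the chain from its peers
./start.sh --genesis-json genesis.json
```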

As of this post, we're resyncing eu2, and it is currently out of the load balancer; we'll add it back as soon as it finishes syncing. The load balancer in front of our public nodes lets us quickly take servers in and out of rotation as we upgrade or update our servers and software. Just yesterday we upgraded to mainnet-1.1.0; had that release caused a problem or required a replay, we could have handled one server at a time without disrupting anyone using our public node endpoint.
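Deciding when eu2 has "finished syncing" can be automated by comparing its head block with a healthy reference node such as eu1. A sketch, assuming both nodes expose `/v1/chain/get_info`; the helper names, the hostnames in the comments, and the 120-block threshold (about 60 seconds at EOS's 0.5-second block time) are illustrative assumptions rather than values from our setup. It uses `sed` instead of `jq` only to stay dependency-free.

```bash
#!/usr/bin/env bash
# head_block_num: print head_block_num from a get_info JSON reply on stdin.
head_block_num() {
  sed -n 's/.*"head_block_num": *\([0-9][0-9]*\).*/\1/p'
}

# is_synced REF_HEAD LOCAL_HEAD MAX_LAG: succeed when the local node is
# within MAX_LAG blocks of the reference node.
is_synced() {
  local ref_head=$1 local_head=$2 max_lag=$3
  # Treat a missing reading (e.g. a failed curl) as not synced.
  [ -n "$ref_head" ] && [ -n "$local_head" ] || return 1
  [ $((ref_head - local_head)) -le "$max_lag" ]
}

# Example wiring (hostnames are hypothetical):
#   ref=$(curl -s http://eu1.internal:8888/v1/chain/get_info | head_block_num)
#   cur=$(curl -s http://eu2.internal:8888/v1/chain/get_info | head_block_num)
#   is_synced "$ref" "$cur" 120 && echo "eu2 can rejoin the balancer"
```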

What's unusual about this event, and the only reason anyone noticed it at all (we would normally have resolved it immediately), is that eu2 had a misconfigured monitoring script.

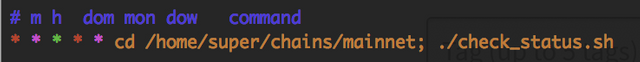

Luke has custom monitoring scripts that check the status every minute and send text messages when needed. Unfortunately, on this one server the file was misnamed checkstatus.sh instead of check_status.sh, so no alert came. Michael also has external monitoring configured, but since it was 2 a.m. in Korea, the alerts he received about the outage were initially lost in the shuffle (though he responded immediately when messaged on Keybase).
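A misnamed script fails silently: whatever invokes check_status.sh every minute finds nothing to run, so the absence of alerts looks exactly like a healthy node. One cheap safeguard, sketched here as an assumption rather than a description of Luke's actual scripts, is a separate check (run at deploy time or from another host) that every expected monitoring script exists and is executable. The function name and paths are hypothetical.

```bash
#!/usr/bin/env bash
# missing_monitors PATH...: report every expected monitoring script that is
# absent or not executable; return non-zero if any are missing.
missing_monitors() {
  local path status=0
  for path in "$@"; do
    if [ ! -x "$path" ]; then
      echo "missing or not executable: $path"
      status=1
    fi
  done
  return "$status"
}

# Example (paths are hypothetical). A crontab entry such as
#   * * * * * /home/eos/check_status.sh
# runs the check every minute; verifying the same path catches a file that
# was saved as checkstatus.sh instead:
#   missing_monitors /home/eos/check_status.sh || echo "eu2 monitoring is broken"
```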

Things We Improved

- Fixed the misconfigured monitoring script for eu2

- Prioritized updating our load balancer to automatically remove nodes that are not giving a correct response code

- Updated our internal policies and procedures document to cover adjusting the load balancer and recovering from a corrupted chain
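Automatic removal works best with a little hysteresis, so that a single slow response doesn't pull a node out and a single good one doesn't put a half-synced node back in. A minimal sketch of that decision logic, assuming a probe that reports `ok` or `fail` each round; the function name and both thresholds of 3 consecutive results are hypothetical. Some balancers provide this natively (HAProxy's `rise` and `fall` server options, for example).

```bash
#!/usr/bin/env bash
# Hypothetical thresholds: consecutive results needed to change state.
FAIL_THRESHOLD=3
PASS_THRESHOLD=3

# update_pool_state IN_POOL STREAK RESULT
#   IN_POOL: 1 if the node is currently in the balancer, 0 otherwise
#   STREAK:  consecutive results that disagreed with the current state
#   RESULT:  "ok" or "fail" from the latest probe
# Prints "IN_POOL STREAK" for the next round.
update_pool_state() {
  local in_pool=$1 streak=$2 result=$3
  if [ "$in_pool" -eq 1 ]; then
    if [ "$result" = fail ]; then
      streak=$((streak + 1))
      # Enough consecutive failures: take the node out of rotation.
      if [ "$streak" -ge "$FAIL_THRESHOLD" ]; then in_pool=0; streak=0; fi
    else
      streak=0
    fi
  else
    if [ "$result" = ok ]; then
      streak=$((streak + 1))
      # Enough consecutive successes: put the node back in rotation.
      if [ "$streak" -ge "$PASS_THRESHOLD" ]; then in_pool=1; streak=0; fi
    else
      streak=0
    fi
  fi
  echo "$in_pool $streak"
}
```

A polling loop would call it once per probe, e.g. `state=$(update_pool_state $state $result)` starting from `state="1 0"`, and add or remove the backend whenever the first field changes.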

We hope you've enjoyed this behind-the-scenes look at how our geographically diverse block production team works together seamlessly and keeps improving. For more behind-the-scenes content, you might enjoy this look at the First eosDAC Worker Proposal Multi-Signature Payment. You can also join our 5-tech-and-development channel on Discord for real-time updates on our DAC Toolkit, EOSDAC Token Explorer, and the smart contracts that run the DAC, including custodian elections. We are currently on track to have working betas of these tools in Q3, as noted on the roadmap on our site. If that changes, we'll keep the community updated on Slack, Telegram, and Discord.

We greatly appreciate the support of those who have invested in eosDAC and understand the value DACs will bring to the world. Organizations around the world regularly contact us on the launch team wanting to consult with eosDAC about how they can use the tools we're building to create their own DACs. With a solid Constitution, revenue from EOS Block Production, active worker proposals, and more, we're very excited about the progress being made. We hope you will join us and participate in adding value to the DAC.

If you have any questions at all, please feel free to raise them with the community. We are a community-owned block producer and DAC enabler. The value we want is the value we create.

Please vote for eosdacserver

Join our newsletter to stay informed and follow us on your favorite social media platform:

Steemit | Discord | Telegram | Facebook | Twitter | Google-plus | Github | Instagram | Linkedin | Medium | Reddit | YouTube

Looks like the problem was found, analyzed, corrected, and published all within 3 hours! Nice job @eosdac

Nice and quick response to fix the issue! Congrats! Also, I appreciate the shout out to MonstersEOS! :D Actually we need to thank the players, they were desperate because they could not feed their monsters! hehehe