Yet another demux example

Demux-js

Hey, in this post I would like to share one more example of using the demux-js library.

Unfortunately, I am using version 3.0.0 of the library, while version 4.0.0 is already available. Maybe someone will have time to fork the example and port it to the latest version of the library, or I'll find some time to do it myself.

I managed to find only two examples of using the demux library, on GitHub or anywhere else on the Internet. Most likely I am just not as good at searching as I think. Anyway, I don't think my repository will be superfluous.

Example

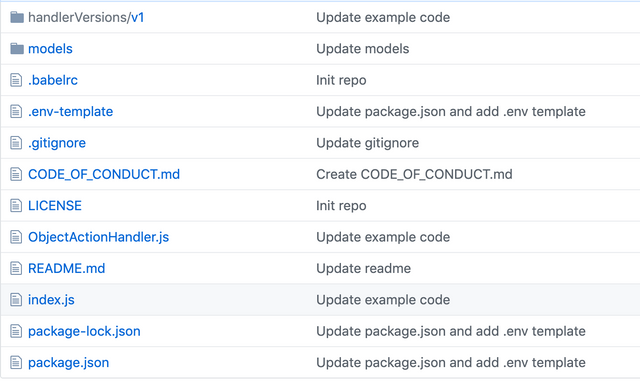

Here is the link to the repo: https://github.com/4ban/EOS-demux-js-example.

And here is the link to the API repo: https://github.com/4ban/EOS-demux-js-api-example

This example was created to monitor the EOS producer and record committed transactions (actions) in a Mongo database.

Originally, this service had a practical application, but the example has been modified to remove sensitive information. I also tried to simplify it so that it is easy to adapt to your needs. That is why there are two identical models, each with its own effects: they show how easily you can switch to different actions, models, or saving functions.

Description

This example tracks the `transfer` action for different contracts and stores the results in different collections of the Mongo database:

```
{contract account name 1}::transfer
{contract account name 2}::transfer
```

Instead of the `transfer` action, you can catch any other action, transform it into the desired shape, and save it in the database. Different actions can be saved in separate collections or in a single one.

Details

In this example, I catch all the transfer actions for the eosio.token contract.

The idea is simple. First, we initialize the state with the fields we want to track:

```javascript
let state = {
  from: '',
  to: '',
  quantity: '',
  currency: '',
  memo: '',
  trx_id: '',
  blockNumber: 0,
  blockHash: '',
  handlerVersionName: 'v1'
}
```

Then we catch the required actions with updaters. Demux goes through the blocks and, whenever it meets the required action on the required account, calls the corresponding function, in our case `mdl1Transactions` or `mdl2Transactions`:

```javascript
const updaters = [
  {
    actionType: `${account_1}::transfer`,
    apply: mdl1Transactions,
  },
  {
    actionType: `${account_2}::transfer`,
    apply: mdl2Transactions,
  },
]
```
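To make the matching step more concrete, here is a simplified, hypothetical model of how updaters are dispatched. It is not demux-js source code: the action shape (`account`, `name`, `data`), the helper names `actionTypeOf` and `applyUpdaters`, and the account `othertoken` are all assumptions for illustration. Each action's `account::name` type is compared with every updater's `actionType`, and matching updaters are called with the state, the action, and the block info.

```javascript
// Simplified, hypothetical model of updater dispatch (not demux-js source).
// Actions are assumed to carry account, name and data fields.

// Build the "account::name" string that the updaters are keyed by
function actionTypeOf(action) {
  return `${action.account}::${action.name}`
}

// Call every updater whose actionType matches an action in the block;
// returns how many updater calls were made
function applyUpdaters(updaters, state, block) {
  let applied = 0
  for (const action of block.actions) {
    const type = actionTypeOf(action)
    for (const updater of updaters) {
      if (updater.actionType === type) {
        updater.apply(state, action, block.blockInfo)
        applied += 1
      }
    }
  }
  return applied
}

// Usage with hypothetical accounts and handlers
const calls = []
const exampleUpdaters = [
  { actionType: 'eosio.token::transfer', apply: (state, action) => calls.push(['mdl1', action.data.memo]) },
  { actionType: 'othertoken::transfer', apply: (state, action) => calls.push(['mdl2', action.data.memo]) },
]
const exampleBlock = {
  blockInfo: { blockNumber: 100, blockHash: 'abc' },
  actions: [
    { account: 'eosio.token', name: 'transfer', data: { memo: 'first' } },
    { account: 'eosio.token', name: 'issue', data: { memo: 'ignored' } },
    { account: 'othertoken', name: 'transfer', data: { memo: 'second' } },
  ],
}
applyUpdaters(exampleUpdaters, {}, exampleBlock)
// calls is now [['mdl1', 'first'], ['mdl2', 'second']]
```

Only the two `transfer` actions reach a handler; the `issue` action has no matching updater and is skipped.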

Finally, we save the data to the database. Here is part of the `mdl1Transactions` function:

```javascript
// Split the asset string (e.g. "1.0000 EOS") into amount and symbol
const { amount, symbol } = parseTokenString(payload.data.quantity)

// Copy the action data and block info into the state
state.currency = symbol
state.quantity = amount
state.from = payload.data.from
state.to = payload.data.to
state.memo = payload.data.memo
state.trx_id = payload.transactionId
state.blockNumber = blockInfo.blockNumber
state.blockHash = blockInfo.blockHash

try {
  // Build a document for the mdl1 collection from the collected fields
  const transaction = new Schema_1({
    from: state.from,
    to: state.to,
    quantity: state.quantity,
    currency: state.currency,
    memo: state.memo,
    trx_id: state.trx_id,
    blockNumber: state.blockNumber,
    blockHash: state.blockHash,
    handlerVersionName: state.handlerVersionName
  })
  // save() reports database errors through the callback
  // (callback style works in Mongoose versions before 7)
  transaction.save(function (err) {
    if (err) console.error('Cannot save into mdl1 collection:', err)
  })
} catch (err) {
  console.error(err)
}
```
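The post does not show `parseTokenString`, so the following is only a guess at what such a helper might do, assuming the usual EOS asset format `"<amount> <SYMBOL>"` (for example `"10.0000 EOS"`). It keeps the amount as a string, which matches the `quantity: String` field in the schema and avoids floating-point rounding of token amounts.

```javascript
// Hypothetical implementation: the original parseTokenString is not shown,
// so this assumes the usual EOS asset format "<amount> <SYMBOL>".
function parseTokenString(tokenString) {
  const parts = String(tokenString).trim().split(/\s+/)
  if (parts.length !== 2 || !/^-?\d+(\.\d+)?$/.test(parts[0])) {
    throw new Error(`Unexpected token string: ${tokenString}`)
  }
  // Keep the amount as a string to preserve the exact on-chain precision
  return { amount: parts[0], symbol: parts[1] }
}

const { amount, symbol } = parseTokenString('10.0000 EOS')
// amount === '10.0000', symbol === 'EOS'
```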

The Schema_1 model code is pretty simple too:

const mdl1Schema = new Schema({

from: String,

to: String,

quantity: String,

currency: String,

memo: String,

trx_id: { type: String, unique: true }, // uniqueness also create an index for this field

blockNumber: Number,

blockHash: String,

handlerVersionName: String

})

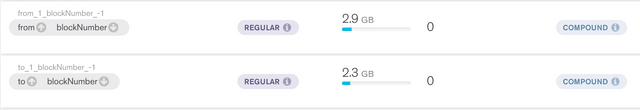

mdl1Schema.index({ from: 1, blockNumber: -1 })

mdl1Schema.index({ to: 1, blockNumber: -1 })

How it looks like in the MongoDB Compass.

Important notes on search performance

When I finished catching all the actions for the necessary accounts on Mainnet across 57 million blocks (there were only 3 accounts, and 2 of them had a small number of executed transactions), my database held more than 270 million documents. That's a lot!

I also needed to search by the `from` and `to` fields with descending sorting. After much experimentation, I found that two compound indexes, `{ from: 1, blockNumber: -1 }` and `{ to: 1, blockNumber: -1 }`, give the required search performance.
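Why do these indexes help? A compound index keeps its keys ordered by the first field and then by the second, so a query like `Schema_1.find({ from: account }).sort({ blockNumber: -1 })` can read one contiguous, already sorted run of entries instead of sorting the matching documents afterwards. The in-memory sketch below only illustrates that idea with a sorted array and a binary search; it is not how MongoDB works internally, and all names in it are hypothetical.

```javascript
// In-memory illustration of a compound index { from: 1, blockNumber: -1 }.
// Not MongoDB internals: just sorted keys plus a binary search.

// Compare two index keys: from ascending, then blockNumber descending
function compareKeys(a, b) {
  if (a.from < b.from) return -1
  if (a.from > b.from) return 1
  return b.blockNumber - a.blockNumber
}

// Index entries point back to the document position
function buildIndex(docs) {
  return docs.map((doc, pos) => ({ from: doc.from, blockNumber: doc.blockNumber, pos }))
    .sort(compareKeys)
}

// Position of the first entry whose from >= account (lower bound)
function lowerBound(index, account) {
  let lo = 0
  let hi = index.length
  while (lo < hi) {
    const mid = (lo + hi) >> 1
    if (index[mid].from < account) lo = mid + 1
    else hi = mid
  }
  return lo
}

// Equivalent of find({ from: account }).sort({ blockNumber: -1 }).limit(n):
// matching entries are contiguous and already sorted, so we just read them
function findBySender(docs, index, account, n) {
  const result = []
  for (let i = lowerBound(index, account); i < index.length && result.length < n; i++) {
    if (index[i].from !== account) break
    result.push(docs[index[i].pos])
  }
  return result
}

const docs = [
  { from: 'alice', blockNumber: 10 },
  { from: 'bob', blockNumber: 12 },
  { from: 'alice', blockNumber: 30 },
  { from: 'carol', blockNumber: 5 },
  { from: 'alice', blockNumber: 20 },
]
const index = buildIndex(docs)
findBySender(docs, index, 'alice', 2).map(d => d.blockNumber) // [30, 20]
```

The `{ to: 1, blockNumber: -1 }` index serves queries on `to` in the same way.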

In the simple API service I wrote (I will post an example of it here soon), I also used pagination, i.e., the `skip` and `limit` parameters in database queries, so that query time does not exceed 100 ms.
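Page-based pagination maps to `skip` and `limit` with a little arithmetic: page `p` of size `n` skips `(p - 1) * n` documents. The helper below is a hypothetical sketch of that mapping for an API handler; `toSkipLimit`, `DEFAULT_PAGE_SIZE`, and `MAX_PAGE_SIZE` are assumed names and values, not taken from the repository. It parses query-string values and caps the page size so a single request cannot ask for an unbounded number of documents.

```javascript
// Hypothetical pagination helper; the defaults are assumptions
const DEFAULT_PAGE_SIZE = 20
const MAX_PAGE_SIZE = 100

function toSkipLimit(page, pageSize) {
  // Query-string values arrive as strings; invalid input falls back to defaults
  const p = Number.parseInt(page, 10)
  const size = Number.parseInt(pageSize, 10)
  const safePage = p > 0 ? p : 1
  const safeSize = size > 0 ? Math.min(size, MAX_PAGE_SIZE) : DEFAULT_PAGE_SIZE
  return { skip: (safePage - 1) * safeSize, limit: safeSize }
}

// With Mongoose this would be used roughly as:
//   Schema_1.find({ from: account }).sort({ blockNumber: -1 }).skip(skip).limit(limit)
toSkipLimit(3, 50) // { skip: 100, limit: 50 }
```

Keep in mind that `skip` still has to step over the skipped entries, so very deep pages get slower; the compound indexes keep that walk cheap, but not free.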

After some brief tests with JMeter, I even managed to get an Apdex score of "excellent". But more on that in another article; I will add the link here once the next post is published: Demux API
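For reference, the Apdex score is computed from response times against a target threshold T: responses at or below T count as satisfied, responses above T but at or below a tolerating threshold F count as half, and anything slower counts as zero. The Apdex specification sets F = 4T; JMeter's HTML report lets you configure both thresholds. The sketch below computes the score and maps it to the commonly used rating bands; the sample response times and T = 100 ms are hypothetical.

```javascript
// Apdex = (satisfied + tolerating / 2) / total
//   satisfied:  response time <= t
//   tolerating: t < response time <= f   (the Apdex spec uses f = 4 * t)
//   frustrated: response time > f
function apdex(responseTimesMs, t, f = 4 * t) {
  if (responseTimesMs.length === 0) return NaN
  let satisfied = 0
  let tolerating = 0
  for (const ms of responseTimesMs) {
    if (ms <= t) satisfied++
    else if (ms <= f) tolerating++
  }
  return (satisfied + tolerating / 2) / responseTimesMs.length
}

// Commonly used Apdex rating bands
function apdexRating(score) {
  if (score >= 0.94) return 'Excellent'
  if (score >= 0.85) return 'Good'
  if (score >= 0.7) return 'Fair'
  if (score >= 0.5) return 'Poor'
  return 'Unacceptable'
}

// Hypothetical sample: 9 responses within 100 ms and 1 tolerable one
const score = apdex([40, 55, 60, 70, 80, 85, 90, 95, 99, 250], 100)
// score === 0.95, rated 'Excellent'
```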

STOP_AT

I also wrote a very simple auto-stop function for the service, since I needed to run multiple copies of the same service on different block ranges instead of waiting weeks for a single process to go through all 57 million blocks.

For comparison, a single process would take at least 56 days, given a processing speed of approximately one million blocks per day.

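```javascript
// STOP_AT is assumed to hold a number parsed from .env; 0 disables the check
function stopAt(blockNumber) {
  if (blockNumber >= STOP_AT) {
    console.log("\nSTOP AT: ", blockNumber)
    process.exit(0) // reaching the stop point is a normal exit, not an error
  }
}

...

// Method of the ActionHandler class, called after each block is handled
async updateIndexState(stateObj, block, isReplay, handlerVersionName) {
  console.log("Processing block: ", block.blockInfo.blockNumber)
  stateObj.handlerVersionName = handlerVersionName
  if (STOP_AT) {
    stopAt(block.blockInfo.blockNumber)
  }
}
```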

It looks lousy, but it does its job. I managed to run more than 10 copies of my service on one machine and process all 57 million blocks in less than 6 days.
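Choosing the block ranges for the copies is simple arithmetic: 57 million blocks split across 10 copies is 5.7 million blocks each, which at roughly one million blocks per day per copy comes to a little under 6 days, assuming every copy keeps that speed while sharing the machine. The hypothetical helper `splitBlockRange` below (not part of the repository) splits an inclusive block range into contiguous, non-overlapping chunks and returns a `START_AT`/`STOP_AT` pair for each copy.

```javascript
// Hypothetical helper: split the inclusive range [first, last] into `copies`
// contiguous, non-overlapping chunks, one per service instance
function splitBlockRange(first, last, copies) {
  const total = last - first + 1
  if (!Number.isInteger(copies) || copies < 1 || copies > total) {
    throw new Error('copies must be an integer between 1 and the number of blocks')
  }
  const base = Math.floor(total / copies)
  const extra = total % copies // the first `extra` chunks get one extra block
  const ranges = []
  let start = first
  for (let i = 0; i < copies; i++) {
    const size = base + (i < extra ? 1 : 0)
    // STOP_AT is treated here as the last block of the chunk
    ranges.push({ START_AT: start, STOP_AT: start + size - 1 })
    start += size
  }
  return ranges
}

// Start at block 1, because START_AT=0 would switch on "tail" mode
for (const range of splitBlockRange(1, 57000000, 10)) {
  console.log(`START_AT=${range.START_AT} STOP_AT=${range.STOP_AT}`)
}
```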

I do not understand why the authors of the library did not add such a feature.

Summary

I think I have described everything I wanted to. I hope this will be useful to someone and help you build your own example or apply it in a real project.

Installation

Clone the repo: `git clone https://github.com/4ban/EOS-demux-js-example.git`

Go to the folder: `cd EOS-demux-js-example`

Install dependencies: `npm install`

Fill in the `.env` file. Available parameters:

```
# Mainnet
ENDPOINT=https://eos.greymass.com
# {server_address} is the IP address or domain name of the server
# DBname is the Mongo database name
MONGODB_URL=mongodb://{server_address}:27017/DBname
```

or

```
# Testnet
ENDPOINT=http://api.kylin.alohaeos.com
# {server_address} is the IP address or domain name of the server
# DBnameTest is the Mongo database name
MONGODB_URL=mongodb://{server_address}:27017/DBnameTest
```

Start | Stop points

```
# Start point of tracking
# 0 is "tail" mode: the service starts at an offset from the most recent blocks
START_AT=100
# Stop point of tracking
# 0 means the service never stops
STOP_AT=200
```

To handle the microfork issue, enable processing of irreversible blocks only:

```
# irreversible blocks only
IRREVERSIBLE=true
```

Accounts with contracts to track

```
ACCOUNT_1=eosio.token
ACCOUNT_2={contract_account_name 2}
```

Credentials

```
# Database login and password
MONGODB_USER={username}
MONGODB_PASS={password}
```
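Environment variables always arrive as strings, which matters for the `if (STOP_AT)` check in the stop function: the string `"0"` is truthy in JavaScript, while the number `0` is not. Below is a hypothetical config loader `loadConfig` (not the repository's code) that parses the parameters above, assuming they are already in `process.env`, for example loaded with the dotenv package. Block numbers become integers with `0` as the fallback, and `IRREVERSIBLE` is compared explicitly against `"true"`.

```javascript
// Hypothetical config loader; the repository's own code may differ.
// Assumes the .env values are already in process.env.
function loadConfig(env = process.env) {
  // Parse a block number; missing, invalid or negative values become 0
  const toBlock = (value) => {
    const n = Number.parseInt(value, 10)
    return Number.isInteger(n) && n >= 0 ? n : 0
  }
  return {
    endpoint: env.ENDPOINT,
    mongoUrl: env.MONGODB_URL,
    startAt: toBlock(env.START_AT), // 0 = "tail" mode
    stopAt: toBlock(env.STOP_AT), // 0 = never stop
    // Compare explicitly: the string "false" would otherwise be truthy
    onlyIrreversible: env.IRREVERSIBLE === 'true',
    accounts: [env.ACCOUNT_1, env.ACCOUNT_2].filter(Boolean),
    mongoUser: env.MONGODB_USER,
    mongoPass: env.MONGODB_PASS,
  }
}

const config = loadConfig({ START_AT: '100', STOP_AT: '200', IRREVERSIBLE: 'true', ACCOUNT_1: 'eosio.token' })
// config.startAt === 100, config.stopAt === 200, config.onlyIrreversible === true
```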

Usage

To run the service: `npm start`


EOF

Enjoy. Git cool!