SLC S22 Week5 || Threads in Java: Life Cycle and Threading Basics

Assalamualaikum my fellows, I hope you are well by the grace of Allah. Today I am going to participate in the Steemit Learning Challenge Season 22 Week 5 by @kouba01 under the umbrella of the Steemit team. It is about Threads in Java: Life Cycle and Threading Basics. Let us start exploring this week's teaching course.

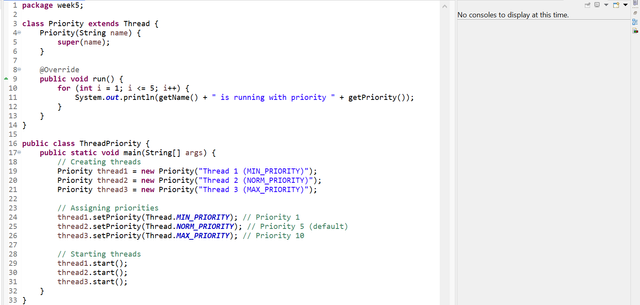

Write a program that demonstrates how thread priorities affect execution order.

Here is a Java program that demonstrates how thread priorities affect execution order.

Explanation of the code

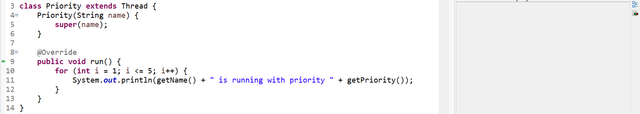

This Java program assigns different priorities to threads and observes the effect on their execution order. It illustrates how thread priorities can influence thread scheduling, but note that the behavior of thread priorities depends on the JVM and the operating system.

This class extends the Thread class, which lets it define custom behavior for each thread. The constructor takes a thread name as an argument and passes it to the superclass constructor to name the thread. The run method is overridden to define the actions the thread performs when executed: it prints the thread's name and priority in a loop for better visibility.

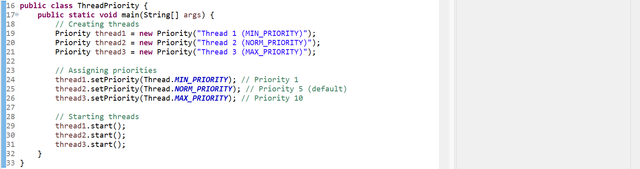

Three priority threads are created, each with a different name so they can be told apart.

- The program uses setPriority() to assign priorities to the threads. Thread priorities range from Thread.MIN_PRIORITY (1) to Thread.MAX_PRIORITY (10), with Thread.NORM_PRIORITY (5) being the default.
- It calls start() on each thread, which begins that thread's execution.
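Because the original code is only shown as screenshots, here is a minimal sketch of the same idea. The class names ThreadPriorityDemo and PriorityThread, the thread names and the five-iteration loop are assumptions chosen to match the description above; the printed order can still vary between runs and platforms.

```java
public class ThreadPriorityDemo {

    // Hypothetical name for the Thread subclass described above.
    static class PriorityThread extends Thread {
        PriorityThread(String name) {
            super(name); // pass the name to the Thread constructor
        }

        @Override
        public void run() {
            // Print the name and priority several times so the interleaving is visible.
            for (int i = 1; i <= 5; i++) {
                System.out.println(getName() + " (priority " + getPriority() + "), step " + i);
            }
        }
    }

    // Creates three threads and assigns them the minimum, normal and maximum priorities.
    static PriorityThread[] createThreads() {
        PriorityThread low = new PriorityThread("Thread 1");
        PriorityThread normal = new PriorityThread("Thread 2");
        PriorityThread high = new PriorityThread("Thread 3");
        low.setPriority(Thread.MIN_PRIORITY);     // 1
        normal.setPriority(Thread.NORM_PRIORITY); // 5
        high.setPriority(Thread.MAX_PRIORITY);    // 10
        return new PriorityThread[] {low, normal, high};
    }

    public static void main(String[] args) throws InterruptedException {
        PriorityThread[] threads = createThreads();
        for (PriorityThread t : threads) {
            t.start(); // priorities are only hints; the OS scheduler decides the real order
        }
        for (PriorityThread t : threads) {
            t.join(); // wait for all threads before main exits
        }
    }
}
```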

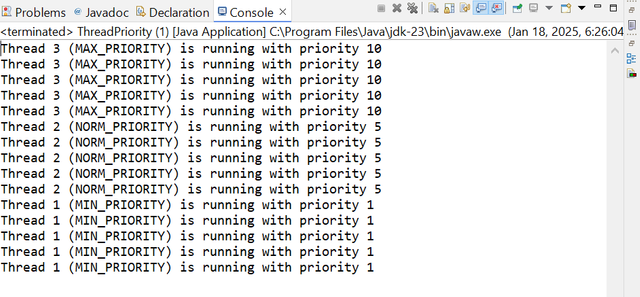

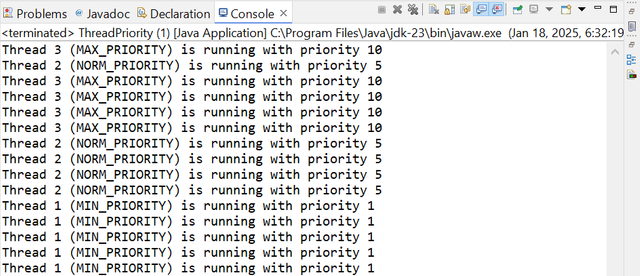

This is the output of the program. In this run, the execution follows the priority order defined in the program: the first 5 lines were printed by the thread with the maximum priority (10), the next 5 lines by the thread with the normal priority (5), and the last lines by the thread with the minimum priority (1). Because priority behavior is JVM and OS dependent, this ordering is not guaranteed and may differ between runs and platforms.

Observations

Here are some observations from the execution and output of the program:

- High-priority threads (like Thread 3) may be executed ahead of low-priority threads (like Thread 1), because the thread scheduler tends to favor higher-priority threads.
- Nevertheless, the JVM and the OS scheduler treat declared thread priorities only as hints. On systems with a large number of competing processes or threads, there is little or no determinism in the thread order.

Why This Happens:

- JVM thread scheduling depends on how the host operating system implements preemptive or time-sliced scheduling.

- On some platforms the difference between priorities is clearly noticeable, while on others it may be too small to matter.

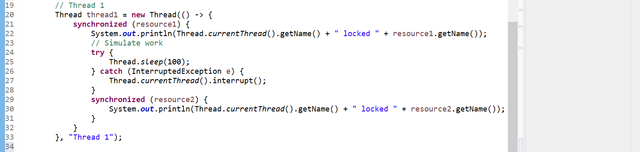

Develop a program that intentionally causes a deadlock using synchronized methods.

Here is a program that intentionally causes a deadlock using synchronized methods:

Explanation of the code

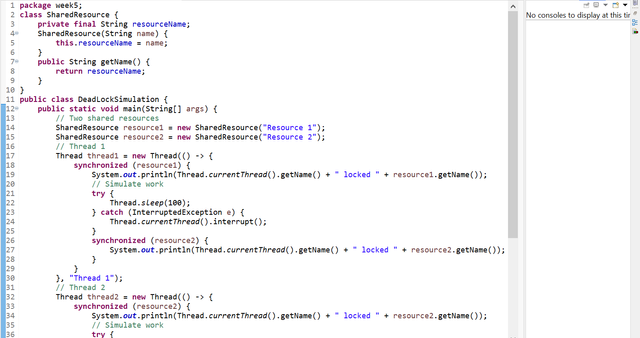

The SharedResource class represents a shared resource that multiple threads will attempt to access. It has a single field, resourceName, which identifies the resource, and a constructor that initializes this name. The getName method returns the resource's name for display purposes. This class is the basis for demonstrating resource locking in the simulation:

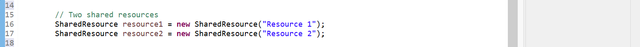

The main method creates two shared resources, resource1 and resource2, which will be locked by two threads in reverse order to simulate a deadlock. The resources are instances of the SharedResource class:

Thread 1 first locks resource1 using a synchronized block and then, after a simulated delay, tries to lock resource2. The sleep (Thread.sleep(100)) increases the chance of a deadlock by giving Thread 2 time to acquire its first lock. The synchronized block keeps resource1 locked exclusively by Thread 1 until control leaves that block.

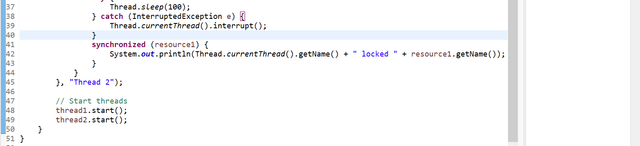

Thread 2 locks the resources in the reverse order of Thread 1: it locks resource2 first and then tries to lock resource1. This creates a deadlock through a circular wait, in which both threads wait for each other to release their locks.

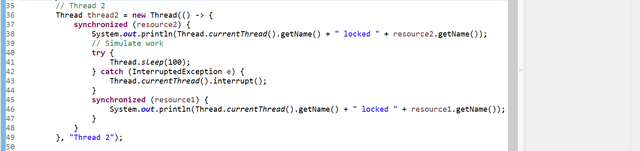

The two threads are started using the start method. They run concurrently and deadlock: each holds one resource and waits for the other to release its lock, so Thread 1 waits for Thread 2 and vice versa.

The deadlock occurs because Thread 1 holds resource1 and waits for resource2, while Thread 2 holds resource2 and waits for resource1. Neither thread can continue, because the lock it needs is held by the other thread. This circular dependency makes both threads wait indefinitely, and this situation is known as a deadlock.
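The deadlock described above can be reproduced with a short sketch. Apart from SharedResource, resourceName, getName, resource1, resource2 and the 100 ms sleep taken from the description, the names here (DeadlockDemo, lockInOrder, runAndDetect) are hypothetical. So that the example does not hang forever, the two threads are daemon threads, and the main thread uses the JDK's ThreadMXBean.findMonitorDeadlockedThreads() to confirm that a deadlock has actually formed.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;

public class DeadlockDemo {

    static class SharedResource {
        private final String resourceName;

        SharedResource(String resourceName) {
            this.resourceName = resourceName;
        }

        String getName() {
            return resourceName;
        }
    }

    // Creates a thread that locks 'first', pauses, then tries to lock 'second'.
    static Thread lockInOrder(String name, SharedResource first, SharedResource second) {
        Thread t = new Thread(() -> {
            synchronized (first) {
                System.out.println(Thread.currentThread().getName() + " locked " + first.getName());
                try {
                    Thread.sleep(100); // give the other thread time to take its first lock
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                System.out.println(Thread.currentThread().getName() + " waiting for " + second.getName());
                synchronized (second) { // blocks forever once the circular wait forms
                    System.out.println(Thread.currentThread().getName() + " locked " + second.getName());
                }
            }
        }, name);
        t.setDaemon(true); // daemon threads let the JVM exit even though they stay blocked
        return t;
    }

    // Starts both threads in reverse lock order and returns the number of
    // monitor-deadlocked threads the JVM reports (0 if none within about 5 s).
    static int runAndDetect() throws InterruptedException {
        SharedResource resource1 = new SharedResource("Resource 1");
        SharedResource resource2 = new SharedResource("Resource 2");
        Thread thread1 = lockInOrder("Thread 1", resource1, resource2);
        Thread thread2 = lockInOrder("Thread 2", resource2, resource1); // reverse order
        thread1.start();
        thread2.start();

        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        for (int i = 0; i < 50; i++) {
            Thread.sleep(100);
            long[] ids = mx.findMonitorDeadlockedThreads(); // null while no deadlock exists
            if (ids != null) {
                return ids.length;
            }
        }
        return 0;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Deadlocked threads detected: " + runAndDetect());
    }
}
```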

Strategies to Avoid Deadlock

Lock Ordering:

Always acquire locks in a consistent order across threads. For example, both threads should try to acquire resource1 first and then resource2.

Timeouts:

Use a timeout mechanism to prevent threads from waiting indefinitely. The tryLock method in ReentrantLock accepts a timeout.

Avoid Nested Locks:

Minimize the use of nested synchronized blocks, since every nested lock is another opportunity for a circular dependency.

Higher-Level Concurrency Tools:

Use tools such as ExecutorService and the other classes in java.util.concurrent to manage thread synchronization, which reduces the amount of manual lock management and the associated risks.
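Here is a sketch of the first two strategies, lock ordering and timeouts. It assumes plain objects as monitors for the ordering example and ReentrantLock instances for the timeout example; the class and method names (DeadlockAvoidance, runWithLockOrdering, tryBoth) are illustrative and not part of the original program.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

public class DeadlockAvoidance {

    // Strategy 1: lock ordering. Both threads take lockA before lockB,
    // so a circular wait cannot form. Returns true if both threads finished.
    static boolean runWithLockOrdering() throws InterruptedException {
        Object lockA = new Object();
        Object lockB = new Object();
        Runnable work = () -> {
            synchronized (lockA) {
                sleepQuietly(50); // same kind of delay that caused the deadlock before
                synchronized (lockB) {
                    System.out.println(Thread.currentThread().getName() + " holds both locks");
                }
            }
        };
        Thread t1 = new Thread(work, "Thread 1");
        Thread t2 = new Thread(work, "Thread 2");
        t1.start();
        t2.start();
        t1.join(2000);
        t2.join(2000);
        return !t1.isAlive() && !t2.isAlive();
    }

    // Strategy 2: timeouts. tryLock gives up after 500 ms instead of waiting forever.
    static boolean tryBoth(ReentrantLock first, ReentrantLock second) throws InterruptedException {
        if (!first.tryLock(500, TimeUnit.MILLISECONDS)) {
            return false; // could not get the first lock; nothing to release
        }
        try {
            if (!second.tryLock(500, TimeUnit.MILLISECONDS)) {
                return false; // back off; 'first' is released in the finally block
            }
            try {
                return true; // the critical section would go here
            } finally {
                second.unlock();
            }
        } finally {
            first.unlock();
        }
    }

    private static void sleepQuietly(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Lock ordering finished: " + runWithLockOrdering());
        ReentrantLock lock1 = new ReentrantLock();
        ReentrantLock lock2 = new ReentrantLock();
        System.out.println("tryLock acquired both locks: " + tryBoth(lock1, lock2));
    }
}
```

With consistent ordering, the second thread simply waits for lockA and then proceeds, so the program always terminates; with tryLock, a thread that cannot get both locks backs off instead of blocking forever.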

By following these strategies, we can avoid deadlocks and ensure that the program's threads run smoothly instead of waiting forever.

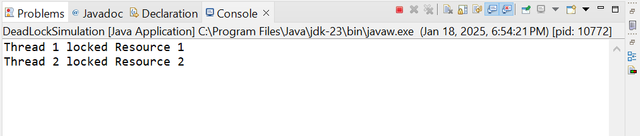

Create a program where multiple threads count from 1 to 100 concurrently.

Here is a program where multiple threads count from 1 to 100 concurrently and in this program the numbers will be printed in the correct order.

Explanation of the code

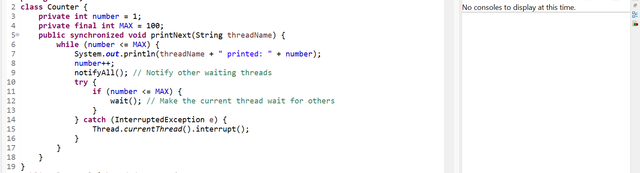

The Counter class controls the counting process and maintains synchronization between the threads. It has two variables: number, initialized to 1, tracks the current number to be printed, and MAX, set to 100, is the upper limit of the counter. The printNext method contains the core counting logic. It is synchronized so that only one thread can execute it at a time.

Inside the printNext method, the while loop keeps counting until number exceeds MAX. Each thread prints the current number along with its name, increments the number, and then calls notifyAll to wake up the other threads waiting on the Counter object. If counting is not yet complete, the thread then calls wait, which pauses it and releases the lock so that other threads can take their turn.

The MultithreadedCounter Class

The MultithreadedCounter class initializes and manages multiple threads that share a single instance of the Counter class. It creates three threads, each of which executes the printNext method of the shared Counter object. This demonstrates how threads can work together while maintaining proper synchronization.

Each thread is created with a lambda expression that calls the printNext method with the thread's name as its argument. The threads are then started concurrently by invoking the start method. Even though the threads run in parallel, the synchronized keyword on the printNext method ensures that only one thread can print a number at a time.
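The Counter and MultithreadedCounter classes described above can be sketched as follows. The exact loop structure inside printNext is an assumption reconstructed from the description, the run helper is hypothetical, and the printed list is an addition used only to check the order. Note that this wait/notifyAll scheme needs at least two threads: a single thread would print 1 and then wait forever, because nobody else calls notifyAll.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class Counter {
    private static final int MAX = 100; // upper limit of the counter
    private int number = 1;             // next number to print

    // Hypothetical addition: records printed values so the order can be verified.
    final List<Integer> printed = Collections.synchronizedList(new ArrayList<>());

    // Only one thread at a time can run this method on a given Counter.
    public synchronized void printNext(String threadName) {
        while (number <= MAX) {
            System.out.println(threadName + ": " + number);
            printed.add(number);
            number++;
            notifyAll(); // wake the threads waiting on this Counter
            if (number <= MAX) {
                try {
                    wait(); // release the lock so another thread can print
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
            }
        }
    }
}

public class MultithreadedCounter {

    // Starts threadCount threads (at least 2) sharing one Counter and waits for them.
    static List<Integer> run(int threadCount) throws InterruptedException {
        Counter counter = new Counter();
        List<Thread> threads = new ArrayList<>();
        for (int i = 1; i <= threadCount; i++) {
            String name = "Thread-" + i;
            threads.add(new Thread(() -> counter.printNext(name), name));
        }
        for (Thread t : threads) {
            t.start();
        }
        for (Thread t : threads) {
            t.join();
        }
        return counter.printed;
    }

    public static void main(String[] args) throws InterruptedException {
        run(3);
    }
}
```

Whichever thread prints 100 skips the final wait and exits; its last notifyAll wakes the remaining threads, which see that number exceeds MAX and return, so all three threads terminate.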

Mechanisms Used to Preserve Correct Sequence

Synchronized Method:

The printNext method is declared synchronized, which ensures that only one thread can execute it at a time. This eliminates any possibility of a race condition when updating the counter (number), so it is incremented sequentially and correctly.

Wait-Notify Mechanism:

wait and notifyAll are used to coordinate the execution of the threads. When a thread finishes printing, it calls notifyAll to wake up the other threads waiting on the Counter object. The thread then calls wait, which releases the lock and allows another thread to proceed.

Shared Counter Object:

All threads share the same Counter instance, which is the shared resource. Because every access to it happens inside the synchronized method, updates to the counter are consistent and visible to all threads.

If we look at the output of the program, it is easy to see that all the threads take part and the numbers are printed in the correct order, regardless of which thread prints each one. The program printed the numbers from 1 to 100 in order using multiple threads. This shows how synchronization can maintain a sequential order in a multithreaded environment: the combination of a synchronized method and wait/notifyAll makes the threads cooperate to produce the desired output.

Write a program that uses a thread pool to process a list of tasks efficiently.

Here is a program that uses a thread pool to process a list of tasks efficiently. I will assign some simple tasks to the threads in the pool to observe how they work.

Explanation of the code

Here is a detailed explanation of the Java program that uses a thread pool to process a list of tasks efficiently. The explanation is divided into parts, each describing the relevant code in detail.

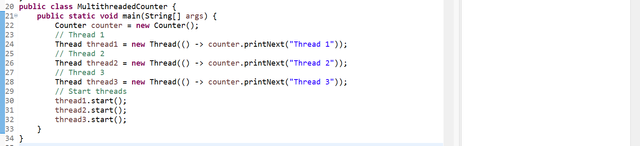

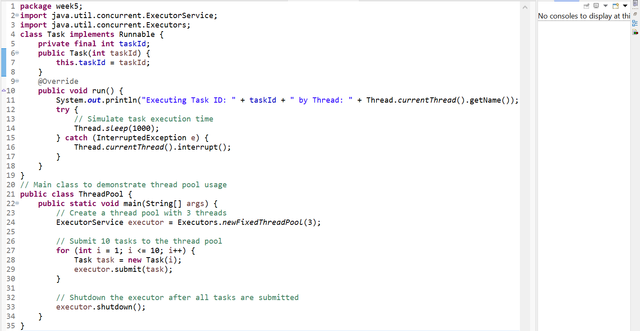

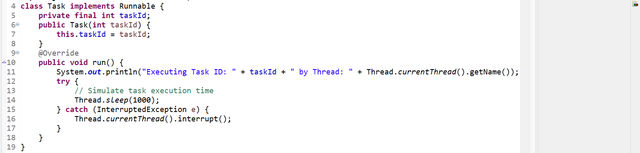

The Task class implements the Runnable interface, which lets it define work that threads can execute concurrently; any thread in the thread pool can run a Runnable. Every task has a unique identifier, taskId, which is passed in when the Task object is created.

The taskId passed at instantiation is used in the run method to identify the task in the output. The run method simulates task execution with Thread.sleep(1000), so each task spends one second on some time-consuming work. InterruptedException is caught so that the thread can respond safely to an interruption request, which is good practice for long-running threads.

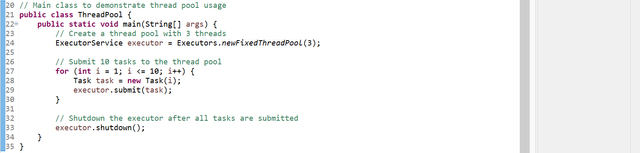

The ThreadPool class shows how to use a thread pool for the concurrent execution of multiple tasks. It uses the ExecutorService interface to manage the thread pool: ExecutorService lets the program submit tasks and control the pool's lifecycle.

In this section of the program these functions are carried out:

- Creation of a thread pool: Executors.newFixedThreadPool(3) creates a fixed-size thread pool of three threads. Only three threads will be active at any given time, regardless of how many tasks are submitted.
- Task submission: The for loop creates 10 tasks, each with a unique taskId, and submits them to the executor using executor.submit(task). The submit method places the tasks in a queue, and the pool's threads execute them as they become free.
- Shutdown: Once all tasks are submitted, executor.shutdown() is called. This starts a graceful shutdown: new tasks are rejected, while tasks that were already submitted, including queued ones, still run to completion. Once all tasks are complete, the program terminates.
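A minimal sketch of the Task and ThreadPool classes described above. The taskId, the one-second Thread.sleep, the pool of three threads and the ten tasks follow the text; the PoolResult class, the processTasks method and the counters they report are hypothetical additions so the run can be checked. awaitTermination is used here to block until the queued tasks have finished after shutdown().

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class Task implements Runnable {
    private final int taskId;
    private final Set<String> workerNames; // hypothetical: records which pool threads ran tasks
    private final AtomicInteger completed; // hypothetical: counts finished tasks

    Task(int taskId, Set<String> workerNames, AtomicInteger completed) {
        this.taskId = taskId;
        this.workerNames = workerNames;
        this.completed = completed;
    }

    @Override
    public void run() {
        String worker = Thread.currentThread().getName(); // e.g. pool-1-thread-1
        workerNames.add(worker);
        System.out.println("Task " + taskId + " is being processed by " + worker);
        try {
            Thread.sleep(1000); // simulate one second of time-consuming work
            completed.incrementAndGet();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // restore the interrupt flag and stop early
        }
    }
}

class PoolResult {
    final int completedTasks;
    final int distinctThreads;

    PoolResult(int completedTasks, int distinctThreads) {
        this.completedTasks = completedTasks;
        this.distinctThreads = distinctThreads;
    }
}

public class ThreadPool {

    static PoolResult processTasks(int taskCount, int poolSize) throws InterruptedException {
        Set<String> workerNames = ConcurrentHashMap.newKeySet();
        AtomicInteger completed = new AtomicInteger();
        ExecutorService executor = Executors.newFixedThreadPool(poolSize);
        for (int taskId = 1; taskId <= taskCount; taskId++) {
            executor.submit(new Task(taskId, workerNames, completed)); // queued until a thread is free
        }
        executor.shutdown();                            // reject new tasks; queued ones still run
        executor.awaitTermination(1, TimeUnit.MINUTES); // block until all tasks have finished
        return new PoolResult(completed.get(), workerNames.size());
    }

    public static void main(String[] args) throws InterruptedException {
        PoolResult result = processTasks(10, 3);
        System.out.println(result.completedTasks + " tasks finished on "
                + result.distinctThreads + " pool threads");
    }
}
```

With 10 one-second tasks and 3 threads, the run takes roughly four seconds, and the same few pool-1-thread-N names appear again and again in the output.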

How Thread Pools Improve Performance

Using a thread pool has several advantages over manually creating and managing each individual thread. The following sections elaborate on how resource usage is optimized and performance enhanced in thread pools.

Overhead Reduction:

Creating and destroying a thread for every individual task involves significant overhead. Threads consume memory and CPU resources, and creating and destroying them is itself costly. A thread pool keeps this overhead low by creating a fixed number of threads up front and reusing them for different tasks, so thread creation and destruction do not bog down the system.

Good Resource Utilization:

The thread pool limits the number of active threads at any given time. In the example above, only three threads run concurrently. This prevents too many threads from flooding the system and makes effective use of the available CPU resources. The pool size is usually chosen to match the system's capabilities and the requirements of the workload.

Task Queuing:

If there are more tasks than available threads, the extra tasks are queued until a thread becomes free. This queuing ensures that no task is lost, and with a fixed thread pool the queued tasks are started in the order they were submitted. Because the pool does not create a new thread for each incoming task, it also avoids the resource contention, and the resulting performance degradation, that an unbounded number of threads would cause.

In this output you can see that the tasks are assigned to the threads in the pool, and each thread prints its respective task ID. The thread names (e.g., pool-1-thread-1) indicate which thread is executing each task. Because the thread pool has only three threads, they are reused to execute multiple tasks, demonstrating how thread reuse optimizes performance.

Thread pools are a very useful tool for handling many concurrent tasks in Java applications. They reduce the overhead of creating and destroying threads by reusing a fixed number of threads, which improves resource utilization and performance.

Thread pools are useful where a lot of short-lived tasks need to be executed in parallel. They ensure efficient management of resources, controlled execution of tasks, and responsiveness of the system. This approach is especially useful in applications like server systems or background processing tasks, where task execution needs to be managed efficiently without overwhelming the system.

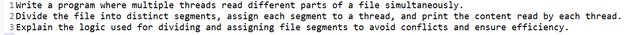

Write a program where multiple threads read different parts of a file simultaneously.

Here is the Java program where multiple threads read different parts of a file simultaneously.

Explanation of the code

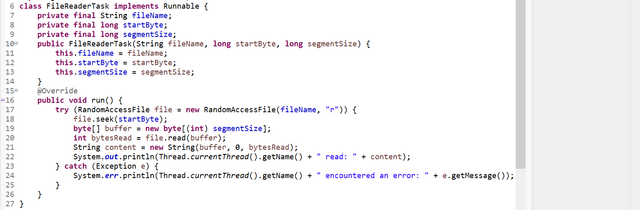

Below is the detailed description of the program using multiple threads to read different parts of a file in parallel. Each section has relevant code snippets.

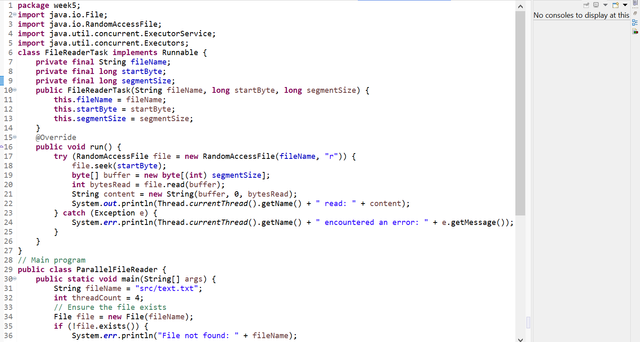

The FileReaderTask class encapsulates the work each thread carries out. It implements the Runnable interface, so each instance can be executed by a thread. The file and the specific segment of it to read are identified by the fields fileName, startByte and segmentSize.

- The fileName, startByte and segmentSize parameters define the file to be read, the position to start reading from, and the number of bytes to read, respectively.
- The run method uses a RandomAccessFile object to move to the designated segment (file.seek(startByte)) and reads the specified number of bytes into a buffer.
- Each thread prints the contents of its segment to the console, showing its portion of the file. Note how try-with-resources closes the file once it has been read.

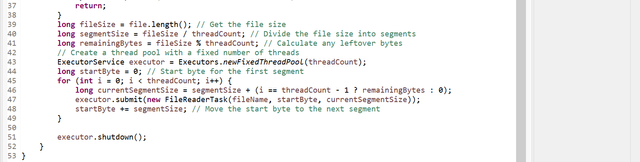

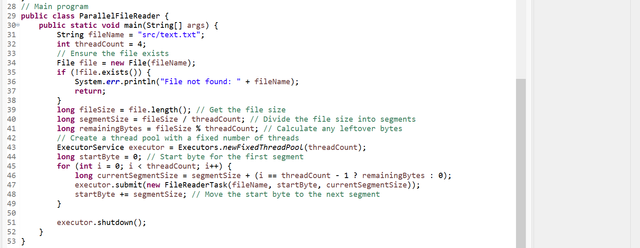

The ParallelFileReader class controls how the file is divided into segments and distributed among the threads.

- File Size and Segments: The program calculates the size of each segment as fileSize / threadCount. Any leftover bytes (fileSize % threadCount) are added to the last segment to ensure complete file coverage.
- Thread Pool: A fixed-size thread pool created with Executors.newFixedThreadPool avoids the overhead of creating and destroying threads and makes good use of the available resources.
- Task Assignment: A for loop iterates over the segments and creates a FileReaderTask for each one, passing its start byte and size. These tasks are submitted to the thread pool for execution.
- Dynamic Adjustments: The last thread reads any remaining bytes because remainingBytes is added to its segment size, ensuring the whole file is read.
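Here is a minimal sketch of FileReaderTask and ParallelFileReader following the description above. The readInParallel method and the shared output array are hypothetical additions that let the segments be reassembled and checked; the sketch also uses readFully, which keeps reading until the whole segment is filled (a plain read may return fewer bytes), and assumes the file fits in a Java array.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class FileReaderTask implements Runnable {
    private final String fileName;
    private final long startByte;
    private final int segmentSize;
    private final byte[] output; // hypothetical shared buffer; each task writes only its own range

    FileReaderTask(String fileName, long startByte, int segmentSize, byte[] output) {
        this.fileName = fileName;
        this.startByte = startByte;
        this.segmentSize = segmentSize;
        this.output = output;
    }

    @Override
    public void run() {
        // Each task opens its own RandomAccessFile, so file pointers are never shared.
        try (RandomAccessFile file = new RandomAccessFile(fileName, "r")) {
            file.seek(startByte);
            byte[] buffer = new byte[segmentSize];
            file.readFully(buffer); // loops until the whole segment has been read
            System.arraycopy(buffer, 0, output, (int) startByte, segmentSize);
            System.out.println(Thread.currentThread().getName() + " read: "
                    + new String(buffer, StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}

public class ParallelFileReader {

    static byte[] readInParallel(String fileName, int threadCount) throws Exception {
        long fileSize;
        try (RandomAccessFile f = new RandomAccessFile(fileName, "r")) {
            fileSize = f.length();
        }
        int segmentSize = (int) (fileSize / threadCount);
        int remainingBytes = (int) (fileSize % threadCount);
        byte[] output = new byte[(int) fileSize];

        ExecutorService executor = Executors.newFixedThreadPool(threadCount);
        List<Future<?>> futures = new ArrayList<>();
        for (int i = 0; i < threadCount; i++) {
            long start = (long) i * segmentSize;
            // The last segment also takes the leftover bytes.
            int size = (i == threadCount - 1) ? segmentSize + remainingBytes : segmentSize;
            futures.add(executor.submit(new FileReaderTask(fileName, start, size, output)));
        }
        try {
            for (Future<?> f : futures) {
                f.get(); // waits for the task and rethrows any failure
            }
        } finally {
            executor.shutdown();
        }
        return output;
    }

    public static void main(String[] args) throws Exception {
        // Sample text modeled on the test file described in this post.
        String text = "This is an example of parallel file reading using threads. "
                + "Each thread processes a part of the file.";
        Path file = Files.createTempFile("parallel-read", ".txt");
        Files.write(file, text.getBytes(StandardCharsets.UTF_8));
        byte[] result = readInParallel(file.toString(), 3);
        System.out.println("Reassembled: " + new String(result, StandardCharsets.UTF_8));
        Files.delete(file);
    }
}
```

Calling Future.get() on every task, rather than only shutting down the pool, both surfaces read errors and guarantees that the bytes written by the worker threads are visible to the main thread.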

How the Program Ensures Efficiency and Avoids Conflicts

The program design ensures conflict-free and efficient file reading by careful management of file access and thread execution:

- Random Access: Each thread accesses its segment independently through the RandomAccessFile class, which prevents interference between threads.
- Thread Pool: By limiting the number of active threads, the program saves system resources and avoids the overhead of thread management.
- Sequential Segmentation: The file is divided into non-overlapping segments, so each byte is read exactly once.
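To see why the segments never overlap and always cover the file, here is the segment arithmetic on its own for a hypothetical 103-byte file split across 4 threads: 103 / 4 = 25 with a remainder of 3, so the first three segments are 25 bytes long and the last one is 25 + 3 = 28 bytes. The class SegmentPlanner is illustrative only.

```java
public class SegmentPlanner {

    // Returns one {start, size} pair per thread; the last segment absorbs the remainder.
    static long[][] plan(long fileSize, int threadCount) {
        long segmentSize = fileSize / threadCount;
        long remainingBytes = fileSize % threadCount;
        long[][] segments = new long[threadCount][2];
        for (int i = 0; i < threadCount; i++) {
            segments[i][0] = i * segmentSize; // each start is the previous start + segmentSize
            segments[i][1] = (i == threadCount - 1) ? segmentSize + remainingBytes : segmentSize;
        }
        return segments;
    }

    public static void main(String[] args) {
        for (long[] s : plan(103, 4)) {
            System.out.println("start=" + s[0] + " size=" + s[1]);
        }
        // prints:
        // start=0 size=25
        // start=25 size=25
        // start=50 size=25
        // start=75 size=28
    }
}
```

Each segment starts exactly where the previous one ends, and the last one ends at 75 + 28 = 103, the file size.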

This is the test file I created to check whether the program reads the different segments of the file correctly.

This is an example of parallel file reading using threads. Each thread processes a part of the file. Each thread reads a different portion of the file as determined by its assigned segment.

The parallel file reader program demonstrates the power of multithreading for processing large files efficiently. By dividing the file into manageable segments and assigning them to threads in a thread pool, the program makes good use of system resources. RandomAccessFile gives each thread independent access to its segment, eliminating conflicts. This approach is particularly beneficial for processing large files in scenarios that require fast, parallel data access.

Develop a program simulating a bank system where multiple threads perform deposits and withdrawals on shared bank accounts.

Here is the Java program simulating a bank system where multiple threads perform deposits and withdrawals on shared bank accounts.

Explanation of the code

This program implements a thread-safe bank transaction system that simulates deposits and withdrawals performed by multiple threads on shared bank accounts.

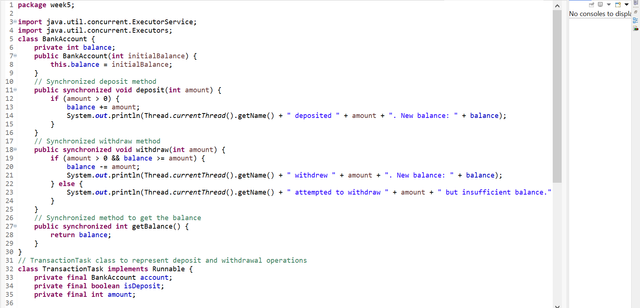

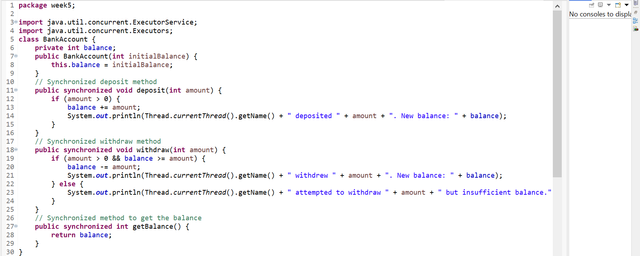

The BankAccount class represents a shared bank account. It synchronizes the deposit and withdrawal operations to make them thread-safe.

The BankAccount class uses the synchronized keyword to make deposit and withdrawal methods thread-safe. Synchronization ensures that only one thread can execute a critical section (modifying the balance) at a time, preventing race conditions. The getBalance method is also synchronized to avoid inconsistent reads during concurrent updates.

The TransactionTask class implements the Runnable interface to define deposit and withdrawal tasks that operate on a shared BankAccount.

Each TransactionTask specifies whether it is a deposit or withdrawal and the amount involved. This task is passed to threads for execution. The shared BankAccount ensures synchronized access to maintain data integrity.

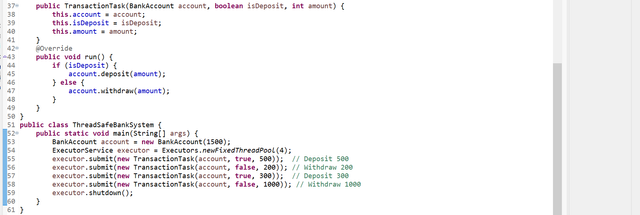

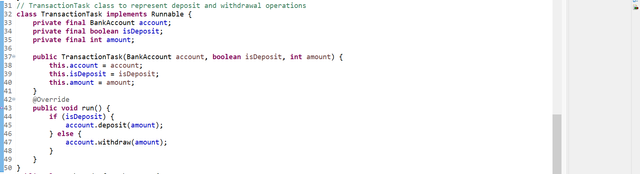

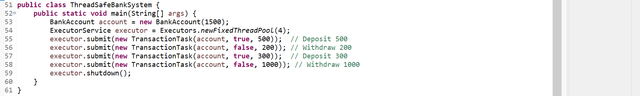

The main program creates multiple threads to perform transactions on the shared BankAccount object.

The program uses an ExecutorService with a fixed thread pool to manage concurrent threads. Four tasks, two deposits and two withdrawals, are submitted for execution. The shared BankAccount ensures that all threads interact safely with the account, preventing data corruption.
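A minimal sketch of the BankAccount, TransactionTask and main program described above. The initial balance of 1000, the amounts, the long balance type, the isDeposit flag, the balance check inside withdraw, and the class name BankSystem are assumptions, since the original code is only shown in screenshots.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class BankAccount {
    private long balance; // assumption: whole currency units, to avoid floating-point errors

    BankAccount(long initialBalance) {
        this.balance = initialBalance;
    }

    public synchronized void deposit(long amount) {
        balance += amount;
        System.out.println(Thread.currentThread().getName() + " deposited " + amount + ", balance = " + balance);
    }

    // The check and the update run under the same lock, so the balance cannot go negative.
    public synchronized boolean withdraw(long amount) {
        if (amount > balance) {
            System.out.println(Thread.currentThread().getName() + " insufficient funds for " + amount);
            return false;
        }
        balance -= amount;
        System.out.println(Thread.currentThread().getName() + " withdrew " + amount + ", balance = " + balance);
        return true;
    }

    public synchronized long getBalance() {
        return balance;
    }
}

class TransactionTask implements Runnable {
    private final BankAccount account;
    private final boolean isDeposit; // true = deposit, false = withdrawal
    private final long amount;

    TransactionTask(BankAccount account, boolean isDeposit, long amount) {
        this.account = account;
        this.isDeposit = isDeposit;
        this.amount = amount;
    }

    @Override
    public void run() {
        if (isDeposit) {
            account.deposit(amount);
        } else {
            account.withdraw(amount);
        }
    }
}

public class BankSystem {

    // Runs two deposits and two withdrawals on one shared account; returns the final balance.
    static long runTransactions() throws InterruptedException {
        BankAccount account = new BankAccount(1000);
        ExecutorService executor = Executors.newFixedThreadPool(2);
        executor.submit(new TransactionTask(account, true, 500));
        executor.submit(new TransactionTask(account, false, 300));
        executor.submit(new TransactionTask(account, true, 200));
        executor.submit(new TransactionTask(account, false, 400));
        executor.shutdown();
        executor.awaitTermination(10, TimeUnit.SECONDS);
        return account.getBalance();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Final balance: " + runTransactions());
    }
}
```

With these amounts both withdrawals succeed in any order (even if they run first, 300 + 400 is less than 1000), so the final balance is always 1000 + 500 + 200 - 300 - 400 = 1000, while the order of the printed lines varies between runs.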

Techniques for Maintaining Thread Safety

Synchronization:

The synchronized keyword ensures that multiple threads cannot execute critical sections of code (such as changing the balance) at the same time. This eliminates race conditions and guarantees consistency.

Shared Resource Management:

The BankAccount object is shared between threads, but its state is protected by synchronized methods. This approach keeps the implementation simple while ensuring data integrity.

Thread Pool:

Threads are managed in a controlled way using an ExecutorService. A fixed number of threads avoids the overhead of repeatedly creating and destroying threads.

The output shows the sequence of transactions performed by the threads. The synchronized methods make each deposit and withdrawal atomic, so a withdrawal's balance check and update cannot be interleaved with another thread's update, which prevents inconsistencies such as withdrawing more money than is available.

This program demonstrates a thread-safe bank transaction system in which multiple threads operate on shared resources without conflicts. Such synchronization ensures atomicity, consistency, and correctness in a multi-threaded environment. This kind of design is suitable for real-world applications requiring concurrent access to shared resources.

I invite @wilmer1988, @josepha, @wuddi to join this learning challenge.
