Code Quality Review and Control as part of Smartym Pro Software Development Life Cycle

Introduction

The main indicator of a complete software product is the presence of all necessary features. It is the working functionality that end-users, investors, testers and managers see and use.

In the early stages of development, features are usually added quickly: the system is stable and a small QA team is enough to test it. The cost of new functionality, in money and time, is also easy to predict.

However, developers don’t always have enough time to design a proper project architecture and deal with technical debt. Furthermore, customers trying to reduce the project cost often hire developers with questionable skills.

Once the project reaches a certain degree of complexity and is deployed to the production environment (where it already has active users), it becomes harder to add a new feature and to predict its cost. The system loses stability and demands more effort from developers and testers.

Eventually, the system can become so unstable and difficult to maintain that it’s easier to create a new version from scratch, which in turn requires a huge one-time expense. It’s also likely that the new version of the system will end up like the old one.

The reason is the following: as the number of lines of code and the project complexity increase, developers need more time to introduce changes and add new code. This is how the technical debt of the project grows.

However, if the development team follows certain rules when coding and creates an accurate system architecture, adding new features will cause little or no increase in technical debt.

To manage technical debt, software engineers must establish a development process with explicit quality requirements for the source code, and compliance with them must be checked systematically (by senior employees and by automatic systems).

To write high-quality code, one needs a strong team of developers with a good technical culture. However, it is sometimes difficult to judge the real level of the team at the initial development stages. To determine the team's skills, one can assess the code against certain metrics and use automatic tools that analyze code and generate high-level reports, which helps catch problems in the system in time.

The good news is that automatic static code analysis tools give us all the information we need for this tracking. We work on projects for various platforms in various programming languages, so we need tooling that can analyze all of our projects. We use the following:

SonarQube – for code quality control.

Jenkins – for automating builds, unit test runs, and code quality control.

Unit testing – for automatically checking the app logic and verifying that the app works according to its requirements.

We use the following metrics for assessing code quality.

Code coverage

In an ideal project, all code is covered by low-level tests written with knowledge of its internals (white-box testing). To reach broad test coverage, we use the TDD and BDD development methodologies, which imply creating tests before the working code is written.

As mentioned above, 100% test coverage is desired for an ideal project. In reality, however, we have to make concessions to develop the product faster, so it is good enough to have at least 80% of the code covered with tests.
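The 80% target can be expressed as a simple check. The sketch below is only an illustration: the function names and the line-based definition of coverage are assumptions, and a real project would take these numbers from its coverage tool rather than compute them by hand.

```python
def coverage_percent(covered_lines: int, total_lines: int) -> float:
    """Return line coverage as a percentage of executable lines."""
    if total_lines == 0:
        return 100.0  # nothing to cover, so nothing is uncovered
    return 100.0 * covered_lines / total_lines


def meets_coverage_target(covered_lines: int, total_lines: int,
                          target: float = 80.0) -> bool:
    """True when coverage reaches the target (80% by default, as in the text)."""
    return coverage_percent(covered_lines, total_lines) >= target


# Hypothetical numbers: 820 of 1000 executable lines are hit by tests.
print(meets_coverage_target(820, 1000))
```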

Vulnerabilities

There are no perfect apps, and there are no computer systems with perfect security. For a project to succeed, it’s necessary to track every potential security problem: from an absent-minded developer who forgot to validate and sanitize input data to publicly disclosed vulnerabilities in the platforms, languages, and libraries the project uses. Security is a cornerstone of projects at any level of complexity, and we must be sure that user data will be protected.
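Unchecked input is the kind of problem static analysis rules typically flag. As a minimal sketch (the `users` table and the `find_user` helper are hypothetical, chosen only for illustration), the example below uses Python's standard `sqlite3` module with a bound parameter, so user input is treated as data and never as part of the SQL text:

```python
import sqlite3


def find_user(conn: sqlite3.Connection, name: str) -> list:
    # The "?" placeholder makes the driver bind `name` as a value,
    # so quotes inside it cannot change the structure of the query.
    cursor = conn.execute("SELECT id, name FROM users WHERE name = ?", (name,))
    return cursor.fetchall()


# Hypothetical in-memory database used only for this demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

print(find_user(conn, "alice"))
# A classic injection attempt is just an unknown user name here.
print(find_user(conn, "alice' OR '1'='1"))
```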

Code style and cyclomatic complexity

These characteristics influence how readable the code is and how consistently all developers on the team understand it. This, in turn, affects the project's velocity as well as the speed and cost of introducing changes. Cyclomatic complexity also affects how predictably the code behaves, since every additional branch is one more execution path to understand and test.
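To make the metric concrete, here is a simplified sketch that estimates cyclomatic complexity for Python source using the standard `ast` module. It counts one path plus one for each branching construct. This is an approximation for illustration only; the exact rules SonarQube applies may differ, and the set of counted node types is an assumption.

```python
import ast

# Constructs that add an independent path through the code.
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source: str) -> int:
    """Approximate cyclomatic complexity: 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" holds three values and adds two decision points.
            complexity += len(node.values) - 1
    return complexity


SAMPLE = '''
def classify(n):
    if n < 0:
        return "negative"
    for d in (2, 3):
        if n % d == 0 and n > d:
            return "composite"
    return "other"
'''

# One base path, two "if", one "for", one "and".
print(cyclomatic_complexity(SAMPLE))  # prints 5
```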

Code coupling (complexity and size of dependency tree)

That’s quite simple: the tighter the coupling between components, the more of the system is affected by each change. Tightly coupled code can make the project unstable and multiply the cost of each change.
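How far a change propagates through the dependency tree can be sketched with a small graph walk. The component names and the `affected_by_change` helper below are hypothetical; the idea is only to show that a component with many direct and indirect dependents is expensive to modify.

```python
from collections import deque


def affected_by_change(dependencies: dict, changed: str) -> set:
    """Return every component that depends, directly or indirectly, on `changed`."""
    # Invert the edges: for each component, who depends on it?
    dependents = {}
    for component, deps in dependencies.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(component)

    affected, queue = set(), deque([changed])
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, ()):
            if dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)
    affected.discard(changed)  # only relevant when dependencies are circular
    return affected


# Hypothetical system: component -> components it depends on.
DEPENDENCIES = {
    "ui": {"orders", "auth"},
    "orders": {"db", "auth"},
    "auth": {"db"},
    "db": set(),
}

print(sorted(affected_by_change(DEPENDENCIES, "db")))
print(sorted(affected_by_change(DEPENDENCIES, "ui")))
```

In this made-up system a change to `db` touches everything above it, while a change to `ui` touches nothing else, which is the difference in cost the metric is meant to expose.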

The number of code lines

This metric helps locate the parts of the code that change most often and detect the most bloated code fragments, which must be carefully examined during refactoring.
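A size metric of this kind is easy to approximate. The following sketch assumes Python-style `#` comments and ignores blank lines; the `size_metrics` name and the returned keys are made up for this example, and real analyzers handle block comments and strings more carefully.

```python
def size_metrics(source: str) -> dict:
    """Count code lines and comment lines, skipping blank lines."""
    code_lines = comment_lines = 0
    for line in source.splitlines():
        stripped = line.strip()
        if not stripped:
            continue  # blank lines count as neither code nor comments
        if stripped.startswith("#"):
            comment_lines += 1
        else:
            code_lines += 1
    total = code_lines + comment_lines
    comment_percent = 100.0 * comment_lines / total if total else 0.0
    return {
        "code_lines": code_lines,
        "comment_lines": comment_lines,
        "comment_percent": comment_percent,
    }


EXAMPLE = "# configuration\nx = 1\n\ny = 2\n# done\n"
print(size_metrics(EXAMPLE))
```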

SonarQube

SonarQube consists of two parts:

- Server with a web interface, analysis reports and analysis results storage

- Scanner analyzing the project source code and sending the analysis results to the server

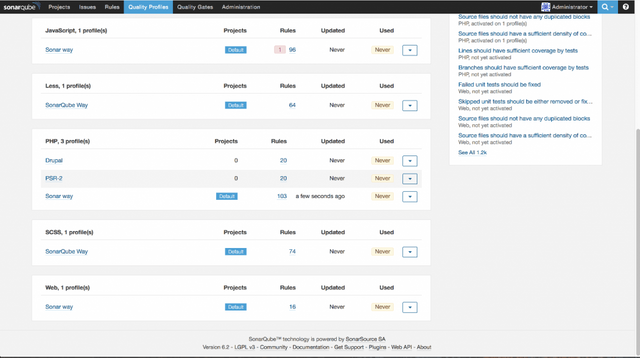

Quality Profiles

A Quality Profile is a set of rules and metrics used for automatic code analysis. Rules are grouped into profiles for specific programming languages.

A general list of profiles and platforms

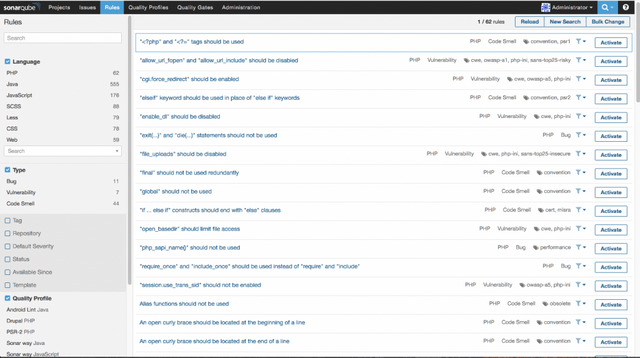

Managing rules for profiles

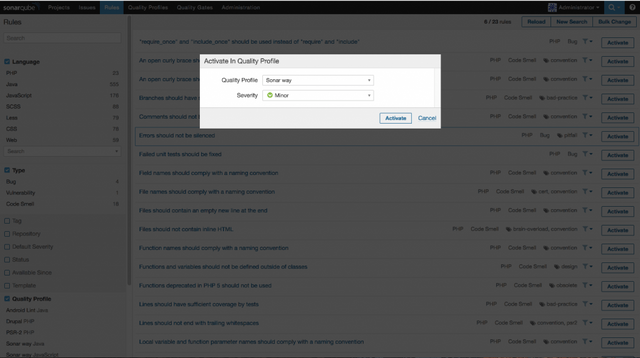

Connecting and setting one of the rules

The rules can be flexibly modified for each programming language and project, which lets us adjust the analysis to our quality standards at both the company level and the project level. The automatic analysis records whether these rules are followed, and this affects the final project assessment.

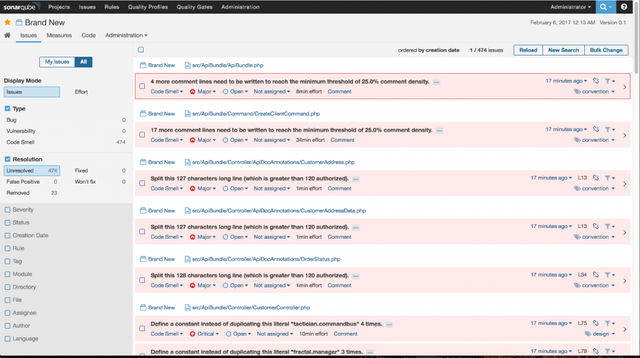

Errors found during analysis based on the set rules

The errors and rule violations found during analysis are totaled, and based on these figures the system determines whether the project meets the quality standards set in the Quality Gate.

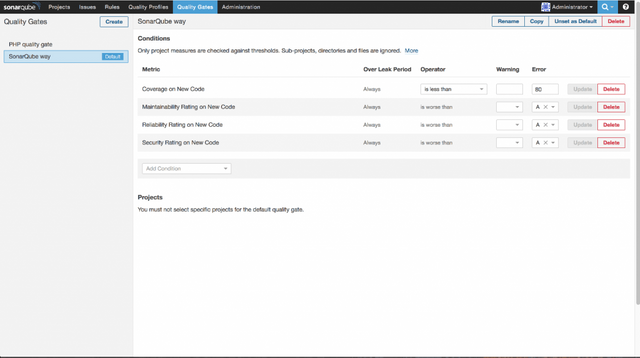

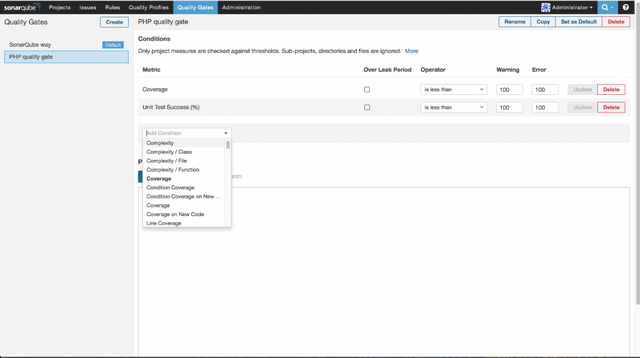

Quality Gate

Statistics gathering would not be complete without a quick answer from the system on whether the project meets our quality criteria. SonarQube lets us create a so-called Quality Gate, that is, a set of conditions the system uses to determine whether the project has passed the check.

Standard Quality Gate

We can create an unlimited number of Quality Gates and flexibly set the conditions under which a project is considered to have failed quality control.

Thanks to Quality Gate, we not only get a quick report on code quality (passed/failed), but can also integrate with CI systems that react automatically when the code fails the check. This allows us to incorporate SonarQube into the automatic project build and deployment process (CI and CD).
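Conceptually, a gate is a list of conditions evaluated against measured metrics. The sketch below models that idea in plain Python; it is not SonarQube's implementation, and the metric names and thresholds are illustrative assumptions.

```python
import operator
from dataclasses import dataclass


@dataclass(frozen=True)
class Condition:
    metric: str
    comparator: str   # "GT": fail when value > threshold, "LT": fail when value < threshold
    threshold: float


_OPERATORS = {"GT": operator.gt, "LT": operator.lt}


def evaluate_gate(conditions, metrics):
    """Return ("OK" or "ERROR", list of failed conditions)."""
    failed = [
        c for c in conditions
        if _OPERATORS[c.comparator](metrics[c.metric], c.threshold)
    ]
    return ("ERROR" if failed else "OK", failed)


# Hypothetical gate: at least 80% coverage, no vulnerabilities, at most 3% duplication.
GATE = [
    Condition("coverage", "LT", 80.0),
    Condition("vulnerabilities", "GT", 0),
    Condition("duplicated_lines_density", "GT", 3.0),
]

status, failed = evaluate_gate(
    GATE, {"coverage": 0.0, "vulnerabilities": 4, "duplicated_lines_density": 1.5}
)
print(status, [c.metric for c in failed])
```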
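One way a CI job can react to the gate is to read its status and turn it into a process exit code. The sketch below assumes the JSON shape returned by SonarQube's `api/qualitygates/project_status` Web API endpoint; the payload shown is a made-up sample, and the field names should be verified against the Web API documentation of your server version. No network call is made here.

```python
import json

# Made-up sample payload in the assumed shape of the project_status response.
SAMPLE_RESPONSE = """
{"projectStatus": {"status": "ERROR", "conditions": [
  {"status": "ERROR", "metricKey": "new_coverage", "comparator": "LT",
   "errorThreshold": "80", "actualValue": "42.0"},
  {"status": "OK", "metricKey": "new_duplicated_lines_density", "comparator": "GT",
   "errorThreshold": "3", "actualValue": "1.2"}
]}}
"""


def gate_exit_code(response_text: str):
    """Return (exit_code, failed_metric_keys) for a project_status response."""
    project_status = json.loads(response_text)["projectStatus"]
    failed = [
        condition["metricKey"]
        for condition in project_status.get("conditions", [])
        if condition["status"] == "ERROR"
    ]
    # Anything other than "OK" is treated as a failed build in this sketch.
    exit_code = 0 if project_status["status"] == "OK" else 1
    return exit_code, failed


print(gate_exit_code(SAMPLE_RESPONSE))
```

A build script could pass the returned code to `sys.exit` so that the CI server marks the build as failed.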

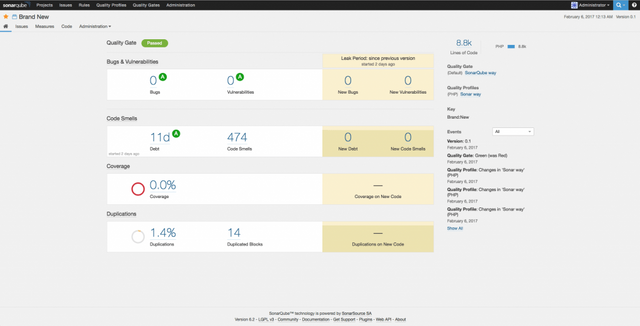

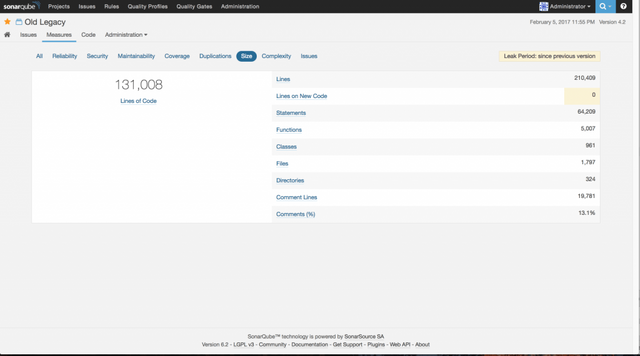

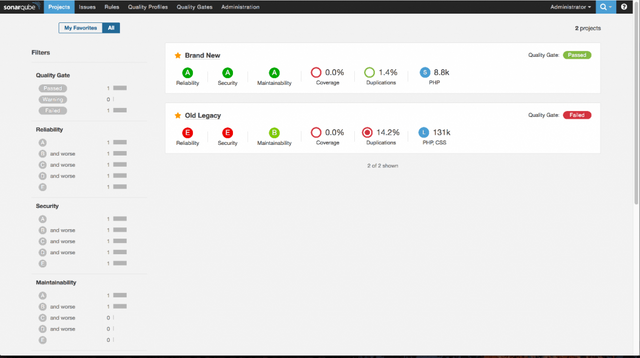

Comparative analysis of code quality

We are going to analyze two projects (let’s call them old-legacy and brand-new), pick the above-mentioned metrics and see how they affect code quality, project quality, and technical debt level.

For example, old-legacy failed the Quality Gate check

On the screen we can see the number of bugs and security vulnerabilities, the percentage of duplicated code, and the technical debt expressed in the man-days required to fix the problems. The debt can grow depending on code complexity, coupling, and coding style violations.

Size of old-legacy

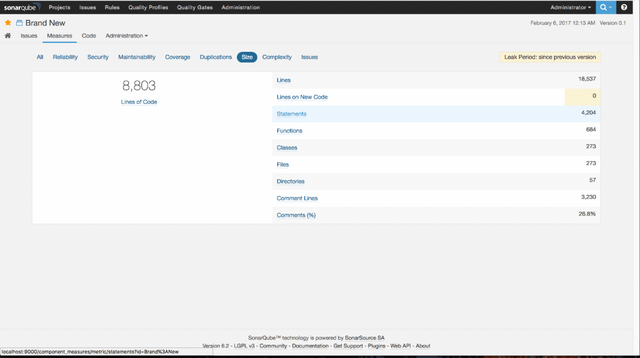

Size of brand-new

Detailed project size statistics. Here we can also see the percentage of comments in the code, which is a very valuable metric.

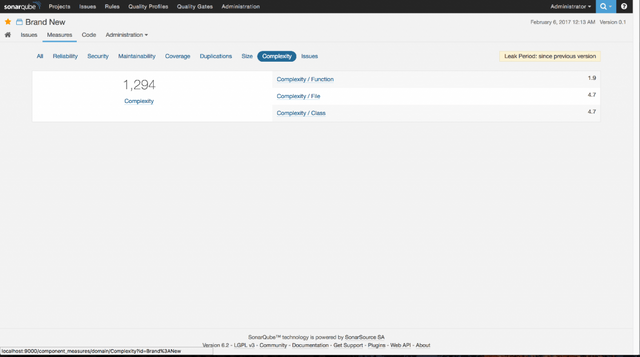

The average cyclomatic complexity of brand-new

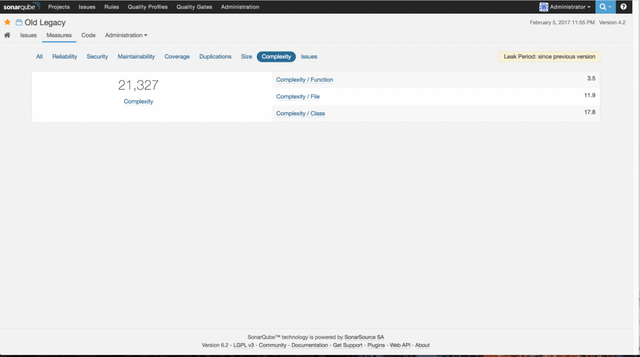

The average cyclomatic complexity of old-legacy

As we can see, old-legacy has more lines of code and has been developed for longer without code quality control. brand-new was started not long ago and is integrated into the code quality control process; it has fewer problems, and all of them can be detected at early stages.

Unit Tests

As we can see in the screenshots, both projects completely lack test coverage. For old-legacy, which has been developed for several years and is quite mature, this is unacceptable: it causes system stability issues and raises the question of whether it is reasonable to keep supporting the system.

Automatic unit tests are a great tool for making sure that the code performs the functions stated in the requirements and meets stakeholders’ expectations. Covering code with unit tests significantly reduces the number of errors that reach the QA team and helps developers detect most issues at the development stage.

Writing unit tests also forces developers to write more maintainable (testable) code, which benefits the project in general because it reduces technical debt. This is a necessity on every mature and fairly large project, since it keeps the project stable for a long time and makes the effect of changes predictable.
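As a small illustration of checking code against a stated requirement, the sketch below tests a hypothetical `apply_discount` function with Python's standard `unittest` module. The function and its rules are invented for this example.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical requirement: discounts are between 0 and 100 percent."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (100 - percent) / 100, 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_rejects_invalid_percent(self):
        # The requirement forbids discounts above 100%.
        with self.assertRaises(ValueError):
            apply_discount(200.0, 120)
```

Saved to a file, these tests can be run with `python -m unittest`, which is also how a CI job would typically execute them.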

However, since writing unit tests is usually the responsibility of the development team, writing them increases the development time, which is not acceptable for every project.

Unit tests are also a poor fit for prototype development, which tolerates bugs but requires high development velocity. They are equally impractical during the development of the first version of a product, when the project budget is quite limited. Our brand-new project is exactly at the stage of first-version development, so it is fine for it not to have unit tests at this time.

Automation (CI)

Jenkins is a tool for automatic project building and deployment. It runs the project build process, which can consist of unit testing, migrations, and project compilation. If an issue arises at any stage of the build, the build is considered failed and the development team receives a corresponding notification.

If the build is successful, Jenkins deploys it to the testing and production servers.
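The build flow described above (run stages in order, stop and notify on the first failure, deploy only on success) can be sketched in a few lines. This is a conceptual model, not Jenkins code; the stage names, `run_build`, and `build_and_deploy` are hypothetical.

```python
from typing import Callable, Iterable, Tuple

Stage = Tuple[str, Callable[[], None]]


def run_build(stages: Iterable[Stage], notify: Callable[[str], None]) -> bool:
    """Run stages in order; stop and notify the team at the first failure."""
    for name, step in stages:
        try:
            step()
        except Exception as exc:
            notify(f"Build failed at stage '{name}': {exc}")
            return False
    return True


def build_and_deploy(stages: Iterable[Stage],
                     deploy: Callable[[], None],
                     notify: Callable[[str], None]) -> bool:
    """Deploy only when every build stage has succeeded."""
    if not run_build(stages, notify):
        return False
    deploy()
    return True


def failing_tests() -> None:
    raise RuntimeError("2 tests failed")


# Hypothetical run: the unit test stage fails, so nothing is deployed.
deployed = build_and_deploy(
    [("compile", lambda: None), ("unit tests", failing_tests)],
    deploy=lambda: print("deployed"),
    notify=print,
)
print(deployed)
```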

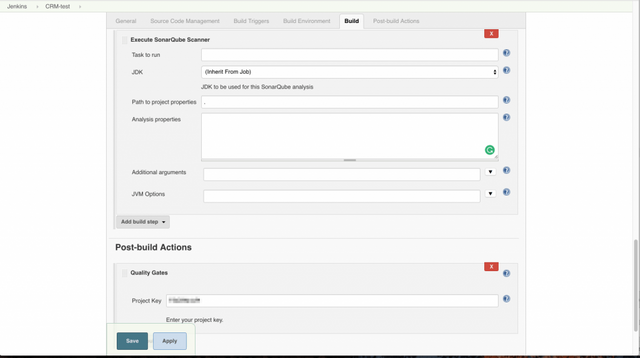

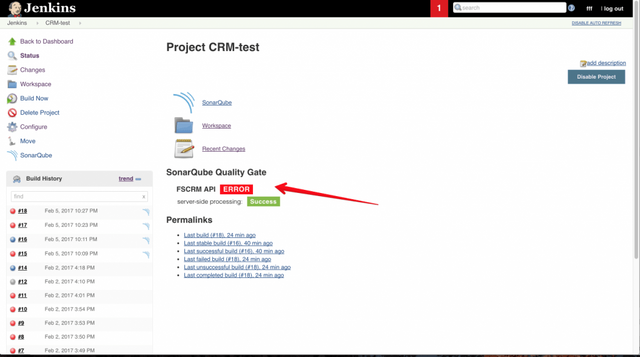

To get the most out of SonarQube, we need to incorporate it into our build process. Integrating SonarQube with Jenkins lets us analyze the code automatically during every build and mark a build as failed if it doesn’t pass the Quality Gate.

Jenkins

Adding code analysis with SonarQube Quality Gate to the project build and getting the results of scanning afterward.

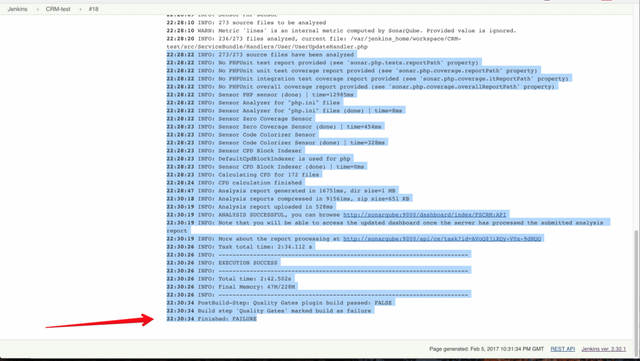

Project analysis during the build process and getting inspection results with SonarQube.

The build didn’t pass Quality Gate and is considered to be failed.

Takeaway

When the software development process is set up properly and the right analysis and automation tools are used, one can be confident that the quality of the implementation will keep the project maintainable and stable for a long time.

The metrics for code quality assessment described above apply to almost every platform and make it possible to control code quality on any project. Assessment according to these metrics is objective and lends itself well to automation, which makes it possible to evaluate the quality of work of any development team.
