Putting Wikipedia on the blockchain

There's this pet project I have, the Daisy blockchain, which uses SQLite databases as blocks. Literally, each block is a completely normal SQLite database and can be accessed (read-only) as such. It progresses from time to time, when I find the round tuit to work on it, mostly on weekends. One of the things I envisioned Daisy would be good for is periodic distribution of authored (and authoritative) data, since it's primarily a PoA (proof-of-authority) blockchain.

This is how it plays out: to distribute something which is digitally signed today, the receivers of this data need to be familiar with PGP, or even clunkier proprietary systems. If this data is in the form of a database, it's even worse. So a blockchain which is made from scratch to work as a database, which works "plug & play" without special configuration, which guarantees that the data is indeed authored by the party it's supposed to be authored by, and which stores the data in a fairly standardised and easy to access format, would be a good way to distribute practically any widely-read data.

Wikipedia's data is definitely in this category because Wikipedia's articles go through a fair bit of vetting and review. The end result (though not perfect and certainly not immune to trolling, fake news and other entropic decay) is much better than a random collection of articles pulled from anonymous wikis and forums. As such, there is value in having them authenticated as coming directly from Wikipedia.

How it's done

Over the last few weekends I've been working on pulling Wikipedia articles into a blockchain powered by Daisy (as it's configurable and can easily be used to create and maintain custom blockchains). After searching around, it became clear that the only practical way of getting actual article texts was from XML dumps, so that's the way I went. I made a utility project, W2B, which imports such dumps into a SQLite database and can update an existing database from a newer dump, while at the same time creating a "diff" database containing only new and updated articles. By repeating this step for each new XML dump published by Wikimedia, we get a series of "diff databases" which can be imported into Daisy as blockchain blocks.
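To make the "diff database" idea concrete, here is a minimal Python sketch of the update step. It is not W2B's actual code: the function name apply_dump is made up for illustration, the XML parsing is left out entirely (articles arrive as plain (title, text) pairs), and the schema is an assumption, reduced to the page table with the title and text columns that the query example further down uses.

```python
import sqlite3

# Assumed minimal schema; the real W2B tables very likely have more columns.
SCHEMA = "CREATE TABLE IF NOT EXISTS page (title TEXT PRIMARY KEY, text TEXT)"


def apply_dump(main_db, diff_db, articles):
    """Update main_db from (title, text) pairs taken from a newer dump.

    Every article that is new or whose text changed is also written to
    diff_db. Returns the number of articles written to diff_db.
    """
    main_db.execute(SCHEMA)
    diff_db.execute(SCHEMA)
    changed = 0
    for title, text in articles:
        row = main_db.execute(
            "SELECT text FROM page WHERE title = ?", (title,)
        ).fetchone()
        if row is not None and row[0] == text:
            continue  # unchanged since the previous dump, not part of the diff
        for db in (main_db, diff_db):
            db.execute(
                "INSERT OR REPLACE INTO page (title, text) VALUES (?, ?)",
                (title, text),
            )
        changed += 1
    main_db.commit()
    diff_db.commit()
    return changed
```

Called once per dump with a fresh diff_db, this leaves one small database per dump, each holding only what changed since the previous one.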

Since these blocks are normal SQLite databases, they can be easily queried. While this does mean that multiple blocks need to be queried to find a particular article, I think there's also an upside: if blocks are queried in the order of most recent to the oldest, there's a fair chance that the article will be found in the last few most recent blocks, as popular articles tend to be updated often.
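The newest-first lookup described above could look roughly like this in Python. This is a sketch under assumptions, not how Daisy does it internally: find_article is a hypothetical helper, the caller is expected to supply the block files already ordered from oldest to newest (how Daisy names and orders them on disk is not covered here), and every block is assumed to have a page table with title and text columns.

```python
import sqlite3
from pathlib import Path


def find_article(block_paths, title):
    """Look for an article starting from the most recent block.

    block_paths must be ordered oldest to newest. Returns a
    (block_path, text) tuple for the newest block containing the
    title, or None if no block has it.
    """
    for path in reversed(block_paths):
        # Open read-only, since blocks are not supposed to be modified.
        uri = Path(path).resolve().as_uri() + "?mode=ro"
        db = sqlite3.connect(uri, uri=True)
        try:
            row = db.execute(
                "SELECT text FROM page WHERE title = ?", (title,)
            ).fetchone()
        finally:
            db.close()
        if row is not None:
            return path, row[0]
    return None
```

The search stops at the first hit, so an article that was updated recently costs a single query, while a rarely edited one may walk all the way back to the first block.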

I've chosen the "Simple English" Wikipedia to start with, as it's much smaller than the original English one, containing fewer than 270,000 records at this time. The first block, containing a whole data import, is around 575 MB in size. The few subsequent blocks I made weigh in at around 40 MB each. Note that the bzipped XML dump for the Simple English Wikipedia is around 150 MB, so there's quite a bit of expansion, as expected.

Participating in the Wikipedia blockchain

Here's a cookbook:

- Download and build Daisy (it's written in Go)

- Download this archive with the blockchain seed directory and unpack it somewhere

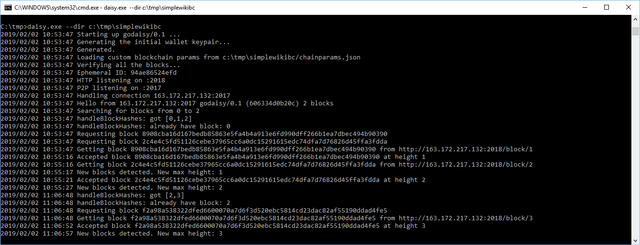

- Start Daisy as ./daisy --dir /path/to/simplewikibc

That's it - your instance of Daisy will start receiving existing and new blocks as they appear in the network.

You can query the blockchain either manually, by inspecting the individual SQLite files stored in the above directory, or by using the daisy tool as:

./daisy --faster query "select text from page where title='Tetris'"

The --faster argument tells Daisy to skip blockchain integrity verification at start.

The output of this operation will be a set of newline-separated JSON objects, each containing a single found result. In this case, there will be at least two rows, as there have been two revisions of this article.
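If you want to consume that output from a script, something like the following Python sketch should do. The command line is the one shown above; the helper names are made up, and the shape of each JSON object (for instance, whether the key is called text after the selected column) is an assumption you should check against what your Daisy build actually prints.

```python
import json
import subprocess


def parse_rows(output):
    """Parse newline-separated JSON objects, ignoring blank lines."""
    return [json.loads(line) for line in output.splitlines() if line.strip()]


def run_query(sql, daisy="./daisy"):
    """Run a Daisy query and return the decoded result rows.

    Assumes the daisy binary is at the given path and writes only the
    JSON rows to standard output.
    """
    result = subprocess.run(
        [daisy, "--faster", "query", sql],
        capture_output=True,
        text=True,
        check=True,  # raise if daisy exits with an error
    )
    return parse_rows(result.stdout)
```

With that in place, run_query("select text from page where title='Tetris'") would return a list of dictionaries, one per revision found.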

What's next?

There's a lot of stuff I'd like to do with this, like:

- Build a real web front-end for these Wikipedia blockchains, which would make them generally usable out-of-the-box by less technically-minded people

- Make Daisy more robust and featureful, especially with regard to networking -- as well as do a fair amount of refactoring (it was kind of my first project in Go ever...)

- Make the Wikipedia import process faster and more automated

- Solve world hunger?

I've been toying around with the idea that, if there's enough interest in something like this, I could ask for crowd-funding to pay my salary for 6 months or so, to make it a polished, easy to use solution which would be as much plug & play as possible. Just download a package (or an installer), click on a few things, and boom: you're participating in distributing Wikipedia data world-wide, making the whole idea more resilient, and you have your own local copy (we'll need to see what can be done about images, etc.).

What do you think? If this is an idea worth pursuing, leave a comment below.