How a facial recognition algorithm works

If a surveillance system could recognize faces, it would be much more useful. It could help prevent crime, as well as find terrorists. This idea inspired us, so we started to build intelligent software which can make surveillance even smarter.

We began working on facial recognition algorithms about two years ago. Before that, our researchers worked on computer vision solutions and developed pay.cards, a library for recognizing bank card data. That project took about a year to complete.

So, we have more than four years of experience with neural networks and deep learning in the computer vision field.

When we decided to develop Faceter, we began to research and test all of the libraries available on the market. At that time, we could find only two kinds of solutions: open-source libraries that recognized faces in still pictures (not in a video stream) with low accuracy, and proprietary products with high prices and high technical requirements.

Therefore, we decided to make advanced facial recognition available for everyone, creating our own algorithm for video stream analysis in real time. It is the core technology of our product.

Faceter is software that makes video surveillance smarter. We want Faceter to work like a human CCTV operator who sits in a special room with many screens, watching the video streams from numerous cameras simultaneously. Like in the movies.

But this is no ordinary operator; it is an employee with superpowers. Faceter can remember millions of faces and recognize them. This guy rarely sleeps, doesn’t smoke or take breaks, can’t be upset or distracted, and never loses focus.

Our intelligent software can follow a specific person from camera to camera as he or she moves. It can also tell whether this person shouldn’t be there at this hour, and it can look up the history of when this person was seen there before.

But we have much more in mind. In 2–3 years, Faceter will also react to sounds and will recognize people by their silhouette or by just a small visible part of the face. Our system will be connected to municipal services (emergency, police and fire-alarm systems). It will even understand emotions, “read” movements and detect whether someone is violent or is about to do something bad. It will really help to prevent crime and dangerous situations and to catch terrorists and criminals.

The core technology is already here: Faceter can recognize faces quite well. On the LFW benchmark, our algorithm’s accuracy is 99.75%. This result was achieved with a single neural network, the same one we actually use in the product.
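For context, LFW is a face verification benchmark: the algorithm is shown pairs of photos and has to decide whether both show the same person, and accuracy is the share of pairs it gets right. Here is a minimal sketch of that calculation, assuming one similarity score per pair and a fixed decision threshold. The scores, the threshold and the function name are made up for illustration; this is not Faceter’s evaluation code.

```python
def verification_accuracy(scored_pairs, threshold):
    """Return the share of pairs that are classified correctly.

    scored_pairs: list of (similarity, is_same_person) tuples.
    A pair is predicted "same person" when similarity >= threshold.
    """
    if not scored_pairs:
        raise ValueError("need at least one pair")
    correct = sum(
        (similarity >= threshold) == is_same
        for similarity, is_same in scored_pairs
    )
    return correct / len(scored_pairs)


# Made-up scores, for illustration only.
pairs = [
    (0.91, True),   # same person, high similarity: correct
    (0.78, True),   # same person, above threshold: correct
    (0.12, False),  # different people, low similarity: correct
    (0.65, False),  # different people, but above threshold: an error
]
accuracy = verification_accuracy(pairs, threshold=0.5)
print(f"{accuracy:.2%}")  # 3 of 4 pairs correct -> 75.00%
```

If the standard LFW protocol of 6,000 pairs is assumed, an accuracy of 99.75% corresponds to about 15 misclassified pairs.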

It has been tested in real life as well: we recently completed three successful proof-of-concept projects. One of them was carried out at a fast-food chain in Johannesburg, where Faceter was deployed on the video surveillance systems of restaurants that are very well known in Southern Africa. The case of Debonairs Pizza is especially interesting: the company is going to use Faceter as part of its CRM system to improve relationships with customers and increase their loyalty. We will cover it in detail in one of our next posts.

Now we would like to focus on the core technology and explain exactly how our facial recognition (FR) algorithm works.

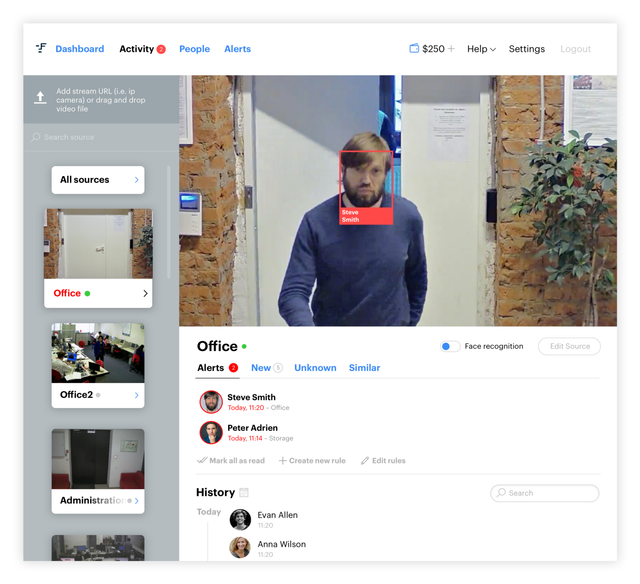

This screenshot shows the video stream from our office camera.

The red square shows that the face was detected. Faceter recognizes this face and identifies the person. If it were an unknown person, Faceter would name the picture “Person [number].”

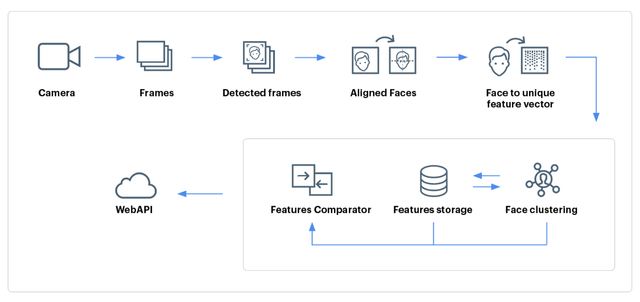

The entire facial recognition process goes through several stages.

The system receives the video stream and analyzes every frame in real time. When a face is detected, Faceter captures several shots of it and crops the face from each one. In real life, a person can move, turn and even lower their head, so at the next stage the system aligns the face in each image. The following stage can then analyze a frontal view of the face, which improves accuracy. After that, the system builds a unique vector that describes the facial features (for instance, the distance between the eyes, the length of the forehead, the nose, skin tone, etc.).
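To make the alignment stage more concrete, here is a small sketch of one common approach: rotate the face so that the line between the eyes becomes horizontal. This is an assumption for illustration, since the article does not say how Faceter aligns faces. The landmark names and coordinates are hypothetical, and the sketch only corrects in-plane tilt, not a head that is turned sideways or lowered.

```python
import math


def roll_angle(left_eye, right_eye):
    """Angle (radians) of the line between the eyes relative to horizontal."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.atan2(dy, dx)


def rotate_point(point, center, angle):
    """Rotate a 2D point around a center by the given angle (radians)."""
    px, py = point[0] - center[0], point[1] - center[1]
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    return (
        center[0] + px * cos_a - py * sin_a,
        center[1] + px * sin_a + py * cos_a,
    )


def align_landmarks(landmarks):
    """Rotate all landmarks so that both eyes end up at the same height."""
    left, right = landmarks["left_eye"], landmarks["right_eye"]
    center = ((left[0] + right[0]) / 2, (left[1] + right[1]) / 2)
    angle = roll_angle(left, right)
    # Rotating by the negative angle cancels the tilt of the eye line.
    return {
        name: rotate_point(point, center, -angle)
        for name, point in landmarks.items()
    }


# Hypothetical pixel coordinates of a tilted face.
tilted = {"left_eye": (30.0, 40.0), "right_eye": (70.0, 60.0), "nose": (50.0, 70.0)}
aligned = align_landmarks(tilted)
print(f"tilt before: {math.degrees(roll_angle(tilted['left_eye'], tilted['right_eye'])):.1f} degrees")
print(f"tilt after: {abs(math.degrees(roll_angle(aligned['left_eye'], aligned['right_eye']))):.1f} degrees")
```

In a real pipeline the same rotation would be applied to the cropped image itself, not just to the landmark points.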

The actual process of creating the vector is much more complicated, but it is very similar to the way we notice features in faces. The vector is not a simple table of parameters; rather, it is a complex “idea” of what defines this specific face and how it differs from others, converted into a data vector through a mathematical process.
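The network that produces the vector is beyond the scope of this post, but what such a vector is good for can be shown with toy numbers. In the sketch below, each face is a short list of floats, and two faces are compared with cosine similarity. Both the four-dimensional vectors and the choice of cosine similarity are assumptions for illustration; real face vectors are much longer, and the article does not say which measure Faceter uses.

```python
import math


def normalize(vector):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]


def cosine_similarity(a, b):
    """Return a value in [-1, 1]; higher means the faces look more alike."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


# Made-up vectors: two frames of the same person and one stranger.
steve_frame_1 = [0.9, 0.1, -0.3, 0.4]
steve_frame_2 = [0.8, 0.2, -0.2, 0.5]
stranger = [-0.5, 0.7, 0.6, -0.1]

print("same person:", round(cosine_similarity(steve_frame_1, steve_frame_2), 3))
print("different people:", round(cosine_similarity(steve_frame_1, stranger), 3))
```

Vectors of the same face point in nearly the same direction, so their similarity is close to 1, while vectors of different faces score much lower.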

A vector is created for each frame that contains the face, so we end up with a set of vectors. The system analyzes them, clusters them and extracts key vectors, which are sent to storage. This cluster of vectors is then compared with the others. Finally, the comparator sends the results through an API to any other system.
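The clustering and comparison steps can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not Faceter’s implementation: it uses a greedy clustering that keeps one averaged key vector per cluster, compares key vectors to storage with cosine similarity, and names unmatched faces “Person [number]”. The thresholds, function names and vectors are all hypothetical.

```python
import math


def normalize(vector):
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]


def cosine(a, b):
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def mean_vector(vectors):
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def extract_key_vectors(frame_vectors, same_cluster=0.9):
    """Greedy clustering: a vector joins the first cluster whose centroid is
    similar enough, otherwise it starts a new cluster. Returns one
    unit-length centroid (the "key vector") per cluster."""
    clusters = []
    for vector in frame_vectors:
        for members in clusters:
            if cosine(vector, mean_vector(members)) >= same_cluster:
                members.append(vector)
                break
        else:
            clusters.append([vector])
    return [normalize(mean_vector(members)) for members in clusters]


def identify(key_vectors, storage, match_threshold=0.8):
    """Return the best-matching stored name, or None if nothing is close."""
    best_name, best_score = None, match_threshold
    for name, stored_vectors in storage.items():
        for stored in stored_vectors:
            for key in key_vectors:
                score = cosine(key, stored)
                if score >= best_score:
                    best_name, best_score = name, score
    return best_name


def identify_or_enroll(key_vectors, storage):
    """Name a known face, or store an unknown one as "Person N"."""
    name = identify(key_vectors, storage)
    if name is None:
        unknown_count = sum(n.startswith("Person ") for n in storage)
        name = f"Person {unknown_count + 1}"
        storage[name] = key_vectors
    return name


# Made-up data: Steve is already in storage, the stranger is not.
storage = {"Steve": [[0.9, 0.1, -0.3, 0.4]]}
steve_frames = [[0.8, 0.2, -0.2, 0.5], [0.85, 0.15, -0.25, 0.45], [0.9, 0.1, -0.35, 0.4]]
stranger_frames = [[-0.5, 0.7, 0.6, -0.1], [-0.45, 0.75, 0.55, -0.05]]

print(identify_or_enroll(extract_key_vectors(steve_frames), storage))
print(identify_or_enroll(extract_key_vectors(stranger_frames), storage))
```

Storing only a few key vectors per person, instead of one vector per frame, keeps the storage small and the comparison fast, which matters when the number of stored faces grows.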

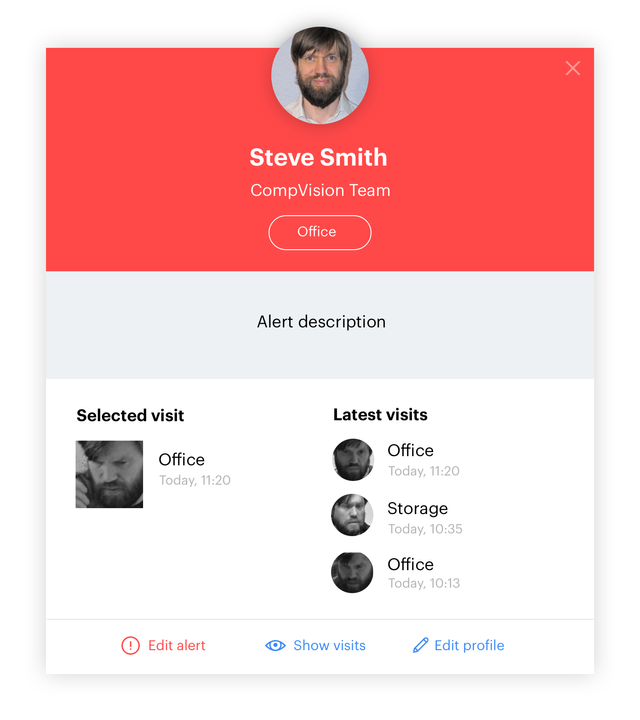

In our case, we receive the results and display them on the screen.

So, you see the name, job title and place where the person was detected. You can also check when Steve was last in the office and how many times he visited the office this week. Customers can build specific rules for each person, with white and black lists and time limitations. Additional information can be shown on the screen. For instance, shop or restaurant employees can see the history of purchases, the type of loyalty card, and favourite dishes or goods. This means that a waiter can come over, greet the customer by name and offer a discount on their favourite dishes.
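As an illustration of what per-person rules might look like, here is a minimal sketch with a white list, a black list and allowed hours. The data model, names and alert texts are hypothetical and are not Faceter’s actual rule format. The sketch also assumes that a person without any rule should raise an alert, which is a policy choice.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import Optional


@dataclass
class PersonRule:
    list_type: str                       # "white" or "black"
    allowed_from: Optional[time] = None  # start of the allowed time window
    allowed_until: Optional[time] = None  # end of the allowed time window


def check_sighting(name, seen_at, rules):
    """Return an alert message for a sighting, or None if all is fine."""
    rule = rules.get(name)
    if rule is None:
        return f"{name}: not on any list"
    if rule.list_type == "black":
        return f"{name}: on the black list"
    if rule.allowed_from is not None and rule.allowed_until is not None:
        # Simplification: the window must not cross midnight.
        if not (rule.allowed_from <= seen_at.time() <= rule.allowed_until):
            return f"{name}: outside allowed hours"
    return None


rules = {
    "Steve": PersonRule("white", allowed_from=time(8, 0), allowed_until=time(19, 0)),
    "Mallory": PersonRule("black"),
}

print(check_sighting("Steve", datetime(2017, 10, 16, 10, 30), rules))
print(check_sighting("Steve", datetime(2017, 10, 16, 23, 0), rules))
print(check_sighting("Mallory", datetime(2017, 10, 16, 10, 30), rules))
```

The first call returns no alert, while the other two flag a visit outside the allowed hours and a black-listed person.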

Faceter can be installed on-premises, inside the customer’s infrastructure. The more cameras the company wants to use for face recognition, the more servers and computing power it will need. The number of faces that must be recognized also matters.

The online solution is quite easy to use: you just need to sign up and add RTSP, HTTP or RTMP links to your video camera. Very soon, we will add the ability to connect cameras through an ONVIF interface.

Right now, Faceter is available for business customers only. But at the end of this year, we will launch a beta version for the mass market, so that anyone who has a camera with an internet connection will be able to use it.
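For readers who have not worked with camera links before, here is a small sketch of what such a link contains and how it could be checked before it is added. The function name and the example address are hypothetical; only the three protocols come from the list above.

```python
from urllib.parse import urlsplit

# The three link types mentioned above.
SUPPORTED_SCHEMES = {"rtsp", "http", "rtmp"}


def parse_camera_link(link):
    """Split a camera link into its parts, rejecting unsupported protocols."""
    parts = urlsplit(link)
    if parts.scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported protocol: {parts.scheme!r}")
    if not parts.hostname:
        raise ValueError("the link has no host name or IP address")
    return {
        "scheme": parts.scheme,
        "host": parts.hostname,
        "port": parts.port,  # None when the link does not specify a port
        "path": parts.path,
    }


# A made-up camera address on a local network.
print(parse_camera_link("rtsp://192.168.1.10:554/stream1"))
```

A link that passes this check is well formed, but that does not guarantee that the camera is reachable or that the stream can be decoded.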

We feel that advanced technologies must be distributed equally so that everyone can benefit from their advantages.

Originally published at becominghuman.ai on October 16, 2017.