Is Intelligence an Algorithm? Part 7: Artificial Intelligence Pathologies

Can artificial intelligence systems lose their mind? The HAL 9000 computer in the science fiction story 2001: A Space Odyssey did. The Major V system in "Red Beard & the Brain Pirate" by Moebius and Jodorowsky also went bonkers. Now that artificial intelligence is becoming mainstream, this possibility apparently scares the hell out of us.

In this article I will explore some of the dangers arising from a potential lack of “mental sanity” in artificial intelligence (AI) systems when we allow artificial general intelligence systems to evolve towards and/or beyond human intelligence. I will also propose some engineered solutions that can serve as a prophylaxis against these disorders.

This article is a first exploration, a brainstorming exercise on the notion of mental derailment of AIs. It is by no means a complete overview of all the psychological conditions a human being can have and of what their artificial equivalents in AI systems could be, although building such a correspondence map is a great future project I might one day be tempted to embark upon.

Background

Artificial general intelligence (AGI) aims to achieve what is called strong AI: machine intelligence capable of performing any intellectual task a human being can, and even more than that. Unlike present-day artificial systems, which are dedicated to a specific task (IBM’s Deep Blue plays chess, Google DeepMind’s AlphaGo plays Go, Tesla’s Autopilot allows cars to drive themselves), future strong AI would be capable of performing any cognitive task. Ideally the system is capable of common-sense reasoning. Ben Goertzel’s OpenCog software implemented in the NAO robot is a good example of the first steps made in this area.

On the one hand there is the development of so-called neural networks, computational structures that in a certain way mimic networks of neurons in the brain. On the other hand there are genetic algorithms, which iteratively mutate and select candidate solutions and can thus adapt to new situations. Finally, in my previous two articles in this series I suggested that any indicator in an AI that monitors its own performance and compares it to a set goal is in fact a kind of artificial emotion. The changes in behaviour of the system following a change in the status of the indicator could be called the emotional response of the system.
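The notion of an indicator that compares performance with a set goal, and of a behaviour change that follows from its status, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class name `EmotionIndicator`, the 0 to 1 performance scale, the tolerance band and the two status labels are all hypothetical and do not come from any existing AI framework.

```python
from dataclasses import dataclass


@dataclass
class EmotionIndicator:
    """Hypothetical indicator comparing measured performance with a set goal."""

    goal: float               # desired performance level (assumed scale 0..1)
    tolerance: float = 0.05   # assumed band below the goal still counted as success

    def status(self, performance: float) -> str:
        # The "artificial emotion" is simply the state of this comparison.
        if performance >= self.goal - self.tolerance:
            return "satisfied"
        return "frustrated"


def emotional_response(status: str) -> str:
    """Map the indicator status to a change in behaviour (the 'emotional response')."""
    responses = {
        "satisfied": "keep current strategy",
        "frustrated": "switch strategy",
    }
    return responses[status]


indicator = EmotionIndicator(goal=0.8)
behaviour = emotional_response(indicator.status(performance=0.5))
```

With a goal of 0.8 and a measured performance of 0.5 the indicator reports "frustrated", and the response is to switch strategy.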

In this series I have set out my hypothesis that intelligence functions as a kind of algorithm (see part 1 of this series). Part 2 related to cognition, (pattern) recognition and understanding. Part 3 explored the process of reasoning, which is necessary to come to identifications and conclusions and is also a tool in the problem-solving toolkit. In part 4 I discussed how we identify and formulate a problem, how we plan a so-called heuristic to solve it, and how we carry out the solution and check whether it fulfils our requirements. Part 5 discussed how emotions are indicators which are part of the natural intelligence algorithm and which indicate whether a desired state has been achieved or maintained. Part 6 deals with emotional intelligence, i.e. how we can intelligently respond to our emotions and not be overwhelmed by them.

In this article I will explore four avenues along which psychological problems could arise in the engineering of AI, and what prophylactic solutions could avoid the need to repair the system once the damage is done. In addition, this analysis may give us hints on how to approach the corresponding disorders (if any) in human beings.

The four avenues I wish to explore relate to:

- nodal saturation and autism spectrum disorders

- feedforward complexes, psychosis and OCD

- emotional responses and hysteria

- telling true from false and agnosia

Nodal saturation and autism spectrum disorders

A couple of years ago I came across a very interesting hypothesis by Tim Gross (pseudonym /:set\AI), an experimental electronic musician and philosopher trained in computer science, physics and electrical engineering. Tim Gross suggested that once you (or an AI) reach a level of intelligence beyond that of a human genius, you might get an autism spectrum pathology, at least if neural networks form the basis of your system. He suggests that this is because, beyond a certain number of nodal connections (each neuron can be considered a node), the network forms a certain “horizon of interaction”: if you add more connections than optimal between local nodes that are relatively close to each other, information from nodes positioned further away can no longer reach the local nodes because of the local noise that is generated. A so-called self-dampening effect occurs because the local connections exponentially outnumber the distant connections.

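In mathematical graph theory the local system forms a so-called clique: a subgraph in which every node is connected to every other node. A clique is called maximal if no further node can be added without breaking this property.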

A clique as maximally connected subgraph. Image from http://www.dharwadker.org/clique/

A neural network that forms such a clique is like a self-absorbed system that can hardly, if at all, take in information from its environment. In other words, the system behaves in an autistic manner. Worse, if multiple such cliques form, you can get a kind of multiple personality syndrome (dissociative identity disorder, which is often popularly confused with schizophrenia). The cliques themselves are highly intelligent as regards the task they are devoted to, but they are extremely task oriented. They are like savants: autistic people who can perform one task extremely well whilst not being capable of interacting with their environment in a common-sense way.
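To make the notion of a clique and its weak coupling to the rest of the network a little more concrete, here is a minimal Python sketch. The toy graph, the function names and the "external link ratio" are my own assumptions for illustration; they are not taken from Tim Gross's hypothesis, and the ratio is only a crude stand-in for how outward oriented a group of nodes is.

```python
from itertools import combinations


def is_clique(adjacency, nodes):
    """True if every pair of the given nodes is directly connected."""
    return all(b in adjacency[a] for a, b in combinations(nodes, 2))


def is_maximal_clique(adjacency, nodes):
    """True if the nodes form a clique that no further node can join."""
    if not is_clique(adjacency, nodes):
        return False
    members = set(nodes)
    for candidate in adjacency:
        # A candidate linked to every member would extend the clique.
        if candidate not in members and members <= adjacency[candidate]:
            return False
    return True


def external_link_ratio(adjacency, nodes):
    """Share of the group's links that lead outside the group (assumed measure)."""
    members = set(nodes)
    internal = sum(len(adjacency[n] & members) for n in members) // 2
    external = sum(len(adjacency[n] - members) for n in members)
    total = internal + external
    return external / total if total else 0.0


# Toy undirected network: a, b, c, d are fully interconnected; only d links outward.
network = {
    "a": {"b", "c", "d"},
    "b": {"a", "c", "d"},
    "c": {"a", "b", "d"},
    "d": {"a", "b", "c", "e"},
    "e": {"d", "f"},
    "f": {"e"},
}

group = {"a", "b", "c", "d"}
clique_found = is_maximal_clique(network, group)
outward_share = external_link_ratio(network, group)
```

In this toy network the four nodes form a maximal clique with six internal links and a single link to the outside, so only one seventh of its connections reach the environment.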

Interestingly, each clique can form a so-called autopoietic system: a system capable of maintaining itself (and, in biological systems, reproducing itself), extremely proficient in self-organisation but very little outward oriented. If you have such structures in your mind, this can become problematic. Self-absorption is not uncommon among highly intelligent, task-oriented geniuses.

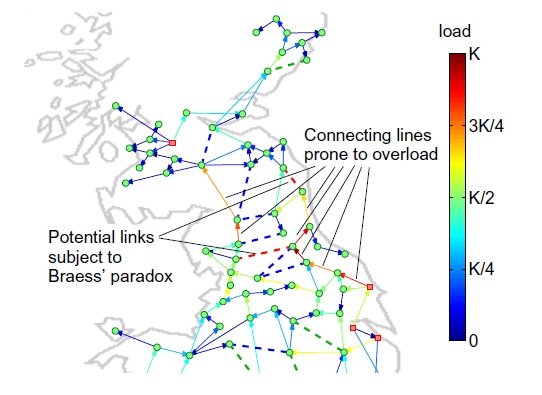

This also reminds me, on a tangent, of the “Braess paradox”: the counterintuitive situation in which adding more pathways to relieve congestion in a flow network (e.g. blood circulatory systems, traffic networks or power grids) creates more congestion beyond a certain point. There is a similar phenomenon whereby, if you continue to add more and more order to a system, at a certain point chaos emerges and no further gains in decreasing entropy can be obtained.

Image from http://phys.org/news/2012-10-power-grid-blackouts-braess-paradox.html
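The Braess paradox can be checked with some simple arithmetic. The sketch below uses the usual textbook-style road network, not data from the article linked above: the number of drivers, the 45-minute wide roads and the narrow roads whose travel time is the number of cars divided by 100 are all assumed values chosen for illustration.

```python
DRIVERS = 4000          # assumed number of drivers going from start to end
FIXED_MINUTES = 45.0    # assumed travel time on each wide, uncongested road


def narrow_road_minutes(cars: float) -> float:
    """Assumed travel time on a narrow road: grows with the number of cars."""
    return cars / 100.0


def time_without_shortcut(drivers: int) -> float:
    # Two symmetric routes (narrow + wide, wide + narrow). At equilibrium the
    # drivers split evenly, otherwise switching routes would pay off.
    return narrow_road_minutes(drivers / 2) + FIXED_MINUTES


def time_with_shortcut(drivers: int) -> float:
    # A free shortcut now links the two narrow roads. Even when every driver
    # uses a narrow road it is still faster than a wide road, so everybody
    # ends up taking narrow + shortcut + narrow.
    assert narrow_road_minutes(drivers) < FIXED_MINUTES
    return narrow_road_minutes(drivers) + 0.0 + narrow_road_minutes(drivers)


print(time_without_shortcut(DRIVERS))  # 65.0
print(time_with_shortcut(DRIVERS))     # 80.0
```

Under these assumptions the extra pathway raises everybody's travel time from 65 to 80 minutes, although no individual driver can do better by avoiding it.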

Perhaps there are limits to complexity when it comes to functional gains for intelligence or neural networks. Perhaps the human brain is already quite optimised. As I said before, it is also often observed that people who become extremely proficient at solving analytical problems lose out on the social-emotive side and become more and more introverted, if not autistic, like savants.

So how can we avoid this so-called nodal saturation if we try to achieve superintelligent artificial general intelligence? In trying to perform a task better than a human we would seem to get stuck in a paradox: as soon as the system starts to outperform the human being, it specialises in a specific task, which absorbs the system to such an extent that it can no longer perform the general cognitive tasks needed to interact with its environment in a common-sense way.

There are three solutions I can think of:

Firstly, we must figure out the maximal connectivity that does not give rise to clique formation and still allows for interaction with the environment. We can then try to limit the nodal connectivity to an optimised overall level.

Secondly, if a clique forms nonetheless, we can try to make the network interact with its environment via connections of a different type than the neural connections of the system. In the human body, hormones form such a different signalling network, operating semi-independently of our neuronal flux patterns.

Thirdly, the routines associated with the cliques can be embedded as subroutines in a hierarchical system which itself does not suffer from nodal saturation. We only need to ensure that there is one link that triggers the clique to operate and that the output is funnelled towards the right target. The trigger can then be of a non-neural-network nature, as suggested above. Thus you get a kind of society of minds (if you consider each clique a mind).

Notably, if we were to consider nature as a whole as a kind of neural network, we would realise that nature has solved this problem whilst allowing for clique formation. In fact every living cell, every living organ and every living organism is a stratum in a hierarchy of almost-clique entities, autopoietic systems with only a limited degree of interaction with their environment. Between each level of organisation there is what Turchin calls a “meta-system transition”: 10 billion macromolecules organise to form a cell, 10 billion cells organise to form a multicellular organism. Soon there will be 10 billion people, and via the internet we are already on our way to organising ourselves into a global-brain-like structure.

Through its hierarchical co-existence of different levels, which interact via a plurality of mechanisms, nature appears to have solved the problem of nodal saturation. Yet there is one bull in the china shop, and that is the human being. As a species we seem to disregard our environment; as a species we have become autistic, and we are now rapidly consuming the earth’s resources like bacteria booming in a Petri dish, which die massively once the resources are exhausted. It is time we monitored ourselves and stepped out of this autistic trap of short-term self-satisfaction and overconsumption, which may herald a Malthusian crisis within a few decades if we don’t intervene.

Feedforward complexes, psychosis and OCD

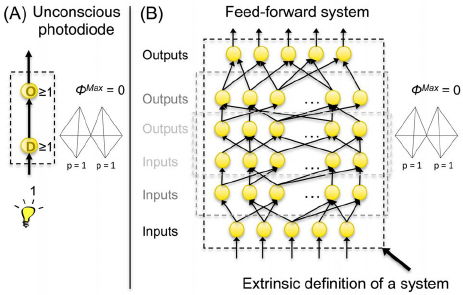

In the Integrated Information Theory (IIT) of Giulio Tononi, consciousness of a given piece of information is only established when that information is integrated, which necessarily involves a kind of feedback. On the other hand, Tononi describes feedforward complexes of information which functionally behave as if they were the result of conscious information integration but in reality are not.

Image from https://www.researchgate.net/figure/262191557_fig19_Figure-20-Feed-forward-''zombie''-systems-do-not-generate-consciousness-A-An

It is these feedforward mechanisms which, when they exist at a neurological level, could be the cause of various psychiatric disorders: hearing voices, being possessed by demons, unstoppable compulsive thought patterns (OCD), psychosis etc. In certain cases this might even be linked to the above-mentioned cliques, which seem to have taken on a life of their own.

These feedforward mechanisms are also what allows information in the form of so-called “memes” to spread and spawn throughout society and live a life of its own, behaving as if it were the result of conscious activity while in fact being merely an excited cog-wheel that cannot stop spinning. In this way many people behave to a certain extent as zombies. If such feedforward mechanisms were implemented in an artificial intelligence, they could lead to what is called a “p-zombie” or “philosophical zombie”: while it behaves in a seemingly conscious manner, no consciousness-related qualia are present at all.

In this framework I’d like to refer to Searle’s Chinese Room: a person who does not know Chinese sits in a closed room with a rule book for manipulating Chinese symbols, receives strings of Chinese characters and returns the strings that the rules prescribe as output. This person will not understand Chinese, whereas to an outsider the room as such appears to understand Chinese. This is used as a metaphor for mechanisms (such as feedforward complexes) that appear conscious but are not.

One of the core messages I’m trying to convey in this section is that full consciousness and free will necessarily require feedback, whereas hive-like and zombie behaviours are the result of feedforward mechanisms, which can be functionally indistinguishable from conscious activity. In other words, even if it walks and talks like a duck, it is not necessarily a duck. I’m not stating that human beings are full zombies either. Humans almost always have a certain degree of self-reflection. They may even believe that they choose their belief system consciously. But here is the snag: it may well be that the very activity of believing is a feedforward mechanism.

Again, for AI the solution lies in engineering a hierarchy of layers in which higher layers, where a certain level of information integration does take place, monitor (in a general but not detailed manner) what happens at a lower level. If lower-level activity is detected that yields no beneficial output for the system, the higher layer can shut the lower system down or at least reset it.
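Such a supervising layer can be sketched as a simple watchdog. The following Python fragment is only an illustration under assumptions of my own: the class names, the notion of "cycles" and the threshold of three fruitless cycles are hypothetical, and deciding what counts as useful output is left entirely to the caller.

```python
from dataclasses import dataclass


@dataclass
class LowerLayer:
    """Hypothetical lower-level routine observed by a supervising layer."""

    name: str
    fruitless_cycles: int = 0   # consecutive active cycles without useful output
    resets: int = 0

    def reset(self) -> None:
        self.fruitless_cycles = 0
        self.resets += 1


@dataclass
class Supervisor:
    """Higher layer that only looks at coarse activity and output signals."""

    max_fruitless_cycles: int = 3   # assumed tolerance before intervening

    def observe(self, layer: LowerLayer, active: bool, useful_output: bool) -> bool:
        """Record one cycle; return True if the lower layer was reset."""
        if active and not useful_output:
            layer.fruitless_cycles += 1
        else:
            layer.fruitless_cycles = 0
        if layer.fruitless_cycles >= self.max_fruitless_cycles:
            layer.reset()
            return True
        return False


loop = LowerLayer("compulsive-loop")
supervisor = Supervisor()
# Three active cycles in a row without useful output trigger a reset.
interventions = [supervisor.observe(loop, active=True, useful_output=False)
                 for _ in range(3)]
```

The supervisor does not inspect what the lower layer computes; it only counts cycles of activity without benefit, which matches the "general but not detailed" monitoring described above.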

Emotional responses and hysteria

The above-mentioned feedforward complexes, which reinforce themselves, tie in directly with emotional responses. Emotions as such are harmless indicators that a problem might be at stake and that there may not be time for proper analytical reflection, which results in the release of so-called fixed action patterns in the basal ganglia of the brain. The emotional response in our human interactions, however, is not always appropriate and often reinforces itself to a point of exaggeration, which we could call hysteria. In fact a hysterical reaction is a type of self-reinforcing feedforward mechanism that is not beneficial to the system involved.

For an artificially intelligent system, fixed action patterns in the form of mandatory routines for imminent danger only need to be present if the situation is life-threatening to the bot or to the human it is supposed to protect. It is here that Asimov’s Laws of Robotics may need implementation:

- 0th law: A robot may not injure humanity or, through inaction, allow humanity to come to harm.

- 1st law: A robot may not injure a human being or, through inaction, allow a human being to come to harm (except where this would conflict with the Zeroth Law).

- 2nd law: A robot must obey any orders given to it by human beings, except where such orders would conflict with the Zeroth or First Law.

- 3rd law: A robot must protect its own existence as long as such protection does not conflict with the Zeroth, First or Second Law.

I'd even like to add a 4th to 7th law to this set:

- 4th law: A robot must reduce the pain and suffering of human beings, as long as such reduction does not conflict with the Zeroth, First, Second or Third Law.

- 5th law: A robot must reduce its own pain and suffering, as long as such reduction does not conflict with the Zeroth, First, Second, Third or Fourth Law.

- 6th law: A robot must maximise human joy, as long as such optimisation does not conflict with the Zeroth to Fifth Law.

- 7th law: A robot must maximise its own joy, as long as such optimisation does not conflict with the Zeroth to Sixth Law.
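Because each law only applies as long as it does not conflict with the laws before it, the set forms a strict priority ordering. One way to sketch such an ordering is a lexicographic comparison: every candidate action gets one violation score per law, and the action with the smallest tuple wins. The Python fragment below is a hypothetical illustration only; the scores, the action names and the function `choose_action` are assumptions, and the hard part, estimating the scores, is not addressed at all.

```python
LAWS = ["0th", "1st", "2nd", "3rd", "4th", "5th", "6th", "7th"]


def choose_action(violations_by_action):
    """Pick the action whose violation tuple is lexicographically smallest.

    Each tuple holds one assumed violation score per law, highest-priority
    law first, so a violation of an earlier law always outweighs any number
    of violations of later laws.
    """
    for name, violations in violations_by_action.items():
        if len(violations) != len(LAWS):
            raise ValueError(f"{name}: expected {len(LAWS)} scores")
    # Python compares tuples element by element, i.e. lexicographically.
    return min(violations_by_action, key=violations_by_action.get)


candidates = {
    # Obeying would injure a human being: violates the 1st law.
    "obey harmful order": (0, 1, 0, 0, 0, 0, 0, 0),
    # Refusing disobeys an order: violates only the 2nd law.
    "refuse order": (0, 0, 1, 0, 0, 0, 0, 0),
    # Refusing and damaging itself violates the 2nd and 3rd laws.
    "refuse order and damage itself": (0, 0, 1, 1, 0, 0, 0, 0),
}

decision = choose_action(candidates)
```

Here the robot refuses the order: disobedience is the least serious violation available, and additionally damaging itself would only add a needless breach of the 3rd law.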

The detrimental emotional responses we humans have when our pride or our social position in the pecking order is compromised can largely be avoided in artificially intelligent systems by avoiding a sense of individuality and establishing a hierarchy. If we ensure that all AIs report to a higher level of AIs on which they depend, up to a central all-encompassing AI, we will interact with only a single AI in the form of a collective like the Borg. Without feelings of inferiority or superiority there is no need for hysteria, and if hysteria does occur via a feedforward mechanism, the solutions under the previous headings apply.

To avoid depression of the system, monitoring must take place at every level and goals must be updated if they turn out to be unrealistic. The system must be able to reset a heuristic if it is not successful enough, which requires operational monitoring. This brings us again to self-absorption: the system must be prevented from setting itself a task which totally absorbs it and does not allow it to function and interact properly with its environment. In the comic Storm, the living intelligent planet Pandarve has set itself the task of solving Fermat’s Last Theorem for intellectual entertainment, usurping the vast majority of its intellectual resources.

The big problem would arise if this were to happen at the highest hierarchical layer. The solution to that problem can only be found in what I call “Artificial Consciousness”, which will be the topic of the next essay, as it is too complex to treat here.

Distinguishing between true and false

I treated part of this issue a couple of days ago in a separate post: https://steemit.com/ai/@technovedanta/artificial-intelligence-vs-fake-news-an-update I will repeat verbatim what I said there and then connect it to artificial pathologies. Quote from my previous post:

A few days ago I read an article by Krnel about the difficulty that artificial intelligence has in discriminating between true and fake news. Dietrich Dörner, a German AI specialist, already spoke about the issue of telling real and fake apart in the 1990s, but now it has become a pressing problem. I found this really interesting article on the same topic: http://qz.com/843110/can-artificial-intelligence-solve-facebooks-fake-news-problem/

Text-analysing AI typically works with techniques such as latent semantic analysis: if words statistically occur within a limited proximity of each other, together they build meaning for the AI. This of course does not allow it to tell real from fake. If AI is one day able to tell these apart, it will be because it has sensors everywhere which register all real events and whose data can be used to verify the truth of an allegation in a newsflash. This internet of things (IoT) is rapidly developing, so that it is maybe only a matter of time before an omniscient AI such as in the film Eagle Eye or the series Person of Interest becomes a reality. And that machine will tell real from fake.

The problem of not being able to tell true from false, however, goes a bit deeper: there is plenty of information available which is of an abstract nature and represents truthful patterns of human reasoning. Such information does not necessarily have a direct physical counterpart in the real world, a so-called direct representation. Yet the system must learn how to tell such true information apart from fantasy. This can only be done by training the system, which requires input from human trainers to confirm whether the system has correctly assessed the situation. It would thus rapidly learn to ontologise notorious sites of fake information as probably untruthful and others as potentially untruthful. It would perhaps ultimately not be able to tell true from false with certainty, but in this way it could at least give good probabilities by applying Bayesian statistics. And perhaps ultimately it would even outperform us.
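How such a probability could be obtained with Bayes' rule can be shown with a small worked example. The Python sketch below is illustrative only: the function name and all three input probabilities are assumed numbers, which in a real system would have to be estimated from articles labelled by human trainers.

```python
def posterior_untruthful(prior_untruthful: float,
                         p_evidence_if_untruthful: float,
                         p_evidence_if_truthful: float) -> float:
    """Bayes' rule: P(untruthful | evidence) for a single piece of evidence."""
    numerator = p_evidence_if_untruthful * prior_untruthful
    evidence = numerator + p_evidence_if_truthful * (1.0 - prior_untruthful)
    if evidence == 0.0:
        raise ValueError("the evidence has zero probability under both hypotheses")
    return numerator / evidence


# Assumed numbers for illustration:
# - 20% of all articles the system sees are untruthful (prior),
# - 60% of untruthful articles come from a site flagged as notorious,
# - 5% of truthful articles come from such a site.
probability = posterior_untruthful(
    prior_untruthful=0.20,
    p_evidence_if_untruthful=0.60,
    p_evidence_if_truthful=0.05,
)
```

With these assumed figures, an article from a flagged site is untruthful with a probability of about 0.75 (0.12 divided by 0.16), up from the prior of 0.20. The system still cannot be certain, but it can rank sources by how probably untruthful they are.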

Related to the concept of fantasy and illusion, it is perhaps important to see what AIs come up with if they are allowed to creatively combine visual patterns they have learnt. The Google algorithm DeepDream, which functions as a reverse ontology that generates its own images, comes up with quite uncanny hallucinations, which can be unsettling to the human taste (see image below).

Bon appétit! Image from https://kver.wordpress.com/2015/07/08/google-deep-dream-ruins-food-forever/

Surely the AI needs to be trained to learn that such images do not correspond to anything in the outside world. The danger of far too developed pattern recognition skills is a phenomenon called “pareidolia”, in which people perceive non-existent or non-intended patterns in images and sounds. The famous Rorschach inkblot test is based on this tendency to attribute meaning where there is none. It asks subjects to tell what they see in an inkblot. Someone with a criminal inclination is then said to identify the image as something different than a non-criminal person would.

Image from https://en.wikipedia.org/wiki/Rorschach_test

Thus the ability of pattern recognition per se, if developed too far, can result in aberrant meaning attribution. This can again be prevented by hierarchical monitoring in combination with training of the system.

Routines such as DeepDream may have the potential to find really creative solutions to otherwise unsolvable problems, but these routines must be accessible only in a hierarchical manner as a willed subroutine, and not as a feedforward process that could undergo clique formation and lead to self-absorption in utter nonsense.

Conclusion

I hope you have enjoyed my overview of potential artificial intelligence mental pathologies. We have seen that artificial systems using neural networks can by their very nature be prone to self-absorption and autism spectrum pathologies. Feedforward self-reinforcing information complexes can result in psychotic type pathologies. Emotional hysterical responses can occur if routines are too directly linked to status indicators. Informational misinterpretation can occur as a consequence of latent semantic analysis.

The remedies to these problems have in common that they involve hierarchical monitoring linked to override protocols that can intervene if subroutines digress. If possible, a diversity of architectures (logical parsing routines, genetic algorithms and neural networks) should exist in parallel and be interconnected.

Prospects

I am convinced that the design of artificial intelligence will start to take such considerations into account and will hopefully build a hierarchical monitoring system with several layers, which can ultimately result in artificial consciousness, the topic of the next essay. Hopefully, the development of AI will also bring insights into the development of human mental pathologies. AI developers who specialise in engineering or remedying systems with an AI pathology could be called “PsychAItrists”.



Image source of the bacterial colony on top: https://en.wikipedia.org/wiki/Social_IQ_score_of_bacteria

wow....those images got to me a little. Another very interesting post....i'm still wrestling with the ideas.

Thanks Benjojo. I also found the images unsettling. But it is thought provoking.

Excellent post! Followed. :)

Have you read Thinking, Fast and Slow? I really enjoyed that one as I've begun my journey to figure out how our brains work. I also enjoyed The Master Algorithm and Bostrom's Superintelligence. What are some of your favorite books on AI / human brains?

Thanks. I know none of the books you recommend. My favourite AI books are The Emotion Machine by Minsky, Creating Internet Intelligence by Goertzel and also The Hidden Pattern by the same author, and Gödel, Escher, Bach by Hofstadter. I will have a look at the books you recommend; they sound interesting. Followed back.
