AI Trolley Dilemma

A common misunderstanding of artificial intelligence is that it has its own source of intelligence. AI is software whose behavior is governed by logic set out in code.

We program it and supply the data it analyzes via machine learning. From those inputs it makes deductions according to a prescribed set of logical rules, produces an output, and then acts on that output.

So, at the end of the day, we are responsible for the actions carried out by AI. Its code, after all, is a reflection of the way we think and reason.

But what happens when AI encounters an inconsistency or an inconclusive data input?

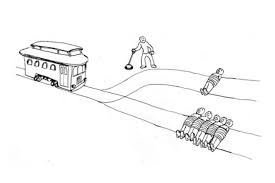

Let’s take a look at the age-old trolley dilemma, introduced by Philippa Foot in 1967 and commonly stated as follows:

“There is a runaway trolley barreling down the railway tracks. Ahead, on the tracks, there are five people tied up and unable to move. The trolley is headed straight for them. You are standing some distance off in the train yard, next to a lever. If you pull this lever, the trolley will switch to a different set of tracks. However, you notice that there is one person tied up on the side track. You have two options:

Do nothing, and the trolley kills five people on the main track.

Pull the lever, diverting the trolley onto the side track where it will kill one person.

Which is the most ethical choice?”

When ethical dilemmas like this arise, what are our obligations to write code that deals with them? And who must make these decisions: the programmers, the companies that build the AI, the government, or the voters?

All in all, it’s not necessary for an AI to understand us; it is a program, after all. Still, an AI trained on human emotion could provide some interesting insight into us. Can we solve the trolley dilemma once and for all?

The issue is that we can’t agree. Many similar dilemmas are already addressed by the legal system. As our technology, and specifically action-oriented AI, becomes more sophisticated, will the legal system rule on cases that determine how future code must handle ethical dilemmas such as this?

For example, as we integrate driverless vehicles onto roads shared with human-operated vehicles, the interaction between the two systems becomes complex. I can think of many situations where ethical decision making may arise (see short story to follow).

Often we criticize technology for its potential negative effect on us, but we must remember that it is we who create technology. What does our technology say about humanity?

What is your take on the trolley dilemma?