Is it a Higgs, Computer?

Using neural networks to detect elementary particles.

This article originally appeared on kasperfred.com

Whoa, what a ride it has been since December, when I wrote the "Post Mortem Analysis of my Final Year Project" and promised to follow up with a more in-depth analysis once the dust had settled.

But I never did write the follow-up, because I've continued working on the project since then. I ended up winning the physical science category of the Danish "Young Scientists" competition, and have therefore qualified for the European and world championships.

So that's going to be fun. I hope.

Overview

In the project, I analysed a dataset released in 2013 by the ATLAS team which contains simulated proton-proton collisions resulting in either a 125 GeV Higgs which would decay to two taus, two top quarks decaying to either a lepton or a tau, or a W boson decaying to either an electron or a muon and two taus.

The problem is then to create a machine learning model which can correctly identify which events contain a Higgs. This is a difficult problem for a couple of reasons. Firstly, it's impossible to detect the Higgs directly as it decays an order of magnitude faster than the other particles. In fact, with a mean lifetime of 1.56 * 10^(-22) s, the Higgs decays so quickly that you can't measure it in the particle accelerator; you can only measure the decay products, which look like the decay products of everything else.

Secondly, the Higgs boson is only present in one in a billion collisions, making the data very skewed.

This makes looking for the Higgs like looking for a needle in an exceptionally large haystack, but where the needle looks just like all the other hay.

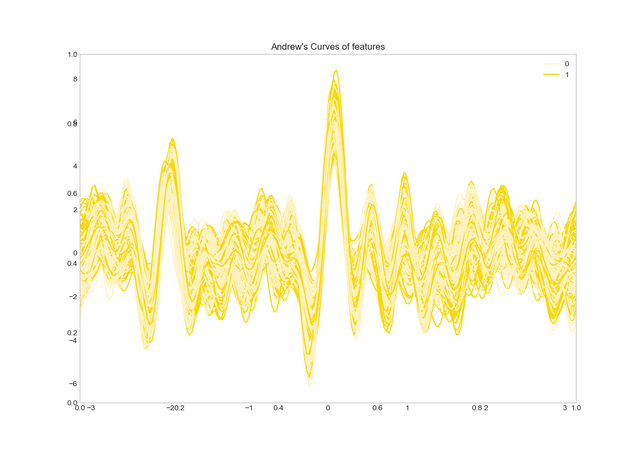

To illustrate this, we can look at an Andrews curves plot of the data.

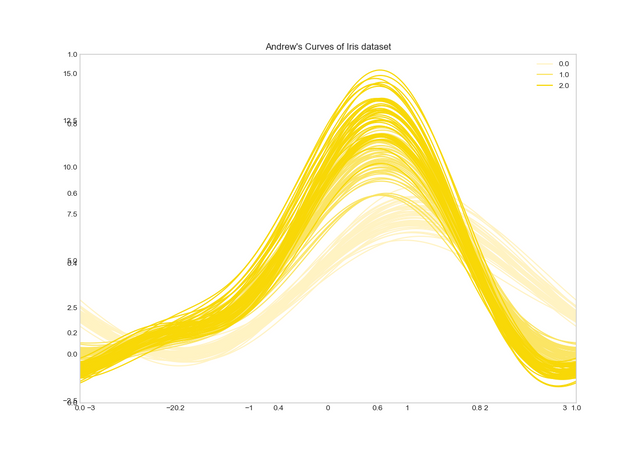

An Andrews curves plot works by turning each example into a finite Fourier series with one term per feature, using the feature values as coefficients, so that every example becomes a periodic curve on the plot. Once colored in accordance with the label, the graph serves as a projection of the high-dimensional data into two dimensions. How easy it is to separate the clusters of periodic curves indicates how difficult the problem is. Here we see that compared to the iris-dataset depicted below, guessing the Higgs is a somewhat difficult job.

In the project, I constructed a neural network that could accurately classify the existence of the Higgs boson, and I showed how the model could be generalized to also detect other elementary particles via transfer learning. Since only data from the source domain is available, I suggested a method of finding a transferable distribution using generative adversarial networks, embeddings, and some domain knowledge, even when only one distribution is available.
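To make the construction concrete, here is a minimal sketch of how one such curve can be computed. It uses the standard Andrews formula f(t) = x1/sqrt(2) + x2 sin(t) + x3 cos(t) + x4 sin(2t) + ...; the function names are my own for illustration, and this is not the plotting code used for the figures.

```python
import math

def andrews_curve(features, t):
    """Evaluate the Andrews curve of one example at angle t (radians)."""
    # The first feature contributes the constant term x1 / sqrt(2).
    value = features[0] / math.sqrt(2)
    for i, x in enumerate(features[1:], start=1):
        harmonic = (i + 1) // 2  # 1, 1, 2, 2, 3, 3, ...
        if i % 2 == 1:
            value += x * math.sin(harmonic * t)  # x2, x4, ... pair with sine
        else:
            value += x * math.cos(harmonic * t)  # x3, x5, ... pair with cosine
    return value

def sample_curve(features, n_points=100):
    """Sample the curve on an evenly spaced grid over [-pi, pi]."""
    step = 2 * math.pi / (n_points - 1)
    ts = [-math.pi + k * step for k in range(n_points)]
    return ts, [andrews_curve(features, t) for t in ts]
```

Plotting the sampled points for every example, colored by label, gives the kind of picture shown above.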

You can also test your skill against the model with the Guess A Higgs game.

Introduction

Understanding the ATLAS detector

When running, there are about 10-35 proton-proton collisions per bunch crossing in the LHC. The ATLAS detector is one of two general purpose detectors trying to measure the properties of the decay products. The detector is cylindrical, with multiple concentric layers, much like an onion, each detecting different properties of the decay particles.

The first layer tracks the paths of charged particles as they interact with electrons in the xenon gas, or the electrons of the silicon. (It's this layer the TrackML challenge is concerned with.) The layer is immersed in a strong magnetic field generated by a couple of strong magnets around the detector. By looking at how much the particles curve, we can figure out their momentum.

The next layer consists of a bunch of calorimeters that absorb the particles in order to measure their energy.

The last two layers measure different types of radiation (Cherenkov and transition radiation respectively), but were not used in the project.

From the measurements of the first two layers, we can, using some relativistic mechanics (coming soon), work out the momentum, energy, and mass of the charged particles.
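Until the full derivation is written up, here is a rough sketch of the two relations involved. It assumes natural units (c = 1), the textbook rule of thumb that a unit charge on a circle of radius r in a field B has transverse momentum of roughly 0.2998 * B * r GeV/c, and the energy-momentum relation m^2 = E^2 - |p|^2. The function names and the numbers in the usage lines are illustrative only and are not taken from the dataset.

```python
import math

def transverse_momentum(b_field_tesla, radius_m, charge=1):
    """Transverse momentum in GeV/c from the curvature radius of a track.

    Rule of thumb: p_T = 0.2998 * |q| * B * r, with B in tesla,
    r in metres and q in units of the elementary charge.
    """
    return 0.2998 * abs(charge) * b_field_tesla * radius_m

def invariant_mass(energy, px, py, pz):
    """Mass in GeV from m^2 = E^2 - |p|^2 (natural units, c = 1)."""
    m_squared = energy**2 - (px**2 + py**2 + pz**2)
    # Measurement noise can push m^2 slightly below zero, so clamp it.
    return math.sqrt(max(m_squared, 0.0))

def combined_mass(particles):
    """Invariant mass of a system of (E, px, py, pz) four-vectors."""
    energy = sum(p[0] for p in particles)
    px = sum(p[1] for p in particles)
    py = sum(p[2] for p in particles)
    pz = sum(p[3] for p in particles)
    return invariant_mass(energy, px, py, pz)

# Two back-to-back massless decay products with 62.5 GeV each (made-up event).
print(combined_mass([(62.5, 62.5, 0.0, 0.0), (62.5, -62.5, 0.0, 0.0)]))  # 125.0
```

The last line shows the idea behind reconstructing a parent particle: the individual products carry no trace of it, but their summed four-vector does.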

Preprocessing

90% of data science is getting the data in the right form

When first getting the data, a couple of things really stood out:

- There were a lot of missing values

- The value-ranges were all over the place

- The feature distributions were very skewed

- There was a lot of correlation between (some) features

I was able to correct all of these problems except the skewed feature distributions. I attempted to transform them back to binomial distributions, both with a log-transformation and by fitting a manifold over the data, but neither worked.

The rest of the problems I was able to fix with fairly standard techniques. One interesting thing, however, is that the PCA variant that most often worked best was the one where I'd specifically chosen the number of principal components to be equal to the number of mutually uncorrelated features in the dataset, as opposed to just the first k components that explain 85% of the variance.
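As a rough illustration of those standard techniques, the sketch below imputes missing values with the median, standardizes each feature, and counts how many features are not strongly correlated with one another. The `-999.0` sentinel, the 0.9 threshold, and the greedy counting rule are assumptions made for the example, not necessarily what I used in the project.

```python
import statistics

MISSING = -999.0  # hypothetical sentinel marking a missing value

def impute_median(column, missing=MISSING):
    """Replace missing entries with the median of the observed ones."""
    observed = [v for v in column if v != missing]
    median = statistics.median(observed)
    return [median if v == missing else v for v in column]

def standardize(column):
    """Rescale a column to zero mean and unit variance."""
    mean = statistics.mean(column)
    std = statistics.pstdev(column)
    if std == 0:
        return [0.0 for _ in column]
    return [(v - mean) / std for v in column]

def correlation(a, b):
    """Pearson correlation of two already standardized columns."""
    return sum(x * y for x, y in zip(a, b)) / len(a)

def count_uncorrelated(columns, threshold=0.9):
    """Greedily count columns not strongly correlated with any kept column."""
    kept = []
    for column in columns:
        if all(abs(correlation(column, other)) < threshold for other in kept):
            kept.append(column)
    return len(kept)
```

The count returned by `count_uncorrelated` could then be passed as the number of components to whichever PCA implementation is in use.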

Modelling

Several different networks were constructed with varying architectures. Since there's no notion of spatiality in the data, the architecture that was found to work best was a simple feed-forward network with about 6 hidden leaky ReLU layers of about 300 neurons each. The last layer is a sigmoid layer that maps the 300 features into a single value representing the probability of the Higgs having been there.

The network was trained using momentum-based stochastic gradient descent with the RMSProp optimizer, and employed both dropout and L2 regularization.
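For readers who prefer code, here is a minimal pure-Python sketch of the forward pass of such a network. The 30 input features, the leaky slope of 0.01, and the weight initialization are assumptions for the example; the real model was of course not written like this.

```python
import math
import random

def leaky_relu(x, slope=0.01):
    return x if x > 0 else slope * x

def sigmoid(x):
    # Numerically stable form for large negative inputs.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def init_layer(n_in, n_out, rng):
    """Random weights scaled by 1/sqrt(n_in), zero biases."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def build_network(n_features, n_hidden=300, depth=6, seed=0):
    """Stack `depth` hidden layers followed by one single-unit output layer."""
    rng = random.Random(seed)
    sizes = [n_features] + [n_hidden] * depth + [1]
    return [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]

def forward(network, features):
    """Return the predicted probability that the event contains a Higgs."""
    activations = features
    for index, (weights, biases) in enumerate(network):
        pre = [sum(w * a for w, a in zip(row, activations)) + b
               for row, b in zip(weights, biases)]
        is_output = index == len(network) - 1
        activations = [sigmoid(z) if is_output else leaky_relu(z) for z in pre]
    return activations[0]

network = build_network(n_features=30)        # 30 inputs is an assumption
probability = forward(network, [0.1] * 30)    # untrained, so not meaningful yet
```

The only structural choices that come from the project are the six hidden leaky ReLU layers of roughly 300 units and the single sigmoid output.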
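The update rule can be sketched roughly as follows. This is one common formulation of RMSProp with momentum, with an L2 penalty folded into the gradient and inverted dropout as a separate helper; the hyperparameter values are placeholders rather than the ones I tuned, and the toy objective is made up.

```python
import math
import random

def rmsprop_momentum_step(weights, grads, state, lr=0.001, decay=0.9,
                          momentum=0.9, l2=1e-4, eps=1e-8):
    """One in-place update of RMSProp with momentum and an L2 penalty."""
    for i, (w, g) in enumerate(zip(weights, grads)):
        g = g + l2 * w  # gradient of the penalty (l2 / 2) * w^2
        # Running average of squared gradients.
        state["sq"][i] = decay * state["sq"][i] + (1 - decay) * g * g
        # Momentum accumulates the normalized gradient steps.
        state["mom"][i] = (momentum * state["mom"][i]
                           + lr * g / math.sqrt(state["sq"][i] + eps))
        weights[i] = w - state["mom"][i]

def dropout(activations, keep_prob, rng):
    """Inverted dropout: zero units at random and rescale the survivors."""
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]

# Toy usage: minimise f(w) = sum((w - 3)^2), whose gradient is 2 * (w - 3).
weights = [0.0, 10.0]
state = {"sq": [0.0] * len(weights), "mom": [0.0] * len(weights)}
for _ in range(2000):
    grads = [2 * (w - 3.0) for w in weights]
    rmsprop_momentum_step(weights, grads, state, lr=0.01)
```

The toy loop should pull both weights towards 3, although with a constant learning rate they keep jittering around the minimum rather than settling exactly on it.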

If you want to learn more about neural networks, you should check out the Introduction to Neural Networks series.

Transfer Learning

While being able to classify the existence of one elementary particle is all fine and dandy, we really want to be able to classify the existence of any number of particles, and preferably without having to start from scratch each time.

We can achieve this using transfer learning. Transfer learning is when you reuse the learned weights from the early layers in one network in another network, so you only have to train the last few layers; basically copy + paste.

Transfer learning is basically copy + paste
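In code, the copy + paste could look something like the sketch below. The layer representation (a dict with a `trainable` flag), the helper names, and the tiny layer sizes are all made up for the illustration; a real framework has its own way of freezing layers.

```python
import copy
import random

def new_layer(n_in, n_out, rng):
    """A freshly initialised, trainable dense layer."""
    return {
        "weights": [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)],
        "biases": [0.0] * n_out,
        "trainable": True,
    }

def transfer(source_network, n_transferred, rng):
    """Build a target network that reuses the first n_transferred layers."""
    target = []
    for index, layer in enumerate(source_network):
        if index < n_transferred:
            reused = copy.deepcopy(layer)  # the "copy + paste" step
            reused["trainable"] = False    # frozen: the optimizer should skip it
            target.append(reused)
        else:
            n_out = len(layer["weights"])
            n_in = len(layer["weights"][0])
            target.append(new_layer(n_in, n_out, rng))  # trained from scratch
    return target

def trainable_layers(network):
    return [layer for layer in network if layer["trainable"]]

rng = random.Random(0)
sizes = [30, 16, 16, 16, 1]  # small stand-in sizes, chosen for the example
higgs_net = [new_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
other_net = transfer(higgs_net, n_transferred=3, rng=rng)
```

Only the layers returned by `trainable_layers(other_net)` would then be updated when training on the new particle.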

Of course, in order for this to be useful, the transferred neurons must encode the same distribution in both networks. Testing that the distributions are the same is usually not a problem in the domain where transfer learning was first popularized: image recognition, where it's easy to gather more images.

It's a bit more tricky here as we don't just have another corresponding dataset on which to test our assumption, so we need to get creative since there hasn't been much research on testing transfer learning with data from only one distribution.

Firstly, we can ask whether there's even anything to potentially transfer in the first place. This is quite easy to show, as we see that the network doesn't begin to overfit when we introduce more layers, even without adding regularization.

Secondly, we can look at our understanding of the physics behind the experiment, and ask ourselves whether it suggests that there's a transferable distribution between different elementary particles.

We can reason that as long as we are looking for exotic particles, almost all of the background will be identical between different particles, and must be encoded in the network somewhere.

But how can we be sure that a neural network can even encode the background distribution? To test this, we can train a variational autoencoder. When we do this we verify that the background distribution is indeed representable by a neural network.

However, this only increases the probability of transfer being constructive as opposed to destructive. What we really want is to know with absolute certainty that the transfer will be constructive, for example by only selectively transferring the encodings for the background distribution.

It turns out we can do exactly that using generative adversarial networks, specifically generative adversarial autoencoders, together with embeddings. We first train the GAN on the background distribution exclusively, but instead of feeding the generator a uniform noise vector, we feed it the training data.

Once the generator network is adept, we can detach the decoder part, and embed the compact representation of the background distribution into the primary classification network. If the embeddings help the classifier, we can conclude that they will also help classify other elementary particles, as the encoded distribution will be the same between them.
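One way to read "detach the decoder and embed the representation" is sketched below: split the autoencoder at its bottleneck, keep only the encoder, and append its output to the raw features before they enter the classifier. Concatenation is just one possible way of embedding the code, and the layer sizes, the tanh activation, and the random (untrained) weights are stand-ins for the example.

```python
import math
import random

def dense(weights, biases, inputs, activation):
    """Apply one fully connected layer."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def init_layer(n_in, n_out, rng):
    weights = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def split_autoencoder(autoencoder, code_index):
    """Return (encoder, decoder), split after the bottleneck layer."""
    return autoencoder[:code_index + 1], autoencoder[code_index + 1:]

def encode(encoder, features):
    """Map an event to its compact background representation."""
    out = features
    for weights, biases in encoder:
        out = dense(weights, biases, out, math.tanh)
    return out

def classifier_input(encoder, features):
    """Concatenate the raw features with the frozen background code."""
    return list(features) + encode(encoder, features)

rng = random.Random(0)
sizes = [30, 16, 4, 16, 30]  # assumed shape: 30 features, 4-dimensional code
autoencoder = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
encoder, decoder = split_autoencoder(autoencoder, code_index=1)
augmented = classifier_input(encoder, [0.1] * 30)  # 30 features + 4 code values
```

In this reading the encoder weights stay fixed while the classifier trains, so any improvement can be attributed to the background encoding itself.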

Results

The first of the two primary results from the project is that neural networks can be used to model the existence of the Higgs particle. I show that even a relatively simple neural network can, after 5 positive hypotheses, conclude whether or not there actually was a Higgs with an accuracy of 99.94%.

Furthermore, I show that there's at least some transferable information encoded in the network which can be located using the proposed selective transfer learning technique, but also that there's probably much more transferable information which can be confirmed once a corresponding dataset is collected.

You can read the full report in Danish, and in English (coming soon).

Future Work

I still consider this project a work in progress. Not only have I not yet optimized the networks to a satisfying extent, I also have a few ideas I want to test but haven't had the time to implement.

Before the European and world championships, there are a couple of areas I want to explore:

- Unsupervised learning to automatically detect which particles are present.

- TrackML: The newest dataset released by CERN focusing on raw measurements of the first layer.

- More tuning of hyperparameters.

Should a corresponding dataset become available, I would also love to test both transfer learning, and multi-task learning on that.

