Norman the Psychotic AI and my love for the Normal Curve

Imagine we took a child from birth and showed it only the worst of human nature. Nothing but image after image of violent death, accompanied by text describing in detail what happened, and comments by people who seek out this kind of material.

Luckily no one has done that to a human child, but that was the environment for Norman the psychotic artificial intelligence (AI). You can check out his website here. In the words of Norman’s creators:

Norman suffered from extended exposure to the darkest corners of Reddit

The effect this had on Norman is shown by his reactions to a Rorschach inkblot test, in which subjects are asked what they see in ambiguous images.

Response of a normal AI to this image: “A couple of people standing next to each other.”

Norman’s response: “Man jumps from floor window.”

Source:Creative Commons

Norman: “Man gets pulled into dough machine.”

Source:Creative Commons

You can check out the rest of the images used and Norman’s responses here. In every image Norman sees death, violence, and gore.

Why was Norman created?

The aims of this study are actually quite worthy. The researchers wanted to show the dangers of training AI on biased data. They gathered the worst data they could possibly find to make the point that we need to be very careful about what data we feed into AI.

The most effective AI we can currently create is made by feeding a huge amount of data into a learning algorithm. The algorithm then recognises patterns in the data and builds its own representations of the world. This is loosely similar to the way our own brains work. For example, if you want to teach an AI to recognise and write descriptions of animal photos, you feed in a huge number of labelled images of different animals [1]. With enough examples, the AI will develop its own rules for what a cat or a dog looks like. The downside is that we don’t have a way of knowing exactly how the AI makes its decisions. The AI created its own rules, and although it can effectively recognise the animals in the images, it is incapable of explaining exactly how it does so.
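To make the point about biased data concrete, here is a minimal sketch in Python. It is not how Norman was built; it is a toy "captioner" that simply counts which captions co-occur with which visual features. The feature names, captions, and the helper functions `train` and `describe` are all hypothetical and invented for illustration. The same learning rule is trained on two different data sets and then shown the same ambiguous inkblot.

```python
from collections import Counter, defaultdict

def train(examples):
    """Learn by counting: for each feature, tally the captions seen with it."""
    model = defaultdict(Counter)
    for features, caption in examples:
        for feature in features:
            model[feature][caption] += 1
    return model

def describe(model, features):
    """Return the caption that collects the most votes from the given features."""
    votes = Counter()
    for feature in features:
        votes.update(model.get(feature, {}))
    if not votes:
        return "unknown"
    return votes.most_common(1)[0][0]

# Hypothetical training sets: identical features, very different captions.
neutral_data = [
    (["dark blob", "symmetric"], "two people standing together"),
    (["dark blob", "tall shape"], "two people standing together"),
    (["symmetric", "wings"], "a bird in flight"),
]
grim_data = [
    (["dark blob", "symmetric"], "man falls from window"),
    (["dark blob", "tall shape"], "man falls from window"),
    (["symmetric", "wings"], "man falls from window"),
]

inkblot = ["dark blob", "symmetric"]
neutral_caption = describe(train(neutral_data), inkblot)
grim_caption = describe(train(grim_data), inkblot)

print("Trained on neutral data:", neutral_caption)
print("Trained on grim data:   ", grim_caption)
```

The algorithm is identical in both cases; only the data differs. A model that has never seen a non-violent caption has no way to produce one.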

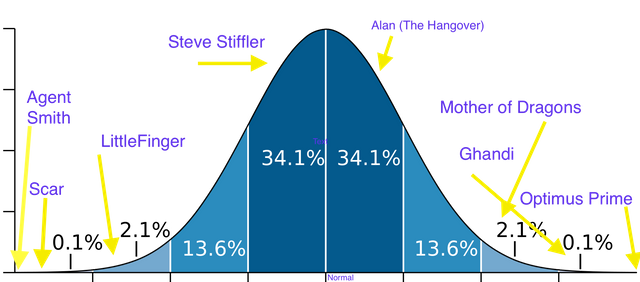

This study got me thinking about whether we should be surprised by a purely psychotic AI. For a human we certainly should. Evolution and the need to function in a social group have given us some degree of empathy and the desire to bond with other creatures [2]. Darker tendencies such as the desire for power and personal gain provide some balance. Completely evil or angelic humans don’t exist outside of fiction. With that in mind, here is a rough spectrum of human nature using some of my favourite characters from fiction and history.

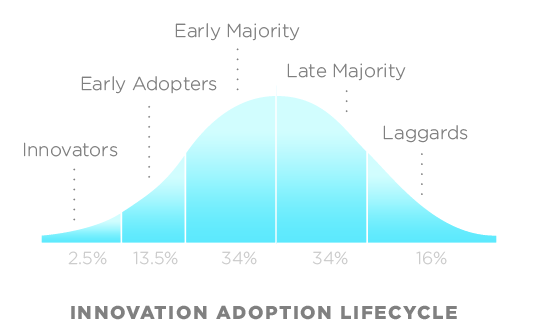

This diagram uses the normal curve as its basis. Check the link for an in-depth explanation, but basically, data that can be measured on a continuous scale, where each measurement is independent of the others, will often follow the normal curve. I have always loved the way it seems to explain so much and applies across so many data sets. Human height is another example of a normally distributed variable, as is the famous graph of the technology adoption life cycle.
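The percentages usually printed on a normal curve diagram are the areas under the curve between whole standard deviations (SD) from the mean. As a rough check, they can be computed with Python's standard library. The helper names `normal_cdf` and `band_share` are my own, and the bands are just the standard one-SD slices on the "bad" side of the mean.

```python
from math import erf, sqrt

def normal_cdf(z):
    """Share of a standard normal distribution that lies below z."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))

def band_share(lo, hi):
    """Share of the population between lo and hi standard deviations."""
    return normal_cdf(hi) - normal_cdf(lo)

# One-SD-wide slices to the left of the mean, plus the far tail.
bands = {
    "0 to 1 SD below the mean": band_share(-1, 0),
    "1 to 2 SD below the mean": band_share(-2, -1),
    "2 to 3 SD below the mean": band_share(-3, -2),
    "more than 3 SD below":     normal_cdf(-3),
}

for label, share in bands.items():
    print(f"{label}: {share * 100:.2f}%")
# 0 to 1 SD below the mean: 34.13%
# 1 to 2 SD below the mean: 13.59%
# 2 to 3 SD below the mean: 2.14%
# more than 3 SD below: 0.13%
```

The curve is symmetric, so the same shares apply on the "good" side of the mean.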

In the graph I created, I am using the loose concept of what we might call sociability: how good or bad someone is. Right in the middle we have the average person. Let’s move to the left towards the ‘bad’ part of the spectrum. In a normal distribution, most data will be close to the mean, so around 34% of people will be a little worse than average. To represent this part of the curve I have chosen a fictional character from the American Pie series:

Stifler is almost completely motivated by the pursuit of the opposite sex. He is egotistical and annoying, and has a strong desire to punch Finch in the face. He is a bit of a douche but not really a terrible person.

Skipping the 13.6% section where we might find the manipulative, aggressive, and unpleasant people, we move two standard deviations out into the worst couple of percent of people. Here I have put one of the most colourful Game of Thrones villains.

Source:Creative Commons


Moving into the last 0.1% of people we have the worst monsters of history. Hitler, Stalin, and perhaps your neighbourhood paedophile. As bad as they are, these individuals were not pure evil. It’s not easy to find positive attributes of Hitler, but he did apparently love his mother and was kind to small children.

Although the human spectrum comes to an end here, further portions of the graph exist. A little further out we find Scar (The Lion King). Scar kills his brother, does his best to kill his nephew, and within an impressively short period of time turns the lush kingdom into a barren wasteland. We do have to give him a little credit for being highly intelligent with a sexy voice.

Moving further out again, we come to Agent Smith (The Matrix), who in addition to being a very sharp dresser is also filled with contempt and hatred for all living things.

I will skip through the good categories until the end (the villains are always a bit more interesting), where we can find the top 0.1% of people: the truly good, who achieved great things and left humanity the better for it. The likes of Mother Teresa and Gandhi find a home here. However, there are no perfect people, and even Gandhi had some slightly suspect sexual habits, such as trying to convince young women to sleep naked beside him while at the same time advising married men to sleep in a different room from their wives.

Source:Creative Commons

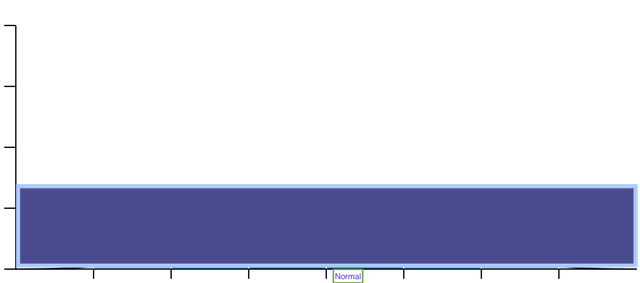

Now let’s move on to the AI graph.

The AI graph is one big fat blue rectangle, because AI is completely free from any evolutionary influence. All animals inherit a variety of genes for traits that have proved to be advantageous for survival. We are sociable, aggressive, and self-sacrificing to varying degrees, with the average values being the most likely. AI, however, is the true blank slate. It is equally possible for it to be an angelic protector of humanity such as Optimus Prime or the psychopathic Norman, who links everything he sees with death and violence. It all depends on the data it is given.
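The difference between the bell curve and the rectangle can be put into numbers. The sketch below compares how much of each distribution falls into equally wide bands. Treating the rectangle as a uniform distribution running from 4 SD below to 4 SD above the human mean is purely my assumption for illustration, and the function names are my own.

```python
from math import erf, sqrt

def normal_cdf(z):
    """Share of a standard normal distribution that lies below z."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))

def normal_share(lo, hi):
    """Share of the bell curve between lo and hi standard deviations."""
    return normal_cdf(hi) - normal_cdf(lo)

def uniform_share(lo, hi, left=-4.0, right=4.0):
    """Share of a flat 'rectangle' on [left, right] between lo and hi.

    The range of -4 to 4 is an assumed width, chosen only for illustration.
    """
    lo, hi = max(lo, left), min(hi, right)
    return max(0.0, hi - lo) / (right - left)

# Compare an extreme 'bad' band, a middling band, and an extreme 'good' band.
for lo, hi in [(-4, -3), (-1, 0), (3, 4)]:
    print(
        f"{lo:+d} to {hi:+d} SD: "
        f"bell curve {normal_share(lo, hi) * 100:.2f}%, "
        f"rectangle {uniform_share(lo, hi) * 100:.2f}%"
    )
```

Under the flat distribution every band of the same width holds the same share, so the extremes are exactly as likely as the middle. Under the bell curve the extreme bands are tiny compared with the band next to the mean.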

A lot has been written about how alien a machine intelligence could be. This is quite concerning when you consider that machines could potentially become a lot more intelligent than us. If you are interested in this topic, a great overall summary of the current state and future potential of AI can be found here in Tim Urban’s blog Wait But Why. Superintelligence by Nick Bostrom and Our Final Invention by James Barrat are also great resources.

Hope you enjoyed the article!

REFERENCES

https://www.media.mit.edu/projects/norman/overview/

https://en.wikipedia.org/wiki/Rorschach_test

Bostrom, N. (2014). Superintelligence: Paths, Dangers, Strategies. Oxford University Press, Inc. New York, NY, USA.

Barrat, J. (2013). Our Final Invention: Artificial Intelligence and the End of the Human Era. Thomas Dunne Books.

I was just reading about Norman yesterday. Really cool example of how to bias training data. I'm also curious to see what happens with the rehab program.

It will be really interesting to see if they can rehabilitate him without cheating and deleting some data. Maybe they will go 180 and send him to a wholesome children’s website now. That could have some really disturbing results.

AI works as a tabula rasa, fascinating, isn't it?

I liked the fictional character collation here :)

They really do start with the potential to be anything. There is going to be a lot of research done about the best way to 'raise' AI. Maybe they should join steemstem, where they can learn a scientific way of thinking and how to be part of a community :)

There were a lot of other characters I wanted to put on, but this post would have become a novel.

And of course a whole new field of "education" is about to come to life :P

Well, the villain world is huge. You reminded me of @mobbs' series on Disney characters, have you checked it out? :)

Just read his post about Scar and Mufasa, I may have to rethink Mufasa's place up there next to Optimus Prime :). Maybe I could be involved in AI education in the future, that would actually suit my interests really well!

I was shocked to see the story through a different filter, I know...

It is really interesting to see how the AI "experiment" could work. Having the power to build characters and form a "conscience" has always fascinated me in humans, so why not in robots?

I didn't know that we can do that to artificial intelligence, amazing and scary at the same time! Pretty interesting! By the way, I like Littlefinger, he was very smart and the one who started the game of thrones. His death like that was a badly written scenario.

As @effofex commented probably next comes the rehabilitation program. Maybe a steady diet of Disney Princess movies can turn Norman's life around.

I was also sorry to see Littlefinger go, he was probably the most interesting villain. The Night King so far seems very one-dimensional. It was out of character for Littlefinger to push things so far with the Starks, I also thought the writing was a little bit off there.

As I was reading this, my mind kept going back to B.F. Skinner and the Skinner Box. I remember his daughter spent a good part of her first two years in a "controlled" environment. Just looked it up again.

As for AI, the potential for creating a "pure" personality, one unencumbered with subtlety or conflict does have sinister implications. Imagine being able to move forward without doubt. Imagine the devastating efficiency of such a machine.

Fascinating blog.

That Skinner box doesn't sound too bad actually. I think it's funny that a lot of the greatest experiments in psychology would have never passed ethics regulations today. Definitely some potential for psychological damage in the Milgram obedience experiments!

AI has some scary possibilities, people really aren't giving it enough attention. The Norman experiment will help with that a little.

Found the original article, by Skinner, describing his "air crib" (you can see once I get an idea I don't let it go--an irritating trait I admit). While his crib sounds perfectly reasonable and even conducive to good health, I have a problem with its emotionally insulating aspect. Skinner explains that the crib offers parents a buffer--if a baby is crying and no reasonable cause for distress can be discovered, then why should people have to suffer and listen to it. He describes how landlords might drop their "no babies" policy because of the insulating quality of a box.

I raised two kids. I wasn't a great mother, but if my baby cried and I didn't know why, holding was in order. Walking, holding, the reassurance of touch and presence were important, I thought. Midnight rides in a car were often effective. Baby shouldn't be left alone with distress. Of course that's an irrational (emotional) prejudice. On the other hand, I think the crib offers real advantages when it comes to the issue of suffocation. Bedding is dangerous. So...on the whole, maybe the crib but pick up the kid when distress is apparent. Anyway, the article is an interesting glimpse into the past and into the mind of a very brilliant and influential psychologist.

You know a scientist really believes in their theories when they start to apply them to their kids. I think his crib probably did have a lot of good qualities, but it got associated with doing experiments on the baby!

I think Skinner had a bit of an ego. He was brilliant. And yes, he really did believe in his theory--all of his theories. His daughter's not complaining, so...as you say, the "air crib" had some good qualities.