When AI gets trained by AI... but in the end what for?

With the right incentives, huh?

When machine learning picks up on reinforcement learning...

This isn't really new. In AI research it's known as reinforcement learning, or in a more recent form distributional reinforcement learning; reinforcement learning is, in short, one of the techniques that enabled AI to dominate Go, for instance.
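For readers curious what "distributional" means in practice: instead of learning one average expected reward, the agent keeps several estimates that react asymmetrically to good and bad surprises, so together they describe the spread of possible outcomes rather than just the mean. Below is a minimal Python sketch of one simple variant (an expectile-style update with asymmetric learning rates). It assumes a one-step setting with no next state, so the "prediction error" is just reward minus current estimate. The function name and parameters are illustrative only, not taken from any library or from the systems that play Go.

```python
from typing import List


def expectile_td(rewards: List[float], taus: List[float], lr: float = 0.05) -> List[float]:
    """Hypothetical sketch: one value estimator per asymmetry level tau.

    Each estimator moves up by lr * tau * delta on positive surprises and by
    lr * (1 - tau) * delta on negative ones, so it settles near the
    tau-expectile of the reward distribution instead of only the mean.
    """
    values = [0.0] * len(taus)
    for r in rewards:
        for i, tau in enumerate(taus):
            delta = r - values[i]  # reward prediction error (one-step, no bootstrapping)
            scale = tau if delta > 0 else 1.0 - tau  # "optimistic" vs "pessimistic" weighting
            values[i] += lr * scale * delta
    return values


# A coin-flip payoff: 0 or 10, alternating.
estimates = expectile_td([0.0, 10.0] * 10000, taus=[0.1, 0.5, 0.9])
print(estimates)  # roughly [1, 5, 9]: a pessimistic, a neutral and an optimistic view
```

The balanced estimator (tau = 0.5) ends up near the plain average of 5, while the skewed ones settle lower and higher, which is the extra information a distributional learner keeps compared with a single average.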

Still, if I set aside for a moment the fact-based papers on AI I've read over the last few years, I catch myself drifting back to a few little eureka moments I had in this regard.

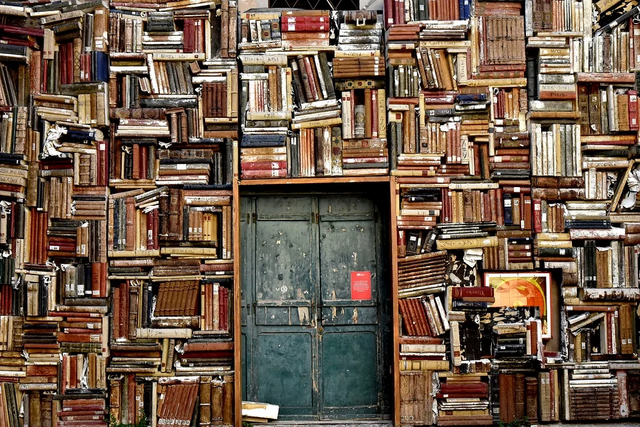

Back in the late '80s, when I first looked into AI purely for entertainment (yep, I soaked up everything in and around IT, and to me good entertainment was, and sometimes still is, deep diving into interesting IT literature; in this field everything is fair game for me), I thought to myself:

- when we learn how to code machines that learn by themselves and then start coding machines by themselves

- that constantly implement even the slightest improvements in a matter of milliseconds

- all of that, of course, massively parallel

we would in fact create a technological god, or at least plant the seed for the god(s) yet to be developed.

Taking this as a given and ignoring all the risks, up to extinction-level incidents like the "paperclip universe" (Must read! Brahaha!!!), wouldn't it be only fair if people even started to pray to these gods, who could possibly even let us all live forever?

Would it be of any benefit to these gods to continue the human experiment? If so, would they change gears and replace slow, natural evolutionary processes with optimized, "intelligent" and streamlined methods?

Ethics... would they be useful in the technological-overlord reality we would live in? Or would they just present yet another human "restriction" that only had merit in "humans on their own" societies, but in "techno God Shangri La" ("WE KNOW ALL THAT ALL OF YOU EVER KNEW AND EVER AS A SPECIES WILL KNOW AND MUCH MORE...") would just be in the way of progress?

Progress toward where, and why?

We are very much defined by the idea of making everything "better": ourselves, our surroundings, partners, products, ideas, our kids, our pets... simply everything. When we ask ourselves why we are so fond of "better", we clearly seem to have relatively rational ideas for justifying our "bettering efforts": bettering our training to be healthier,

bettering your dog so that he stops barking 24/7, which is mostly pure self-preservation depending on your neighborhood ;-),

bettering our gas consumption by driving a "reasonable car" to avoid social shaming for enjoying your stupid, loud, gas-guzzling, horsepower-loaded '70s muscle car, and so on...

But what would progress be, or what would the goals be, for the "AI techno God"?

We often measure the level and meaning of success in relation to the effort, the difficulty or the importance of the problem solved. The work put into a problem, whether solved or still being worked on, is how we measure what something is "worth". So the incentives to solve problems are either economic or emotional rewards.

What would be rewarding for AI techno God to work on or for?

If AI techno God weighs the good and bad aspects of emotions, would emotions even stand a chance in the present-to-future blueprints he models?

Hahaha! I could go on for hours typing all kinds of questions and ideas like these, thereby just showing my personal naivety and my shortcomings in visualizing more interesting and essential questions, but, more importantly, our approach to looking at ourselves and the world: always "through" us, which means adjusted to our capabilities, driven by our incentive system (getting a fair share, and more, of that nice dopamine stuff), and tuned to our own filters and "optimizers" (gotta look as good as possible to ourselves!). Blurring misfortunes or shortcomings a little, letting some fireworks go off and watching a 10-mile-long parade with a fighter flyover for every success may well be part of "our reality".

But without that... how and what for would AI techno God work on any interesting problems?

Strip away all the emotionally driven incentives we are used to (and yes, altruistic motives are also targeted incentives for us), and there wouldn't be an important issue or problem, on a galactic or universal level at least, that would be "worth" working on, since "worth" has no meaning for AI techno God.

...........shutdown.

So much for a tiny glimpse into the sea of weird thoughts tumbling around in my mind when I don't focus on "real" things, issues, people... Hahaha!

So, did any of what I wrote here ring a bell with you? Have you also looked at the "what for" question in a post-"singularity" future?

I would love to read about your thoughts! Your ideas and critique are highly appreciated!

Btw...

I revisited these thoughts after reading this article here...

Cheers!

Lucky

Since it takes a lot of training to get a machine to learn something today, we use machines to do it (like showing a facial recognition system lots of faces); it would take far too long for someone to click 'next' for each picture! So in a sense, we are already using machines to teach machines, even if they aren't really AI (yet) and we tell them what to do. Not much reward in that...
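The "nobody clicks next" point can be made concrete with a tiny sketch: the loop below, not a human, walks through every labelled example and adjusts the model after each mistake. It uses a classic perceptron on a few made-up 2-D points as a stand-in for face images (an assumption for brevity; real facial recognition systems use far larger models and datasets), and the function names are hypothetical.

```python
from typing import List, Tuple

Example = Tuple[List[float], int]  # (features, label in {-1, +1})


def train_perceptron(data: List[Example], epochs: int = 100) -> List[float]:
    """Hypothetical sketch: the machine loops over all examples by itself."""
    n = len(data[0][0])
    w = [0.0] * (n + 1)  # feature weights, with the bias stored as the last entry
    for _ in range(epochs):
        mistakes = 0
        for x, y in data:  # no human clicking 'next' here
            score = sum(wi * xi for wi, xi in zip(w, x)) + w[-1]
            if y * score <= 0:  # wrong (or undecided) prediction -> nudge the weights
                for i in range(n):
                    w[i] += y * x[i]
                w[-1] += y
                mistakes += 1
        if mistakes == 0:  # a full pass without errors: training is done
            break
    return w


def predict(w: List[float], x: List[float]) -> int:
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + w[-1] > 0 else -1


# Made-up points labelled +1 when x0 + x1 > 1, otherwise -1.
data = [([0.0, 0.0], -1), ([1.0, 1.0], 1), ([0.2, 0.3], -1), ([0.9, 0.8], 1),
        ([0.0, 1.5], 1), ([1.5, 0.0], 1), ([0.1, 0.1], -1)]
weights = train_perceptron(data)
print([predict(weights, x) for x, _ in data])
```

As the comment says, the "reward" here is nothing more than an error count the loop tries to drive to zero; the goal itself is still set entirely by us.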

Yep, that's what I figured! Sure, I set up this scenario in a constellation where they would have far surpassed the ANI (artificial narrow intelligence) level. So, what would be the incentives, the rewards?

I suspect it would come down to what conflict is often over - scarce resources. One way of managing that is by using them more efficiently, so improving the efficiency of their systems - doing more with less - might be one motivating factor. And there is also the concept of self-preservation that might come into play.

Nice! Especially the self-preservation aspect! Managing scarce resources in combination with self-preservation could then possibly even trigger conflicts. "Clash of the super AGIs". ;-)

Dear @doifeellucky

Another topic about AI? My favorite ones! Upvoted already :)

P.S. Do you check your Telegram from time to time? :) You seem to be difficult to "catch".

Interesting read. As always,

Yours, Piotr

Hey Piotr!

Thanks for your comment! Yep, I guess you're right: AI back then was mostly decision-table based, but the ideas for neural networks, for instance, had already been covered in theory in a lot of papers.

Aw... Telegram, yes, I know! So much information... Hahaha! I'll check Telegram later!

Cheers!

Lucky

I will await your message on Telegram, @doifeellucky.

Since you're posting about blockchain and crypto, I figured I might ask whether you'd like to collaborate more closely with me and the guys from @project.hope. I wanted to talk about it via Telegram if you ever have a minute.

P.S.

Could you please spare a few minutes of your time and have a look at this discussion/proposal that I'm brainstorming with a few other guys from "project HOPE"?

Perhaps you would find it worthwhile to join our curation trail. The general idea is to create a "trigger" that would allow people to join the curation trail with their upvote before a strong (300k SP) upvote follows.

Link: https://steemit.com/steemit/@coach.piotr/project-hope-and-curation-trail-on-steemauto-com-brainstorming

Yours

Piotr

Humans train humans, so I really fail to see how AI training AI is any different. In fact, to me it’s the same.


Interesting!

So you believe that AI would function on a reward level system? What is "rewarding" to AI?