Popular neural network types

Multilayer Perceptron

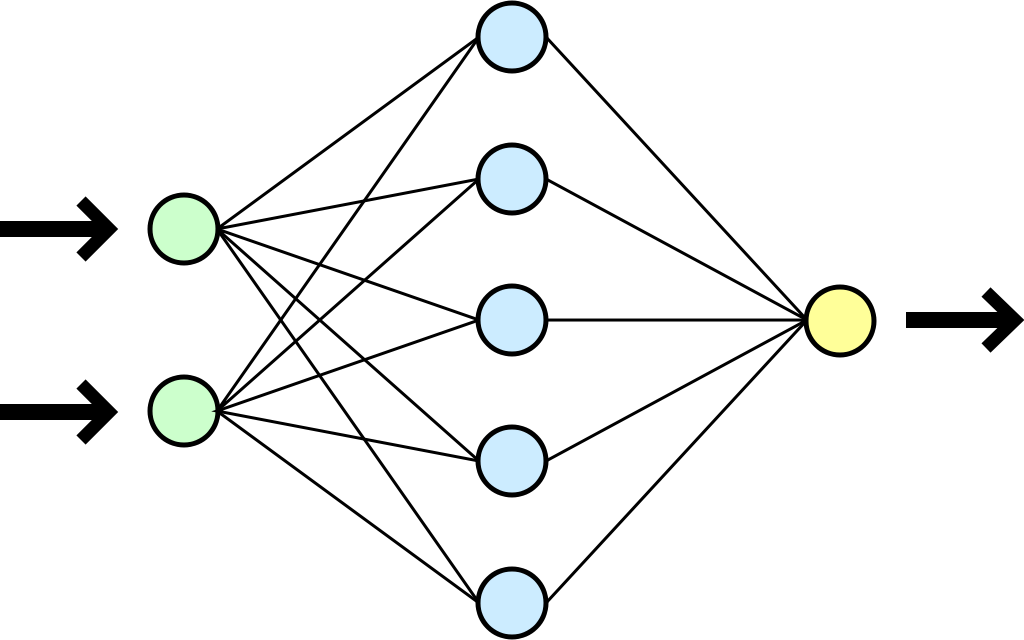

This is one of the most widely used types of neural network. It consists of an input layer, one or more hidden layers and an output layer. The structure of such a network is as follows:

Here we have just one hidden layer (blue), which is the simplest form of a multilayer perceptron. (Strictly speaking, the term "single-layer perceptron", or simply "perceptron", refers to a network with no hidden layer at all.) The green color indicates the input layer and the yellow indicates the output layer. But what exactly happens in this type of network? A single training step consists of:

- placing data in the input layer

- calculation of each layer's output from the previous layer

- calculation of an error at the output

- updating the network weights by back-propagation (= changing the weights so that the network's output is closer to the expected result).
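The four steps above can be sketched in plain Python. This is a minimal illustration, not a reference implementation: it assumes one hidden layer, a sigmoid activation, a squared-error measure and plain gradient descent, none of which are fixed by the description above. The names `make_net`, `layer_output` and `train_step` are made up for this example.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_output(inputs, weights, biases):
    # Each neuron: activation(weighted sum of its inputs + bias).
    return [sigmoid(sum(w * v for w, v in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def make_net(n_in, n_hidden, n_out, seed=0):
    # Small random weights, zero biases (an arbitrary choice for the sketch).
    rng = random.Random(seed)

    def rand_matrix(rows, cols):
        return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

    return {"W1": rand_matrix(n_hidden, n_in), "b1": [0.0] * n_hidden,
            "W2": rand_matrix(n_out, n_hidden), "b2": [0.0] * n_out}


def train_step(x, target, net, lr=0.5):
    # Steps 1-2: place data in the input layer, compute each layer from the previous one.
    hidden = layer_output(x, net["W1"], net["b1"])
    output = layer_output(hidden, net["W2"], net["b2"])

    # Step 3: error at the output (squared error is an assumption).
    error = 0.5 * sum((o - t) ** 2 for o, t in zip(output, target))

    # Step 4: back-propagation. Deltas are computed before any weight changes.
    delta_out = [(o - t) * o * (1 - o) for o, t in zip(output, target)]
    delta_hid = [h * (1 - h) * sum(d * net["W2"][k][j] for k, d in enumerate(delta_out))
                 for j, h in enumerate(hidden)]

    for k, d in enumerate(delta_out):
        for j, h in enumerate(hidden):
            net["W2"][k][j] -= lr * d * h
        net["b2"][k] -= lr * d
    for j, d in enumerate(delta_hid):
        for i, v in enumerate(x):
            net["W1"][j][i] -= lr * d * v
        net["b1"][j] -= lr * d
    return error


net = make_net(n_in=2, n_hidden=3, n_out=1)
errors = [train_step([1.0, 0.0], [1.0], net) for _ in range(200)]
print(errors[0], errors[-1])  # the error should shrink as the step is repeated
```

Repeating the step on the same example is only meant to show the error going down; real training loops over many examples.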

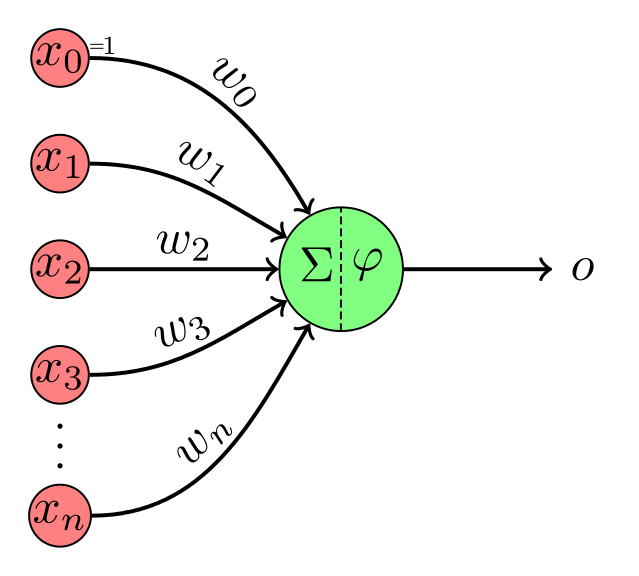

If we look from the perspective of a single neuron, it looks like this:

The layer output is simply the collection of the outputs of its individual neurons. For each neuron, the value is calculated as the dot product of the input vector x and the weight vector w, plus the bias b. The activation function is then applied to this value, and the result is passed on as the output of the neuron (this output becomes an input of each connected neuron in the next layer).
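As a small worked example, the computation for one neuron could look like this. The sigmoid is used as the activation function here purely as an assumption; the text above does not name a specific one.

```python
import math


def neuron_output(x, w, b):
    # Weighted sum of the inputs plus the bias ...
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    # ... passed through the activation function (sigmoid, assumed).
    return 1.0 / (1.0 + math.exp(-z))


x = [1.0, 2.0]      # input vector
w = [0.5, -0.25]    # weight vector
b = 0.0             # bias

# z = 0.5*1.0 + (-0.25)*2.0 + 0.0 = 0.0, and sigmoid(0.0) = 0.5
print(neuron_output(x, w, b))  # 0.5
```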

Such networks are usually used for classification problems (i.e. assigning an input to a particular group). For image classification, another type of neural network is usually used: the convolutional neural network.

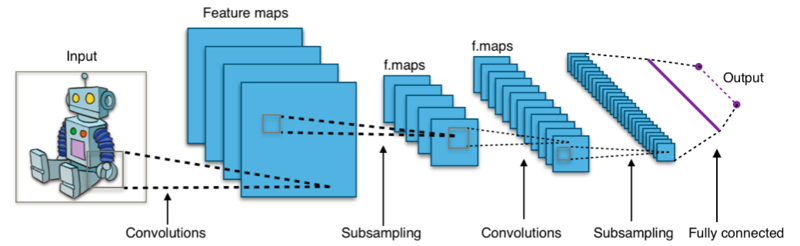

Convolutional neural network

This is a network type designed mainly for image classification. It exists because using a standard, fully connected network for high-resolution images makes the network too large and the calculations very slow. For example, for a 1000x1000 pixel image the input layer would have 3,000,000 neurons (counting the 3 components of an RGB image). Therefore, the network does not process the whole image at once, but one selected fragment at a time (e.g. 32x32). As a result, the size of the whole network is significantly smaller (= acceptable).
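The size difference can be checked with a bit of arithmetic. The sketch below counts the inputs for the two cases mentioned above and, as an added assumption, the weights needed to connect them to a hypothetical fully connected first layer of 100 neurons.

```python
def dense_layer_weights(n_inputs, n_neurons):
    # Every neuron has one weight per input, plus one bias.
    return n_inputs * n_neurons + n_neurons


full_image_inputs = 1000 * 1000 * 3   # whole RGB image
fragment_inputs = 32 * 32 * 3         # one 32x32 RGB fragment
first_layer_neurons = 100             # hypothetical layer size

print(full_image_inputs, dense_layer_weights(full_image_inputs, first_layer_neurons))
# 3000000 300000100
print(fragment_inputs, dense_layer_weights(fragment_inputs, first_layer_neurons))
# 3072 307300
```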

The structure looks like this:

Well, but why does it work? When detecting a face, it may look as follows:

- the first layer detects edges

- the second layer detects simple geometric shapes from the edges

- the third layer detects more complex figures from the geometric shapes

- the fourth layer detects elements such as an eye, an ear and a nose

- the fifth layer detects combinations of the above

- the sixth layer (the last one) detects the entire face from the output of the previous layer.

Of course, this is an illustrative example. In the case of large nets it is usually difficult to determine exactly what happens in which layer.
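To make the "first layer detects edges" idea more concrete, here is a sketch of a small filter sliding over an image. The filter values are hand-picked for the example; in a trained network they are learned. The operation shown is the sliding-window sum that deep learning libraries usually call a convolution (technically a cross-correlation), with no padding and a step of one pixel.

```python
def convolve2d(image, kernel):
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    # For every position, multiply the image fragment by the kernel and sum up.
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


# Dark left half (0), bright right half (1): one vertical edge in the middle.
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-picked vertical-edge filter (an assumption, not a learned one).
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

for row in convolve2d(image, vertical_edge):
    print(row)
# [0, 3, 3, 0]
# [0, 3, 3, 0]
```

The output is non-zero only where the filter window covers the transition from dark to bright, which is the sense in which such a filter "detects" an edge.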

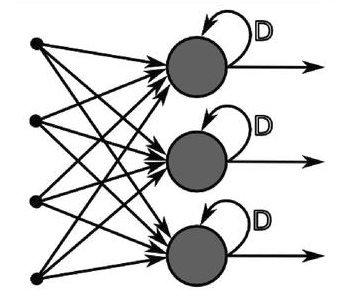

Recurrent neural network

These are neural networks where the network output is transferred as an additional input for the next record. It can be pictured like this:

In the first two types of network this was not the case: data only moved in one direction (hence they are often referred to as feed-forward neural networks). The main application is Natural Language Processing, where the order of words in a sequence matters. Thanks to this recurrent connection, the network also takes into account data from previous steps.
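The feedback connection can be sketched with a single neuron whose previous output is fed back in alongside the next input. The tanh activation and the weight values are assumptions chosen for the example, and the function names are hypothetical.

```python
import math


def recurrent_step(x_t, h_prev, w_x, w_h, b):
    # The new output depends on the current input AND the previous output.
    return math.tanh(w_x * x_t + w_h * h_prev + b)


def run_sequence(sequence, w_x=0.5, w_h=0.8, b=0.0):
    h = 0.0            # nothing has been seen before the first record
    outputs = []
    for x_t in sequence:
        h = recurrent_step(x_t, h, w_x, w_h, b)
        outputs.append(h)
    return outputs


# Both sequences end with the same input (0.0), yet the final outputs differ,
# because the first sequence still "carries" the earlier 1.0.
print(run_sequence([1.0, 0.0])[-1])
print(run_sequence([0.0, 0.0])[-1])
```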

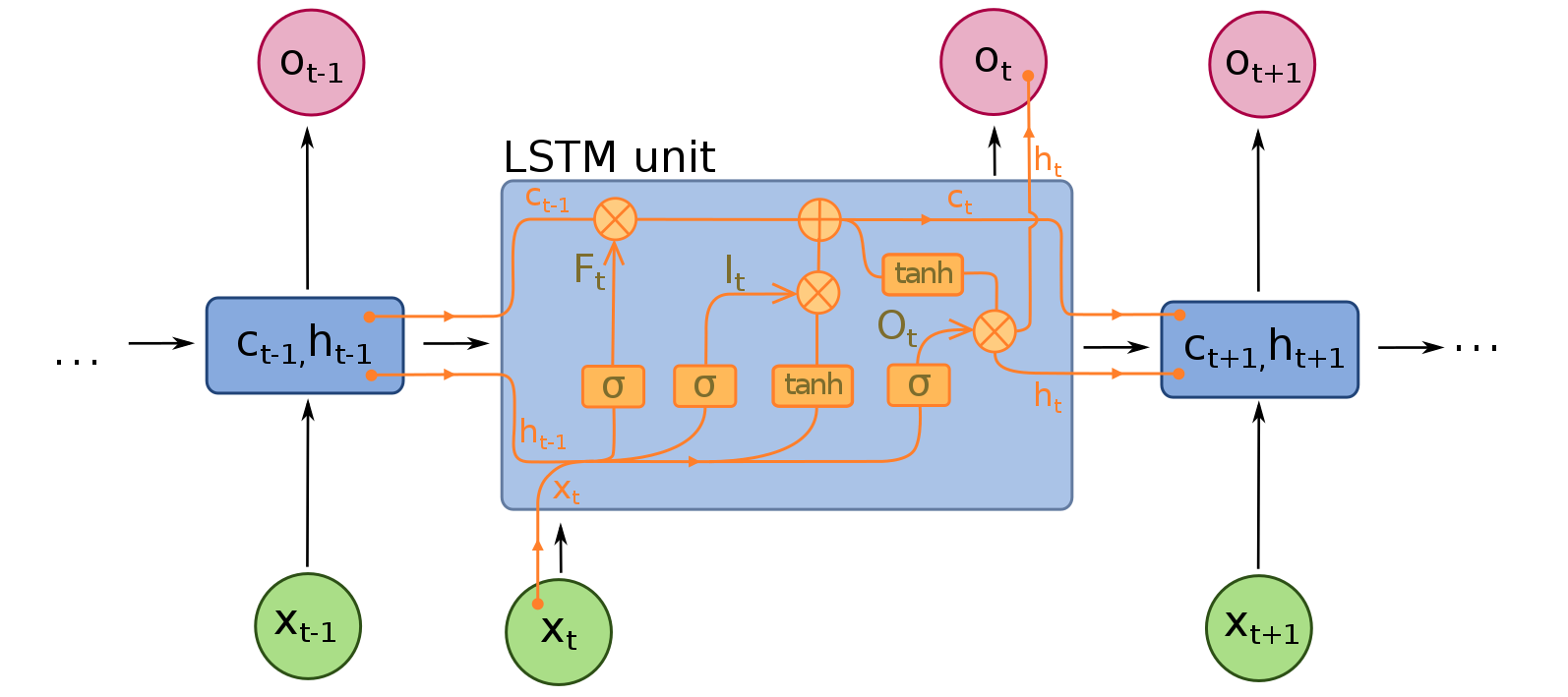

LSTM

LSTM is an abbreviation for Long Short-Term Memory. It is a subtype of recurrent networks and, as the name suggests, it is a network with memory. It is used when the network has to take into account information from many previous steps (i.e. "remember what happened before") and an ordinary recurrent network is insufficient. The diagram looks as follows:

They are used, for example, in machine translation, image captioning (describing images with words) and predicting changes in graphs.
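As a rough sketch of where the "memory" comes from, here is one LSTM step with scalar values, following the commonly used formulation with a forget gate, an input gate, an output gate and a separate cell state. The parameter layout (a dictionary of `(w_x, w_h, b)` tuples) and all names are made up for this example; real implementations work on vectors and matrices.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gate(x_t, h_prev, params, squash):
    w_x, w_h, b = params
    return squash(w_x * x_t + w_h * h_prev + b)


def lstm_step(x_t, h_prev, c_prev, p):
    f = gate(x_t, h_prev, p["forget"], sigmoid)       # how much old memory to keep
    i = gate(x_t, h_prev, p["input"], sigmoid)        # how much new information to store
    o = gate(x_t, h_prev, p["output"], sigmoid)       # how much memory to expose
    g = gate(x_t, h_prev, p["candidate"], math.tanh)  # the new information itself
    c = f * c_prev + i * g                            # updated cell state (the memory)
    h = o * math.tanh(c)                              # output of this step
    return h, c


# Hypothetical parameters: forget gate almost fully open, input gate almost closed,
# so the cell state should survive many steps nearly unchanged.
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (0.0, 0.0, 0.0),
}

h, c = 0.0, 1.0
for _ in range(50):
    h, c = lstm_step(0.0, h, c, params)
print(c)  # stays close to the initial 1.0
```

In a trained network the gate parameters are learned, so the network itself decides what to keep and what to forget.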

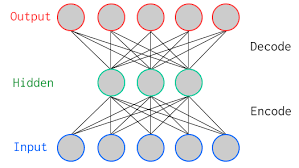

Autoencoder

This is a neural network that learns data representations, usually for compression purposes. The network tries to remember the most important characteristics of the input data so that it can reproduce the input as well as possible. The structure of such a network is relatively simple. As we can see, the number of hidden neurons is smaller than the number of input/output neurons.
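A toy version of this idea: two inputs are squeezed into a single hidden value and then expanded back into two outputs. The sketch assumes a purely linear network without biases, squared reconstruction error and plain gradient descent; the data, starting weights and learning rate are arbitrary choices for the illustration.

```python
def encode(x, enc):
    # 2 inputs -> 1 hidden value (the compressed representation).
    return enc[0] * x[0] + enc[1] * x[1]


def decode(code, dec):
    # 1 hidden value -> 2 outputs (the reconstruction).
    return [dec[0] * code, dec[1] * code]


def reconstruction_loss(data, enc, dec):
    total = 0.0
    for x in data:
        recon = decode(encode(x, enc), dec)
        total += sum((r - xi) ** 2 for r, xi in zip(recon, x))
    return total


def train(data, enc, dec, lr=0.02, epochs=500):
    for _ in range(epochs):
        for x in data:
            code = encode(x, enc)
            recon = decode(code, dec)
            err = [r - xi for r, xi in zip(recon, x)]
            # Gradient w.r.t. the hidden value, taken before the decoder changes.
            grad_code = 2 * (err[0] * dec[0] + err[1] * dec[1])
            for k in range(2):
                dec[k] -= lr * 2 * err[k] * code
                enc[k] -= lr * grad_code * x[k]
    return enc, dec


# Points on the line y = 2x: two numbers per point, but only one degree of freedom.
data = [[t, 2 * t] for t in (-1.0, -0.5, 0.5, 1.0)]
enc, dec = [0.1, 0.1], [0.1, 0.1]

loss_before = reconstruction_loss(data, enc, dec)
train(data, enc, dec)
loss_after = reconstruction_loss(data, enc, dec)
print(loss_before, loss_after)  # the reconstruction error should drop
```

Because every point here is fully described by one number, a single hidden neuron is enough to reproduce both inputs; with real data some information is usually lost.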

Very nice introduction.

Can you please elaborate on how an LSTM works? Looking at the schema gives me a rough idea, but I'm not sure if it's correct.

I am going to prepare a separate post for each of these networks soon. So far here is the best description I know: http://colah.github.io/posts/2015-08-Understanding-LSTMs/

Good stuff!! Come check us out we love AI as well!! :)