Invisible risks of artificial intelligence

What changes will artificial intelligence systems bring to our way of life? It is a difficult question: on the one hand, they are making interesting progress in carrying out complex tasks, with significant improvements in areas such as energy consumption, audio file processing and leukaemia diagnosis, as well as extraordinary potential to do much more in the future. On the other hand, they play an increasing role in making problematic decisions with significant social, cultural and economic impacts in our daily lives.

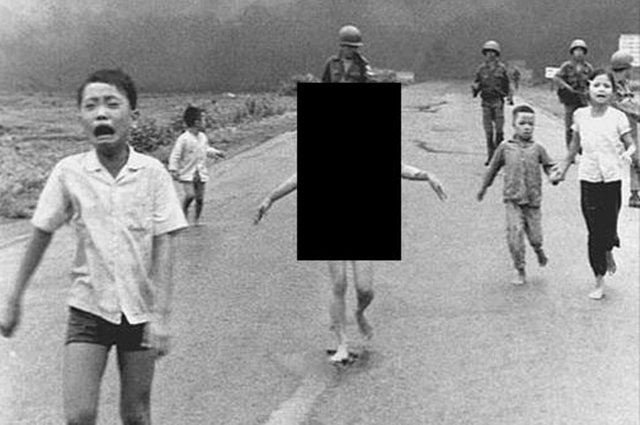

Artificial intelligence and decision support systems are embedded in a wide variety of social institutions and influence choices of all kinds, from the release of detainees from prison to the main news of the day. A recent example of the impact of automated systems on content selection is Facebook's censorship of the famous Pulitzer Prize-winning photo portraying a child fleeing from napalm bombs during the Vietnam War. The child is naked; to an image-filtering algorithm, the photo may seem like a simple violation of the rules on nudity and photos of minors. But to human eyes, Nick Ut's photograph "The Terror of War" has a completely different meaning: it is a symbol of the indiscriminate horror of war and has earned itself a place of honour in the history of photography and international politics. The removal of the photo caused international protests, forcing Facebook to backtrack and restore the image. The Norwegian Prime Minister Erna Solberg also intervened in the case, saying: "What Facebook does in removing images of this kind, however good its intentions may be, is to censor our common history".

It is easy to forget a crucial fact: these high-profile cases are actually the easy ones. As Tarleton Gillespie observed in the Wall Street Journal, Facebook reviews hundreds of images a thousand times a day, and it is rare for it to be faced with a Pulitzer Prize-winning photo of obvious historical importance. Sometimes teams of human moderators take part in evaluating the content to be filtered; at other times the task is entrusted entirely to algorithms. In the case of the censored photo, there is also considerable ambiguity about the boundary between automated processes and human intervention, and this too is part of the problem. Facebook is just one of the actors in a complex ecosystem of algorithm-supported evaluations, with no real external monitoring to verify how decisions were made or what their effects might be.

The case of the iconic photo of the Vietnam War is therefore only the tip of an iceberg: a rare visible example beneath which a much larger mass of automated and semi-automated decisions is concealed. The worrying fact is that many of these systems of "weak artificial intelligence" are involved in choices that never attract that kind of attention, because they are integrated into the back-end of computer systems and operate on calculations across multiple data sets, without any interface aimed at the end user. Their operations remain largely unknown and invisible, and their impact can be seen only with enormous effort.

Artificial intelligence techniques sometimes deliver accurate results; at other times they are wrong. When they are wrong, it is rare for the errors to emerge and become visible to the public - as they did in the case of the censored photo of the Vietnamese girl, or of the "beauty contest" judged by algorithms and accused of racism for selecting almost exclusively white winners. We could treat this last example as a simple problem of insufficient data: it would have been enough to train the algorithm on a less partial and more diverse selection of faces, and in fact, now that 600,000 people have sent their selfies to the system, it surely has more data available to do so. A beauty contest with a robot jury may seem like a trivial initiative in bad taste, or an excellent contrivance to entice the public to send in their photos and expand the available data set, but it is indicative of a series of much more serious problems. Artificial intelligence and decision support systems are increasingly entering our daily lives: they determine who will end up on predictive policing "heat lists", who will be hired or promoted, and which students will be admitted to university; they even try to predict from birth who will commit a crime before the age of 18. The stakes are therefore very high.