Programming Diary #35: Steem's fundamental challenges

Summary

This post describes my programming activity during the last two weeks. Nearly all of my activity focused on enhancements to Thoth, a curation bot that's intended to create new incentives for authors to create content with lasting value. Updates included new screening capabilities and improved formatting of the curation post that it produces. The post also discusses my plans for the application in the future.

Additionally, the post contains reflections on the two challenges that I believe are fundamental to the Steem ecosystem: improving the quality of the top-ranked posts; and delivering rewards to authors after the 7-day payout window.

Finally, the post makes the argument that the best forms of Steem curation will combine information that's provided by humans and also by automatons.

Background

As anticipated in Programming Diary #34, when I found time for coding in the last two weeks, my focus was almost entirely on Thoth. This is because I think that Thoth (or the idea behind it) has the potential to be the most impactful of any of my projects, since it targets what are, in my opinion, two of Steem's most fundamental challenges. These are:

- The trending page sucks. This is the page that visitors see when they first arrive, and it is definitely a case of Steem not putting its best foot forward; and

- Posts stop paying rewards after seven days, which destroys the incentive for an author to create something of lasting value.

Enter @etainclub and eversteem, back in December, and there is now a conceptual solution to problem #2. Namely, beneficiary rewards on active posts can be redirected to deserving authors of posts that already paid out. Almost by accident, Thoth now has the potential to extend that solution in a way that I believe may also lead to improvement on the first challenge.

I'll talk more about this in the "Reflections" section, and also about the importance of automated curation, but for now let's move on to the activities and plans from the last two weeks.

Activity Descriptions

Here were my goals from the previous diary post:

In the next two weeks, I expect to continue focusing on improving the pre-AI screening by implementing some of the other constraints that are specified in my config.ini file. I'll also be thinking about how to deal with the apparent limit on beneficiaries.

Thoth updates

Functional Changes

As expected, I implemented some more screening. Specifically, I added the ability to filter by language and process multiple languages, instead of just English. I also added a tag blacklist.
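To make the idea concrete, here is a minimal sketch of a language filter combined with a tag blacklist. It is not Thoth's actual code: the `Post` class, the `passes_screening` function, and the example settings are all hypothetical names and values chosen for illustration, and the sketch assumes that each post's language has already been detected upstream.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    """Hypothetical, simplified view of a post (not Thoth's real data model)."""
    author: str
    title: str
    language: str  # assumed to be a detected language code such as "en"
    tags: list[str] = field(default_factory=list)


def passes_screening(post: Post, allowed_languages: set[str],
                     tag_blacklist: set[str]) -> bool:
    """Return True if the post is in an allowed language and has no blacklisted tag."""
    if post.language.lower() not in allowed_languages:
        return False
    # Compare tags case-insensitively so "NSFW" and "nsfw" are treated alike.
    post_tags = {tag.lower() for tag in post.tags}
    return post_tags.isdisjoint(tag_blacklist)


# Example settings (assumed values, not taken from the real config.ini).
ALLOWED_LANGUAGES = {"en", "es", "ko"}
TAG_BLACKLIST = {"nsfw", "test"}

candidates = [
    Post("alice", "A history of sundials", "en", ["history", "science"]),
    Post("bob", "Prueba", "es", ["Test"]),
    Post("carol", "Un essai", "fr", ["philosophy"]),
]

accepted = [p.title for p in candidates
            if passes_screening(p, ALLOWED_LANGUAGES, TAG_BLACKLIST)]
print(accepted)
```

In this sketch the second post is rejected by the tag blacklist and the third by the language filter, so only the first one would go on to the LLM.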

Additionally, I made some changes to the prompt that goes to the LLM.

Finally, I improved the post format to make it more attractive, and to let the reader see all of the titles near the beginning so that they can decide which LLM responses they want to view without scrolling through the whole article.

I also created the @thoth.test account, which I'll be using in conjunction with the publicly available @social for future testing. I don't have a detailed strategy picked out, but generally I think that @thoth.test will be mostly looking at the article screening characteristics whereas @social will be about changes to the underlying functionality.

Screening observations

You can see the current post format, here. In this post, along with some of the typical content filters, I also restricted the authors to those who are 1.) Inactive on Hive for at least 4 years; and 2.) Active on Steem within the last 30 days. With those restrictions in place, it ran for more than 24 hours in clock time and spanned 7 or 8 days of blockchain time before it was able to find 5 posts (during July, 2016).
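The two author restrictions above can be expressed as a simple date comparison. The following is only a sketch under stated assumptions: the function name `author_is_eligible` and its parameters are hypothetical, the last-activity timestamps are assumed to have been looked up elsewhere, and an account with no Hive activity at all is assumed to count as inactive on Hive.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def author_is_eligible(
    last_steem_activity: Optional[datetime],
    last_hive_activity: Optional[datetime],
    now: datetime,
    max_steem_idle: timedelta = timedelta(days=30),
    min_hive_idle: timedelta = timedelta(days=4 * 365),
) -> bool:
    """Active on Steem within max_steem_idle AND inactive on Hive for min_hive_idle."""
    # No Steem activity on record, or too long ago: the author is not active here.
    if last_steem_activity is None or now - last_steem_activity > max_steem_idle:
        return False
    # Assumption: no Hive activity on record counts as inactive on Hive.
    if last_hive_activity is None:
        return True
    return now - last_hive_activity >= min_hive_idle


# Arbitrary fixed reference time so that the example is reproducible.
now = datetime(2025, 1, 1, tzinfo=timezone.utc)

recent = now - timedelta(days=10)
print(author_is_eligible(recent, now - timedelta(days=5 * 365), now))   # long gone from Hive
print(author_is_eligible(recent, now - timedelta(days=365), now))       # on Hive a year ago
print(author_is_eligible(now - timedelta(days=90), None, now))          # idle on Steem
```

Keeping the two thresholds as parameters makes it easy to experiment with different activity windows.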

About the cap on beneficiaries

I found this issue from 2018 confirming my previous observation. Apparently, there is a "soft limit" of 8 beneficiaries and a "hard limit" of 127.

The soft limit is a pretty big hurdle to overcome if Thoth is going to succeed as a gen-5 voting service. It could be overcome by adding individual replies to the post for each of the included authors, but that starts to feel kludgy, and it starts to look spammy. (Though it would still be less spammy than the current bots that run around commenting on every post that they vote for.)
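For illustration, here is a rough sketch of how a list of authors might be split so that no single post or reply carries more than 8 beneficiaries. The function names are hypothetical and this is not how Thoth currently works. It also assumes that beneficiary weights are expressed in basis points (10000 = 100%) and that the list should be sorted by account name; both assumptions should be checked against the chain's documentation.

```python
SOFT_LIMIT = 8  # the "soft limit" on beneficiaries mentioned above


def batch_authors(authors: list[str], batch_size: int = SOFT_LIMIT) -> list[list[str]]:
    """Split authors into consecutive groups of at most batch_size accounts."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [authors[i:i + batch_size] for i in range(0, len(authors), batch_size)]


def equal_weights(accounts: list[str], total_weight: int) -> list[dict]:
    """Divide total_weight (assumed basis points) evenly among the accounts.

    Integer division is used, so a small remainder may be left unassigned.
    """
    if not accounts:
        return []
    share = total_weight // len(accounts)
    # Assumption: beneficiaries are listed in alphabetical order by account name.
    return [{"account": name, "weight": share} for name in sorted(accounts)]


# Eleven made-up authors: the first 8 could go on the top-level post,
# and the remaining 3 on a reply.
authors = [f"author{i:02d}" for i in range(1, 12)]
batches = batch_authors(authors)

for batch in batches:
    print(len(batch), equal_weights(batch, 4000)[0])
```

With 11 authors and 4000 basis points per batch, the first batch gets 8 beneficiaries at 500 each and the second gets 3 at 1333 each, which also shows how uneven batches lead to uneven per-author shares.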

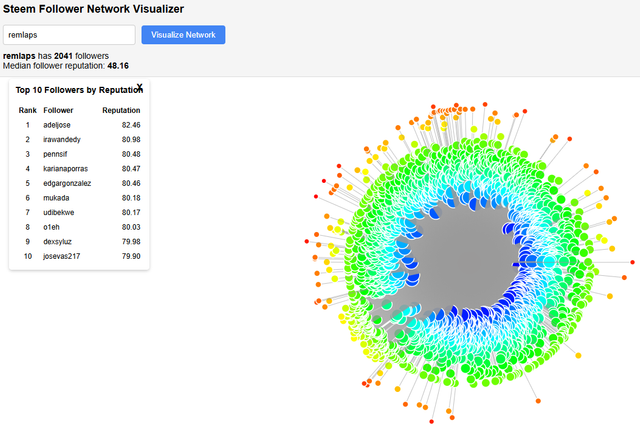

Steem Follower Visualizations: An unexpected diversion

Tuesday, on an impulse, I used Claude to slap together a browser extension that creates visualizations of an account's follower network. Amazingly, I didn't read a single line of code. I simply copied and pasted according to Claude's instructions.

I suppose I'll put this up on my GitHub site, but anyone can make their own in an hour or two. I'm not sure if it's really worth saving it.

Next up

In the short term, I don't see any change in direction coming. I plan to continue working on additional screening capabilities in Thoth and probably also revising the post contents.

In the long term, if Thoth ever gets to the point where it can support the expense, I'll add API screening for plagiarism and AI authorship, and better (non-free) LLMs. Eventually (as suggested by @the-gorilla), I'd like to also add reinforcement learning, so it can train on its own posts and results in order to learn more about what human curators are looking for.

Also, I invite anyone who wants to participate to please do so. Thoth is intended for the Bazaar, not for the Cathedral😉.

On screening

Two of the parameters that I'm playing with are Steem activity time and Hive activity time. Both of these are tricky.

If we're delivering rewards to inactive Steem authors, in the worst case it's equivalent to burning the rewards. It might have potential to reactivate that author, or the rewards might just leave the ecosystem. If we're only delivering rewards to active Steem authors, that places a huge limitation on the amount of historical content that can be discovered.

Similarly, there are some Hive participants who also add real value here on Steem, and we'd like to share historical rewards with them. However, there are also many Hive participants who are actively hostile to Steem, and we don't want to deliver rewards to those accounts. Also, the Hive activity screening is easy to beat in the future. Users would just need to use different account names on the two platforms, so the value of this capability (if any) is limited.

For both of these points, I don't have any real idea for how to find the right balance, so I anticipate a lot of experimentation.

Reflections

Steem's two fundamental challenges

In today's ecosystem, the most successful "authors" are clearly the author-investors who collaborate with the voting services to collect daily rewards without respect to post quality. They operate in a closed ecosystem where rewards are distributed on a 7-day timeline in exchange for payments or loans.

Under its current design, this system requires these pseudo-authors to post daily, in order to get their share of the reward, and the size of the rewards means that they dominate the trending page.

The major drawback here is that the investors are devaluing their own stake (and everyone else's) in a Tragedy of the Commons sort of race to the bottom. I'm not making any moral judgments here. It's just the way that the incentives are aligned.

By extending the reward window past 7 days (to forever), the method that was reintroduced by @etainclub has the potential to rearrange those incentives. If an author knows that a post might keep getting rewards for the next five years, the incentive to beat the 7-day clock goes away, and it suddenly starts making sense for authors to focus on producing content that delivers long-term value. Yes, there will always be people seeking short-term rewards, but the incentive changes should reduce the trend, and I think by a lot.

Imagining a future where posts earn rewards for life

Even in testing, @thoth.test has already started delivering small amounts of rewards to authors of posts from years ago. Imagine what happens if that continues and grows every day.

- An inactive author comes back to Steem just out of curiosity, and discovers that they have rewards waiting for them from a post that they wrote in 2018. What do they do? Well, they probably tell other authors from the time period to go check their own wallets for some "free STEEM". And maybe they also decide to try writing again.

- Let's say there's an active author who came to Steem and posted good quality articles for a time period, but gave up when they saw lower quality content outperforming - so, they decided, "If you can't beat 'em, join 'em." Now, however, they get a payout from a 2019 article that they wrote before they gave up. If they start to believe that lasting value will be rewarded, maybe they decide to go back to their original writing style.

- As authors start experiencing the benefits of permanent reward payouts, the tragedy of the commons comes to an end. Investors don't need to chase the 7-day fix. Instead, they can delegate to the bot operators that find the best content, support the best authors, and strengthen their stake instead of diluting it.

Maybe Thoth and eversteem are the vehicles to usher in these changes, maybe not, but I think that some implementation of the perpetual reward idea is the key to optimizing Steem's singular capability - its ability to deliver blockchain rewards in exchange for social media activity. And I think that harnessing that capability is the key to growing STEEM's value.

The best curation involves humans and automatons

From Steem's earliest days, there has been a commonly held idea that only humans can do a good job curating Steem's content because machines can't understand written language. I have disagreed with that since the beginning, and the (relatively) recent emergence of LLMs has only strengthened my opinion. The trick is finding the right mix of human and automaton.

Steem curation is just a ranking system.

If post A earns more rewards than post B and post B earns more rewards than post C, then we should be able to conclude that there is some sense where A is "better" than B and C.

What was the breakthrough that made Google into a tech powerhouse? PageRank. Google takes human input - in the form of links - and uses it to develop an automated ranking system. Yes, it's less than perfect, but does anyone think it would be better (or even possible) if they had an office full of workers reading each page manually and assigning a score? I certainly don't. I think machine ranking with human input is optimal for Google.

In chess, computers can now beat the best humans, but humans coupled with machines can beat the computers. My own writing is better with a spell-checker and with advice from an LLM. So, why should Steem ranking (curation) be any different? I argue that it's not.

Some tasks are necessary, but humans don't do them well

Abuse detection

Many Steem curators are already using automation for things like plagiarism checks, AI checks, and looking for "content farms". But, those tasks are tedious, and they don't scale when a human must be involved in every single check.

In the future, I hope to put checks like that into Thoth so that human curators can review its output with the knowledge that it's recommending posts that probably weren't plagiarized or created by LLMs. It will never be perfect, but I think it will be more reliable than most human voters (myself included). Also, when combined with rewarding of historical posts, Thoth will have the advantage that it's using state of the art AI detection against AI posts that were produced using obsolete technologies. Thoth abuse-detection will get better as time passes, but the "abusive" content is locked in time.

A surprise about languages

As I worked to incorporate multiple languages into Thoth, one of the things I noticed is that it can automatically give me an English-language summary of an article in a language that I don't know (Example: Post #5, here). In turn, this can help me with the decision of whether I click through to the article and go through the (minor) annoyance of translating it into English. This sort of capability can help the human curator to focus their own attention onto the posts where it will be most useful.

A twist on content discovery

There are two possible models: "Vote for the content that attracts the votes" or "Bring the votes to the content". The second model is too time consuming for manual curators, but bots can do it easily, and if they do it well they can save time and effort for the manual curator.

Until now, "curation bots" that try to use the Steem reward system have typically tried to guess what content the bigger voters will support. But it doesn't have to be that way. If a "curation bot" like Thoth creates articles about the posts that it finds, then it can influence the things that people vote for. By finding good content, it will receive more rewards - both as a virtual-author and as a curator.

We have seen some precedent for this with @realrobinhood and with @trufflepig, but now it needs to be coupled with the distribution of perpetual rewards.

Conclusion

Bottom Line: The Steem blockchain is loaded with valuable content that is effectively invisible. We can let it remain invisible, or we can find tools to leverage it that bring readers and creators to the blockchain and increase the attention economy's contribution to the Steem ecosystem.

To that end, this post highlights my recent efforts to address two fundamental challenges that exist in the Steem ecosystem: the low quality of top-ranked (Trending) posts; and the seven day payout window that discourages creators from producing articles of lasting value.

I believe that a key to addressing both of these problems is the "perpetual reward" concept that was reintroduced by @etainclub in eversteem and extended in Thoth.

Combining perpetual rewards with automated content discovery and LLM summaries produces, I believe, a powerful new tool to compensate authors for valuable content that would otherwise remain long-buried.

Finally, this post reiterates my long-standing argument that - like Google PageRank - Steem curation needs to incorporate efforts by humans alongside efforts by automatons.

Thank you for your time and attention.

As a general rule, I up-vote comments that demonstrate "proof of reading".

Steve Palmer is an IT professional with three decades of professional experience in data communications and information systems. He holds a bachelor's degree in mathematics, a master's degree in computer science, and a master's degree in information systems and technology management. He has been awarded 3 US patents.

Pixabay license, source

Reminder

Visit the /promoted page and #burnsteem25 to support the inflation-fighters who are helping to enable decentralized regulation of Steem token supply growth.

Personally I only use Hive for Actifit posting, maybe there's a way to differentiate between transactional users and those who are deeply invested in the war. (And saying the word "invested"... maybe just checking someone's Hive Power can be a rough estimate for how strongly they care about Hive).

It strikes me as wasteful that curatorial efforts, because they tend to independently consider each post, can end up examining and re-examining the same posts for abusive content. It's like mining for precious metals but dumping the chipped-through rock back down the shaft to be dealt with by the miner on the next shift. I wonder if there's a way to more easily acquire knowledge of what known-bad content is out there.

I don't know exactly how this can play into the social dynamics, but one thing that perpetual-rewards-via-beneficiaries does is bypass the curation rewards on the original post.

That's probably worth looking at. I suppose it could also look for STEEM to HIVE transfers in the wallet history. I've also been thinking about using whitelisting to address this. I even had to put a specific exclude filter on an account that has been inactive on Hive for more than 4 years because the account owner was so aggressively hostile to Steem at the time of the split. There just doesn't seem to be a 100% way to handle it. In the long run, I think whitelisting coupled with decentralized operation would probably get the closest, but multiple layers of checks are also good.

IIRC, for a while before 2020, Cheetah would lay down a 1% vote on content that it thought was plagiarized, then other curators could disregard the post if they saw the cheetah vote. I never thought Cheetah's plagiarism detection methods were great, but the signaling method was good. That could be useful for both plagiarism and AI detection.

We also have the current method where community mods/admins post their findings in a comment. That feels spammy to me, though, and it makes automation more challenging since natural language processing would be needed.
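By contrast, a vote-based signal like Cheetah's is easy for a bot to check without any language processing. Here's a minimal sketch, with assumptions labeled: the function name `signal_votes` is hypothetical, the vote records are assumed to be dictionaries with `voter` and `percent` fields, and `percent` is assumed to be in basis points (so 100 means a 1% vote).

```python
def signal_votes(active_votes: list[dict], signal_accounts: set[str],
                 max_percent: int = 100) -> list[str]:
    """Return the signal accounts that cast a small positive vote on a post.

    Assumes each vote looks like {"voter": str, "percent": int}, with
    percent in basis points (100 = 1%).
    """
    return sorted(
        vote["voter"]
        for vote in active_votes
        if vote["voter"] in signal_accounts and 0 < vote["percent"] <= max_percent
    )


# Made-up vote data for one post.
votes = [
    {"voter": "alice", "percent": 10000},
    {"voter": "cheetah", "percent": 100},
]

flagged_by = signal_votes(votes, {"cheetah"})
print(flagged_by)
```

A curation bot could skip any post where this list is non-empty, and each operator could choose which signal accounts to trust.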

This is a good point that I hadn't thought of. I'm also not sure how that would influence the incentives, but it bears consideration.

I've long wondered why the upvote services don't create a one-off post themselves, generate 10 comments there every day and upvote them by 100%. The profits could be paid out to the investors without them having to create a shit post. That would be a simple solution to problem 1. Presumably the old track will be retained, because extra rewards can be generated through any additional votes on the shit post.

I think this is also because it is intended that new posts are written. Similar to the VP, the normal user who does not use a voting service is motivated (one could also say forced) to visit steemit every day. The number of new posts and the number of visitors are important indicators of the value of a domain, so I could imagine that this is the reason why the mechanisms were set up in this way.

It seems to have been forgotten that in the days of steempeak it was possible to set beneficiaries for first-level comments. So I assume that this option still exists today and is just not offered by the Steemit frontend. Or - I'm not sure about this - that comment beneficiaries are only paid out in SBD.

If the comment beneficiaries work, then that would also be a good way to vote on old posts. With the @thoth.test posts, the votes are distributed among all the authors mentioned, with the comment beneficiaries you could decide for yourself which of the old posts you want to vote for.

Finally, why do you send 70% to @null on @thoth.test posts? Is it because it's just a test?

Me, too. It would improve the spam problem, and it would offer an avenue for passive rewards - making their product more attractive to potential clients. And I have also wondered why their clients don't demand it.

I agree, here, too. At one time, there were two payout windows - one after 1 day and one after 30 days. When they switched to 7 days, they said that there was almost no significant voting after 1 day. IMO, this is because they're buried from people's feeds after that. If we want posts to have longer lifetimes, I think that some sort of recommender is needed (In a way, Thoth is a first pass at creating a decentralized recommender).

It's definitely still possible. This is how eversteem rewards posts after payout. I'd love to see @the-gorilla give us a beneficiary option in replies through condenser. Something new might be to let the commenter set a default beneficiary amount that gets assigned dynamically in each reply to whatever account created the post that the commenter is replying to. Maybe that would create a more collaborative and engaged environment(?).

I have thought about making use of comment beneficiaries so that Thoth can have more beneficiary slots (for delegators), and so that voters can direct rewards to a single historical post/author, instead of splitting them among all authors in the top-level post. It's a tradeoff between not wanting to create spam comments vs. customizing the results and including more delegators. Not sure if I'll set that up or not. The ideal thing might be to create the capability, and then let each Thoth operator experiment to see whether it makes sense to turn the capability on or not.

3 reasons:
